Configure GCP Logs

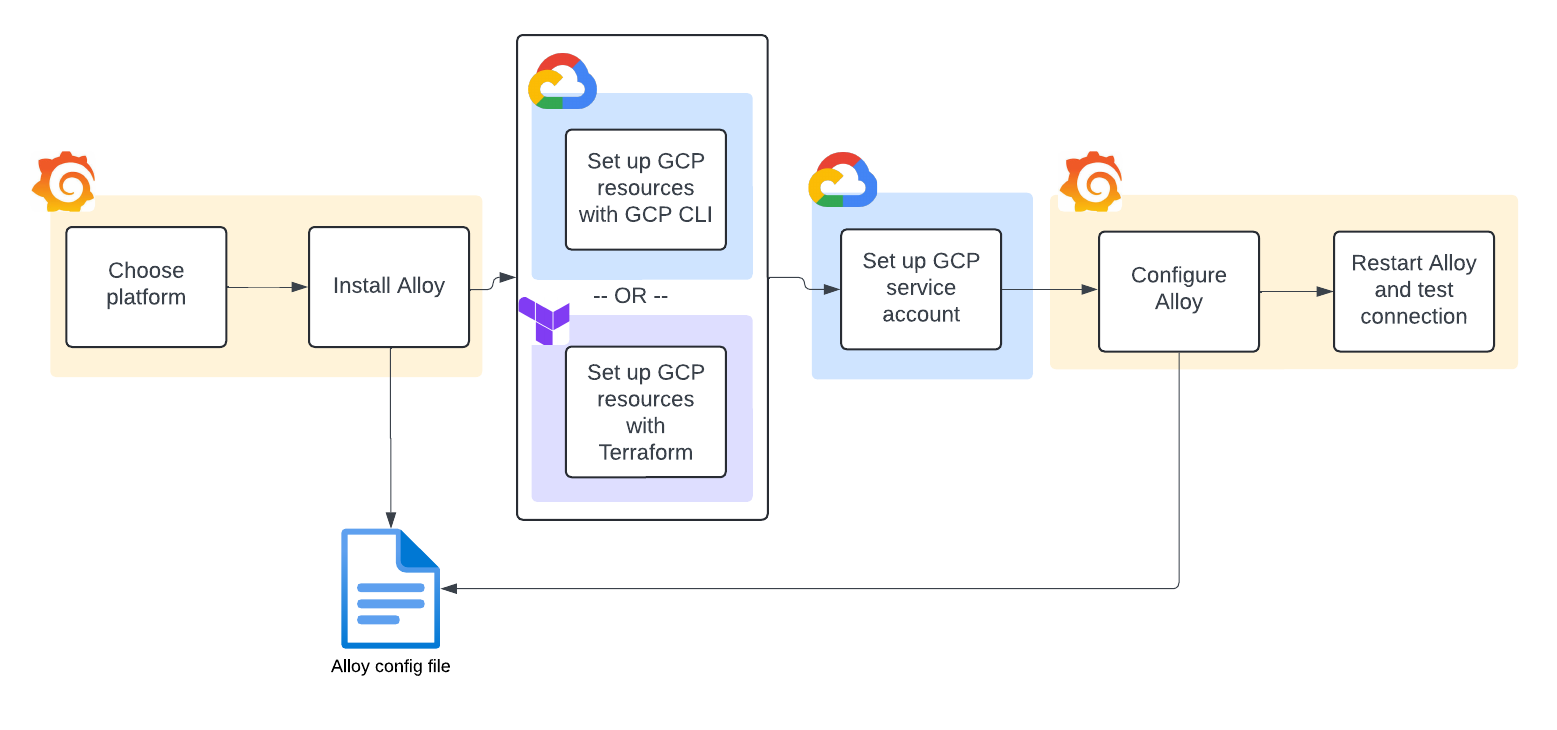

Complete the following steps to configure GCP Logs as shown in the diagram above.

Select your platform

Select the platform from the drop-down menu.

Install Grafana Alloy

Grafana Alloy reads the logs from the Pub/Sub subscription.

- If you have not already installed Alloy on the host that will collect GCP logs, click Run Grafana Alloy.

- At the Alloy configuration screen, either create a token or use an existing token:

  - To create a token, enter a token name, then click Create token. The token displays on the screen and is added to the command for running Alloy.

  - To use an existing token, paste it into the token field.

- Copy the command and paste it into the terminal.

- Click Proceed to install integration.

Set up GCP logs

Click Setup instructions.

At the Set up GCP resources screen, create the resources required for GCP log collection. A customizable logging sink routes logs from Cloud Logging to a Pub/Sub topic. Grafana Alloy then pulls these logs from a Pub/Sub subscription attached to that topic.

Select a configuration method: either the gcloud CLI or Terraform.

Configure with gcloud CLI

Ensure you have these prerequisites before setup:

- The gcloud CLI installed

- The `roles/pubsub.editor` and `roles/logging.configWriter` IAM roles

Complete these steps in the gcloud CLI, then click Close to continue to the next step.

Configure with Terraform

If you need help working with Terraform and GCP, refer to this tutorial. Complete these steps with Terraform, then click Close to return to the next set of steps.

Copy the following into a Terraform (.tf) file.

```hcl
// Provider module
// Replace $GCP_PROJECT_ID with your GCP project ID.
provider "google" {
  project = "$GCP_PROJECT_ID"
}

// Topic
resource "google_pubsub_topic" "main" {
  name = "cloud-logs"
}

// Log sink
variable "inclusion_filter" {
  type        = string
  description = "Optional GCP Logs query which can filter logs being routed to the Pub/Sub topic and Grafana Alloy"
}

resource "google_logging_project_sink" "main" {
  name                   = "cloud-logs"
  destination            = "pubsub.googleapis.com/${google_pubsub_topic.main.id}"
  filter                 = var.inclusion_filter
  unique_writer_identity = true
}

// Allow the sink's writer identity to publish to the topic
resource "google_pubsub_topic_iam_binding" "log-writer" {
  topic = google_pubsub_topic.main.name
  role  = "roles/pubsub.publisher"
  members = [
    google_logging_project_sink.main.writer_identity,
  ]
}

// Subscription
resource "google_pubsub_subscription" "main" {
  name  = "cloud-logs"
  topic = google_pubsub_topic.main.name
}
```

Create the new resources by running the following command after replacing the required variable `<GCP Logs query of what logs to include>` with a query that selects the logs to route. If you have not yet initialized the working directory, run `terraform init` first.

```shell
terraform apply \
  -var="inclusion_filter=<GCP Logs query of what logs to include>"
```

Set up GCP service account

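In the gcloud CLI, create a service account and grant it the Pub/Sub Subscriber role (`roles/pubsub.subscriber`).

The service account and its role enable Grafana Alloy to read log entries from the Pub/Sub subscription. Alloy authenticates to Google Cloud through Application Default Credentials, so make the service account's credentials available on the Alloy host, for example by setting the `GOOGLE_APPLICATION_CREDENTIALS` environment variable to the path of a key file for this account.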

Configure Alloy to consume GCP Pub/Sub

Configure Grafana Alloy to scrape logs from GCP Pub/Sub.

Navigate to the configuration file for your Alloy instance.

Copy the following and append it to your Alloy configuration file.

```alloy
discovery.relabel "logs_integrations_integrations_gcp" {
  targets = []

  rule {
    source_labels = ["__gcp_logname"]
    target_label  = "logname"
  }

  rule {
    source_labels = ["__gcp_resource_type"]
    target_label  = "resource_type"
  }
}

loki.source.gcplog "logs_integrations_integrations_gcp" {
  pull {
    project_id   = "<gcp_project_id>"
    subscription = "<gcp_pubsub_subscription_name>"
    labels = {
      job = "integrations/gcp",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.logs_integrations_integrations_gcp.rules
}
```

Find the following placeholders and replace them with the values you created when you set up GCP Logs:

- `<gcp_project_id>`: replace with the GCP project ID.

- `<gcp_pubsub_subscription_name>`: replace with the subscription name (for example, `cloud-logs` if you used the Terraform configuration).

- `discovery.relabel` defines the relabeling rules applied before logs are sent to Loki. Here it copies the internal `__gcp_logname` and `__gcp_resource_type` labels to `logname` and `resource_type`.

- `loki.source.gcplog` retrieves logs from cloud resources such as GCS buckets, load balancers, or Kubernetes clusters running on GCP by reading them from Pub/Sub subscriptions.

To view additional configuration examples, click Advanced configurations.
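The configuration above forwards entries to `loki.write.grafana_cloud_loki.receiver`, but this section does not define that component. If your Alloy configuration does not already contain it, the following is a minimal sketch. The environment variable names are hypothetical placeholders; the URL, username, and token come from the Loki details of your Grafana Cloud stack.

```alloy
// Sketch of the loki.write component that the gcplog configuration forwards to.
// GRAFANA_CLOUD_LOKI_URL, GRAFANA_CLOUD_LOKI_USER, and GRAFANA_CLOUD_API_TOKEN are
// hypothetical variable names: set them on the Alloy host or replace the
// sys.env(...) calls with literal values.
loki.write "grafana_cloud_loki" {
  endpoint {
    // Full Loki push URL for your stack, ending in /loki/api/v1/push.
    url = sys.env("GRAFANA_CLOUD_LOKI_URL")

    basic_auth {
      // Loki user ID for your stack.
      username = sys.env("GRAFANA_CLOUD_LOKI_USER")
      // Access policy token with permission to write logs.
      password = sys.env("GRAFANA_CLOUD_API_TOKEN")
    }
  }
}
```

Component labels must be unique, so add this block only if no `loki.write "grafana_cloud_loki"` component exists in your configuration yet.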

Examples of advanced configuration

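You can further modify the `loki.source.gcplog` component for more advanced use cases. The following examples replace the `loki.source.gcplog` component in the configuration above. Each component of a given type must have a unique label within the configuration.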
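As one example of such a modification, the following sketch keeps the original timestamp of each GCP log entry (`use_incoming_timestamp`) and copies the internal `__gcp_severity` label to a `level` label. The component label `gcp_with_severity` and the `level` label name are hypothetical choices, and the sketch assumes a `loki.write.grafana_cloud_loki` component exists. Use it in place of the `loki.source.gcplog` component shown earlier, not alongside it; two components pulling from the same subscription split its messages between them.

```alloy
// Hypothetical variant: preserve GCP timestamps and expose severity as a label.
discovery.relabel "gcp_with_severity" {
  targets = []

  rule {
    source_labels = ["__gcp_logname"]
    target_label  = "logname"
  }

  rule {
    source_labels = ["__gcp_resource_type"]
    target_label  = "resource_type"
  }

  // Assumes loki.source.gcplog populates the __gcp_severity internal label.
  rule {
    source_labels = ["__gcp_severity"]
    target_label  = "level"
  }
}

loki.source.gcplog "gcp_with_severity" {
  pull {
    project_id   = "<gcp_project_id>"
    subscription = "<gcp_pubsub_subscription_name>"
    // Use the timestamp from the GCP log entry instead of the time Alloy received it.
    use_incoming_timestamp = true
    labels = {
      job = "integrations/gcp",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.gcp_with_severity.rules
}
```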

Multiple consumers, single subscription

Two components pull from the same subscription. Pub/Sub distributes the subscription's messages between them, so each log entry is generally delivered to only one of the two components.

```alloy
loki.source.gcplog "logs_integrations_integrations_gcp" {
  pull {
    project_id   = "project-1"
    subscription = "subscription-1"
    labels = {
      job = "integrations/gcp",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.logs_integrations_integrations_gcp.rules
}

// The second component needs its own unique label.
loki.source.gcplog "logs_integrations_integrations_gcp2" {
  pull {
    project_id   = "project-1"
    subscription = "subscription-1"
    labels = {
      job = "integrations/gcp2",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.logs_integrations_integrations_gcp.rules
}
```

Multiple subscriptions

Each component pulls from a different subscription in the same project.

```alloy
loki.source.gcplog "logs_integrations_integrations_gcp" {
  pull {
    project_id   = "project-1"
    subscription = "subscription-2"
    labels = {
      job = "integrations/gcp",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.logs_integrations_integrations_gcp.rules
}

// The second component needs its own unique label.
loki.source.gcplog "logs_integrations_integrations_gcp2" {
  pull {
    project_id   = "project-1"
    subscription = "subscription-1"
    labels = {
      job = "integrations/gcp2",
    }
  }
  forward_to    = [loki.write.grafana_cloud_loki.receiver]
  relabel_rules = discovery.relabel.logs_integrations_integrations_gcp.rules
}
```

Restart Grafana Alloy

Run the command appropriate for your platform to restart Grafana Alloy so your changes can take effect.

Test connection

Click Test connection to verify that Grafana Alloy is collecting data and sending it to Grafana Cloud.

View your logs

Click the Logs tab to view your logs.