Out-of-memory issues

To handle and prevent OOMKilled (Out-Of-Memory Killed) errors, it’s important to understand what causes them. The Linux kernel’s OOM Killer is responsible for terminating processes on Nodes that are critically low on memory.

When Kubernetes runs on a Linux-based system, a container that exceeds its memory limit triggers an out-of-memory event, and the OOM Killer kills the container. In such a case, the kubelet attempts to restart the container if the Pod is still scheduled on the same Node.

Memory requests and limits

To help containers run more predictably, you set memory requests and limits. A memory request is how much memory should be reserved for a container. A memory limit is the maximum amount of memory a container can use. The Kubernetes scheduler assigns Pods to Nodes based on constraints and available resources, including memory resources.
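The following Python sketch illustrates the different roles of the two values. It is a simplified model, not how the scheduler is implemented: the container name, the sizes, and the helpers `parse_memory` and `fits_on_node` are hypothetical, the parser handles only whole numbers with a subset of the suffixes Kubernetes accepts, and the real scheduler weighs many more constraints than memory.

```python
# Multipliers for a subset of the suffixes Kubernetes accepts in memory quantities.
SUFFIXES = {
    "Ki": 1024, "Mi": 1024**2, "Gi": 1024**3,
    "k": 1000, "M": 1000**2, "G": 1000**3,
}


def parse_memory(quantity: str) -> int:
    """Convert a quantity such as '256Mi' to bytes (simplified parser)."""
    for suffix, factor in SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: plain bytes


# Hypothetical container definition, mirroring the `resources` field of a Pod spec.
container = {
    "name": "example-app",
    "resources": {
        "requests": {"memory": "256Mi"},  # reserved for the container
        "limits": {"memory": "256Mi"},    # maximum the container can use
    },
}


def fits_on_node(container: dict, allocatable: str, already_requested: str) -> bool:
    """Simplified fit check: only the memory request counts, not the limit."""
    request = parse_memory(container["resources"]["requests"]["memory"])
    return parse_memory(already_requested) + request <= parse_memory(allocatable)


print(fits_on_node(container, allocatable="4Gi", already_requested="3840Mi"))  # True
print(fits_on_node(container, allocatable="4Gi", already_requested="3900Mi"))  # False
```

In this model, the request decides whether the Pod can be placed on a Node, while the limit only matters once the container is running.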

Analyze KubePodCrashLooping

Kubernetes Monitoring includes KubePodCrashLooping, a prebuilt alert which detects Pods that are in a state of CrashLoopBackOff. An OOM condition is one possible cause of this alert.

When you receive such an alert:

- Go to the list of alerts on either the Kubernetes Overview or Alerts page, and filter for the KubePodCrashLooping alert.

- Click the name of the container to go to the container detail page.

- Change the time range selector to at least the last twelve hours.

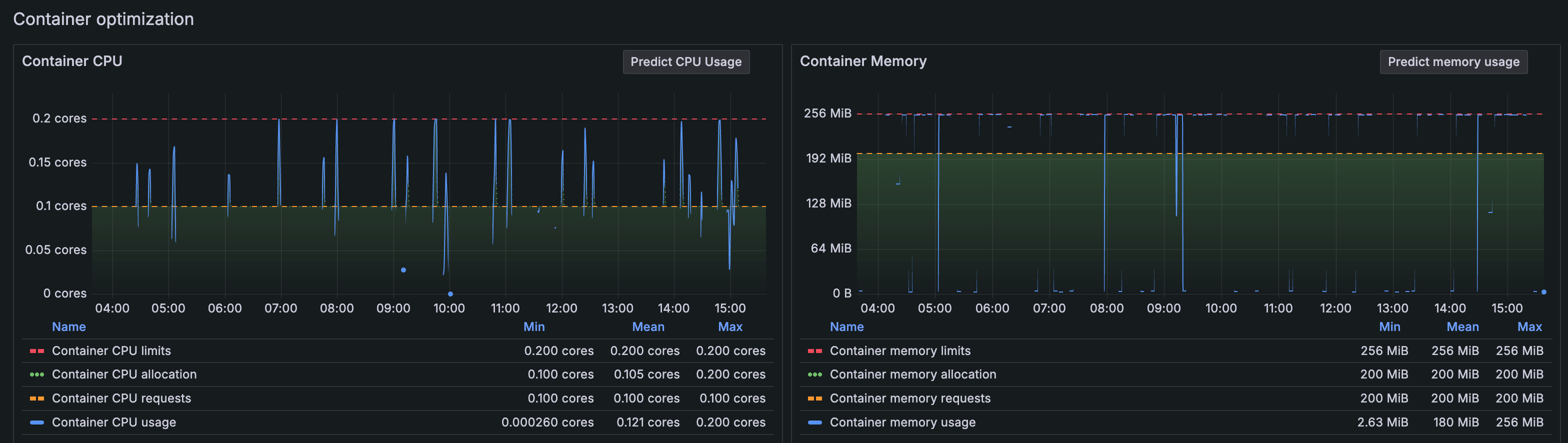

- Navigate to the Container Optimization section on the detail page. In this example, the Container Memory graph shows memory usage hitting the memory limit.

![Container CPU and Memory graphs showing issues with CPU and memory Container CPU and Memory graphs showing issues with CPU and memory]()

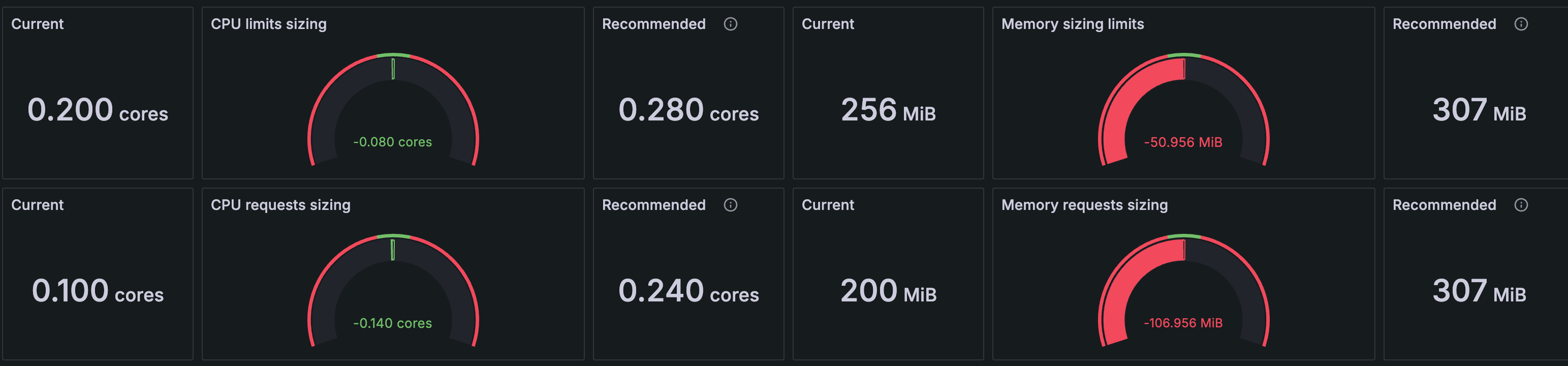

Container CPU and Memory graphs showing issues with CPU and memory - Check the current and recommended memory limits for the container. Notice in this example:

  - The memory requests and limits are not the same amount even though they should be.

  - Kubernetes Monitoring recommends an increase for both memory requests and limits.

![Recommendations for requests and limits Recommendations for requests and limits]()

Recommendations for requests and limits

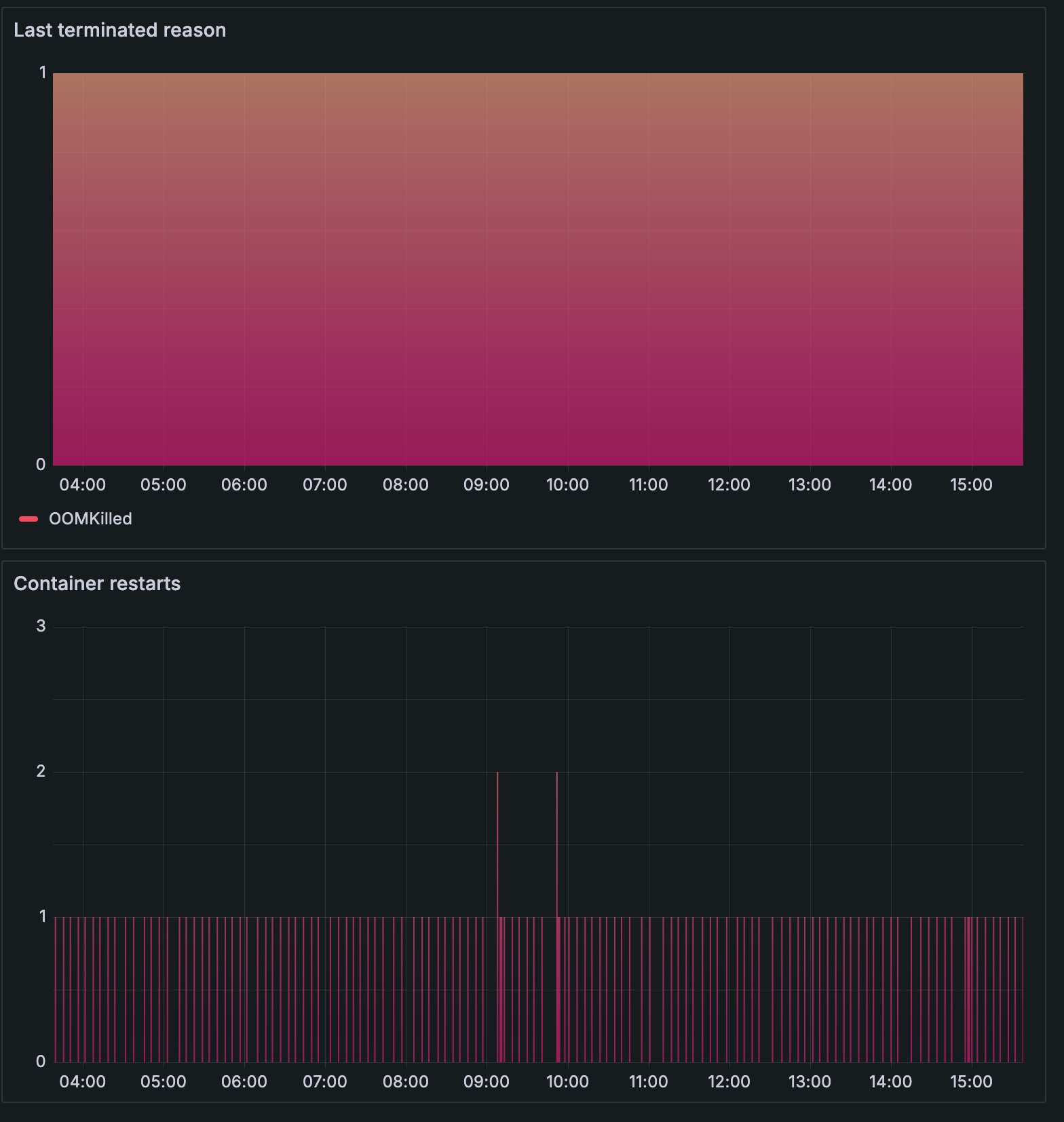

- Check the Container restarts graph. In this case, it indicates multiple restarts with OOMKilled as the last terminated reason.

Container restarts graph and last terminated reason

- Attempt to resolve the issue.

  - If the last terminated reason is OOMKilled, follow the recommendations given in the Container Memory section to increase memory requests and limits.

  - If the last terminated reason is Error, review the Pod logs to check for application errors such as a Go panic. In this case, increasing memory requests and limits doesn’t help.
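The last terminated reason can also be read outside the UI. The Python sketch below assumes you have the Pod object as JSON, for example from `kubectl get pod <pod-name> -o json`, and reads the `lastState.terminated.reason` field of each entry in `status.containerStatuses`. The helper names, the suggested actions, and the trimmed sample Pod are hypothetical and only mirror the two cases described above.

```python
import json


def last_terminated_reasons(pod: dict) -> dict:
    """Map each container name to the reason of its last termination, or None."""
    reasons = {}
    for status in pod.get("status", {}).get("containerStatuses", []):
        terminated = status.get("lastState", {}).get("terminated")
        reasons[status["name"]] = terminated.get("reason") if terminated else None
    return reasons


def suggest_action(reason):
    """Mirror the two cases described in the steps above."""
    if reason == "OOMKilled":
        return "increase memory requests and limits"
    if reason == "Error":
        return "review the Pod logs for application errors"
    return "no action suggested"


# Hypothetical, heavily trimmed Pod object for illustration only.
sample = json.loads("""
{"status": {"containerStatuses": [
  {"name": "app", "restartCount": 7,
   "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}}},
  {"name": "sidecar", "restartCount": 0, "lastState": {}}
]}}
""")

for name, reason in last_terminated_reasons(sample).items():
    print(f"{name}: {reason} -> {suggest_action(reason)}")
```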

Best practices for prevention

Follow these practices when setting memory requests and limits.

Equal amounts for requests and limits

Set memory requests and limits to the same amount. When the limit is higher than the request, a Pod can use more memory than was reserved for it. If memory then runs short, the Pod can be evicted so that the memory it is using beyond its requested amount can be reclaimed.
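To make the reasoning concrete, the Python sketch below lists Pods whose memory usage exceeds their request, since those are the ones holding memory that was never reserved for them. It is a deliberately simplified model: the Pod names and numbers are invented, `eviction_candidates` is a hypothetical helper, and actual eviction decisions also take other factors, such as Pod priority, into account.

```python
def eviction_candidates(pods):
    """Names of Pods using more memory than they requested, largest overage first."""
    over = [
        (p["usage_mib"] - p["request_mib"], p["name"])
        for p in pods
        if p["usage_mib"] > p["request_mib"]
    ]
    return [name for _, name in sorted(over, reverse=True)]


# Invented example data, all values in MiB.
pods = [
    {"name": "equal", "request_mib": 512, "limit_mib": 512, "usage_mib": 500},
    {"name": "burst-a", "request_mib": 256, "limit_mib": 1024, "usage_mib": 900},
    {"name": "burst-b", "request_mib": 256, "limit_mib": 512, "usage_mib": 300},
]

print(eviction_candidates(pods))  # ['burst-a', 'burst-b']
```

The Pod with equal request and limit never appears in the list, because its usage cannot grow past the amount that was reserved for it.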

Follow recommendations

Use Kubernetes Monitoring to monitor memory usage and understand usage patterns, and apply the recommendations it gives for container memory requests and limits.

Check for memory leaks

When a program allocates memory but does not release it after use, the container’s memory usage grows over time, and the container can eventually be OOMKilled.
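One way to spot this pattern is to fit a straight line to memory usage over time and look at the slope. The Python sketch below does this with an ordinary least-squares fit. The sample values are invented, `growth_per_hour` and `hours_until_limit` are hypothetical helpers, and a steady upward slope is only a hint of a leak, not proof: caches and warm-up phases can look similar.

```python
def growth_per_hour(samples):
    """Least-squares slope in MiB per hour for (hours_elapsed, usage_mib) samples."""
    n = len(samples)
    mean_t = sum(t for t, _ in samples) / n
    mean_m = sum(m for _, m in samples) / n
    cov = sum((t - mean_t) * (m - mean_m) for t, m in samples)
    var = sum((t - mean_t) ** 2 for t, _ in samples)
    return cov / var


def hours_until_limit(samples, limit_mib):
    """Rough estimate of hours until usage reaches the limit, or None if not growing."""
    slope = growth_per_hour(samples)
    if slope <= 0:
        return None  # no upward trend in the samples
    return (limit_mib - samples[-1][1]) / slope


# Invented samples: usage grows by 10 MiB every hour.
samples = [(0, 100), (1, 110), (2, 120), (3, 130)]

print(growth_per_hour(samples))         # 10.0
print(hours_until_limit(samples, 200))  # 7.0
```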