What's new from Grafana Labs

Grafana Labs products, projects, and features can go through multiple release stages before becoming generally available. These stages in the release life cycle can present varying degrees of stability and support. For more information, refer to release life cycle for Grafana Labs.

We’re excited to announce the public preview release of secrets management for Synthetic Monitoring, available to all Grafana Cloud users.

Secrets management gives you a centralized place to securely store sensitive data like API keys, passwords, and tokens.

We’re excited to announce the integration of the Model Context Protocol (MCP) into Grafana Cloud Traces and into open-source Tempo (merged, available in Tempo 2.9). MCP, a standard developed by Anthropic, allows data sources to expose data and functionality to Large Language Models (LLMs) via an agent.

This integration opens up new possibilities for interacting with tracing data. You can now connect LLM-powered tools like Claude Code or Cursor to Grafana Cloud Traces, enabling you to:

- Explore services and understand interactions: LLMs can be used to teach new developers about service interactions within an application by analyzing tracing data. For instance, a new developer could ask the AI to explain how their services interact. The AI would use live tracing data from Cloud Traces to answer these questions.

- Diagnose and investigate errors: You can leverage LLMs to identify and diagnose errors in your systems. The AI can answer questions such as “Are there errors in my services?” and “What endpoints are being impacted?”

- Optimize performance and reduce latency: LLMs can assist in identifying the causes of latency and guiding optimization efforts. By analyzing trace data, an LLM can summarize operations in a request path, pinpoint bottlenecks, and even suggest code changes to improve performance, such as parallelizing operations.
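To make the agent-to-data-source exchange a little more concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client sends to discover and invoke tools. The `tools/list` and `tools/call` method names come from the MCP specification. The tool name `search_traces` and its `query` argument are hypothetical placeholders, not the names exposed by Tempo or Cloud Traces. In practice you would discover the real tool names from the `tools/list` response.

```python
import json
from itertools import count

# Monotonic request IDs so responses can be matched to requests.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request object, the envelope MCP messages use."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


def list_tools_request():
    """Ask the MCP server which tools it exposes."""
    return jsonrpc_request("tools/list")


def call_tool_request(name, arguments):
    """Invoke one tool by name with a dictionary of arguments."""
    return jsonrpc_request("tools/call", {"name": name, "arguments": arguments})


if __name__ == "__main__":
    print(json.dumps(list_tools_request()))
    # "search_traces" and "query" are hypothetical; use names from tools/list.
    print(json.dumps(call_tool_request("search_traces", {"query": "{ status = error }"}), indent=2))
```

Tools such as Claude Code or Cursor build and send these messages for you, so the sketch is only meant to show what travels over the wire.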

Grafana IRM outgoing webhooks now support incident events, providing a unified experience for automating alert group and incident workflows. You can configure webhooks from the Outgoing Webhooks tab and trigger requests based on key incident lifecycle events, such as when an incident is declared, updated, or resolved.

With this update, you can:

- Customize webhook requests with support for any HTTP method

- Use templated URLs, headers, and request bodies

- Dynamically reference incident data and prior webhook responses

- View webhook execution details directly from the incident or alert group timeline

- Manage incident-related webhook configurations using Terraform
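The idea of templating a URL, headers, and request body from incident data can be sketched as follows. This is an illustration of the concept only: it uses Python's standard `string.Template` syntax rather than the template language Grafana IRM uses, and the incident field names (`incident_id`, `title`, `status`), the header name, and the `example.com` URL are all hypothetical.

```python
import json
from string import Template


def render_webhook(url_tpl, header_tpls, body_tpls, incident):
    """Fill URL, header, and body templates with values from an incident dict."""
    url = Template(url_tpl).substitute(incident)
    headers = {name: Template(value).substitute(incident)
               for name, value in header_tpls.items()}
    # Render each body field separately, then serialize, so values containing
    # quotes are escaped by json.dumps instead of breaking the JSON document.
    body = json.dumps({key: Template(value).substitute(incident)
                       for key, value in body_tpls.items()})
    return {"url": url, "headers": headers, "body": body}


if __name__ == "__main__":
    # Hypothetical incident payload for illustration.
    incident = {"incident_id": "42", "title": "Checkout latency", "status": "declared"}
    request = render_webhook(
        "https://example.com/hooks/$incident_id",
        {"X-Incident-Status": "$status"},
        {"text": "Incident '$title' was $status"},
        incident,
    )
    print(request)
```

Rendering body fields before serialization is a deliberate choice here: substituting directly into a raw JSON string would produce invalid JSON whenever a title contains a quote character.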

We’re excited to share a new integration between Tailscale and Grafana Cloud that lets you query data sources on your Tailscale network directly from your Grafana Cloud stack.

Tailscale allows you to create a secure network (called a tailnet) by directly connecting users, devices, and resources. This new integration adds an ephemeral machine to your tailnet on your behalf. You can add tags to these machines, which allow you to configure Tailscale ACLs and Grants, giving you full control of what your Grafana Cloud stack can access.

The canvas visualization editor now offers a completely re-engineered pan and zoom experience.

You can now place elements anywhere—even beyond panel edges—without disrupting connections or layouts. Background images stay consistent, connection anchors rotate with elements, and an optional Zoom to content toggle automatically fits your canvas content to any view. Constraints remain intact thanks to a transparent root container, ensuring layout behavior stays reliable across pan and zoom operations.

Constraint system support

Grafana SLO now supports exporting existing SLOs in HCL format and generating HCL during new SLO creation so users can use Terraform to manage their SLOs.

- To export an existing SLO in HCL format, locate the SLO on the Manage SLO screen, click the More drop-down, and select Export.

- To create a new SLO for export, navigate to the Manage SLO screen, click the More drop-down at the top right of the page, and select New SLO for export.

Configuring alerting on Faro data from your frontend apps has just gotten a whole lot easier. Introducing out-of-the-box alerting for Grafana Cloud Frontend Observability. We have taken the first step in helping users configure Grafana-managed alerts without needing any previous experience with alerting in Grafana Cloud.

Using our simple workflows, you can enable and configure alerts based on your web app’s errors and web vitals metrics. Find and troubleshoot issues sooner now that alert configuration and rules are handled automatically. Additionally, these alerts can serve as templates for you to expand the alerting coverage of your frontend apps.

You can now add Status updates to incidents in Grafana IRM to help keep your team and stakeholders informed during an incident.

Status updates are structured messages that communicate key information throughout the incident lifecycle. Whether you’re confirming impact, escalating to another team, or resolving the issue, use status updates to help track and share progress in a consistent, high-signal way.

The Grafana Advisor is designed to help Grafana server administrators keep their instances running smoothly, securely and in keeping with best practices.

It performs a series of periodic checks against your Grafana instance to highlight issues requiring the server administrator’s attention.

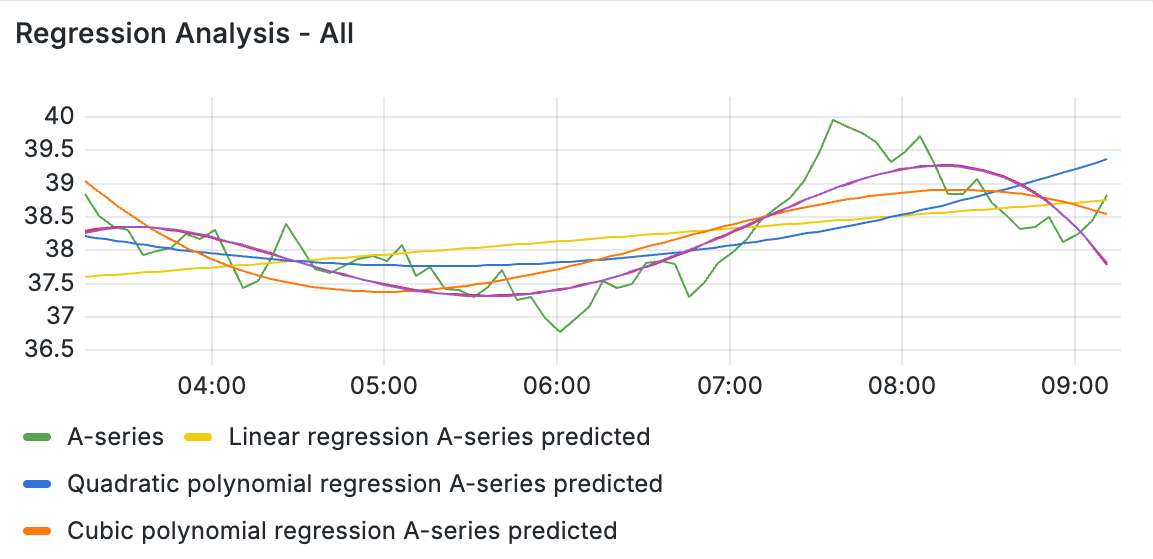

Apply this transformation to any dataset to add a trendline as a new series, fitted to your data using a linear or polynomial regression model. This lets you estimate values at points that are not exactly represented in the original dataset, or plot predicted values in the future. Trendlines are great for spotting patterns in fluctuating or inconsistent time series. Because the trendline is a regular series, you can style and use it just like any other series in your visualization.

See examples of this transformation on Grafana Play.
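For readers who want to see what a fitted trendline involves, here is a minimal sketch of an ordinary least-squares linear fit in plain Python. It is not Grafana's implementation, and the function names `fit_linear` and `trendline` are made up for this example. It only shows the linear case; a polynomial model would fit additional coefficients.

```python
def fit_linear(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two points and equal-length inputs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of squared deviations of x, and co-deviation of x and y.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def trendline(xs, ys, predict_xs=None):
    """Evaluate the fitted line at the original x values or at new ones."""
    slope, intercept = fit_linear(xs, ys)
    targets = xs if predict_xs is None else predict_xs
    return [slope * x + intercept for x in targets]


if __name__ == "__main__":
    timestamps = [0, 1, 2, 3]
    values = [1, 3, 5, 7]
    print(fit_linear(timestamps, values))         # (2.0, 1.0)
    print(trendline(timestamps, values, [4, 5]))  # [9.0, 11.0]
```

Passing x values beyond the original range, as in the last call, is what produces predicted future points. Passing values between existing samples gives the interpolated estimates described above.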

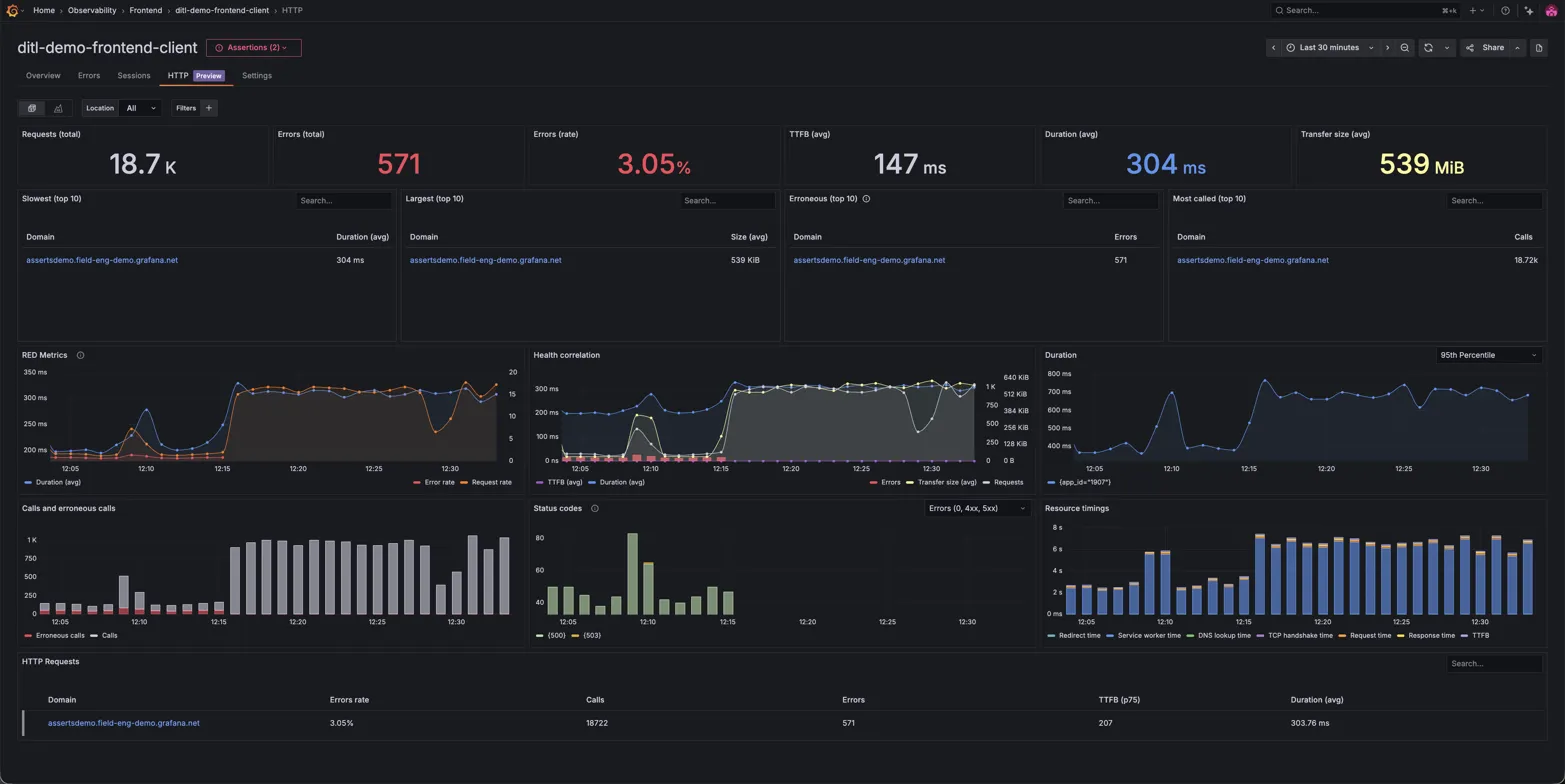

You can now visualize HTTP Performance Insights in Grafana Cloud Frontend Observability. This feature provides a unified view of your slowest endpoints, largest requests, and most error-prone calls, helping you quickly identify and resolve application performance issues.

No more hunting through scattered logs or custom dashboards. These views and breakdowns help you discover insights and gauge impact. You can answer questions such as:

- Which API endpoints or assets are causing slowdowns or errors?

- How widespread is a specific performance issue?

- What are the biggest opportunities for optimization, based on how issues occur over time?

Instantly pivot from Kubernetes Monitoring to the exact EC2 instance in Cloud Provider Observability that’s impacting performance or stability. Whether it’s a failing node, resource exhaustion, or an unreachable instance, this seamless cross-layer visibility removes manual guesswork and eliminates the need for context-switching.

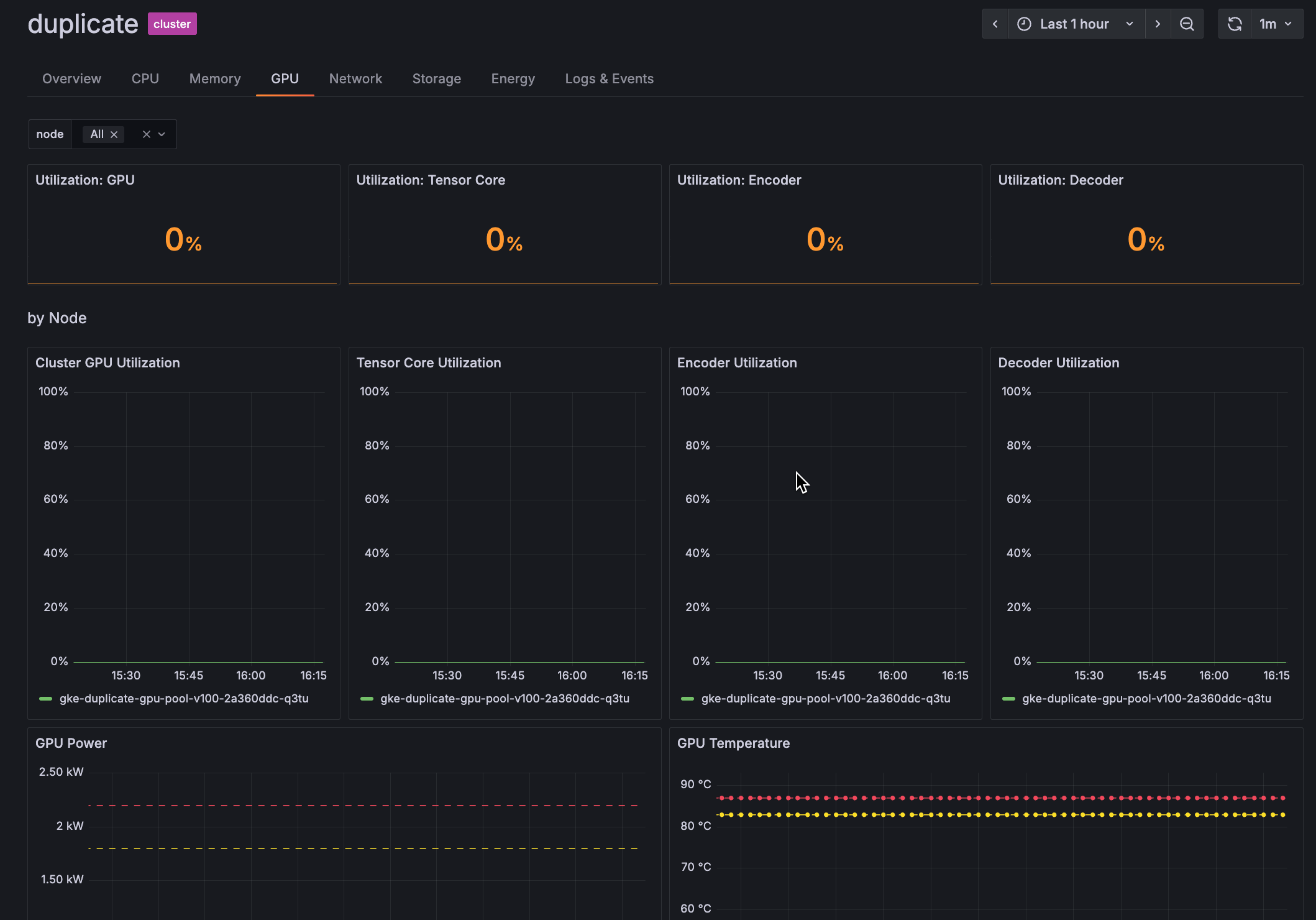

View GPU utilization panels on the GPU tabs of the Cluster and Node detail pages to see whether the NVIDIA GPUs in the Cluster are appropriately utilized, and whether workloads are getting and using the GPU resources made available to them.

Segmentation makes it easy for you to manage Adaptive Logs by team, business unit, or any other logical division.

Using segmentation, you can decentralize log management. Shift responsibility from a central team to smaller units, empowering each to manage their own Adaptive Logs rules and control log intake with confidence.

Grafana Assume Role is now Generally Available (GA) for CloudWatch and Athena data sources! Grafana Assume Role allows you to authenticate with AWS without having to create and maintain long-term AWS users or rotate their access and secret keys. Instead, you can create an IAM role that has permissions to access CloudWatch or Athena and a trust relationship with Grafana’s AWS account. Grafana’s AWS account then makes an STS request to AWS to create temporary credentials to access your AWS data. More information can be found in the AWS authentication docs.
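The trust relationship described above is expressed as an IAM role trust policy. The sketch below builds one as a Python dictionary using the standard IAM policy grammar. The account ID `123456789012` and the external ID value are placeholders, not Grafana's real values, and the external-ID condition is an assumption added here as a common safeguard for cross-account roles. Take the actual account ID and any required condition from the AWS authentication docs.

```python
import json


def build_trust_policy(trusted_account_id, external_id):
    """Return an IAM trust policy letting another AWS account assume this role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The account that is allowed to request temporary credentials.
                "Principal": {"AWS": f"arn:aws:iam::{trusted_account_id}:root"},
                "Action": "sts:AssumeRole",
                # Assumption: an external ID restricts who can use the role.
                "Condition": {"StringEquals": {"sts:ExternalId": external_id}},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder values for illustration only.
    policy = build_trust_policy("123456789012", "example-external-id")
    print(json.dumps(policy, indent=2))
```

The role's permissions policy, which grants read access to CloudWatch or Athena, is attached separately. The trust policy only controls who may assume the role.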

On-call engineers need to be instantly aware when critical incidents occur, even in noisy environments or during deep sleep. The Grafana IRM Mobile App now includes five new high-intensity alarm sounds specifically designed to cut through ambient noise and grab your attention when it matters most.

The new sound collection features aircraft alarms, emergency warnings, fire alarms, loud buzzers, and severe warning alarms, each available in both constant and fade-in variations. These sounds were selected based on customer feedback requesting more effective notification options, so that critical alerts are harder to miss.