
Components

Components are the building blocks of Alloy. Each component performs a single task, such as retrieving secrets or collecting Prometheus metrics.

Components consist of the following:

- Arguments: Settings that configure a component.

- Exports: Named values that a component makes available to other components.

Each component has a name that describes its responsibility.

For example, the local.file component retrieves the contents of files on disk.

You define components in the configuration file by specifying the component’s name with a user-defined label, followed by arguments to configure the component.

    discovery.kubernetes "pods" {
      role = "pod"
    }

    discovery.kubernetes "nodes" {
      role = "node"
    }

You reference components by combining their name with their label.

For example, you can reference a local.file component labeled foo as local.file.foo.

The combination of a component’s name and label must be unique within the configuration file. This naming approach allows you to define multiple instances of a component, as long as each instance has a unique label.
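As a minimal sketch of this naming rule, the following fragment defines two instances of local.file with distinct labels. The labels foo and bar and the file paths are placeholders chosen for illustration, not values taken from a real deployment.

```alloy
// Two instances of the same component, distinguished by their labels.
// The file paths are hypothetical placeholders.
local.file "foo" {
  filename = "/etc/example/foo.txt"
}

local.file "bar" {
  filename = "/etc/example/bar.txt"
}

// Other components refer to these instances as local.file.foo and
// local.file.bar. For example, local.file.foo.content is the file
// content exported by the first instance.
```

Reusing the label foo for a second local.file block would break the uniqueness rule, because both blocks would then resolve to local.file.foo.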

Pipelines

Most arguments for a component in a configuration file are constant values, such as setting a log_level attribute to "debug".

    log_level = "debug"

You use expressions to compute an argument’s value dynamically at runtime.

Expressions can retrieve environment variable values (log_level = sys.env("LOG_LEVEL")) or reference an exported field of another component (log_level = local.file.log_level.content).
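The three ways of setting an argument can be compared side by side. The sketch below is illustrative only: it assumes the top-level logging block, whose attribute is named level rather than the generic log_level used in the text above, and a hypothetical file path whose content is a valid log level.

```alloy
// Read a log level from disk. The path is a hypothetical placeholder,
// and the file is assumed to contain only a valid level such as "debug".
local.file "log_level" {
  filename = "/etc/example/log_level"
}

logging {
  // Constant value:
  // level = "debug"

  // Value read from an environment variable:
  // level = sys.env("LOG_LEVEL")

  // Value taken from another component's exported field:
  level = local.file.log_level.content
}
```

Only one assignment to level can be active at a time, so the two alternatives are left as comments.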

A dependent relationship is created when a component’s argument references an exported field of another component. The component’s arguments depend on the other component’s exports. The input of the component is re-evaluated whenever the referenced component’s exports are updated.

The flow of data through these references forms a pipeline.

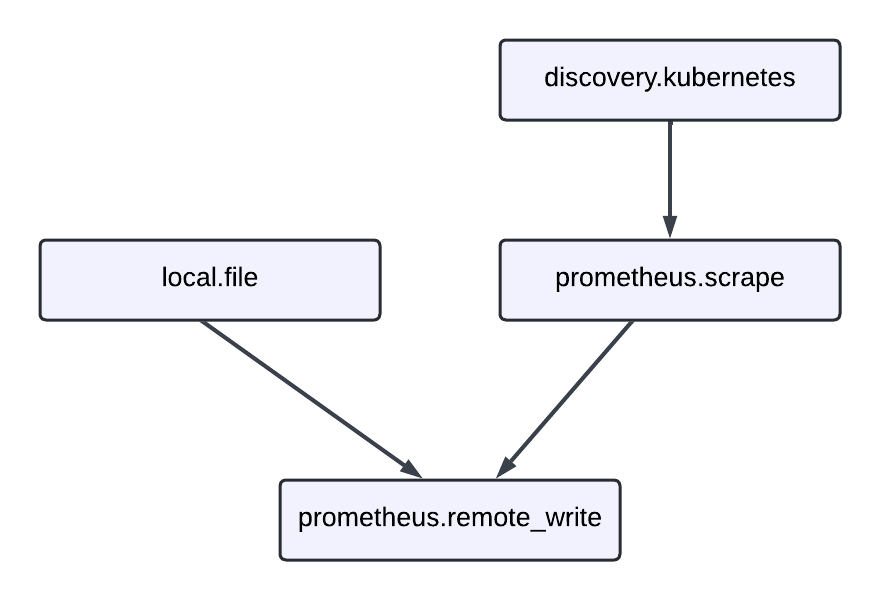

An example pipeline might look like this:

- A local.file component watches a file containing an API key.

- A prometheus.remote_write component receives metrics and forwards them to an external database using the API key from the local.file for authentication.

- A discovery.kubernetes component discovers and exports Kubernetes Pods where metrics can be collected.

- A prometheus.scrape component references the exports of the previous component and sends collected metrics to the prometheus.remote_write component.

The following configuration file represents the pipeline.