How to capture Spring Boot metrics with the OpenTelemetry Java Instrumentation Agent

Note: The current version of OpenTelemetry’s Java instrumentation agent picks up Spring Boot’s Micrometer metrics automatically. It is no longer necessary to manually bridge OpenTelemetry and Micrometer.

In a previous blog post, Adam Quan presented a great introduction to setting up observability for a Spring Boot application. For metrics, Adam used the Prometheus Java Client library and showed how to link metrics and traces using exemplars.

However, the Prometheus Java Client library is not the only way to get metrics out of a Spring Boot app. One alternative is to use the OpenTelemetry Java instrumentation agent for exposing Spring’s metrics directly in OpenTelemetry format.

This blog post shows how to capture Spring Boot metrics with the OpenTelemetry Java instrumentation agent.

Setting up an example application

We will use a simple Hello World REST service as an example application throughout this blog post. The source code comes from the ./complete/ directory of the example code for Spring’s Building a RESTful Web Service guide.

git clone https://github.com/spring-guides/gs-rest-service.git

cd gs-rest-service/complete/

./mvnw clean package

java -jar target/rest-service-complete-0.0.1-SNAPSHOT.jar

The application exposes a REST service on port 8080 where you can greet different names, like http://localhost:8080/greeting?name=Grafana. It does not yet expose any metrics.

Exposing a Prometheus metric endpoint

As the first step, we enable metrics in our example application and expose these metrics directly in Prometheus format. We will not yet use the OpenTelemetry Java instrumentation agent.

We need two additional dependencies in pom.xml:

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
<dependency>
    <groupId>io.micrometer</groupId>
    <artifactId>micrometer-registry-prometheus</artifactId>
    <scope>runtime</scope>
</dependency>

The spring-boot-starter-actuator provides the metrics API and some out-of-the-box metrics. Under the hood, it uses the Micrometer metrics library. The micrometer-registry-prometheus is for exposing Micrometer metrics in Prometheus format.

Next, we need to enable the Prometheus endpoint. Create a file ./src/main/resources/application.properties with the following line:

management.endpoints.web.exposure.include=prometheus

After recompiling and restarting the application, you will see the metrics on http://localhost:8080/actuator/prometheus. The out-of-the-box metrics include some JVM metrics like jvm_gc_pause_seconds, some metrics from the logging framework like logback_events_total, and some metrics from the REST endpoint like http_server_requests.

Finally, we want to have a custom metric to play with. Custom metrics must be registered with a MeterRegistry provided by Spring Boot. So the first step is to inject the MeterRegistry into the GreetingController, for example via constructor injection:

// ...
import io.micrometer.core.instrument.MeterRegistry;

@RestController
public class GreetingController {

    // ...
    private final MeterRegistry registry;

    // Use constructor injection to get the MeterRegistry
    public GreetingController(MeterRegistry registry) {
        this.registry = registry;
    }

    // ...
}

Now, we can add our custom metric. We will create a Counter tracking the greeting calls by name. We add the counter to the existing implementation of the greeting() REST endpoint:

@GetMapping("/greeting")
public Greeting greeting(@RequestParam(value = "name", defaultValue = "World") String name) {
    // Add a counter tracking the greeting calls by name
    registry.counter("greetings.total", "name", name).increment();
    // ...
}

Let’s try it: Recompile and restart the application, and call the greeting endpoint with different names, like http://localhost:8080/greeting?name=Grafana. On http://localhost:8080/actuator/prometheus you will see the metric greetings_total counting the number of calls per name:

# HELP greetings_total

# TYPE greetings_total counter

greetings_total{name="Grafana",} 2.0

greetings_total{name="Prometheus",} 1.0

Note that it is generally a bad idea to use user input as label values, as this can easily lead to a cardinality explosion (i.e., a new time series is created for each distinct name). However, it is convenient in our example because it gives us an easy way to try out different label values.
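In a real service, one common way to keep the number of label values bounded is to map user input onto a small allow-list before using it as a label. The following is a minimal sketch of that idea, not part of the original example: the class GreetingLabels, the method boundedName, and the list of known names are all hypothetical.

```java
import java.util.Set;

// Hypothetical helper, not part of the original example: maps free-form
// user input onto a small, fixed set of label values.
final class GreetingLabels {

    // Assumed allow-list; choose values that make sense for your service.
    private static final Set<String> KNOWN_NAMES = Set.of("Grafana", "Prometheus", "World");

    private GreetingLabels() {
    }

    // Returns the name itself if it is on the allow-list, otherwise "other".
    static String boundedName(String name) {
        return name != null && KNOWN_NAMES.contains(name) ? name : "other";
    }
}
```

With such a helper, the counter call in greeting() would become registry.counter("greetings.total", "name", GreetingLabels.boundedName(name)).increment(), so the counter can never produce more than four distinct label values.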

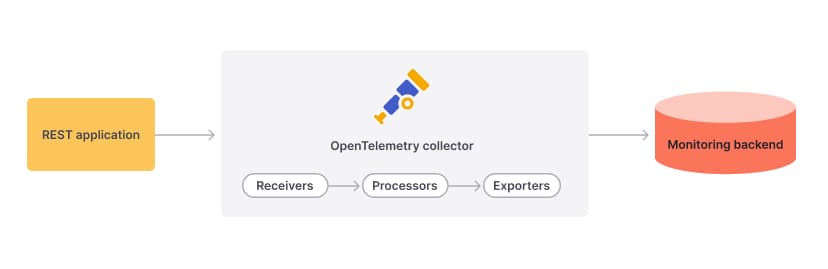

Putting the OpenTelemetry collector in the middle

The OpenTelemetry collector is a component to receive, process, and export telemetry data. It usually sits in the middle, between the applications to be monitored and the monitoring backend.

As the next step, we will configure an OpenTelemetry collector to scrape the metrics from the Prometheus endpoint and expose them in Prometheus format.

So far this will not add any functionality, except that we get the OpenTelemetry collector as a new infrastructure component. The metrics exposed by the collector on port 8889 should be the same as the metrics exposed by the application on port 8080.

Download the latest otelcol_*.tar.gz release from https://github.com/open-telemetry/opentelemetry-collector-releases/releases, and unpack it. It should contain an executable named otelcol. At the time of writing, the latest release was otelcol_0.47.0_linux_amd64.tar.gz.

Create a config file named config.yaml with the following content:

receivers:
  prometheus:
    config:
      scrape_configs:
        - job_name: "example"
          scrape_interval: 5s
          metrics_path: "/actuator/prometheus"
          static_configs:
            - targets: ["localhost:8080"]

processors:
  batch:

exporters:
  prometheus:
    endpoint: "localhost:8889"

service:
  pipelines:
    metrics:
      receivers: [prometheus]
      processors: [batch]
      exporters: [prometheus]

Now run the collector:

./otelcol --config=config.yaml

You can access the metrics on http://localhost:8889/metrics.
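To check that the collector on port 8889 really exposes the same metrics as the application on port 8080, it can help to compare the metric names from both endpoints. Below is a rough sketch of such a comparison. It assumes you have already fetched the two responses as strings (for example with curl), and the class MetricNames and its methods are hypothetical helpers. It only compares names, not labels or values, and the collector may add a few metrics of its own.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper for comparing two Prometheus text-format scrapes.
final class MetricNames {

    private MetricNames() {
    }

    // Collects the metric names from Prometheus text exposition output.
    // Comment lines (# HELP, # TYPE) and blank lines are skipped.
    static Set<String> parse(String exposition) {
        Set<String> names = new TreeSet<>();
        for (String line : exposition.split("\\R")) {
            String trimmed = line.trim();
            if (trimmed.isEmpty() || trimmed.startsWith("#")) {
                continue;
            }
            // The name ends at the first '{' (labels) or whitespace (value).
            int end = 0;
            while (end < trimmed.length()
                    && trimmed.charAt(end) != '{'
                    && !Character.isWhitespace(trimmed.charAt(end))) {
                end++;
            }
            names.add(trimmed.substring(0, end));
        }
        return names;
    }

    // Returns the names present in 'expected' but absent from 'actual'.
    static Set<String> missing(Set<String> expected, Set<String> actual) {
        Set<String> result = new TreeSet<>(expected);
        result.removeAll(actual);
        return result;
    }
}
```

Calling MetricNames.missing(MetricNames.parse(appOutput), MetricNames.parse(collectorOutput)) lists the metrics that the application exposes but the collector does not. An empty result means every application metric made it through the collector.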

Attaching the OpenTelemetry Java instrumentation agent

We are now ready to switch our application from exposing Prometheus metrics to providing OpenTelemetry metrics directly. We will get rid of the Prometheus endpoint in the application and use the OpenTelemetry Java instrumentation agent for exposing metrics.

First, we have to reconfigure the receiver side of the OpenTelemetry collector to use the OpenTelemetry Protocol (OTLP) instead of scraping metrics from a Prometheus endpoint:

receivers:
  otlp:
    protocols:
      grpc:
      http:

processors:
  batch:

exporters:
  prometheus:
    endpoint: "localhost:8889"

service:
  pipelines:
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [prometheus]

Now, download the latest version of the OpenTelemetry Java instrumentation agent from https://github.com/open-telemetry/opentelemetry-java-instrumentation/releases. Metrics are disabled in the agent by default, so you need to enable them by setting the environment variable OTEL_METRICS_EXPORTER=otlp. Then, restart the example application with the agent attached:

export OTEL_METRICS_EXPORTER=otlp

java -javaagent:./opentelemetry-javaagent.jar -jar ./target/rest-service-complete-0.0.1-SNAPSHOT.jar

After a minute or so, you will see that the collector exposes metrics on http://localhost:8889/metrics again. However, this time they are shipped to the collector directly using the OpenTelemetry Protocol (OTLP). The Prometheus endpoint of the application is no longer involved. You can now remove the Prometheus endpoint configuration in application.properties and remove the micrometer-registry-prometheus dependency from pom.xml.

However, if you look closely, you will see that the metrics differ from what was exposed before.

Bridging OpenTelemetry and Micrometer

The metrics we are seeing now in the collector are coming from the OpenTelemetry Java instrumentation agent itself. They are not the original metrics maintained by the Spring Boot application. The agent does a good job of giving us some out-of-the-box metrics on the REST endpoint calls, like http_server_duration. However, some metrics are clearly missing, like the logback_events_total metric that was originally provided by the Spring framework. And our custom metric greetings_total is no longer available.

In order to understand the reason, we need to have a look at how Spring Boot metrics work internally. Spring uses Micrometer as its metric library. Micrometer provides a generic API for application developers and offers a flexible meter registry for vendors to expose metrics for their specific monitoring backend.

In the first step above, we used the Prometheus meter registry, which is the Micrometer registry for exposing metrics for Prometheus.

Capturing Micrometer metrics with the OpenTelemetry Java instrumentation agent almost works out of the box: The agent detects Micrometer and registers an OpenTelemetryMeterRegistry on the fly.

Unfortunately, the agent registers with Micrometer’s Metrics.globalRegistry, while Spring uses its own registry instance via dependency injection. If the OpenTelemetryMeterRegistry ends up in the wrong MeterRegistry instance, it is not used by Spring.
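The effect can be illustrated with a small toy model. The classes below are hypothetical stand-ins written for this illustration and are not Micrometer's API. They only mimic the idea that a composite registry forwards new meters to the registries that were added to it, and to no others.

```java
import java.util.ArrayList;
import java.util.List;

// Toy model only: these classes are hypothetical stand-ins, not Micrometer's API.
final class RegistryMismatchDemo {

    private RegistryMismatchDemo() {
    }

    // A leaf registry that records the names of the meters it receives.
    static final class ToyRegistry {
        final List<String> meters = new ArrayList<>();
    }

    // A composite that forwards every new meter to its children.
    static final class ToyComposite {
        private final List<ToyRegistry> children = new ArrayList<>();

        void add(ToyRegistry child) {
            children.add(child);
        }

        void counter(String name) {
            for (ToyRegistry child : children) {
                child.meters.add(name);
            }
        }
    }

    // Returns the meters that the stand-in OpenTelemetry registry ends up seeing.
    static List<String> run(boolean registerWithSpring) {
        ToyComposite global = new ToyComposite(); // stands in for Metrics.globalRegistry
        ToyComposite spring = new ToyComposite(); // stands in for the registry Spring injects
        ToyRegistry otel = new ToyRegistry();     // stands in for OpenTelemetryMeterRegistry

        (registerWithSpring ? spring : global).add(otel);

        // The application only ever talks to the registry Spring injected.
        spring.counter("greetings.total");
        return otel.meters;
    }
}
```

In this model, run(false) returns an empty list because the stand-in OpenTelemetry registry was attached to the global composite and never hears about the counter. run(true) returns a list containing greetings.total.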

In order to fix this, we need to make OpenTelemetry’s OpenTelemetryMeterRegistry available as a Spring bean, so that Spring can register it correctly when it sets up dependency injection. This can be done by adding the following code to your Spring Boot application:

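import java.util.Optional;

import io.micrometer.core.instrument.MeterRegistry;
import io.micrometer.core.instrument.Metrics;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.autoconfigure.condition.ConditionalOnClass;
import org.springframework.context.annotation.Bean;

@SpringBootApplication
public class RestServiceApplication {

    // Unregister the OpenTelemetryMeterRegistry from Metrics.globalRegistry and make it available
    // as a Spring bean instead.
    @Bean
    @ConditionalOnClass(name = "io.opentelemetry.javaagent.OpenTelemetryAgent")
    public MeterRegistry otelRegistry() {
        // Find the registry that the agent added to the global composite registry
        Optional<MeterRegistry> otelRegistry = Metrics.globalRegistry.getRegistries().stream()
                .filter(r -> r.getClass().getName().contains("OpenTelemetryMeterRegistry"))
                .findAny();
        otelRegistry.ifPresent(Metrics.globalRegistry::remove);
        return otelRegistry.orElse(null);
    }

    // ...
}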

The snippet above unregisters the OpenTelemetryMeterRegistry from Micrometer’s Metrics.globalRegistry and exposes it as a Spring bean instead. It will only run if the agent is attached, which is achieved with the @ConditionalOnClass annotation.
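Conceptually, the condition boils down to asking whether a class with the given name can be found at runtime. The sketch below approximates that check in plain Java. It is not Spring's implementation, and AgentDetector and isClassPresent are hypothetical names. Only the agent class name is taken from the annotation above.

```java
// Hypothetical helper, not Spring's implementation: a rough approximation of
// the check that @ConditionalOnClass performs.
final class AgentDetector {

    // Class name taken from the @ConditionalOnClass annotation in the snippet above.
    static final String AGENT_CLASS = "io.opentelemetry.javaagent.OpenTelemetryAgent";

    private AgentDetector() {
    }

    // Returns true if the named class can be found, without initializing it.
    static boolean isClassPresent(String className) {
        try {
            Class.forName(className, false, AgentDetector.class.getClassLoader());
            return true;
        } catch (ClassNotFoundException | LinkageError e) {
            return false;
        }
    }
}
```

A call like AgentDetector.isClassPresent(AgentDetector.AGENT_CLASS) is expected to return false when the application runs without the -javaagent option, which is why the bean definition is skipped in that case.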

After recompiling and restarting the application, all metrics will be made available to the OpenTelemetry collector, including all original Spring Boot metrics and our custom greetings_total.

Some information is redundant, so you can even compare the information in http_server_requests provided by Spring Boot with the information in http_server_duration added by OpenTelemetry’s Java instrumentation agent.

Summary

In this blog post, we showed you how to capture Spring Boot metrics with the OpenTelemetry Java instrumentation agent. We started off by exposing Spring Boot metrics in the Prometheus format, then put the OpenTelemetry collector in the middle, and then switched the example application from exposing a Prometheus endpoint to sending metrics directly via the OpenTelemetry Protocol (OTLP).

Finally, we highlighted a few lines of code you need to add to your Java application to bridge Spring Boot’s Micrometer metrics to the OpenTelemetryMeterRegistry provided by the OpenTelemetry Java instrumentation agent.
