How we responded to a 2+ hour partial outage in Grafana Cloud

On Tuesday, Feb. 18, 2025, we experienced an outage that lasted approximately 150 minutes and impacted roughly 25% of our Grafana Cloud services. To our customers: we are very sorry, and more than a little embarrassed, that we caused this by stepping outside our own processes and advice.

You rely on us to help monitor and troubleshoot your environments, and this type of incident obviously makes it harder for you to do that. At Grafana Labs, we want to practice our belief in a transparent and blameless culture, in which we learn from our mistakes to improve ourselves and the services we provide to our customers.

We take this outage very seriously. After an extensive incident review, we put together this blog post to explain what went wrong, how it was resolved, and what we are doing to prevent something similar from happening again.

Summary

On Tuesday, Feb. 18, 2025 at 08:41 UTC, several members of our Grafana Labs application teams realized that some of their endpoints were no longer available. Our investigation quickly revealed that we had lost the load balancers for these endpoints due to a recent configuration change involving updates to our TLS policies.

We fully recovered most applications by 11:10 UTC, after we rolled back the change.

What seemed like a good, simple update cascaded into an outage because we assumed a small change was low-risk. We then failed to fully test the change and failed to reduce its blast radius. Both of these steps are part of our standard release and deployment process, but on this occasion we did not follow our own practice, and our system allowed development, staging, and production environments to be changed simultaneously.

To be clear, this outage was not a security incident and no customer data were leaked or exposed as a result of this incident. However, many of our customers lost access to their Grafana Cloud services, and those who were unable to ingest metrics or logs may have lost some data before they reached us. This was the result of our poorly expedited change, and for that we sincerely apologize.

A closer look at the incident and the resolution

An engineer was upgrading the TLS policy version on our load balancers, which are used for many services, including the ingest of both metrics and logs data. The engineer updated and tested configurations in our development environment. That manual testing showed that the change could be made safely without redeploying the load balancers.

Code to implement this change was then updated in our configuration management system. We use Crossplane, an open source control plane framework, to manage many of our cloud service provider (CSP) resources with Kubernetes. An update was made to allow the default TLS policy to be overridden by changing the merge policy for the Crossplane composition. We then observed that the overridden value remained in place after the running configuration was reconciled.

Once the results of the code change were seen to function as expected, a full redeployment and test of the code should have been performed in development. We assumed that seeing the override work was enough of a test, but this assumption was wrong.

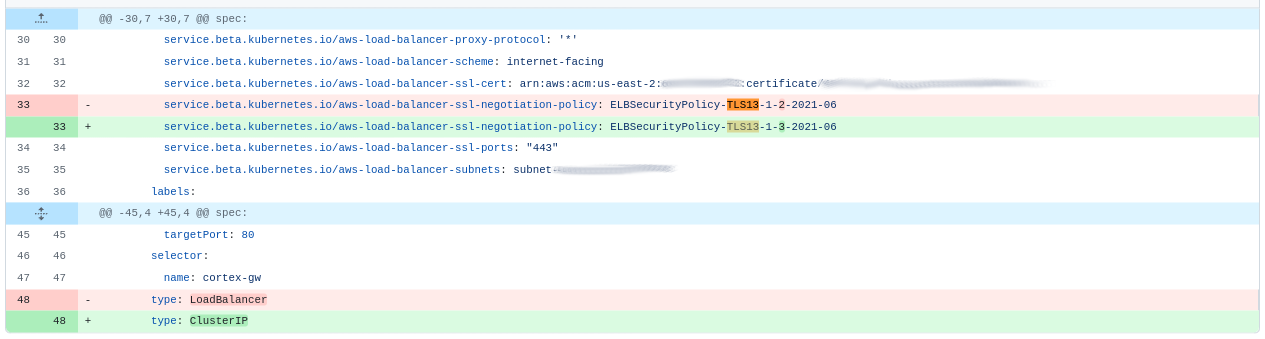

The change actually caused a default value from the base configuration to be unintentionally overridden, resulting in an undesired change in many Kubernetes services from type LoadBalancer to type ClusterIP, causing Kubernetes to destroy the load balancers.
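To make this failure mode concrete, here is a minimal Python sketch of how a merge strategy can silently drop a base default. It is not Crossplane's actual merge logic and not our real composition: the configuration layout, the `tls-policy` key, and both merge functions are hypothetical. The only Kubernetes behavior it relies on is that a Service with no explicit type defaults to ClusterIP.

```python
import copy

# Hypothetical base configuration; the layout is an assumption for illustration.
BASE = {"service": {"type": "LoadBalancer", "annotations": {"tls-policy": "v1"}}}

# Hypothetical override that is only meant to change the TLS policy.
OVERRIDE = {"service": {"annotations": {"tls-policy": "v2"}}}


def shallow_merge(base, override):
    """Top-level keys from the override replace the base keys wholesale."""
    merged = copy.deepcopy(base)
    merged.update(copy.deepcopy(override))
    return merged


def deep_merge(base, override):
    """Nested dictionaries are merged key by key, so base defaults survive."""
    merged = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


def effective_service_type(config):
    """Kubernetes treats a Service without an explicit type as ClusterIP."""
    return config["service"].get("type", "ClusterIP")


if __name__ == "__main__":
    for merge in (shallow_merge, deep_merge):
        result = merge(BASE, OVERRIDE)
        print(merge.__name__, effective_service_type(result),
              result["service"]["annotations"]["tls-policy"])
```

In both cases the TLS policy override is visible in the result, which is why checking only that the override "took" is not a sufficient test. Only the effective service type reveals that one strategy also removed a value nobody intended to change.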

To resolve the problem, we needed to roll back the code change, and then recreate the affected Kubernetes services in order to have Kubernetes recreate the load balancers.

Customers whose agents could not buffer for the period of their services’ outage may have lost some data before they were ingested.

Deployment in waves through to production

At Grafana Labs, we follow good (and no doubt expected) engineering practices. This is evidenced in how we have maintained our SOC 2 and ISO 27001 compliance certifications. These practices include mandatory code reviews and protections against accidental merging of code in our continuous integration (CI) and continuous deployment (CD) pipeline. The code that made the problematic change on this occasion was raised in a GitHub pull request (PR) and reviewed by a qualified peer of the author before being merged and deployed.

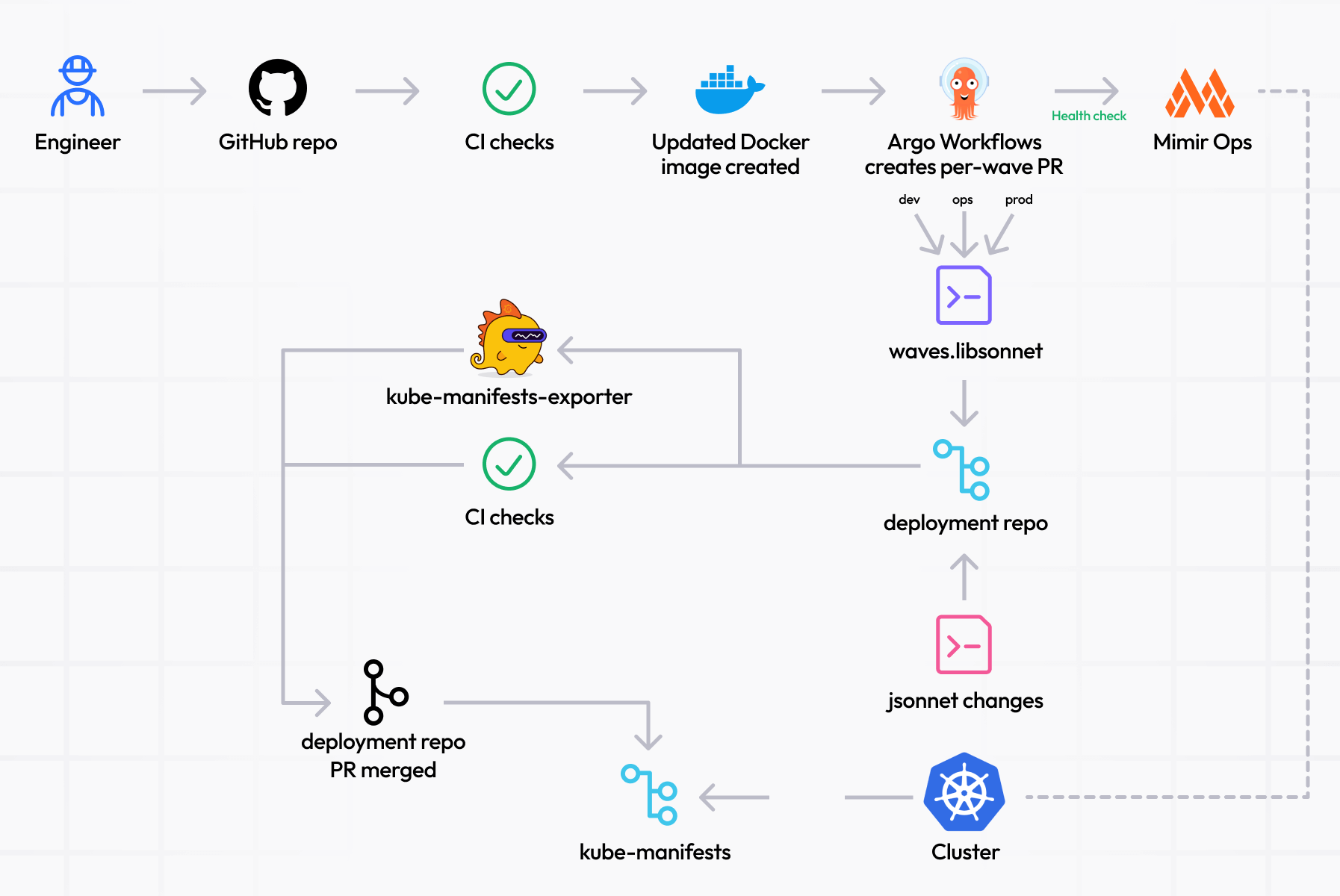

We use the concept of waves to stagger the deployment of changes—first to our development environment, then to staging before canary deployments in production and gradually across production. After each wave we observe the systems to be assured of their health before we continue. Typically, this observation period lasts at least 24 hours. We believe and speak about reducing the blast radius of changes, and we use waves to practice this.

The diagram below illustrates how the PR to create a wave is dependent on the health of the previous wave.

In a case like this TLS update, we would employ what is effectively a feature flag to conditionally apply changes to specific waves. When a library change is made, the updated version would be staggered through the waves, beginning in the development environment.

Without this flag, we would deploy without waves. This is what happened in the case of this accidental load balancer removal. We did not deploy the change as a wave into the development environment through our automated process (it was done manually), so we missed the unexpected behavior of the Kubernetes Crossplane operator and its effect on services.
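The gating idea behind waves can be sketched in a few lines of Python. This is a simplified illustration, not our deployment tooling: the `WaveStatus` type, the `next_wave` function, and the single `production` wave (standing in for the gradual production rollout) are assumptions. The 24-hour observation period is the typical minimum mentioned above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, Optional

# Simplified wave order; the real rollout has several production waves.
WAVES = ["dev", "staging", "canary", "production"]
OBSERVATION_PERIOD = timedelta(hours=24)


@dataclass
class WaveStatus:
    deployed_at: datetime
    healthy: bool


def next_wave(status: Dict[str, WaveStatus], now: datetime) -> Optional[str]:
    """Return the wave that may be deployed next, or None if blocked or done."""
    for index, wave in enumerate(WAVES):
        if wave in status:
            continue  # already deployed, look at the next wave
        if index == 0:
            return wave  # nothing deployed yet: always start in dev
        previous = status[WAVES[index - 1]]
        observed_long_enough = now - previous.deployed_at >= OBSERVATION_PERIOD
        if previous.healthy and observed_long_enough:
            return wave
        return None  # previous wave is unhealthy or still under observation
    return None  # every wave has been deployed


if __name__ == "__main__":
    now = datetime(2025, 2, 18, 12, 0)
    status = {"dev": WaveStatus(now - timedelta(hours=25), healthy=True)}
    print(next_wave(status, now))
```

The point of the design is that a later wave is never selectable unless the earlier wave exists, is healthy, and has been observed for the full period. A change that skips this function entirely, as the manual change did, gets none of that protection.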

What we’re doing to prevent this from happening again

It is nowhere near enough to say that we should have followed our policies and standards to prevent this from happening. Our use of waves needs to be enforced by our systems, not just expected in our processes. Any break-glass exceptions need to be exactly that, exceptions, not the default behavior when waves are not chosen. Reducing the blast radius needs to be technically enforced, not just procedurally expected.

This helps with testing, too. The issue isn’t that this change wasn’t tested, but that it wasn’t tested to the correct extent. A full end-to-end test of many sometimes opaque systems—such as the CSP load balancer controller and our own toolchain components—was required to observe that only the small intended change resulted from this update. Forcing ourselves to only apply the development wave first would have pushed us to proper testing.

We have already implemented some immediate changes, and we’re actively working on some larger efforts that will take more design but have been started:

- We now have a CI check to prevent a deployment to more than one wave, meaning that any exception to the dev→staging→canary→production0…→productionN expectation is caught.

- We have had more sophisticated designs for waves in discussion, and this work has been made a higher priority towards an agreed improvement and implementation.

- We have begun to enable load balancer deletion protection to prevent accidental removal. Deletion protection was already enabled at the Crossplane level, but in this case the Crossplane resources weren’t deleted. They remained, but they changed the underlying objects to an invalid state. Enabling deletion protection on the load balancers protects us one layer deeper.

- Of course, we use more CSP resources than just load balancers, so we are working through the other critical resources to enable deletion protection in the right places.
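As an illustration of the first item in the list above, a check that rejects changes touching more than one wave might look something like the following Python sketch. It is not our actual CI check: the directory layout, the `WAVE_BY_PREFIX` mapping, and the function names are hypothetical, and it assumes that a file outside every wave directory is shared code that affects all waves.

```python
from typing import Iterable, Set, Tuple

# Hypothetical mapping from a repository path prefix to the wave it deploys to.
WAVE_BY_PREFIX = {
    "envs/dev/": "dev",
    "envs/staging/": "staging",
    "envs/canary/": "canary",
    "envs/production0/": "production0",
}


def waves_touched(changed_files: Iterable[str]) -> Set[str]:
    """Collect every wave affected by the changed files."""
    touched: Set[str] = set()
    for path in changed_files:
        wave = next(
            (w for prefix, w in WAVE_BY_PREFIX.items() if path.startswith(prefix)),
            None,
        )
        if wave is None:
            # Assumption: unscoped files are shared and reach every wave at once.
            touched.update(WAVE_BY_PREFIX.values())
        else:
            touched.add(wave)
    return touched


def check_single_wave(changed_files: Iterable[str]) -> Tuple[bool, str]:
    """Pass only if the change is confined to at most one wave."""
    touched = waves_touched(changed_files)
    if len(touched) > 1:
        return False, f"change touches multiple waves: {sorted(touched)}"
    return True, "ok"


if __name__ == "__main__":
    print(check_single_wave(["envs/dev/lb.yaml"]))
    print(check_single_wave(["envs/dev/lb.yaml", "envs/staging/lb.yaml"]))
```

Treating unscoped files as touching every wave is the conservative choice here: a shared library change without a per-wave flag is exactly the kind of change that reached all environments at once in this incident.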

Over the last quarter we have been developing a Crossplane Managed Resources exporter to help us improve change validation and visibility. This is now in beta testing within Platform engineering. When we simulate its use against the change that caused this outage, we can see a clear diff.
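The exporter itself is not shown here. As a rough illustration of the kind of diff that makes such a change visible before it is applied, the following Python sketch compares a current and a proposed resource using the standard library's difflib. The resource contents and the `render_diff` helper are hypothetical and much simpler than real managed resources.

```python
import difflib
import json


def render_diff(current: dict, proposed: dict, name: str) -> str:
    """Return a unified diff between two resources, or "" if they are identical."""
    before = json.dumps(current, indent=2, sort_keys=True).splitlines()
    after = json.dumps(proposed, indent=2, sort_keys=True).splitlines()
    return "\n".join(
        difflib.unified_diff(
            before,
            after,
            fromfile=f"{name} (current)",
            tofile=f"{name} (proposed)",
            lineterm="",
        )
    )


if __name__ == "__main__":
    # Hypothetical resources: only the service type differs.
    current = {"kind": "Service", "spec": {"type": "LoadBalancer"}}
    proposed = {"kind": "Service", "spec": {"type": "ClusterIP"}}
    print(render_diff(current, proposed, "example-service"))
```

Serializing with sorted keys keeps the diff stable, so a reviewer sees only the fields that actually change rather than noise from key ordering.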

Support for the reconfiguration of the TLS policy has also been properly completed and tested, and is ready for application teams to deploy.