Lessons learned from integrating OpenAI into a Grafana data source

Interest in generative AI and large language models (LLMs) has exploded in popularity thanks to a slew of announcements and product releases, such as Stable Diffusion, Midjourney, OpenAI’s DALL-E, and ChatGPT. The arrival of ChatGPT in particular was a bellwether moment, especially for developers. For the first time, an LLM was readily available and good enough that even non-technical people could use it to generate prose, re-write emails, and generate code in seconds.

The LLM landscape is exciting, but it’s also constantly changing. At Grafana Labs, we’ve been focused on how it fits within the context of an open source company, both in terms of how it can help our engineers and how it can be incorporated into our existing projects and products.

On the partner data source team, we saw a potential enhancement to our query editor. So we started experimenting, and there have been a few interesting findings from those experiments that we want to share here. But first, let’s back up a bit. What is an LLM again?

Context and background on our adoption of OpenAI

An LLM (large language model) is a model that generates text (language). At a very basic level, an LLM generates what is statistically the next probable token (token more or less meaning a word) in a phrase or sentence. Good deep-dives on the topic can be found here by Jay Alammar and here by Stephen Wolfram. ChatGPT is a product released by OpenAI and based on the GPT-3 (and now GPT-4) family of models, but it’s trained using Reinforcement Learning from Human Feedback (RLHF). This combination of RLHF + GPT-3 and GPT-4 is what pushed ChatGPT’s performance well past previous LLM products.

Probability and the impact on LLMs

If you’re using or working with an LLM, it’s important to remember that these systems are not deterministic, meaning they do not produce the same output given the same input.

Here’s a contrived example: if you ask ChatGPT to factor pi to the tenth digit, you might get a correct answer and you might not, but you probably won’t get the same answer every time. It’s probabilistic.

Here’s an actual interaction with ChatGPT via Simon Wilison’s wonderful llm tool, where I asked ChatGPT to calculate pi to the tenth digit back to back:

The first answer was elaborate:

To calculate pi to the 10th decimal place, you can use various methods. One common method is to use the Machin-like formula:

π/4 = 4 * arctan(1/5) - arctan(1/239)

By evaluating this formula up to a high precision, we can approximate pi to the desired accuracy. Here’s the approximation using Python programming language:

```python

import math

# Calculate pi using Machin-like formula

pi = 4 * (4 * math.atan(1/5) - math.atan(1/239))

# Print pi to 10 decimal places

formatted_pi = “{:.10f}".format(pi)

print(formatted_pi)

```

The output will be:

3.1415926535

Therefore, pi approximated to the 10th decimal place is 3.1415926535.

The second response was just the answer to the question:

Pi (π) to the 10th digit is approximately:

3.1415926535

Different answers, same prompt. This variation makes sense though, as these models are predicting the next token. Inherent in prediction is the chance the prediction will be different a second time.

A very recent example is the blowback from Mozilla Developer Network (MDN) adding an AI-powered explain button. MDN is the place for documentation around HTML, CSS, and JS. The core of the problem was that the statements from the LLM were presented as factual without any fact checking or even a disclaimer about the accuracy of the results. This is a case where assuming the results will be correct every time led to a bad product experience.

Where and how do LLMs fit?

As we’ve seen so far, we have a system that can be right or wrong — but when it is right, it’s incredibly impressive. So, how does a system like that fit into a traditional, deterministic system like a Grafana data source? One possibility is in the query editor.

At its core, a data source allows the user to query data from an external system and present the result of that query in Grafana. To see how we could integrate an LLM into one of these plugins, we started with the Azure Data Explorer (ADX) data source, which features a query editor that supports the Kusto Query Language (KQL).

The Copilot model

We can’t trust a LLM to generate correct KQL queries every time, so how do we allow an LLM to be wrong without breaking the query editor? We can leverage what we’re calling “the Copilot model.” GitHub introduced their Copilot AI assistant in 2021, and they’ve continued to improve it since. Microsoft, which owns GitHub, has taken that idea to the next level with Microsoft 365 Copilot. One of the breakthroughs, in our opinion, is that Github Copilot generates code inline, which means the user can easily edit or delete anything that is generated if it’s incorrect for some reason.

In short, the Copilot model positions the user as the final authority — not the LLM. This simple alignment means an LLM failure doesn’t really cost or break anything; users can just delete it and keep working. Much like “progressive enhancement” for the web means striving to deliver content despite adverse network conditions or device limitations, “assuming the worst” when working with a LLM is key to ensuring a good user experience.

ADX and OpenAI

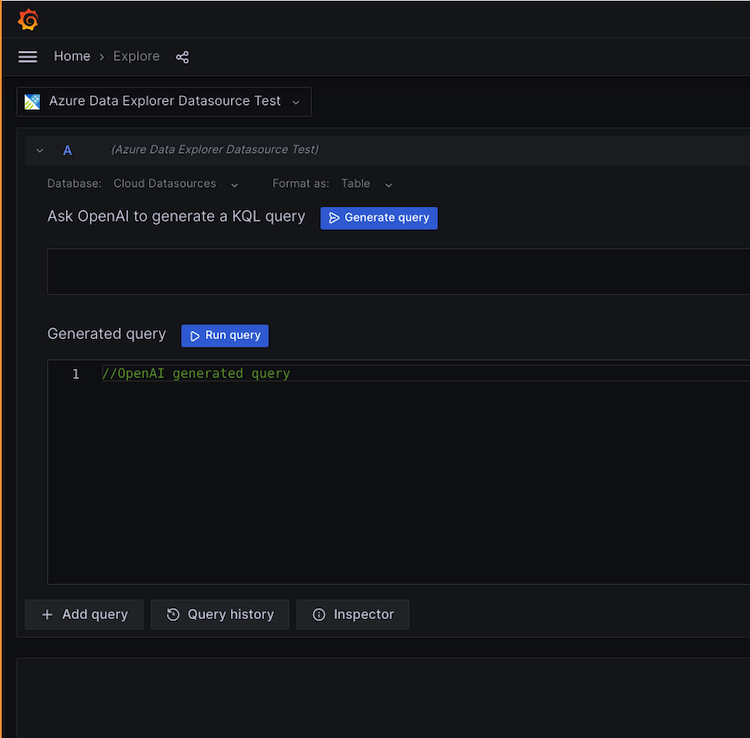

We integrated OpenAI in the query editor for ADX via a prompt input on top of our traditional query editor:

A user can describe in natural language what they want to query, and we take the response from OpenAI and insert it into the query editor below so that the user can examine the resulting query and make sure it’s correct before running it.

Only as good as the prompt

Working with a LLM is primarily prompt-based. This means you “prompt” — or query — the LLM, and the model responds with an answer. Taking a cue from Simon Wilison’s previously mentioned llm tool, we crafted a prompt that goes before the user’s natural language query, instructing ChatGPT to act a certain way, namely:

- Be fluent in KQL

- Only respond with the correct KQL code snippets and no explanations

The latter point is important, because ChatGPT tends to be very helpful by default. This is great in a chat interface but not very helpful in a query editor interface, since it returns detailed, line-by-line explanations of the query and unwanted characters, like backticks around code. The results are usually good enough that it’s worth including the integration. ChatGPT can still return nonsense or non-KQL answers, but those are easy enough to delete and try again.

We’ve been really pleased with how the rollout of this new capability went. Integrating with OpenAI from a technical perspective is quite straightforward and pleasant. There’s more work to come around improving the UX and additional features, so stay tuned!

How we’re looking to expand the use of LLMs going forward

For now, our users must supply their own OpenAI API key in the ADX configuration to utilize this feature. We view this as a benefit, since it means users can use their own organizational or personal OpenAI accounts, instead of funneling every query through Grafana’s OpenAI account.

You can see what we’ve outlined for the next few features or ideas for the integration with OpenAI on the ADX Github repo. I’m particularly curious about an “explain” feature for understanding KQL and potentially better results by sending the schema along with any prompts. We’re also planning to expand to other data sources in the months ahead.

We love feedback as well, if you have an idea for additional ways to leverage LLMs in ADX, open a Github discussion thread!