How to use Grafana and Prometheus to Rickroll your friends (or enemies)

Jacob Colvin is a Site Reliability Engineer at 84.51°, a retail data science, insights, and media company. In his free time, he enjoys working on open source and his homelab.

For some time now there’s been an ongoing trend to play videos using creative or unexpected mediums. To give a few examples, you can find solutions for playing Bad Apple through everything from Desmos to Task Manager.

Meanwhile, a few projects have stored images in Prometheus; I first encountered the idea in Giedrius’s blog post on storing ASCII art in Prometheus. Recently, Atibhi Agrawal introduced support for backfilling Prometheus metrics via promtool, which makes these types of projects much faster and easier. Also, Łukasz Mierzwa recently implemented dark mode, which gives us more display options in the Prometheus UI.

All this means — you guessed it — it’s the perfect time for some observability A/V shenanigans.

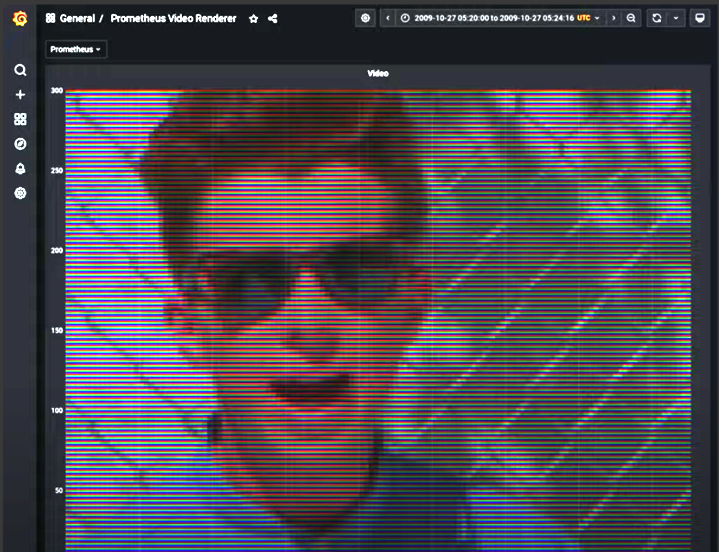

Here you’ll learn how to use Grafana and Prometheus to recreate the popular Rickroll meme, in which a seemingly innocent hyperlink surprises users with the music video for Rick Astley’s classic ’80s hit “Never Gonna Give You Up.”

Playing video with Prometheus

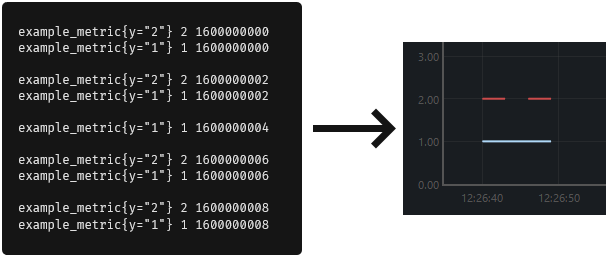

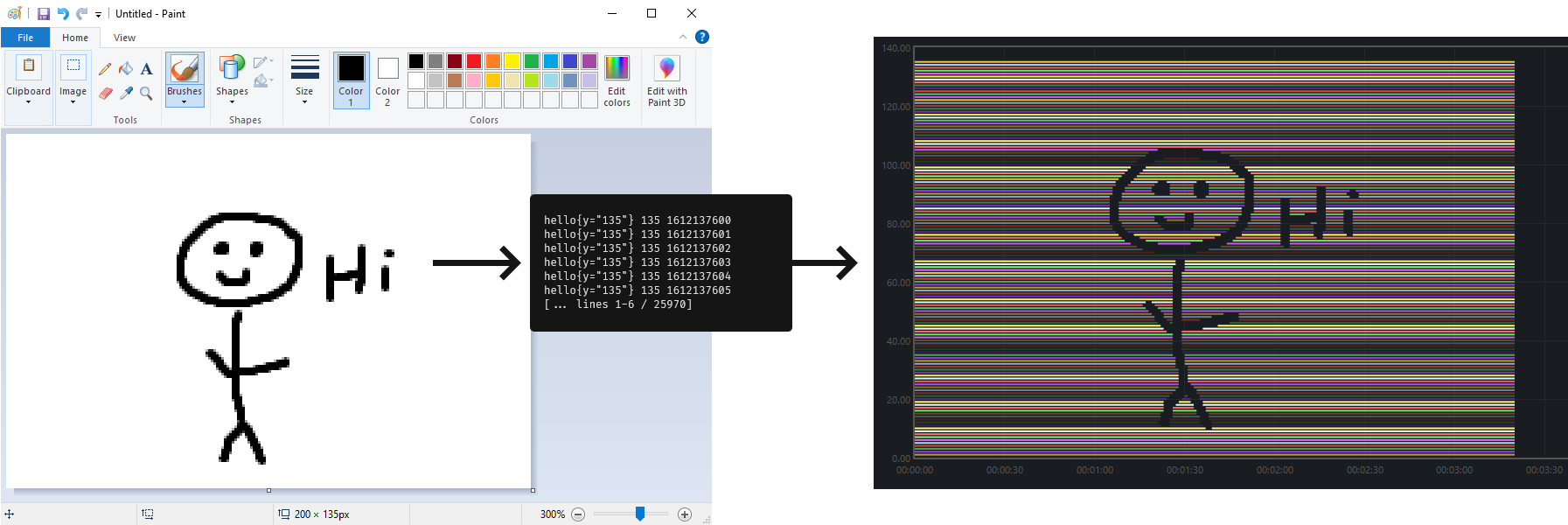

To play video with Prometheus, we first need to display a single image in the Prometheus UI. To do this, we can treat the sample value as the y-coordinate of a given pixel, the timestamp as the x-coordinate, and the sample’s existence or absence as its color. Then we give each sample a label equal to its value (the y-coordinate), so that every row of pixels becomes its own series. For example, we can draw 😑 by hand:
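As a rough illustration of that mapping, here is a minimal Go sketch that turns a small hand-drawn bitmap into OpenMetrics-style sample lines of the kind promtool can backfill. The metric name `face`, the label name `y`, the start timestamp, and the 15-second spacing are all assumptions made for this example, not values from the original project.

```go
package main

import "fmt"

// renderRows converts a hand-drawn bitmap into OpenMetrics-style lines.
// Every '#' becomes one sample: the value and the "y" label are the row's
// height above the bottom of the drawing, and the timestamp encodes the column.
// The bitmap is assumed to be plain ASCII, so byte offsets equal columns.
func renderRows(metric string, rows []string, start, step int64) []string {
	var lines []string
	for i, row := range rows {
		y := len(rows) - 1 - i // rows[0] is the top of the drawing
		for x, c := range row {
			if c != '#' {
				continue // a missing sample is a "dark" pixel
			}
			ts := start + int64(x)*step
			lines = append(lines, fmt.Sprintf("%s{y=\"%d\"} %d %d", metric, y, y, ts))
		}
	}
	return lines
}

func main() {
	// A rough 7x7 face; the shape is only an illustration.
	face := []string{
		".#####.",
		"#.....#",
		"#.#.#.#",
		"#.....#",
		"#.###.#",
		"#.....#",
		".#####.",
	}
	// Hypothetical start time (Unix seconds) and spacing between columns.
	for _, line := range renderRows("face", face, 1600000000, 15) {
		fmt.Println(line)
	}
	fmt.Println("# EOF") // OpenMetrics input must end with this marker
}
```

Emitting the samples row by row keeps each series together with increasing timestamps, which is a convenient shape for a backfill input file.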

Now we just need to generate these metrics automatically from an image file and backfill them via promtool. We can use Go’s image/png package for this, and the docs give us an example of how to read the brightness of each pixel. If the brightness is above 50%, we write a sample; if it’s lower, we write nothing. The coordinates are also pretty straightforward: we just need to invert the y-axis, since the top left of a PNG file is (0,0), whereas for us the origin is the bottom left. We can also predetermine the distance between samples on the x-axis (which I still call the “scrape interval” even though we’re backfilling everything) and multiply the x-coordinate by however much time that is.
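The conversion described above might look roughly like the following sketch. It is not the project's actual code: the `Sample` type, the function names, the metric name `frame`, and the start time and interval are assumptions for illustration. Only `image/png` and the standard grayscale color model come from Go's standard library.

```go
package main

import (
	"fmt"
	"image"
	"image/color"
	"image/png"
	"os"
)

// Sample is one lit pixel: Y is both the sample value and its label,
// TS is the Unix timestamp (seconds) that encodes the x-coordinate.
type Sample struct {
	Y  int
	TS int64
}

// brightnessGrid reads every pixel of img as an 8-bit gray level.
func brightnessGrid(img image.Image) [][]uint8 {
	b := img.Bounds()
	grid := make([][]uint8, 0, b.Dy())
	for y := b.Min.Y; y < b.Max.Y; y++ {
		row := make([]uint8, 0, b.Dx())
		for x := b.Min.X; x < b.Max.X; x++ {
			row = append(row, color.GrayModel.Convert(img.At(x, y)).(color.Gray).Y)
		}
		grid = append(grid, row)
	}
	return grid
}

// gridToSamples keeps pixels brighter than 50% and flips the y-axis so that
// row 0 of the image (its top) ends up with the highest y value.
func gridToSamples(grid [][]uint8, start, interval int64) []Sample {
	var out []Sample
	for row, pixels := range grid {
		for x, level := range pixels {
			if level < 128 {
				continue // dark pixel: write nothing
			}
			out = append(out, Sample{
				Y:  len(grid) - 1 - row,
				TS: start + int64(x)*interval,
			})
		}
	}
	return out
}

func main() {
	if len(os.Args) < 2 {
		fmt.Fprintln(os.Stderr, "usage: frame <image.png>")
		os.Exit(1)
	}
	f, err := os.Open(os.Args[1])
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer f.Close()
	img, err := png.Decode(f)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	// Hypothetical start time and "scrape interval" of 15 seconds.
	for _, s := range gridToSamples(brightnessGrid(img), 1600000000, 15) {
		fmt.Printf("frame{y=\"%d\"} %d %d\n", s.Y, s.Y, s.TS)
	}
	fmt.Println("# EOF")
}
```

Separating pixel reading from sample generation keeps the thresholding and axis flip easy to check against a small hand-written grid.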

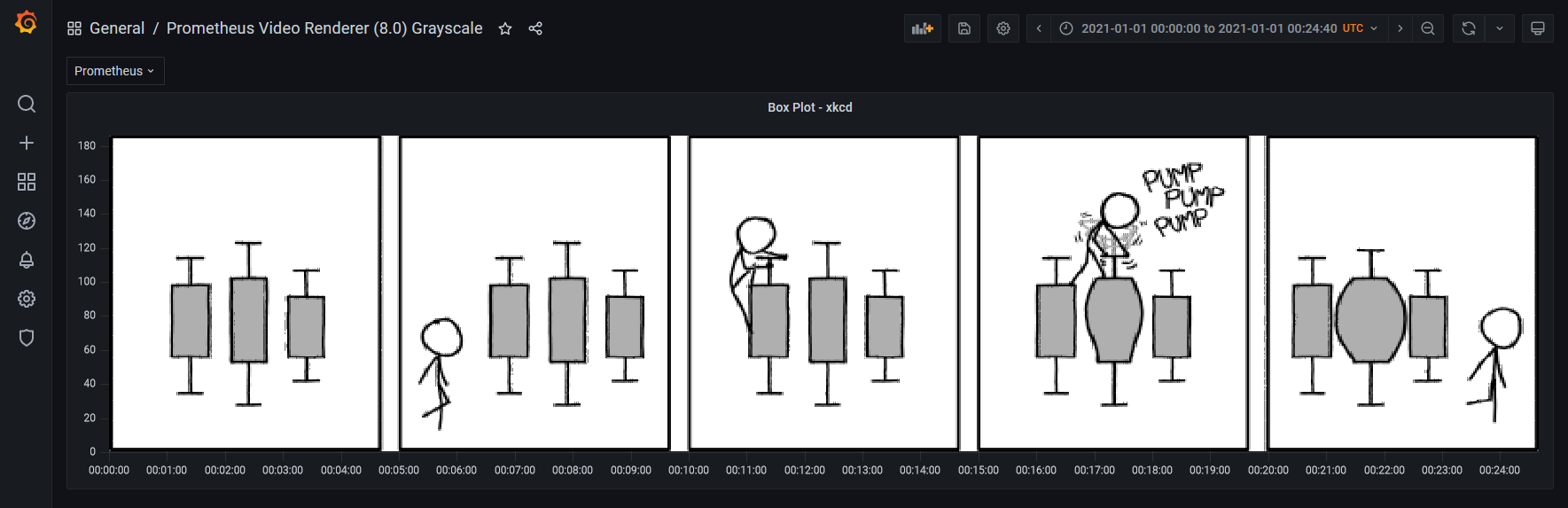

For video, we just need to do this for every frame in the video, consecutively. We can just assume that every image is the same resolution, which makes it simple to calculate the start and end time of each frame. Essentially, we make a film strip inside Prometheus.
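Because every frame has the same width, the time window of a frame follows from simple arithmetic. The sketch below assumes frames are laid end to end with no gap, which the text implies but does not state; the function name and the example numbers are hypothetical.

```go
package main

import "fmt"

// frameWindow returns the time range covered by frame number `frame`
// (counting from 0) when every frame is `width` pixels wide and columns
// are `interval` seconds apart. `to` is the start of the next frame.
func frameWindow(start int64, frame, width int, interval int64) (from, to int64) {
	span := int64(width) * interval
	from = start + int64(frame)*span
	return from, from + span
}

func main() {
	// Hypothetical values: 64-pixel-wide frames, 15 seconds per column.
	for frame := 0; frame < 3; frame++ {
		from, to := frameWindow(1600000000, frame, 64, 15)
		fmt.Printf("frame %d: %d to %d\n", frame, from, to)
	}
}
```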

Now, if we position our graph so that we’re viewing a single frame, moving forward in time will shift us to be in between our frames and moving again will land us on the next frame. We can remove these intermediate half-frames either by just quickly clicking twice or by editing the calcShiftRange function in our browser to not divide the range by 2. We can either record ourselves cycling through frames at a fixed interval (and likely later speed the video up) or take screenshots after metrics load and convert those screenshots to a video with ffmpeg.

Moving to Grafana

Playing video in the Prometheus UI is cool and all, but the graph is bare bones by design. What if we want to display something that isn’t visible, or at least understandable, with only black or white? Grafana is perfect for this task due to its overrides, which allow us to assign colors of our choice to a particular metric. So, to get this working, we just add an additional label to our metrics to define how bright the pixel is. For example, color="0" for black or color="255" for white.

Then, we can add an override for each possible value of color. For example, matching 0 would set the color to rgb(0,0,0). I spent about 2 hours adding each override in the Grafana UI … just kidding. Luckily for us, Grafana maintains a Jsonnet library, Grafonnet, which makes creating this dashboard completely trivial. You can find all my code for this here.
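To give a feel for what the generated overrides contain, here is a sketch in Go rather than Jsonnet (a deliberate substitution so the example stays in one language; the project itself uses Grafonnet). It assumes each series is displayed under the value of its color label, so a by-name matcher can select it. The struct names are made up for this example, and the JSON shape mirrors Grafana's field override model as I understand it.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// These types mirror the shape of a Grafana field override in dashboard JSON.
type matcher struct {
	ID      string `json:"id"`
	Options string `json:"options"`
}

type fixedColor struct {
	Mode       string `json:"mode"`
	FixedColor string `json:"fixedColor"`
}

type property struct {
	ID    string     `json:"id"`
	Value fixedColor `json:"value"`
}

type override struct {
	Matcher    matcher    `json:"matcher"`
	Properties []property `json:"properties"`
}

// grayOverrides builds one override per brightness level, mapping the
// series named "0" to rgb(0,0,0) up to "255" to rgb(255,255,255).
func grayOverrides() []override {
	out := make([]override, 0, 256)
	for level := 0; level < 256; level++ {
		out = append(out, override{
			Matcher: matcher{ID: "byName", Options: fmt.Sprintf("%d", level)},
			Properties: []property{{
				ID: "color",
				Value: fixedColor{
					Mode:       "fixed",
					FixedColor: fmt.Sprintf("rgb(%d,%d,%d)", level, level, level),
				},
			}},
		})
	}
	return out
}

func main() {
	ov := grayOverrides()
	// Print only the first two entries to keep the output short.
	out, err := json.MarshalIndent(ov[:2], "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
	fmt.Printf("%d overrides in total\n", len(ov))
}
```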

Now, we can backfill Prometheus again, this time with our additional label, and load our generated dashboard.

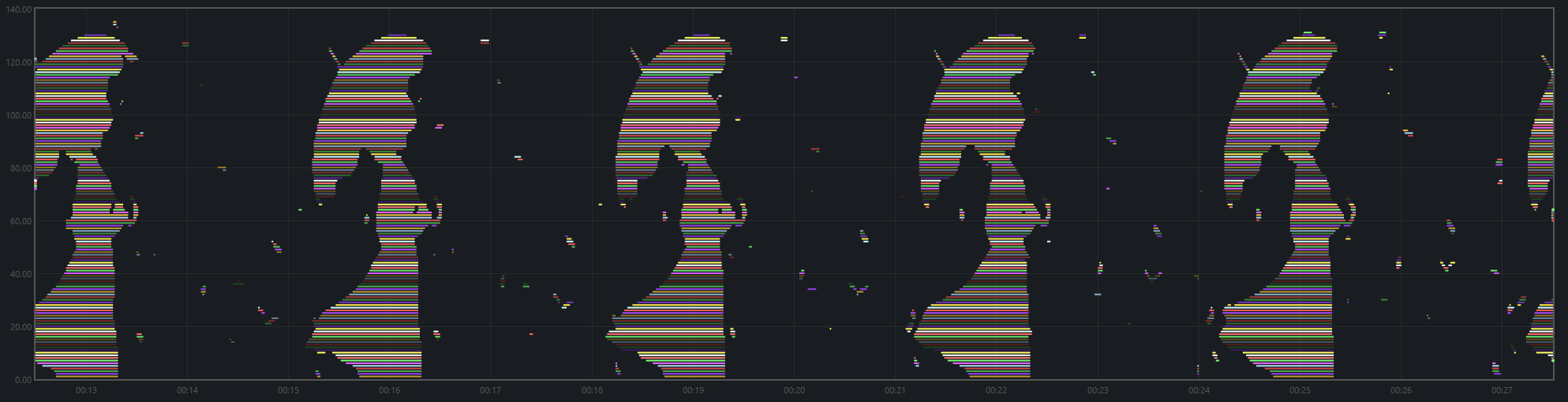

However, there’s a lot more data here than there was previously. Unless you’re okay with <0.1 FPS playback, clicking the forward button repeatedly is no longer practical. At this point, I turned to grafana-image-renderer, which lets us curl Grafana for what is effectively a screenshot of our dashboard with a particular start and end timestamp. We can make a few thousand requests with the correct times and convert the results to a video with ffmpeg. Eventually, I ended up writing a small Go program to assist with this, which you can find here. Using Go also allows us to easily take advantage of grafana-image-renderer’s clustered rendering mode and request a number of images concurrently.
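The request loop can be sketched as follows. This is not the author's program: the dashboard UID `abc`, the slug `video`, the resolution, and the timings are placeholders, and the fetch step is stubbed out so the example runs without a Grafana instance. The URL follows Grafana's `/render/d/<uid>/<slug>` rendering endpoint, where `from` and `to` are given in epoch milliseconds.

```go
package main

import (
	"fmt"
	"net/url"
	"sync"
)

// renderURL builds a render request for one frame of the dashboard.
func renderURL(base, uid, slug string, fromMs, toMs int64, width, height int) string {
	q := url.Values{}
	q.Set("from", fmt.Sprintf("%d", fromMs))
	q.Set("to", fmt.Sprintf("%d", toMs))
	q.Set("width", fmt.Sprintf("%d", width))
	q.Set("height", fmt.Sprintf("%d", height))
	return fmt.Sprintf("%s/render/d/%s/%s?%s", base, uid, slug, q.Encode())
}

// renderAll calls fetch for every URL using a fixed number of workers and
// returns one error slot per URL, in the same order as urls.
func renderAll(urls []string, workers int, fetch func(url string) error) []error {
	if workers < 1 {
		workers = 1
	}
	errs := make([]error, len(urls))
	jobs := make(chan int)
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := range jobs {
				errs[i] = fetch(urls[i])
			}
		}()
	}
	for i := range urls {
		jobs <- i
	}
	close(jobs)
	wg.Wait()
	return errs
}

func main() {
	// Hypothetical layout: each frame spans 960 seconds of backfilled data.
	const frameMs = int64(960 * 1000)
	startMs := int64(1600000000) * 1000
	var urls []string
	for frame := int64(0); frame < 4; frame++ {
		from := startMs + frame*frameMs
		urls = append(urls, renderURL("http://localhost:3000", "abc", "video", from, from+frameMs, 1920, 1080))
	}
	// Stub fetch: a real one would GET the URL with credentials and
	// save the PNG body under a frame-numbered filename for ffmpeg.
	errs := renderAll(urls, 2, func(u string) error {
		fmt.Println("would render", u)
		return nil
	})
	for i, err := range errs {
		if err != nil {
			fmt.Printf("frame %d failed: %v\n", i, err)
		}
	}
}
```

Keeping results indexed by frame number means the images stay in order even though the workers finish at different times.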

Getting colorful

I guess the next step is to somehow map the colors used in the UI and the colors in a video so that it would be possible to do this with not just black/white videos

— @stag1e on Twitter

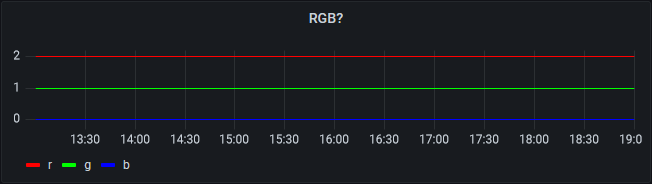

Extending this to get full color should be easy, right? We can just have a label for red, green, and blue, or have a single label with the hex color of the pixel.

This approach reveals a classic problem, however. With a single channel that goes from black to white, 256 possible brightness levels correspond to 256 overrides. If we add another channel, we multiply the possible combinations: two channels (e.g., red and green) with 256 levels each need 65,536 overrides, and adding the third channel for full RGB color means 16,777,216 overrides. This degree of metric cardinality might be just about manageable on my Prometheus instance, but I did not have such high hopes for Chrome, and the thought of copying around massive 100-million-line dashboards did not seem appealing either.
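The growth is easy to check with a few lines of Go. The function name is made up for this example.

```go
package main

import "fmt"

// overridesNeeded returns levels^channels: the number of distinct color
// combinations, and therefore overrides, when every combination of
// channel values needs its own override.
func overridesNeeded(levels, channels int) int {
	n := 1
	for i := 0; i < channels; i++ {
		n *= levels
	}
	return n
}

func main() {
	for channels := 1; channels <= 3; channels++ {
		fmt.Printf("%d channel(s): %d overrides\n", channels, overridesNeeded(256, channels))
	}
}
```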

At this point I thought of another solution, one that could give full RGB color and, more importantly, seemed like it would be cool.

The answer is … screen-ception. Basically, the pixels on the device you’re using are really small. They could be significantly larger and would still maintain the color-mixing illusion.

Here we have three distinct channels:

Mush them together, and that looks a lot like white to me!

The result of this will essentially be three of the previous grayscale panels running side by side. Since all the heavy multiplication is done by your eyeballs, we only need 256 overrides for each channel, for a total of 768 overrides. So, we can simulate as many colors as we could possibly want, free from any cardinality explosions. We can re-use our Jsonnet from before to generate this, by generating and passing new overrides and targets to the same dashboard.
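One way to picture the trick is to expand every RGB pixel into three single-channel subpixels. The sketch below interleaves them as adjacent columns, which is an assumption: the text does not spell out the exact layout. The type names, function name, and channel labels are likewise hypothetical.

```go
package main

import "fmt"

// RGB is one source pixel.
type RGB struct{ R, G, B uint8 }

// Subpixel is one single-channel cell in the widened frame.
type Subpixel struct {
	X       int    // column in the widened frame
	Channel string // "r", "g", or "b"
	Level   uint8  // 0-255 brightness within that channel
}

// expandRow turns each pixel into three neighboring subpixels, so a row
// of n pixels becomes 3n columns. Each channel needs only 256 overrides.
func expandRow(row []RGB) []Subpixel {
	out := make([]Subpixel, 0, len(row)*3)
	for x, p := range row {
		out = append(out,
			Subpixel{X: 3 * x, Channel: "r", Level: p.R},
			Subpixel{X: 3*x + 1, Channel: "g", Level: p.G},
			Subpixel{X: 3*x + 2, Channel: "b", Level: p.B},
		)
	}
	return out
}

func main() {
	row := []RGB{{R: 255, G: 255, B: 255}, {R: 200, G: 30, B: 0}}
	for _, s := range expandRow(row) {
		fmt.Printf("x=%d channel=%s level=%d\n", s.X, s.Channel, s.Level)
	}
}
```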

The possibilities are now endless. (Be sure to watch fullscreen in 4k for the best experience.)

Audio

Is sound next?

— bbrazil on Reddit

The finishing touch for this whole project was to get some kind of solution working for audio. We can’t play anything back through Grafana directly without making some kind of plugin for that, and I wanted to keep my Grafana instance mostly vanilla. However, it would be possible to write a simple client to read data directly from Prometheus and play it back.

If you think about it, Prometheus is pretty ideal for storing audio. Each audio channel is a single wave, and that wave is just a series of numbers. I used the go-wav package to read wav files into an integer slice, and then just backfilled those alongside the relevant metadata (e.g. the sample rate) as labels.
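Once the wav file has been decoded into integers, writing it out is a small variation on the image case: the sample value is the amplitude itself. The sketch below starts from an already decoded slice, so it does not depend on any wav library. The metric name `audio`, the label name `sample_rate`, and the timing values are assumptions for illustration.

```go
package main

import "fmt"

// audioLines writes one OpenMetrics-style line per PCM sample. The sample
// rate travels along as a label so a player can rebuild the wav header.
func audioLines(metric string, pcm []int, sampleRate int, start, interval int64) []string {
	lines := make([]string, 0, len(pcm))
	for i, amplitude := range pcm {
		ts := start + int64(i)*interval
		lines = append(lines, fmt.Sprintf("%s{sample_rate=\"%d\"} %d %d", metric, sampleRate, amplitude, ts))
	}
	return lines
}

func main() {
	// A few made-up samples standing in for decoded wav data.
	pcm := []int{0, 1200, 2300, 1200, 0, -1200, -2300, -1200}
	for _, line := range audioLines("audio", pcm, 44100, 1600000000, 15) {
		fmt.Println(line)
	}
	fmt.Println("# EOF")
}
```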

Any time we want to play back our file, we can use Prometheus’s client_golang to pull small chunks back in order, convert the time series back to wav with the same go-wav package, and play that audio through our speakers with beep.
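Pulling the data back "in small chunks" amounts to splitting the full time range into consecutive windows and issuing one range query per window (for instance with the `QueryRange` method of client_golang's v1 API). A sketch of just the windowing step, with a hypothetical function name and chunk size:

```go
package main

import "fmt"

// chunkWindows splits [start, end) into consecutive windows of at most
// `chunk` seconds each, returned in playback order.
func chunkWindows(start, end, chunk int64) [][2]int64 {
	if chunk <= 0 {
		return nil // a non-positive chunk size would never advance
	}
	var windows [][2]int64
	for from := start; from < end; from += chunk {
		to := from + chunk
		if to > end {
			to = end // the final window may be shorter
		}
		windows = append(windows, [2]int64{from, to})
	}
	return windows
}

func main() {
	// Hypothetical: one hour of backfilled audio, fetched ten minutes at a time.
	for _, w := range chunkWindows(1600000000, 1600003600, 600) {
		fmt.Printf("query range %d to %d\n", w[0], w[1])
	}
}
```

Fetching one window while the previous one plays keeps memory use flat and avoids a single enormous query.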

While audio is not actually playing through Grafana, we can still get a visualization by perfectly lining up the audio and video samples. This will result in relatively low audio quality since the frequency is effectively bound by the horizontal resolution of each frame. Technically we could increase resolution or use different scrape intervals for video and audio, but I did not want to add this complexity. Plus it makes the whole thing a little more believable!

Coming full circle: The Prometheus Dev Summit recording

The culmination of many of these efforts was creating this Prometheus Dev Summit video. This video was over 2 hours long at 24 FPS, meaning there were about 175,000 frames, equating to 30 GB of Prometheus metrics after compaction. The whole video took 3 days to render with grafana-image-renderer’s clustering max concurrency set to 25.

Conclusion

All this was made possible by a huge number of contributions from a lot of different people. If you’re one of those people, thank you. This project probably wouldn’t have been possible without features that were introduced just in 2021.

Finally, if you want to try any of this out for yourself, you can find all of my code and documentation here.