Kafka

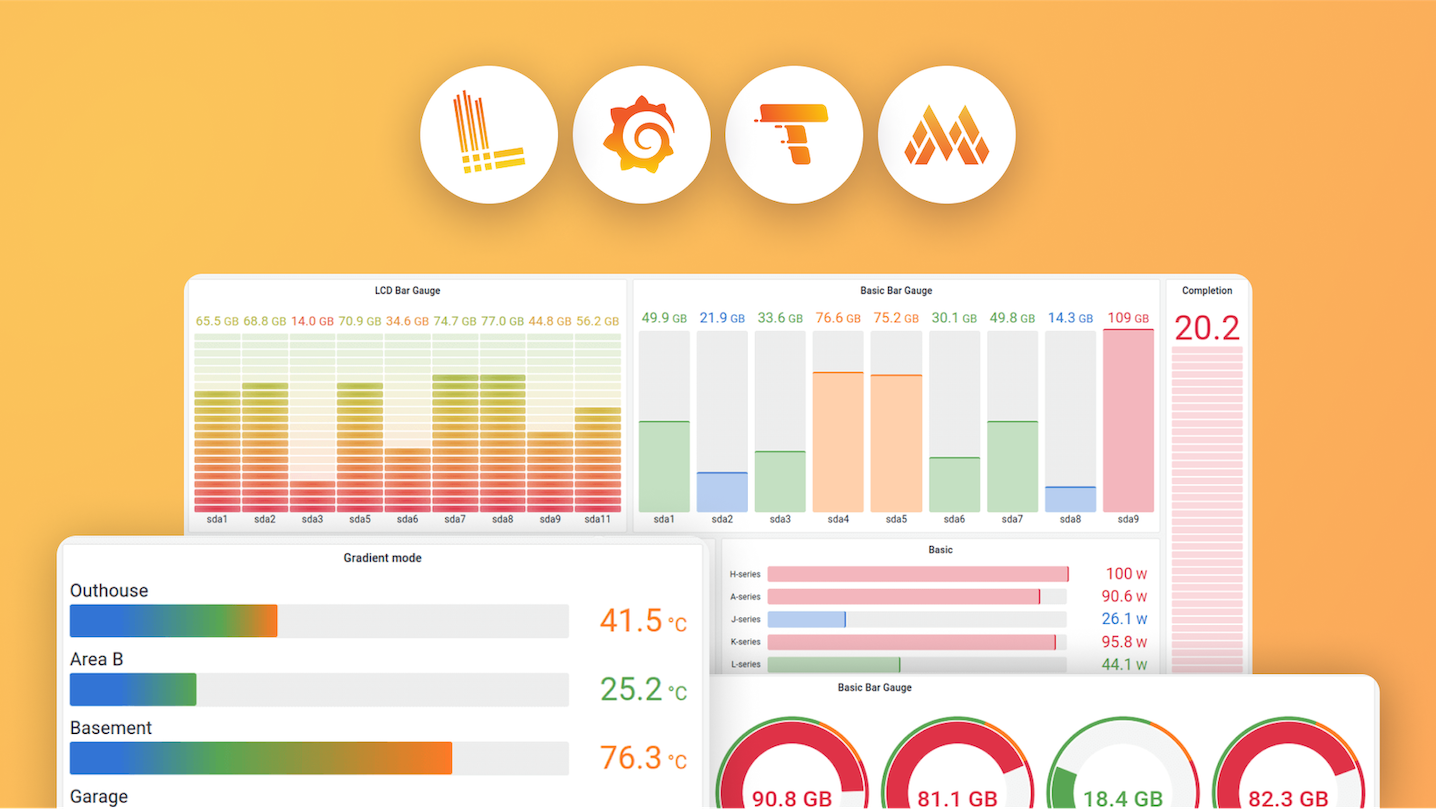

Kafka Datasource for Grafana

Visualize real-time Kafka data in Grafana dashboards.

Why Kafka Datasource?

- Live streaming: Monitor Kafka topics in real time.

- Flexible queries: Select topics, partitions, offsets, and timestamp modes.

- Rich JSON support: Handles flat, nested, and array data.

- Avro support: Integrates with Schema Registry for Avro messages.

- Secure: SASL authentication & SSL/TLS encryption.

- Easy setup: Install and configure in minutes.

How It Works

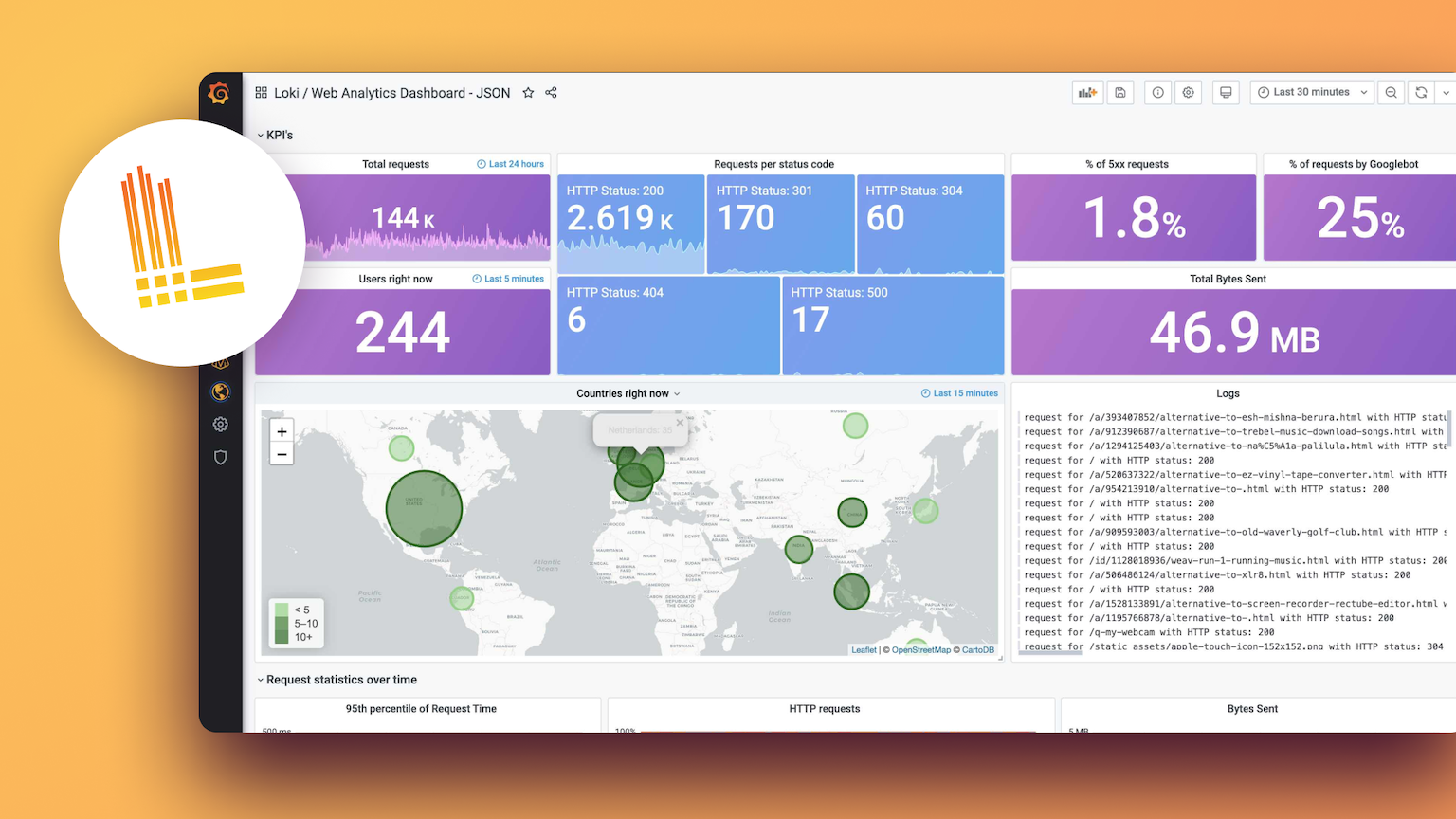

This plugin connects your Grafana instance directly to Kafka brokers, allowing you to query, visualize, and explore streaming data with powerful time-series panels and dashboards.

Requirements

- Apache Kafka v0.9+

- Grafana v10.2+

Note: This is a backend plugin, so the Grafana server itself must be able to reach the Kafka brokers over the network.

Features

- Real-time monitoring of Kafka topics

- Kafka authentication (SASL) & encryption (SSL/TLS)

- Query all or specific partitions

- Autocomplete for topic names

- Flexible offset options (latest, last N, earliest)

- Timestamp modes (Kafka event time, dashboard received time)

- Advanced JSON support (flat, nested, arrays, mixed types)

- Avro support with Schema Registry integration (inline schema or Schema Registry)

- Configurable flattening depth (default: 5)

- Configurable max fields per message (default: 1000)

- Customizable query aliases with placeholders

Installation

Via grafana-cli

grafana-cli plugins install hamedkarbasi93-kafka-datasource

Via zip file

Download the latest release and unpack it into your Grafana plugins directory (default: /var/lib/grafana/plugins).

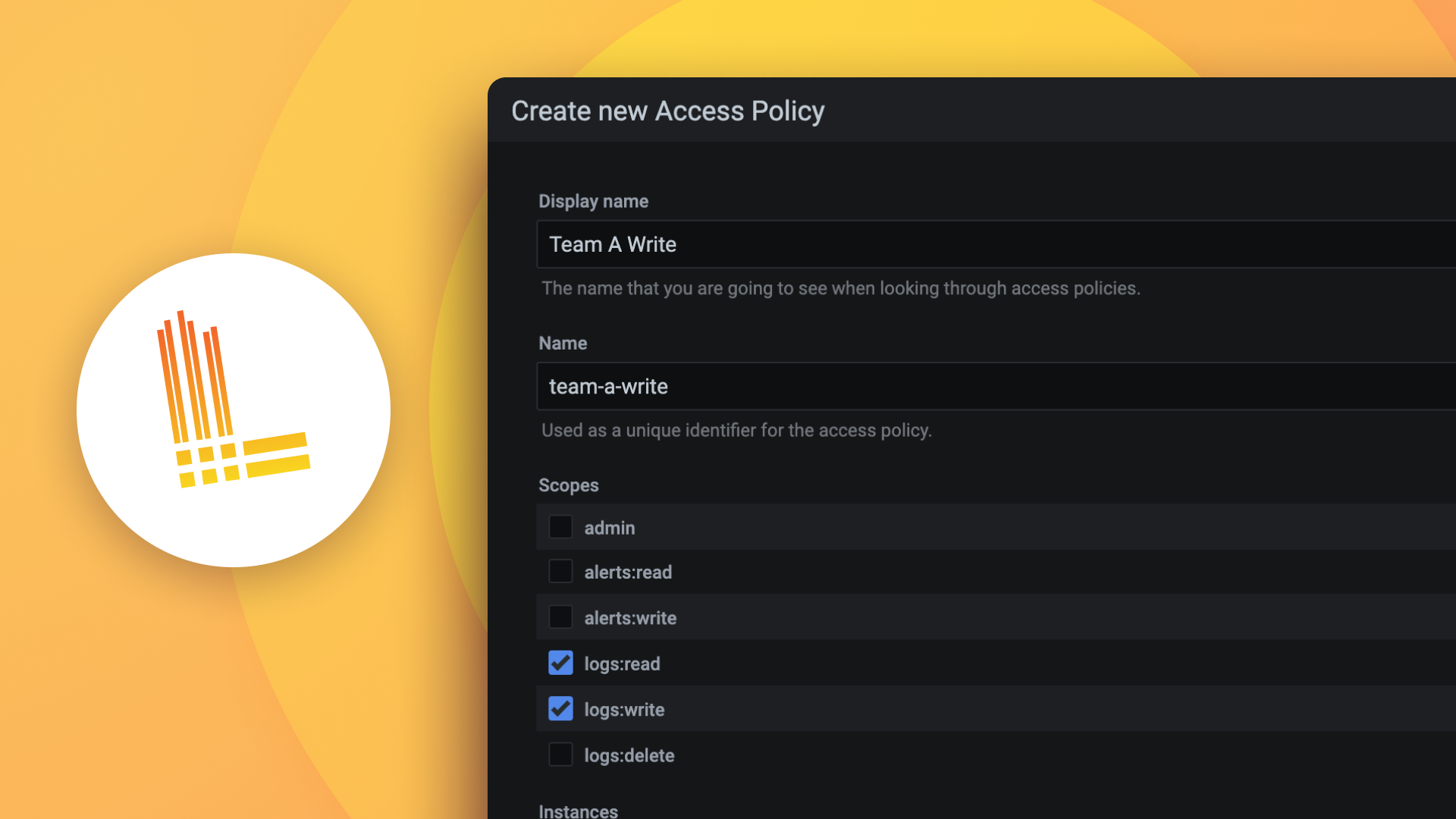

Provisioning

You can automatically configure the Kafka datasource using Grafana's provisioning feature. For a ready-to-use template and configuration options, refer to provisioning/datasources/datasource.yaml in this repository.

Usage

Configuration

- Add a new data source in Grafana and select "Kafka Datasource".

- Configure connection settings:

- Broker address (e.g. localhost:9094 or kafka:9092)

- Authentication (SASL, SSL/TLS, optional)

- Avro Schema Registry (if using Avro format)

- Timeout settings (default: two seconds)

Build the Query

- Create a new dashboard panel in Grafana.

- Select your Kafka data source.

- Configure the query:

- Topic: Enter or select your Kafka topic (autocomplete available).

- Fetch Partitions: Click to retrieve available partitions.

- Partition: Choose a specific partition or "all" for all partitions.

- Message Format:

  - JSON: For JSON messages

  - Avro: For Avro messages (requires schema)

- Offset Reset:

  - latest: Only new messages

  - last N messages: Start from the most recent N messages (set N in the UI)

  - earliest: Start from the oldest message

- Timestamp Mode: Choose between Kafka event time or dashboard received time.

- Alias: Optional custom name for the query series. Supports template placeholders:

  - {{topic}}: The Kafka topic name

  - {{field}}: The field name (for field display names)

  - {{partition}}: The partition number

  - {{refid}}: The query RefID (e.g., A, B)
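To illustrate how the alias placeholders listed above resolve, here is a minimal sketch of placeholder substitution. It is not the plugin's own code: `aliasVars` and `expandAlias` are hypothetical names, and leaving unknown placeholders untouched is an assumption of this sketch.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// aliasVars holds the values substituted into an alias template.
type aliasVars struct {
	Topic     string
	Field     string
	Partition int
	RefID     string
}

// expandAlias replaces the four documented placeholders with concrete
// values. Any other text, including unknown placeholders, is left as-is.
func expandAlias(template string, v aliasVars) string {
	r := strings.NewReplacer(
		"{{topic}}", v.Topic,
		"{{field}}", v.Field,
		"{{partition}}", strconv.Itoa(v.Partition),
		"{{refid}}", v.RefID,
	)
	return r.Replace(template)
}

func main() {
	v := aliasVars{Topic: "test", Field: "temperature", Partition: 0, RefID: "A"}
	// Prints: test[0] temperature (A)
	fmt.Println(expandAlias("{{topic}}[{{partition}}] {{field}} ({{refid}})", v))
}
```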

Tip: Numeric fields become time series and string fields become labels; arrays and nested objects are automatically flattened for visualization.
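The three Offset Reset options can be understood as choosing a starting offset inside a partition. The sketch below is a conceptual model only, not the plugin's implementation: `resetMode` and `startOffset` are hypothetical names, `low` stands for the oldest offset still available in the partition, and `high` stands for the offset the next produced message will receive.

```go
package main

import "fmt"

// resetMode mirrors the three Offset Reset options in the query editor.
type resetMode int

const (
	resetLatest resetMode = iota
	resetLastN
	resetEarliest
)

// startOffset returns the offset a reader would start from in one
// partition. n is only used for resetLastN and is clamped so the result
// always stays within [low, high].
func startOffset(mode resetMode, low, high, n int64) int64 {
	switch mode {
	case resetEarliest:
		return low
	case resetLastN:
		if n < 0 {
			n = 0
		}
		start := high - n
		if start < low {
			start = low // fewer than n messages are available
		}
		return start
	default: // resetLatest: only messages produced from now on
		return high
	}
}

func main() {
	low, high := int64(100), int64(150)
	fmt.Println(startOffset(resetLatest, low, high, 0))    // 150
	fmt.Println(startOffset(resetLastN, low, high, 10))    // 140
	fmt.Println(startOffset(resetLastN, low, high, 1000))  // 100
	fmt.Println(startOffset(resetEarliest, low, high, 0))  // 100
}
```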

Supported JSON Structures

- Flat objects

- Nested objects (flattened)

- Top-level arrays

- Mixed types

Examples:

Simple flat object:

{
  "temperature": 23.5,
  "humidity": 65.2,
  "status": "active"
}

Nested object (flattened as user.name, user.age, settings.theme):

{
  "user": {
    "name": "John Doe",
    "age": 30
  },
  "settings": {
    "theme": "dark"
  }
}

Top-level array (flattened as item_0.id, item_0.value, item_1.id, etc.):

[
  { "id": 1, "value": 10.5 },
  { "id": 2, "value": 20.3 }
]
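The flattening shown in the examples above can be sketched in a few lines of Go. This is an illustration under stated assumptions, not the plugin's actual code: `flattenJSON`, `flatten`, and `join` are hypothetical names; values nested deeper than the depth limit are simply dropped here; arrays nested inside objects reuse the `item_N` naming that this README documents only for top-level arrays; and keys are visited in sorted order so the field limit behaves deterministically.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
	"strconv"
)

// join builds a dotted key such as "user.name".
func join(prefix, key string) string {
	if prefix == "" {
		return key
	}
	return prefix + "." + key
}

// flatten walks a decoded JSON value and stores leaf values in out under
// dotted keys. Containers at depth >= maxDepth are skipped, and no key is
// added once out holds maxFields entries.
func flatten(prefix string, v any, depth, maxDepth, maxFields int, out map[string]any) {
	if len(out) >= maxFields {
		return
	}
	switch t := v.(type) {
	case map[string]any:
		if depth >= maxDepth {
			return
		}
		keys := make([]string, 0, len(t))
		for k := range t {
			keys = append(keys, k)
		}
		sort.Strings(keys) // deterministic order (an assumption of this sketch)
		for _, k := range keys {
			flatten(join(prefix, k), t[k], depth+1, maxDepth, maxFields, out)
		}
	case []any:
		if depth >= maxDepth {
			return
		}
		for i, child := range t {
			flatten(join(prefix, "item_"+strconv.Itoa(i)), child, depth+1, maxDepth, maxFields, out)
		}
	default:
		// Leaf value. A top-level scalar would land under the empty key.
		out[prefix] = t
	}
}

// flattenJSON decodes a JSON message and flattens it with the given limits.
func flattenJSON(data []byte, maxDepth, maxFields int) (map[string]any, error) {
	var v any
	if err := json.Unmarshal(data, &v); err != nil {
		return nil, err
	}
	out := make(map[string]any)
	flatten("", v, 0, maxDepth, maxFields, out)
	return out, nil
}

func main() {
	msg := []byte(`{"user":{"name":"John Doe","age":30},"settings":{"theme":"dark"}}`)
	fields, err := flattenJSON(msg, 5, 1000) // defaults documented in this README
	if err != nil {
		fmt.Println("decode error:", err)
		return
	}
	for k, v := range fields {
		fmt.Println(k, "=", v)
	}
}
```

With the documented defaults (depth 5, 1000 fields), the nested example yields the keys user.name, user.age, and settings.theme, and the top-level array example yields item_0.id, item_0.value, item_1.id, and item_1.value.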

Limitations

- Protobuf not yet supported (Avro is supported with schema registry integration)

Live Demo

Sample Data Generator

Want to test the plugin? Use our Go sample producer to generate realistic Kafka messages:

go run example/go/producer.go -broker localhost:9094 -topic test -interval 500 -num-partitions 3 -shape nested

Avro example (produce Avro messages using Schema Registry):

go run example/go/producer.go -broker localhost:9094 -topic test-avro -interval 500 -num-partitions 3 -format avro -schema-registry http://localhost:8081

The producer supports flat, nested, and array JSON payloads, as well as Avro with Schema Registry integration, and offers verbose logging for debugging. See example/README.md for details.

FAQ & Troubleshooting

- Can I use this with any Kafka broker? Yes, it supports Apache Kafka v0.9+ and compatible brokers.

- Does it support secure connections? Yes, SASL and SSL/TLS are supported.

- What JSON formats are supported? Flat, nested, arrays, mixed types.

- How do I generate test data? Use the included Go or Python producers.

- Where do I find more help? See this README or open an issue.

Documentation & Links

Support & Community

If you find this plugin useful, please consider giving it a ⭐ on GitHub or supporting its development.

For more information, see the documentation files above or open an issue/PR.

Grafana Cloud Free

- Free tier: Limited to 3 users

- Paid plans: $55 / user / month above included usage

- Access to all Enterprise Plugins

- Fully managed service (not available to self-manage)

Self-hosted Grafana Enterprise

- Access to all Enterprise plugins

- All Grafana Enterprise features

- Self-manage on your own infrastructure

Installing Kafka on Grafana Cloud:

Installing plugins on a Grafana Cloud instance is a one-click install; same with updates. Cool, right?

Note that it could take up to 1 minute to see the plugin show up in your Grafana.

Warning

Plugin installation from this page will be removed in February 2026. Use the Plugin Catalog in your Grafana instance instead. Refer to Install a plugin in the Grafana documentation for more information.

For more information, visit the docs on plugin installation.

Installing on a local Grafana:

For local instances, plugins are installed and updated with a single CLI command. Plugins are not updated automatically; however, Grafana notifies you when updates are available.

1. Install the Data Source

Use the grafana-cli tool to install Kafka from the command line:

grafana-cli plugins install hamedkarbasi93-kafka-datasource

The plugin will be installed into your Grafana plugins directory; the default is /var/lib/grafana/plugins. More information on the CLI tool is available in the Grafana documentation.

Alternatively, you can manually download the .zip file and unpack it into your Grafana plugins directory.

2. Configure the Data Source

Newly installed data sources can be added immediately from the Data Sources section of the Grafana main menu.

Next, click the Add data source button in the upper right. The data source will be available for selection in the Type select box.

To see a list of installed data sources, click the Plugins item in the main menu. Both core data sources and installed data sources will appear.

Changelog

v1.3.0

- Enhancement: Upgrade dependencies to address security vulnerabilities (#126)

- Fix: Improve SASL defaulting, error clarity, and health check timeout handling (#123)

- Feat: Add support for refid and alias in queries (#122)

- Fix: Multiple query support by making streaming stateless (#121)

v1.2.3

- Enhancement: Change info levels to debug for all requests in the backend (#118)

v1.2.2

- Feat: Add PDC support for schema registry using grafana-plugin-sdk-http-client (#113)

- Enhancement: Add linting for frontend and backend with pre-commit hooks and CI updates (#115)

- Enhancement: Replace inline styling with emotion styling and update related tests (#116)

v1.2.1

- Refactor: Improve e2e test stability and maintainability (#112)

v1.2.0

- Feat: Implement Avro support (#96) — add Avro parsing and support for Avro-encoded messages in the plugin.

- Chore: Bump @grafana/create-plugin configuration to 6.4.3 (#109)

v1.1.0

- Fix: Address security issues in dependencies and configuration (#107)

- Fix: Use type registry with nullable types to prevent data loss when encountering null values (#104)

- Perf: Pre-allocate frame fields to eliminate append overhead (#106)

- Feat: Configurable JSON flatten limits (#99)

- Add: Provisioned dashboard (#94)

- Fix: Mutation issue in config editor and query editor (#91)

- Fix: e2e tests for query editor for Grafana versions later than 12.2 (#102)

- Add: Devcontainer for development (#90)

- Test: Improve mutation tests with deep freezing (#93)

- CI: Skip publish-report for fork PRs (#103)

v1.0.1

- Restructure and split documentation (README, docs/development.md, docs/contributing.md, docs/code_of_conduct.md)

- Add “Create Plugin Update” GitHub Action

- Add release workflow pre‐check to ensure tag exists in CHANGELOG

- Bump plugin and package versions to 1.0.1

v1.0.0 (2025-08-14)

v0.6.0 (2025-08-13)

Topic Selection with Autocomplete #78

Improve the offset reset field #76

Add support for selecting all partitions #75

Is it possible to reset offset? #47

Clarify the options of the timestamp mode #77

v0.5.0 (2025-08-01)

Instruction for provisioning #43

Add tests #27

Replace deprecated Grafana SCSS styles #48

roll back required permissions for the attestations #74 (hoptical)

Source Dropdown in query editor #24

v0.4.0 (2025-07-19)

v0.3.0 (2025-05-24)

[BUG] Cannot connect to the brokers #55

[BUG] Plugin Unavailable #45

plugin unavailable #44

[BUG] 2 Kafka panels on 1 dashboard #39

[BUG] The yarn.lock is out of sync with package.json since the 0.2.0 commit #35

User has to refresh page to trigger streaming #28

Mage Error #6

use access policy token instead of the legacy one #66 (hoptical)

Add developer-friendly environment #52

Migrate from Grafana toolkit #50

Can you make an arm64-compatible version of this plugin? #49

Add Authentication & Authorization Configuration #20

Add support for using AWS MSK Managed Kafka #38 (ksquaredkey)

Issue 35 update readme node14 for dev (#1) #37 (ksquaredkey)

v0.2.0 (2022-07-26)

v0.1.0 (2021-11-14)

Use channel instead of time.after #14

Code base cleaning #12

Handle Messages with the specified format #5

Specify query editor #4

Specify Kafka Configuration in Config Editor #3

consumer-group stuck in rebalancing situation #2

modify description and minor information in plugin #18 (hoptical)

messages are handled via channel instead of time.after #15 (hoptical)