Synthetic Monitoring alerting

Synthetic Monitoring integrates with Grafana Cloud alerting via Alertmanager to provide alerts. The synthetic monitoring plugin provides some default alerting rules. These rules evaluate metrics published by the probes into your cloud Prometheus instance. Firing alert rules can be routed to notification receivers configured in Grafana Cloud alerting.

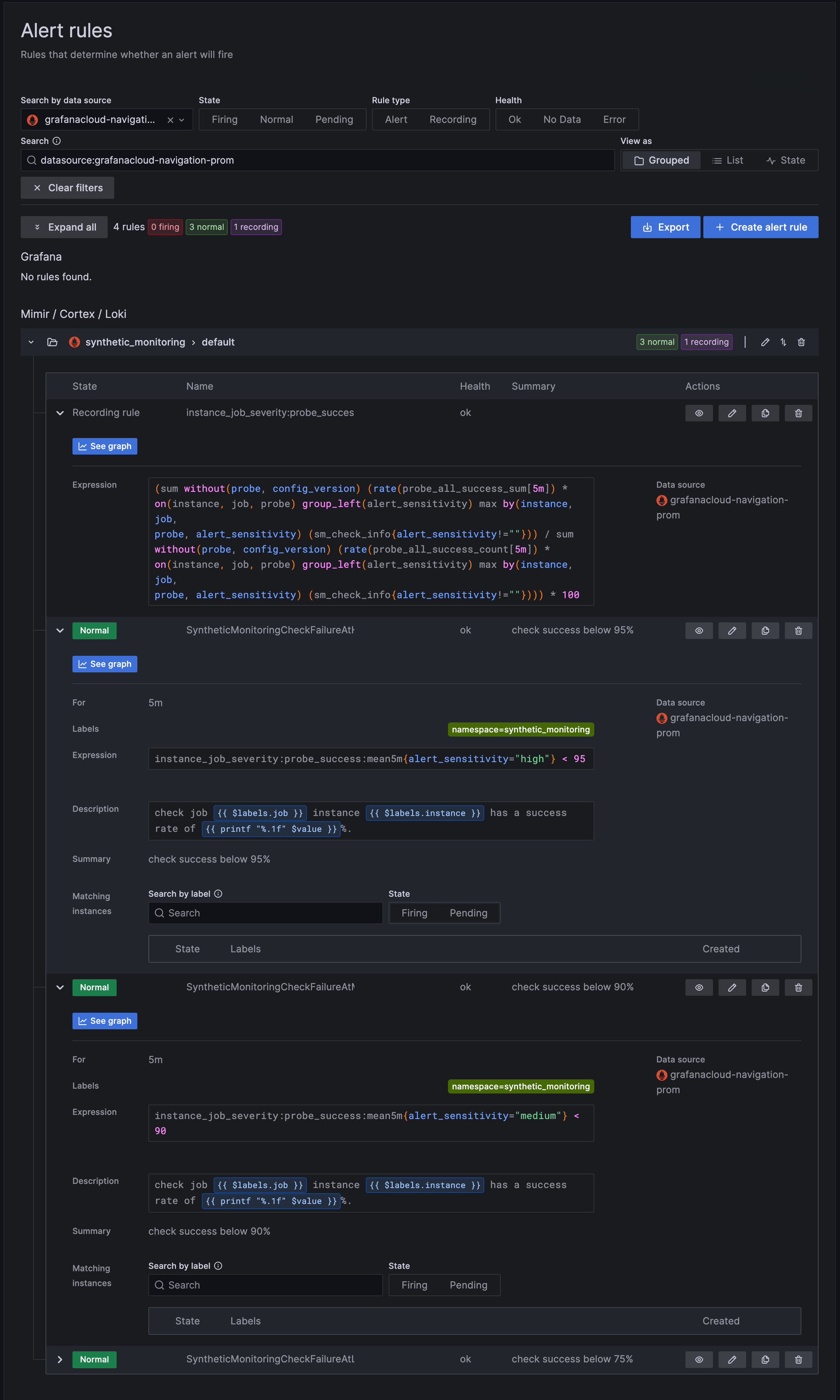

The default alerting rules are:

- HighSensitivity: If 5% of probes fail for 5 minutes, then fire an alert [via the routing that you have set up]

- MedSensitivity: If 10% of probes fail for 5 minutes, then fire an alert [via the routing that you have set up]

- LowSensitivity: If 25% of probes fail for 5 minutes, then fire an alert [via the routing that you have set up]

How to create an alert for a Synthetic Monitoring check

You can create an alert as part of creating or editing a check. You must log in to a Grafana Cloud instance to create or edit alerts. Alerting in Synthetic Monitoring happens in two phases: configuring a check to publish an alert sensitivity metric label value and configuring alert rules.

To configure a check to publish the alert sensitivity metric label value:

- Navigate to Testing & synthetics > Synthetics > Checks.

- Click New Check to create a new check or edit a preexisting check in the list.

- Click the Alerting section to show the alerting fields.

- Select a sensitivity level to associate with the check and click Save.

This sensitivity value is published to the alert_sensitivity label on the sm_check_info metric each time the check runs on a probe. The default alerts use that label value to determine which checks to fire alerts for.

To configure alert rules:

- Navigate to Testing & synthetics > Synthetics > Alerts.

- If you have no default rules set up already for Synthetic Monitoring, click the Populate default alerts button.

- Some default rules will be generated for you. These rules represent sensitivity “buckets” based on probe success percentage. Checks that have been marked with a sensitivity level and whose success percentage drops below the threshold will cause the rule to fire. Checks that have a sensitivity level of “none” will not cause any of the default rules to fire.

How the default alert rules work under the hood

Generating the default rules results in four rules: one alerting rule for each sensitivity level, and a recording rule. The recording rule evaluates the success rate of the check and looks at whether the alert_sensitivity label has a value. If alert_sensitivity is defined, the whole expression results in a value that gets recorded. Otherwise it’s ignored.
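As a rough illustration of the recording rule described above, the following sketch uses the Prometheus rule-file format. Only sm_check_info and alert_sensitivity come from this page. The recorded series name and the probe_all_success_sum and probe_all_success_count metric names are assumptions, and the rule that the plugin actually generates may differ.

```yaml
# Sketch only: the recorded series name and the probe_all_success_* metric
# names are assumptions, not taken from this page.
groups:
  - name: default
    rules:
      - record: instance_job_severity:probe_success:mean5m
        expr: |
          (
            # Successful executions per check, kept only for checks whose
            # sm_check_info series has a non-empty alert_sensitivity label.
            sum without (probe, config_version) (
              rate(probe_all_success_sum[5m])
              * on (instance, job, probe) group_left (alert_sensitivity)
              max by (instance, job, probe, alert_sensitivity) (sm_check_info{alert_sensitivity!=""})
            )
            /
            # Total executions per check, filtered the same way.
            sum without (probe, config_version) (
              rate(probe_all_success_count[5m])
              * on (instance, job, probe) group_left (alert_sensitivity)
              max by (instance, job, probe, alert_sensitivity) (sm_check_info{alert_sensitivity!=""})
            )
          ) * 100
```

The join against sm_check_info is what drops checks without a sensitivity label, and it also copies the alert_sensitivity label onto the recorded success percentage so that alerting rules can select on it.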

The remaining three alerting rules use the value created by the recording rule, look at the predefined values for the alert_sensitivity label, and map those to thresholds. The thresholds are editable, but the predefined values are not. For example, if a check has alert_sensitivity=high, its success rate is evaluated and compared to the default threshold (in this case, 95%). The threshold of 95% can be edited, but the value of alert_sensitivity has to be none, low, medium, or high for the check to use the default alert rules. Users can add other alert_sensitivity values if they like; the recording rule still produces a result, but there won’t be a predefined alert rule for that label value. That allows the user to create custom rules, possibly with custom thresholds, and to use Alertmanager to route based on the labels.
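A custom rule for a non-default sensitivity value might look like the sketch below. The group name, alert name, the value critical, the 99% threshold, the 2-minute duration, and the recorded series name are all hypothetical. Substitute the series name that your recording rule actually produces.

```yaml
# Sketch only: every name and number here is a hypothetical example.
groups:
  - name: custom_sensitivity
    rules:
      - alert: SyntheticCheckFailureAtCriticalSensitivity
        # Assumes the recording rule writes the success percentage (0-100)
        # to this series and keeps the alert_sensitivity label on it.
        expr: instance_job_severity:probe_success:mean5m{alert_sensitivity="critical"} < 99
        for: 2m
        labels:
          # Label added so that routing can match on the namespace.
          namespace: synthetic_monitoring
        annotations:
          summary: 'Check {{ $labels.job }} on {{ $labels.instance }} is below 99% success'
```

Because the alert inherits the alert_sensitivity label from the recorded series, Alertmanager can route it on alert_sensitivity: critical in the same way as the default values.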

How alerts are evaluated

After a check has been configured to publish an alert sensitivity metric label value and alert rules have been configured, the alert rules will be evaluated each time the check runs on a probe. If the success rate of a check drops below the threshold for the sensitivity level associated with the check, the alert rule will enter a pending state. The duration of the pending state is editable but defaults to 5 minutes. If the success rate stays below the threshold for the configured amount of time, the rule will go into a firing state. You can read more about alert status in the Grafana Alerting docs.
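The pending and firing states described above correspond to the for field of a Prometheus-style alerting rule. The sketch below shows what a high-sensitivity rule could look like. The alert name, recorded series name, and labels are assumptions, and the generated rule may be written differently.

```yaml
# Sketch only: the alert name, series name, and labels are assumptions.
groups:
  - name: default
    rules:
      - alert: SyntheticMonitoringCheckFailureAtHighSensitivity
        # The rule becomes pending as soon as the success percentage for a
        # high-sensitivity check drops below the 95% threshold.
        expr: instance_job_severity:probe_success:mean5m{alert_sensitivity="high"} < 95
        # It moves from pending to firing only if the expression stays
        # true for this whole duration (5 minutes by default).
        for: 5m
        labels:
          namespace: synthetic_monitoring
```

If the success rate recovers before the for duration elapses, the rule returns to its normal state without firing.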

How to edit an alert for a check

Alerts can be edited in Synthetic Monitoring on the alerts page or in the Cloud Alerting UI.

Note

It’s possible that substantially editing an alert rule in the Cloud Alerting UI causes it to no longer be editable in the Synthetic Monitoring UI. In that case, the alert rule will only be editable from Grafana Cloud alerting. For example, if you edit the value “0.9” to “0.75”, this change will propagate back to the synthetic monitoring alerts tab, and the alert will fire according to your edit. However, if you edit the value “0.9” to “steve”, the alert will be invalid and no longer editable in the synthetic monitoring alerts tab UI.

How to set up routing for default alerts

Default alerts contain only the alert rules. Without setting up routing, these alerts will not be routed to any notification receiver, so they won’t notify anybody when they fire. You must set up routing in Alertmanager within Grafana Cloud alerting.

Feel free to write your configuration for routing in the text box editor.

You may set up routing to places such as email addresses, Slack, PagerDuty, OpsGenie, and so on.

Step-by-step instructions can be found in this blog post.

To route the default synthetic monitoring alerts to a notification receiver, you can set up the conditions to match the namespace and alert_sensitivity labels.

    route:
      receiver: <your notification receiver>
      match:
        namespace: synthetic_monitoring
        alert_sensitivity: high

If you don’t already have an SMTP server available for sending email alerts, refer to Grafana Alerting for information about how to use one supplied by Grafana Labs.
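The same matching conditions can be extended to send each sensitivity level to a different receiver. The following Alertmanager configuration is a minimal sketch. The receiver names, email address, and Slack channel are hypothetical, and the placeholders in angle brackets must be replaced with your own values.

```yaml
# Sketch only: receiver names, address, and channel are hypothetical.
route:
  # Fallback receiver for alerts that match no child route,
  # for example low-sensitivity alerts in this sketch.
  receiver: default-email
  routes:
    - receiver: oncall-pagerduty
      match:
        namespace: synthetic_monitoring
        alert_sensitivity: high
    - receiver: team-slack
      match:
        namespace: synthetic_monitoring
        alert_sensitivity: medium

receivers:
  - name: default-email
    email_configs:
      - to: team@example.com
  - name: oncall-pagerduty
    pagerduty_configs:
      - routing_key: <your PagerDuty integration key>
  - name: team-slack
    slack_configs:
      - api_url: <your Slack webhook URL>
        channel: '#synthetics-alerts'
```

Child routes are evaluated in order and the first match wins, so anything that matches neither child route is delivered to the top-level receiver.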

Where to access Synthetic Monitoring alerts from Grafana Cloud alerting

Alert rules can be found in the synthetic_monitoring namespace of Grafana Cloud alerting.

Default rules will be created inside the default rule group.

Recommendation to avoid alert-flapping

When enabling alerting for a check, we recommend running that check from multiple locations, preferably three or more. That way, if there’s a problem with a single probe or the network connectivity from that single location, you won’t be needlessly alerted, as the other locations running the same check will continue to report their results alongside the problematic location.

Grafana Alerting

Refer to the Grafana Alerting docs for details.

Next steps

You can check out the Top 5 user-requested synthetic monitoring alerts in Grafana Cloud and Best practices for alerting on Synthetic Monitoring metrics in Grafana Cloud blog posts to learn more.
