Fleet Management architecture

Grafana Fleet Management is a Grafana Cloud application that lets you configure, monitor, and manage your collector fleet remotely and at scale. This topic describes the architecture of the Fleet Management service.

Core concepts

Fleet Management is built on the concepts of collectors and configuration pipelines.

Collectors

Collectors are individual Alloy instances registered in the Fleet Management service using a remotecfg block in their local configuration files.

Upon startup, the registered collector appears in the Fleet Management application, where you can monitor its health and assign configuration remotely.

Health monitoring is enabled by autogenerated self-monitoring pipelines that collect internal metrics and logs, send them to Grafana Cloud, and correlate the telemetry with the correct collector using the unique id set in the remotecfg block.

To learn how to fill in the remotecfg block for the Fleet Management service, refer to the setup documentation.

The following is an example of a remotecfg block.

    remotecfg {
        url = <SERVICE_URL>

        basic_auth {
            username      = <USERNAME>
            password_file = <PASSWORD_FILE>
        }

        id             = constants.hostname
        attributes     = {"cluster" = "dev", "namespace" = "otlp-dev"}
        poll_frequency = "5m"
    }

Configuration pipelines

Configuration pipelines are standalone fragments of configuration that are created and assigned remotely to collectors in Fleet Management. They are composed of a unique name, the components for the collector to load and run, and a list of attributes that match collectors with the pipeline.

Each configuration pipeline typically collects telemetry for different signals or from different applications and infrastructure components. You can combine them based on your observability needs to build dynamic and versatile remote configurations. Assign multiple configuration pipelines to a single collector or share one pipeline with multiple collectors in your fleet. For more details about creating pipelines, refer to Configuration pipelines.

Note

It is possible to assign duplicate pipelines to the same collector. If that happens, Alloy runs both pipelines without conflict because each pipeline has a unique name.

The remote configuration assigned to a registered collector, which is made up of one or more configuration pipelines, is determined at runtime.

A registered collector polls the Collector API at the interval set by poll_frequency in its remotecfg block, looking for configuration pipelines with matching attributes.
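The attribute matching described above can be pictured with a small Python sketch. It is a conceptual model only, not the Fleet Management implementation: the `Pipeline` class, the `matching_pipelines` function, the pipeline names, and the equality-only matching rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Pipeline:
    name: str
    # Hypothetical matchers: every key/value pair must equal the collector's attribute.
    matchers: Dict[str, str] = field(default_factory=dict)


def matching_pipelines(collector_attrs: Dict[str, str], pipelines: List[Pipeline]) -> List[str]:
    """Return the names of pipelines whose matchers are all satisfied by the collector."""
    return [
        p.name
        for p in pipelines
        if all(collector_attrs.get(key) == value for key, value in p.matchers.items())
    ]


# Attributes taken from the example remotecfg block.
collector = {"cluster": "dev", "namespace": "otlp-dev"}

# Pipeline names and matchers are invented for this example.
pipelines = [
    Pipeline("dev_metrics", {"cluster": "dev"}),
    Pipeline("prod_logs", {"cluster": "prod"}),
    Pipeline("otlp_traces", {"cluster": "dev", "namespace": "otlp-dev"}),
]

print(matching_pipelines(collector, pipelines))  # ['dev_metrics', 'otlp_traces']
```

Because matching is evaluated each time the collector polls, changing an attribute on either side changes the assigned remote configuration without touching the collector's local file.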

You can view the remote configuration assigned to a collector in the details view for that collector.

On the Inventory tab, click the collector row to open the details drawer, and then switch to the Configuration tab.

Local and remote configurations: Concurrent and isolated

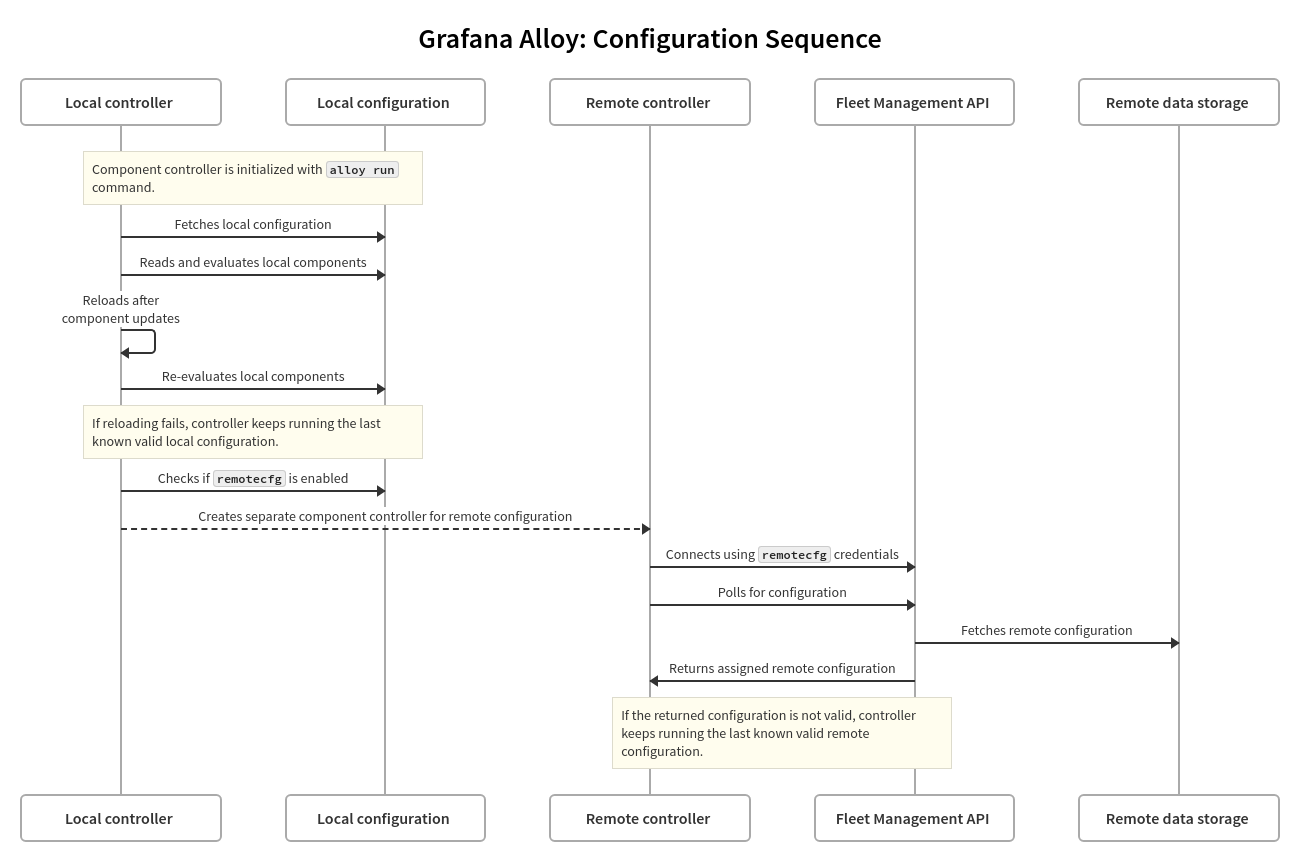

Alloy uses a hybrid configuration model where local configuration files can coexist with dynamic remote configurations. Collectors run the local configuration and remote configuration concurrently.

The component controller is the core part of Alloy that manages configuration components at runtime. The controller is responsible for reading and validating configuration files, managing the lifecycle and reporting the health of components, as well as evaluating the arguments used to configure them.

Local and remote configurations each have their own component controller, which means components loaded by one configuration are isolated from the other. This isolation is designed to protect runtime safety, ensuring that Alloy continues working despite temporary configuration errors or network hiccups. It also expands configuration options for different use cases. Users can mix and match local and remote configuration to fit their specific needs for cost management, operational independence across teams, or regulatory compliance. Refer to Configuration deployment strategies for more information.

Because local and remote configurations are isolated, the following statements are true:

- Local and remote configurations cannot be in conflict. If a pipeline is duplicated in the local and remote configuration, it runs twice, separately and without conflict. The resulting data is written twice to Grafana Cloud. Depending on the type of data, you might see an error log about duplicate data being written.

- Local and remote configurations cannot share components or values between them. However, remote configuration pipelines can share components with other remote pipelines by injecting pipeline exports.

- Remote configuration errors do not impact local configuration. Local configuration errors can prevent Alloy from starting, so local misconfigurations could impact remote configurations.

- A configuration error in pipeline “A” does not affect how pipeline “B” behaves. However, if pipelines are merged in a remote configuration file and one pipeline has an error, that configuration file is discarded and Alloy looks for and loads the last known valid configuration. As a result, an error in one pipeline might cause Alloy to fall back to an older version of all pipelines that make up the remote configuration.

  For example, a remote configuration contains two pipelines, “A” and “B”. Pipelines “A” and “B” are both updated before the collector next polls for remote configuration, creating v.2 of the remote configuration. The updated pipeline “A” has an error. Alloy cannot run remote configuration v.2 because of this error, so it looks for the last known valid configuration, which is remote configuration v.1, and loads it. Remote configuration v.1 does not contain the updates to pipelines “A” or “B”, even though only “A” contained the error. You can use the pipeline history feature to identify which change caused the error in pipeline “A”.

  If any pipeline in a remote configuration file has an error and there is no previous valid configuration to fall back to, Alloy discards the invalid remote configuration and relies only on its local configuration.
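The fallback behavior in the v.1 and v.2 example can be modeled with a short Python sketch. This is a toy model under stated assumptions, not Alloy's implementation: `RemoteConfigState` and `is_valid` are hypothetical names, and the brace-counting check merely stands in for real configuration validation.

```python
from typing import Dict, Optional


def is_valid(pipeline_body: str) -> bool:
    # Hypothetical stand-in for real validation: only checks that braces balance.
    return pipeline_body.count("{") == pipeline_body.count("}")


class RemoteConfigState:
    """Toy model of last-known-valid fallback for a merged remote configuration."""

    def __init__(self) -> None:
        # None means no remote configuration has ever loaded (local only).
        self.active: Optional[Dict[str, str]] = None

    def load(self, candidate: Dict[str, str]) -> Optional[Dict[str, str]]:
        # The candidate is accepted or rejected as a whole, never per pipeline.
        if all(is_valid(body) for body in candidate.values()):
            self.active = candidate
        return self.active


v1 = {"A": "a_v1 { }", "B": "b_v1 { }"}
v2 = {"A": "a_v2 {", "B": "b_v2 { }"}  # updated "A" is missing a closing brace

state = RemoteConfigState()
state.load(v1)
running = state.load(v2)

print(running["B"])                  # b_v1 { }  (the valid update to "B" is held back too)
print(RemoteConfigState().load(v2))  # None      (no earlier valid version to fall back to)
```

The all-or-nothing check in `load` is the point of the sketch: one invalid pipeline keeps every pipeline in that remote configuration on its previous version.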

The following sequence diagram shows how Alloy uses separate component controllers to manage local and remote configurations:

Local configuration

One or more local configuration files are passed to the Alloy run command.

If Alloy cannot load the local configuration, it fails to start.

Local configuration sets up core Alloy functionality, such as logging and tracing behavior and the HTTP server parameters. You can also form telemetry pipelines by configuring various components.

Local configuration mode is static.

To apply local file changes, you must reload the configuration file by sending a SIGHUP signal or calling the /-/reload endpoint.
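The two reload options can be scripted. The following Python sketch uses only the standard library. It assumes that Alloy's HTTP server is reachable at `http://127.0.0.1:12345` and that the endpoint accepts a plain GET request; adjust both if your deployment differs. The function names are illustrative.

```python
import os
import signal
import urllib.request

# Assumption: Alloy's HTTP server listens on this address.
DEFAULT_ALLOY_URL = "http://127.0.0.1:12345"


def build_reload_request(base_url: str = DEFAULT_ALLOY_URL) -> urllib.request.Request:
    """Build the HTTP request that asks Alloy to reload its local configuration."""
    return urllib.request.Request(base_url.rstrip("/") + "/-/reload")


def reload_via_http(base_url: str = DEFAULT_ALLOY_URL, timeout: float = 5.0) -> int:
    """Send the reload request and return the HTTP status code."""
    with urllib.request.urlopen(build_reload_request(base_url), timeout=timeout) as resp:
        return resp.status


def reload_via_signal(pid: int) -> None:
    """Send SIGHUP to a running Alloy process (Unix-like systems only)."""
    os.kill(pid, signal.SIGHUP)
```

Neither function is called here because both need a running Alloy instance. `urlopen` raises `urllib.error.HTTPError` on an error status, which is one way a caller could detect a rejected reload.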

Remote configuration

Alloy can opt in to remote configuration, but by default, the feature is disabled.

You can enable it by adding the remotecfg block to the local configuration of each Alloy instance.

If Alloy has remote configuration enabled, it periodically polls a remote server (in the case of Grafana Cloud, the Fleet Management API) for configuration updates and applies them automatically, without requiring Alloy to reload the configuration.

When you create a configuration pipeline in the Fleet Management application, you must give the pipeline a unique name to prevent conflict between pipelines.

The Fleet Management service wraps each pipeline in a declare block, and the declare blocks are marshalled and merged into a single file on the server side.

When Alloy polls, it receives a single complete remote configuration file to load.

The loaded remote configuration is cached in a remotecfg/ directory in Alloy’s storage path.

Alloy handles any errors in remote configuration gracefully.

If the remote configuration cannot be loaded, Alloy finds and falls back to the last known valid configuration to continue operations.

If there never was a valid remote configuration, none is loaded and Alloy continues to run using only its local configuration.
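The server-side step of wrapping each pipeline in a declare block and merging the results into one file can be sketched in Python. The exact output of the Fleet Management service is not specified here, so treat the formatting, the function names, and the sample pipeline contents as assumptions. How the declared components are then instantiated is also left out.

```python
from typing import Dict


def wrap_pipeline(name: str, contents: str) -> str:
    """Wrap one pipeline's components in a declare block named after the pipeline."""
    body = "\n".join(
        "  " + line if line else "" for line in contents.strip().splitlines()
    )
    return f'declare "{name}" {{\n{body}\n}}'


def merge_pipelines(pipelines: Dict[str, str]) -> str:
    """Merge all wrapped pipelines into a single configuration file body."""
    # Dictionary keys are unique, mirroring the requirement for unique pipeline names.
    return "\n\n".join(
        wrap_pipeline(name, contents) for name, contents in sorted(pipelines.items())
    ) + "\n"


# Hypothetical pipelines; the component bodies are placeholders.
merged = merge_pipelines({
    "self_metrics": 'prometheus.exporter.self "alloy" { }',
    "app_logs": 'loki.source.file "app" {\n  targets    = []\n  forward_to = []\n}',
})
print(merged)
```

Keying each declare block by pipeline name shows why names must be unique: two pipelines with the same name would produce two declarations that collide in the merged file.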

The remotecfg block and protocol

When the remotecfg block is defined in the local configuration, Alloy opts in to remote configuration.

The block defines the URL of the Fleet Management instance, authentication credentials, the polling interval, as well as a set of local attributes that characterize the Alloy instance, such as cluster, namespace, team, or owner.

The remotecfg block can also configure additional authorization parameters, TLS settings, proxying behavior, and more.

Refer to the remotecfg documentation for the full list of configuration options.

The Grafana Alloy Remote Config API, which is used for client-server communication to manage and fetch remote configuration, is available under the Apache 2.0 license.

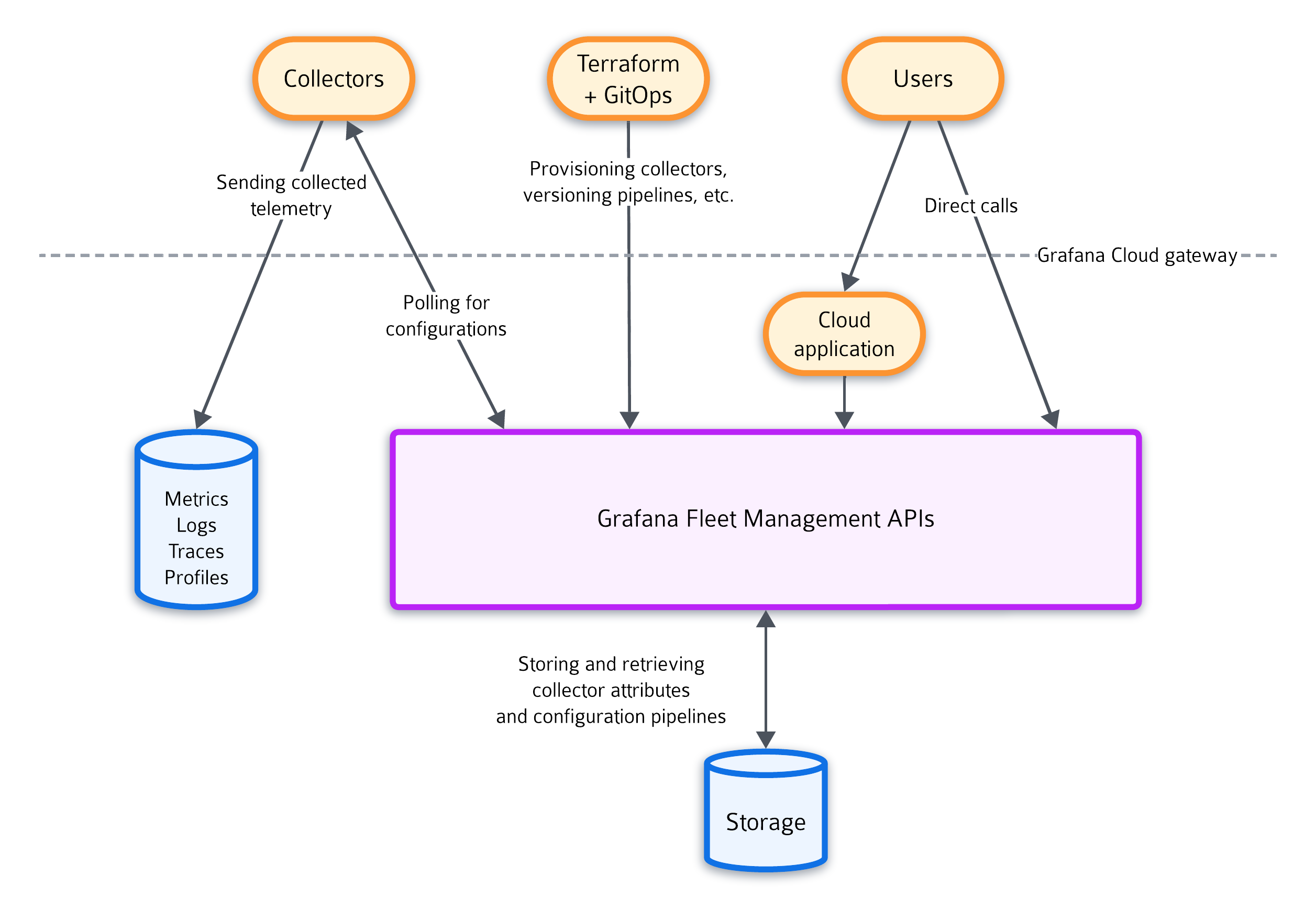

Service components

Fleet Management is deployed as a standard cloud-native service. The core APIs are deployed in a highly available setup and use hosted database instances for durable storage as well as an in-memory cluster for caching.

Collector and Pipeline APIs

Fleet Management exposes the Collector HTTP API and Pipeline HTTP API so you can perform CRUD operations on collectors and configuration pipelines. Calls to the APIs can come from several different sources:

- Collectors polling for configuration

- Grafana Terraform provider

- Other GitOps workflows

- Users accessing the Fleet Management application in Grafana Cloud

- Users making direct calls

Grafana Cloud gateway

The Grafana Cloud gateway handles authentication and routing within your stack to prevent unauthorized access. All Fleet Management API traffic passes through this gateway.

Storage

All data used to manage your fleet, including collector attributes and configuration pipelines, is durably stored in the Fleet Management database. Telemetry collected by your fleet is stored in your designated backend, such as Grafana Cloud.