Troubleshoot Kubernetes Monitoring

This section includes common errors encountered while installing and configuring Kubernetes Monitoring components, and tools you can use to troubleshoot.

User loses access

If you have granted a user the None basic role plus plugins.app:access, that user has no access to Kubernetes. Kubernetes Monitoring has two user roles to manage access:

- plugins:grafana-k8s-app:admin

- plugins:grafana-k8s-app:reader

If a user is having trouble with access, make sure you have granted them one of these roles. To assign these roles, refer to Assign RBAC roles.

Troubleshooting tools

You can use the following tools to help you troubleshoot issues with installation and configuration.

Alloy tool

Grafana Alloy has a web user interface that shows every configuration component the Alloy instance is using and the component status.

By default, the web UI runs on each Alloy pod on port 12345.

Since that UI is typically not exposed external to the Cluster, you can access it with port forwarding:

kubectl port-forward svc/grafana-k8s-monitoring-alloy 12345:12345

Then open a browser to http://localhost:12345 to view the GUI.

Access the Alloy web tool when:

- Grafana Alloy isn’t collecting or exporting metrics, logs, or traces properly. For example, you’re missing metrics in Grafana Cloud or Prometheus and need to confirm whether Alloy is scraping the right targets.

- A component is failing or in an error state. The UI shows each configuration component and its status (running, failed, initializing, and so on).

- You’re validating configuration changes. After updating your alloy.yaml, you can confirm that the configuration loaded correctly and that all pipelines and receivers are active.

- You suspect a dependency or connectivity issue. For example, when Alloy can’t reach Grafana Cloud endpoints, a local data source, or another collector, you can inspect component logs or connection statuses.

- You’re debugging startup or runtime issues. The UI is useful when Alloy pods are up but not behaving as expected (for example, a broken metrics pipeline or missing exporters).

Debug Metrics tool

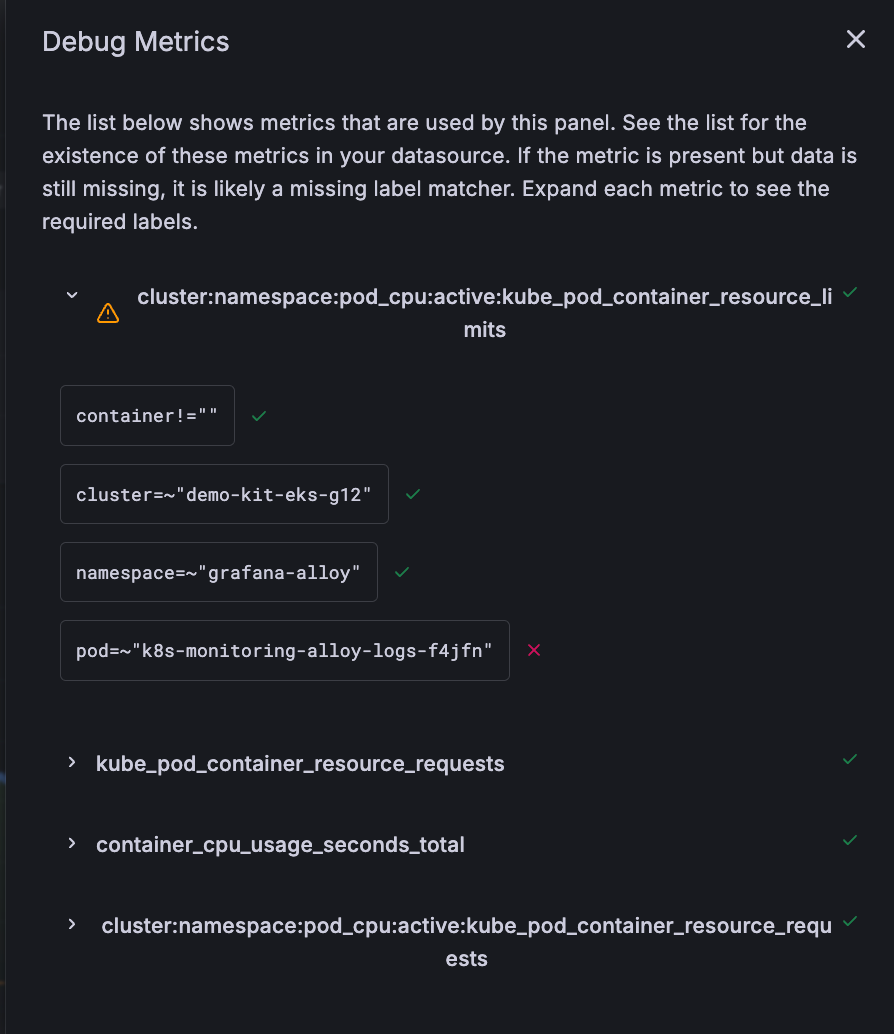

For any panel, click the menu icon and select Debug metrics for this panel.

Debug Metrics lists all metrics used for the panel along with any errors found.

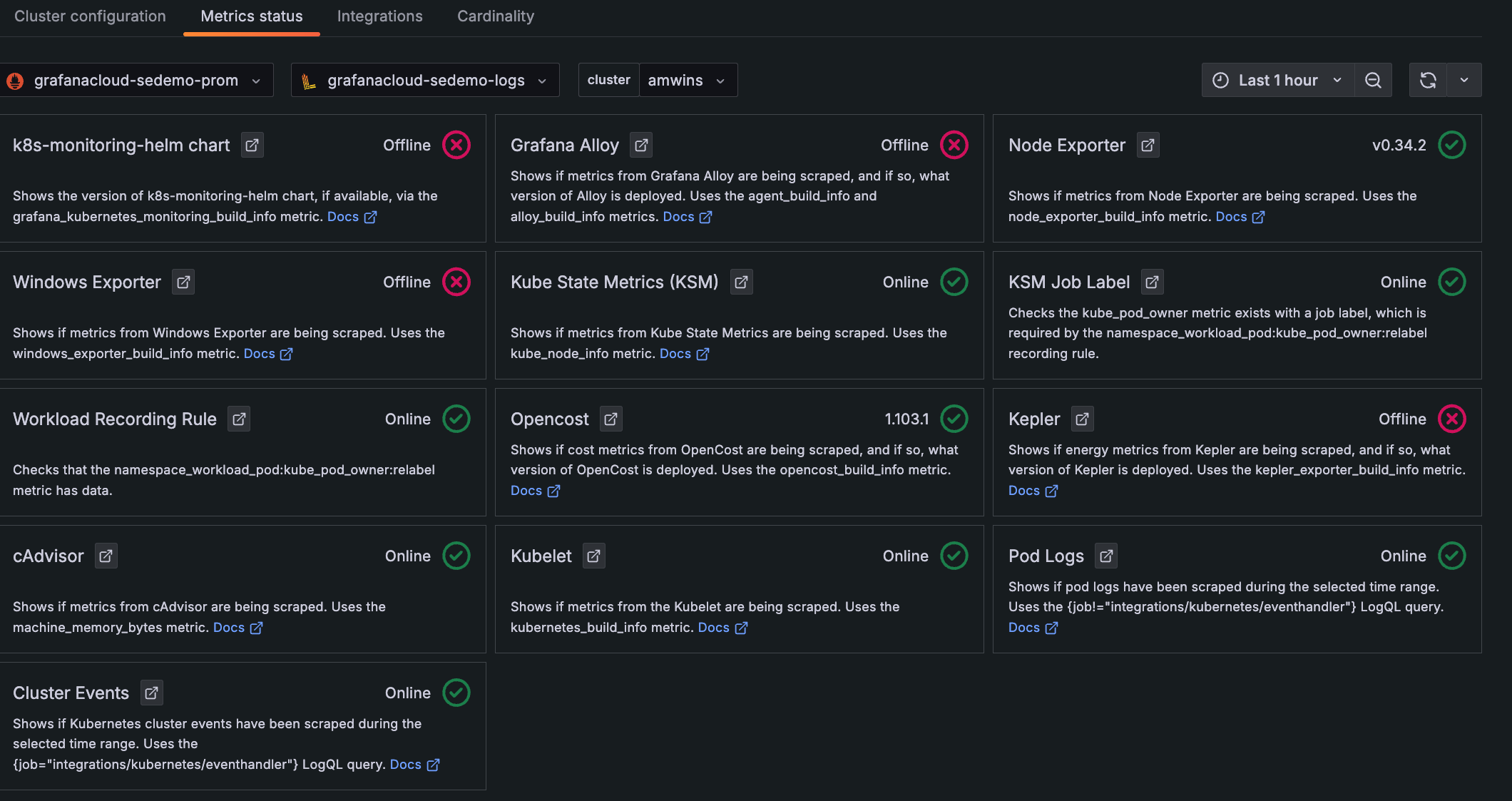

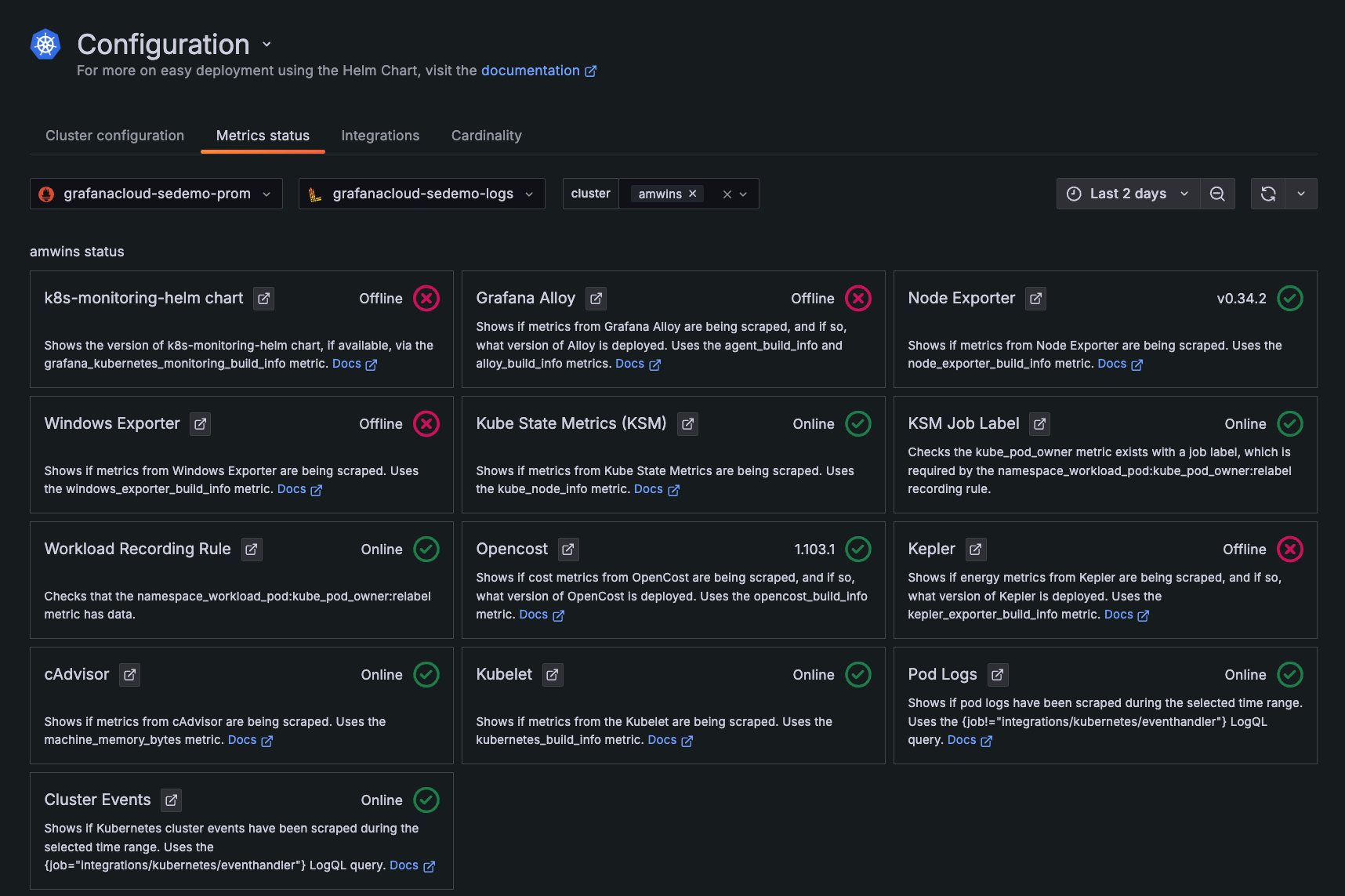

Metrics status tool

To view the status of metrics being collected, in Kubernetes Monitoring:

- Click Configuration on the menu.

- Click the Metrics status tab.

- Filter for the Cluster or Clusters you want to see the status of.

Status icons

Each panel of the Metrics status shows an icon that indicates the status of the incoming data, based on the selected data source, Cluster, and time range:

- Check mark in a circle (green): Data for this source is being collected. The version of the source or online status also displays (if available).

- Caution with exclamation mark (yellow): Duplicate data is being collected for the metric source.

- X in a circle (red): There is no data available for this item within the time range specified, and it appears to be offline.

Check initial configuration

During initial configuration, if any panel shows a red X in a circle, the cause can be any of the following:

- The feature was not selected during Cluster configuration.

- The system is not running correctly.

- Alloy was not able to gather data correctly.

- No data was gathered during the time range specified.

View the query with Explore

If something in the metrics status looks incorrect, click the icon next to the panel title. This opens the query in Explore where you can examine the query for any issues, such as an incorrect label.

Look at a historical time range

Use the time range selector to understand what was occurring in the past. In the following example, Cluster events were being collected but are not currently.

View documentation for each status

For more information about each status, click the Docs link in each panel.

Troubleshooting deployment with Helm chart

Common issues that can occur when a Helm chart is not configured correctly:

If you have configured Kubernetes Monitoring with the Grafana Kubernetes Monitoring Helm chart, here are some general troubleshooting techniques:

- Within Kubernetes Monitoring, view the metrics status.

- Check for any changes with the helm template ... command. This produces an output.yaml file to check the result.

- Check the configuration with the helm test --logs command. This provides a configuration validation, including all phases of metrics gathering through display.

- Check the extraConfig section of the Helm chart to ensure this section is not used for modifications. This section is only for additional configuration not already in the chart, and not for modifications to the chart.

Duplicate metrics

Certain metric data sources (such as Node Exporter or kube-state-metrics) may already exist on the Cluster. When you deploy with the Kubernetes Monitoring Helm chart, these data sources are installed even if they are already present on your Cluster.

- Visit the Metrics status tab to view any duplicates.

- Remove the duplicates or adjust the Helm chart values to use the existing ones and skip deploying another instance.

Duplicate alerts

You may temporarily see duplicate alerts for the same condition in the Alerts page or in alert counts throughout Kubernetes Monitoring.

Cause

During the migration from Prometheus Alertmanager to Grafana Managed Alerts, both alerting systems may fire alerts simultaneously for the same conditions, resulting in duplicates. Kubernetes Monitoring queries both alert sources (ALERTS and GRAFANA_ALERTS metrics) to ensure all alerts are detected during this transition period.

Solution

This is expected behavior during the migration period and requires no action. Duplicate alerts will automatically resolve once your Grafana Cloud stack completes the migration to Grafana Managed Alerts.

If duplicate alerts persist after the migration is complete, contact Grafana Support.

Specific Cluster platform providers

Certain Kubernetes Cluster platforms require some specific configurations for the Kubernetes Monitoring Helm chart. If your Cluster is running on one of these platforms, refer to the example for the changes required to run the Helm chart:

Missing data

Here are some tips for missing data.

CPU usage negative and missing data

If you have not installed Kubernetes Monitoring with the Helm chart and instead used the OTel collector deployed as a DaemonSet, you could have issues with CPU usage data. The OTel collector should be deployed as a Deployment. By using a DaemonSet, multiple samples may be written out of order to the same time series. This can cause Kubernetes Monitoring to show:

- Negative rates for CPU usage

- Gaps in usage showing on Optimization panels

- Unevenly spaced data points indicative of multiple sample ingestion, which may also be interpreted as counter resets
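You can look for these symptoms directly in the raw samples of a CPU counter series. The following is a minimal sketch, not part of Kubernetes Monitoring: it takes (timestamp, value) pairs for one series and reports where the value decreases (which a rate calculation may treat as a counter reset) and where the spacing between samples is uneven. The function names, the sample data, and the 60-second expected interval are assumptions for illustration.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (timestamp in seconds, counter value)


def find_counter_drops(samples: List[Sample]) -> List[Sample]:
    """Return every sample whose value is lower than the sample before it."""
    return [cur for prev, cur in zip(samples, samples[1:]) if cur[1] < prev[1]]


def find_uneven_gaps(samples: List[Sample], expected: float,
                     tolerance: float = 0.1) -> List[float]:
    """Return gaps (seconds) that deviate from the expected interval
    by more than the given fraction of that interval."""
    gaps = [cur[0] - prev[0] for prev, cur in zip(samples, samples[1:])]
    return [gap for gap in gaps if abs(gap - expected) > expected * tolerance]


# Hypothetical samples, as if two collectors wrote to the same series.
samples = [(0, 100.0), (60, 160.0), (75, 150.0), (120, 220.0), (135, 210.0)]
drops = find_counter_drops(samples)
uneven = find_uneven_gaps(samples, expected=60)
```

If either list is non-empty for a series that should be written by a single collector, check how many collector instances are scraping the same target.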

CPU usage panels missing data

If there is no CPU usage data, the data scraping intervals of the collector and the data source may not match. The default scraping interval for Grafana Alloy is 60 seconds. If the scraping interval for your data source is not 60 seconds, this mismatch may interfere with the calculation for CPU rate of usage.

To resolve, synchronize the scraping interval for the collector and data source.

- If you configured the data source (meaning it wasn’t automatically provisioned by Grafana Cloud), change the scrape interval for the data source to match the collector.

- If the data source was provisioned for you by Grafana Cloud, contact support to request the scrape interval for the data source be changed to match the collector.
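As a rough illustration of why the mismatch matters: a rate calculation needs at least two samples inside its lookback window. The sketch below assumes the window is four times the scrape interval configured on the data source, which is similar to how Grafana derives its rate interval. The exact window used by each panel is an assumption here, and the function names are hypothetical.

```python
def guaranteed_samples(window_seconds: int, collector_interval_seconds: int) -> int:
    """Minimum number of samples that always fall inside the lookback window,
    regardless of how the scrapes line up with the window edges."""
    return window_seconds // collector_interval_seconds


def rate_is_computable(data_source_interval: int, collector_interval: int) -> bool:
    """Return True when the window is guaranteed to hold at least two samples."""
    # Assumption: the query window is 4x the data source's scrape interval.
    window = 4 * data_source_interval
    return guaranteed_samples(window, collector_interval) >= 2


# Data source set to 15s while the collector scrapes every 60s.
mismatched = rate_is_computable(data_source_interval=15, collector_interval=60)
# Both set to 60s.
matched = rate_is_computable(data_source_interval=60, collector_interval=60)
```

Under these assumptions, the mismatched case leaves only one guaranteed sample in the window, so the rate cannot be calculated reliably.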

Data missing in a panel

If a panel in Kubernetes Monitoring seems to be missing data or shows a “No data” message, you can use either the Debug Metrics feature or open the query for the panel in Explore to determine which query is failing.

This can occur when new features are released. For example, if you see no data in the network bandwidth and saturation panels, it is likely you need to upgrade to the newest version of the Helm chart.

Data missing for a provider

If your cloud service provider name is not showing up in the Cluster list page, it’s likely due to a provider_id missing from some types of Clusters. This occurs in the case of an internal provider or bare metal Clusters. To ensure your provider shows up, create a relabeling rule for the provider.

    metrics:
      kube-state-metrics:
        extraMetricRelabelingRules: |-
          rule {
            source_labels = ["__name__", "provider_id", "node"]
            separator = "@"
            regex = "kube_node_info@@(.*)"
            replacement = "<cluster provider id>://${1}"
            action = "replace"
            target_label = "provider_id"
          }

Replace <cluster provider id> with the provider ID you would like to appear in the Kubernetes Monitoring Cluster list page.
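To see what this rule does, it can help to walk through it on sample labels. The following Python sketch simulates the rule under the usual relabeling semantics: the source label values are joined with the separator, a missing label counts as an empty string, and the regex must match the whole joined value. The function name and the sample label values are hypothetical, and this is a simulation rather than Alloy's implementation.

```python
import re
from typing import Dict


def apply_provider_rule(labels: Dict[str, str], provider: str) -> Dict[str, str]:
    """Simulate the relabeling rule on the labels of a single metric."""
    source_labels = ["__name__", "provider_id", "node"]
    # Missing labels are treated as empty strings, then joined with "@".
    joined = "@".join(labels.get(name, "") for name in source_labels)
    # The regex is anchored: "@@" only matches when provider_id is empty.
    match = re.fullmatch(r"kube_node_info@@(.*)", joined)
    if match is None:
        return labels  # The rule does not apply; labels stay unchanged.
    updated = dict(labels)
    updated["provider_id"] = f"{provider}://{match.group(1)}"
    return updated


# A bare metal Node without provider_id gets one derived from the Node name.
bare_metal = apply_provider_rule(
    {"__name__": "kube_node_info", "node": "node-1"}, "baremetal"
)
# A Node that already has a provider_id is left alone.
cloud = apply_provider_rule(
    {"__name__": "kube_node_info", "provider_id": "aws:///zone/i-123", "node": "node-2"},
    "baremetal",
)
```

The rule only rewrites kube_node_info series with an empty provider_id, so Clusters that already report a provider are not affected.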

Efficiency usage data missing

If CPU and memory usage within any table shows no data, it could be due to missing Node Exporter metrics. Navigate to the Metrics status tab to determine what is not being reported.

Job data missing

If you are missing jobs data, make sure you are collecting the following metrics:

- kube_cronjob_info

- kube_cronjob_next_schedule_time

- kube_cronjob_spec_suspend

- kube_cronjob_status_last_schedule_time

- kube_cronjob_status_last_successful_time

- kube_job_info

- kube_job_owner

- kube_job_spec_completions

- kube_job_status_completion_time

- kube_job_status_failed

- kube_job_status_start_time

- kube_job_status_succeeded

- kube_namespace_status_phase

- kube_node_info

- kube_pod_completion_time

- kube_pod_container_status_last_terminated_timestamp

- kube_pod_owner

- kube_pod_restart_policy
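To compare this list against what you are actually collecting, a simple set difference is enough. The sketch below assumes you have already obtained the names of the metrics you collect (for example, by listing metric names in Explore); how you gather them is left open, and the function name is hypothetical.

```python
from typing import Iterable, List

# Metrics required for jobs data, as listed in this section.
REQUIRED_JOB_METRICS = {
    "kube_cronjob_info",
    "kube_cronjob_next_schedule_time",
    "kube_cronjob_spec_suspend",
    "kube_cronjob_status_last_schedule_time",
    "kube_cronjob_status_last_successful_time",
    "kube_job_info",
    "kube_job_owner",
    "kube_job_spec_completions",
    "kube_job_status_completion_time",
    "kube_job_status_failed",
    "kube_job_status_start_time",
    "kube_job_status_succeeded",
    "kube_namespace_status_phase",
    "kube_node_info",
    "kube_pod_completion_time",
    "kube_pod_container_status_last_terminated_timestamp",
    "kube_pod_owner",
    "kube_pod_restart_policy",
}


def missing_job_metrics(collected: Iterable[str]) -> List[str]:
    """Return the required job metrics that are absent from the collected names."""
    return sorted(REQUIRED_JOB_METRICS - set(collected))
```

An empty result means all required metrics are present, so the cause of missing jobs data is likely elsewhere.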

Metrics missing

If metrics are missing even though the Metrics status tab is showing that the configuration is set up as you intended, check for an incorrectly configured label for the Node Exporter instance.

Make sure the Node Exporter instance label is set to the Node name. The labels for kube-state-metrics node and Node Exporter instance must contain the same values.
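One way to check this is to compare the two sets of label values side by side. The following sketch assumes you have collected the node label values from kube-state-metrics and the instance label values from Node Exporter yourself; the function name and the sample values are hypothetical.

```python
from typing import Dict, Iterable, List


def unmatched_node_labels(ksm_nodes: Iterable[str],
                          exporter_instances: Iterable[str]) -> Dict[str, List[str]]:
    """Return the label values that appear on one side only."""
    ksm = set(ksm_nodes)
    exporter = set(exporter_instances)
    return {
        "only_in_kube_state_metrics": sorted(ksm - exporter),
        "only_in_node_exporter": sorted(exporter - ksm),
    }


# Hypothetical values: one Node Exporter reports an address instead of the Node name.
result = unmatched_node_labels(
    ksm_nodes=["node-1", "node-2"],
    exporter_instances=["node-1", "10.0.0.5:9100"],
)
```

Any value that appears on only one side points to a Node whose Node Exporter instance label does not match the Node name.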

Methodology for missing metrics

It’s helpful to keep in mind the different phases of metrics gathering when debugging.

Discovery

Find the metric source. In this phase, find out whether the tool to gather metrics is working. For example, is Node Exporter running? Can Alloy find Node Exporter? The configuration may be incorrect, for example because Alloy is looking in a particular namespace or for a specific label.

Scraping

Ask whether the metrics were gathered correctly. As an example, most metric sources use HTTP, but the metric source you are trying to find uses HTTPS. Identify whether the configuration is set for scraping HTTPS.

Processing

Ask whether metrics were correctly processed. With Kubernetes Monitoring, metrics are filtered to a small subset of the useful metrics.

Delivery

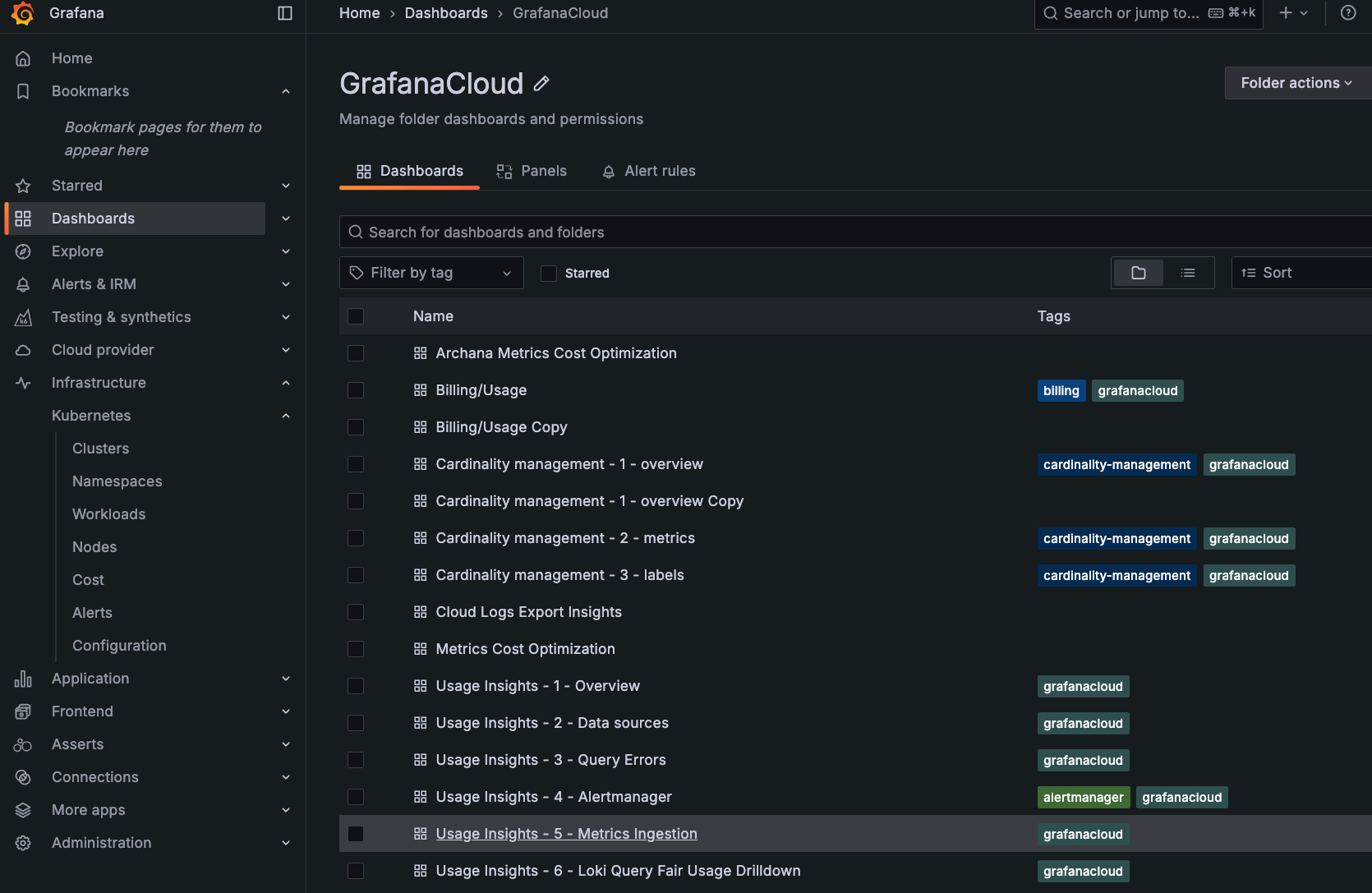

In this phase, metrics are sent to Grafana Cloud. If there is an issue, there are likely no metrics being delivered. This can occur if your account limit for metrics is reached. Check the Usage Insights - 5 - Metrics Ingestion dashboard.

Displaying

In this phase, a metric is not showing up in the Kubernetes Monitoring GUI. If you’ve determined the metrics are being delivered but some are not displaying, there may be a missing or incorrect label for the metric. Check the Metrics status tab.

Pod logs missing

If you are not seeing Pod logs and your platform is AWS EKS Fargate, these logs cannot be gathered using a hostpath volume mount. Instead, you can use API-based log gathering. For greater detail, refer to EKS Fargate.

Network metrics missing

If you have deployed on the AWS EKS Fargate platform, AWS prevents a level of access that Node Exporter requires to gather metrics for the network panels. EKS Fargate provides on-demand compute for Kubernetes objects instead of the traditional means where these objects run on Nodes.

Port conflicts and Node Exporter

Node Exporter opens host port 9100 on the Kubernetes Node. If a Node Exporter is already in use, the two exporters conflict because both try to use the same default port. To avoid this conflict, you have two options.

You can change the Node Exporter port number, so the Node Exporter deployed by the Kubernetes Monitoring Helm chart does not conflict with the existing Node Exporter. To do this, customize the Helm chart by adding the following to your values.yaml file:

    clusterMetrics:
      node-exporter:
        enabled: true
        service:
          port: 9101 # Choose an unused port

Alternatively, you can disable the Node Exporter deployed by the Helm chart, and target the existing Node Exporter. To do this, customize the Helm chart by adding the following to your values.yaml file:

    clusterMetrics:
      node-exporter:
        enabled: true
        deploy: false
        namespace: '<namespace of the existing Node Exporter>'
        labelSelectors: # Customize to match the existing Node Exporter Pod labels
          app.kubernetes.io/name: node-exporter

Workload data missing

If you are seeing Pod resource usage but not workloads usage data, the recording rules and alert rules are likely not installed.

- Navigate to the Configuration page.

- Click the Metrics status tab.

- In the Workload Recording Rule panel, click Install to install alert rules and recording rules.

Error messages

Here are tips for errors you may receive related to configuration.

Authentication error: invalid scope requested

To deliver telemetry data to Grafana Cloud, you use an Access Policy Token with the appropriate scopes. Scopes define an action that can be done to a specific data type. For example, metrics:write permits writing metrics.

When sending data to Grafana Cloud, the Helm chart uses the <data>:write scopes for delivering data. If your token does not have the correct scope, you will see errors in the Grafana Alloy logs. For example, when trying to deliver profiles to Pyroscope without the profiles:write scope:

    msg="final error sending to profiles to endpoint" component=pyroscope.write.profiles_service endpoint=https://tempo-prod-1-prod-eu-west-2.grafana.net:443 err="unauthenticated: authentication error: invalid scope requested"

The following table shows the scopes required for various actions done by this chart:

Couldn’t load repositories file

If you receive the following message when running the Helm chart installation generated by Grafana Cloud, run helm init:

    Error: Couldn't load repositories file (/root/.helm/repository/repositories.yaml).

This is a common error for new installations of Kubernetes and K3s.

Invalid argument 300s

If you receive the following message when running the chart installation generated by Grafana Cloud, you’re using an older version of Helm:

    Error: invalid argument 300s for --timeout flag: strconv.ParseInt: parsing 300s: invalid syntax

Update to the latest version.
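The error text shows the value being parsed as an integer (strconv.ParseInt), which fails on the s suffix. The sketch below mirrors that difference in Python to show why 300s is rejected by an integer parser but accepted by a duration parser. Both helpers are hypothetical and only illustrate the idea; they are not Helm's implementation, and the duration helper handles only the s, m, and h suffixes.

```python
import re

# Seconds per supported unit suffix (illustrative subset).
_UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}


def parse_integer_seconds(value: str) -> int:
    """Parse a timeout given as a plain integer number of seconds."""
    return int(value)  # Raises ValueError for input such as "300s".


def parse_duration_seconds(value: str) -> int:
    """Parse a timeout given as a number followed by a unit suffix."""
    match = re.fullmatch(r"(\d+)([smh])", value)
    if match is None:
        raise ValueError(f"invalid duration: {value!r}")
    return int(match.group(1)) * _UNIT_SECONDS[match.group(2)]
```

A version of Helm that expects a duration accepts 300s, which is why updating Helm resolves the error.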

Kepler Pods crashing on AWS Graviton Nodes

Kepler cannot run on AWS Graviton Nodes, and Kepler Pods scheduled on these Nodes enter CrashLoopBackOff. To prevent this, you can add a Node selector to the Kepler deployment:

    kepler:
      nodeSelector:
        kubernetes.io/arch: amd64

Kubernetes Cluster unreachable

For K3s deployments, you may receive the following message when running the Helm chart installation generated by Grafana Cloud:

    Error: Kubernetes cluster unreachable: Get http://localhost:8080/version: dial tcp 127.0.0.1:8080: connect: connection refused

To resolve this, execute the following command before you run Helm:

    export KUBECONFIG=/etc/rancher/k3s/k3s.yaml

OpenShift error

With OpenShift’s default SecurityContextConstraints (SCC) of restricted (refer to the SCC documentation for more info), you may run into the following errors while deploying Grafana Alloy using the default generated manifests:

    msg="error creating the agent server entrypoint" err="creating HTTP listener: listen tcp 0.0.0.0:80: bind: permission denied"

By default, the Alloy StatefulSet container attempts to bind to port 80, which is only allowed for the root user (0) and other privileged users. With the default restricted SCC on OpenShift, this results in the preceding error.

    Events:
      Type     Reason        Age                   From                  Message
      ----     ------        ----                  ----                  -------
      Warning  FailedCreate  3m55s (x19 over 15m)  daemonset-controller  Error creating: pods "grafana-agent-logs-" is forbidden: unable to validate against any security context constraint: [provider "anyuid": Forbidden: not usable by user or serviceaccount, spec.volumes[1]: Invalid value: "hostPath": hostPath volumes are not allowed to be used, spec.volumes[2]: Invalid value: "hostPath": hostPath volumes are not allowed to be used, spec.containers[0].securityContext.runAsUser: Invalid value: 0: must be in the ranges: [1000650000, 1000659999], spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed, provider "nonroot": Forbidden: not usable by user or serviceaccount, provider "hostmount-anyuid": Forbidden: not usable by user or serviceaccount, provider "machine-api-termination-handler": Forbidden: not usable by user or serviceaccount, provider "hostnetwork": Forbidden: not usable by user or serviceaccount, provider "hostaccess": Forbidden: not usable by user or serviceaccount, provider "node-exporter": Forbidden: not usable by user or serviceaccount, provider "privileged": Forbidden: not usable by user or serviceaccount]

By default, the Alloy DaemonSet attempts to run as root user, and also attempts to access directories on the host (to tail logs). With the default restricted SCC on OpenShift, this results in the preceding error.

To solve these errors, use the hostmount-anyuid SCC provided by OpenShift, which allows containers to run as root and mount directories on the host.

If this does not meet your security needs, create a new SCC with the required tailored permissions, or investigate running Alloy as a non-root container, which goes beyond the scope of this troubleshooting guide.

To use the hostmount-anyuid SCC, add the following stanza to the alloy and alloy-logs ClusterRoles:

    ---
    - apiGroups:
        - security.openshift.io
      resources:
        - securitycontextconstraints
      verbs:
        - use
      resourceNames:
        - hostmount-anyuid

ResourceExhausted error when sending traces

If you have traces enabled, you might see log entries in your Alloy instance that look like this:

    Permanent error: rpc error: code = ResourceExhausted desc = grpc: received message after decompression larger than max (5268750 vs. 4194304)" dropped_items=11226
    ts=2024-09-19T19:52:35.16668052Z level=info msg="rejoining peers" service=cluster peers_count=1 peers=6436336134343433.grafana-k8s-monitoring-alloy-cluster.default.svc.cluster.local.:12345

This error is likely due to the span size being too large. To fix this, adjust the batch size:

    receivers:
      processors:
        batch:
          maxSize: 2000

Start with 2000 and adjust as needed.
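To get a feel for how far the batch size needs to drop, you can estimate how many items fit under the limit from the numbers in the log entry. The sketch below assumes that dropped_items is the number of spans in the rejected message and that spans are roughly equal in size; both are simplifications, and the function name is hypothetical.

```python
def max_items_within_limit(message_bytes: int, item_count: int, limit_bytes: int) -> int:
    """Estimate how many average-sized items fit under the message size limit."""
    # Integer division rounds down, so the estimate never exceeds the limit.
    return item_count * limit_bytes // message_bytes


# Values taken from the example log entry above.
estimate = max_items_within_limit(
    message_bytes=5268750,  # size of the rejected message
    item_count=11226,       # dropped_items in the log entry
    limit_bytes=4194304,    # maximum accepted message size
)
```

With these numbers the estimate is a little under 9,000 items. Because span sizes vary, a value well below the estimate, such as the suggested 2000, leaves headroom.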

Traces missing with Istio service mesh

If traces are not appearing in Grafana Cloud Traces (powered by Grafana Tempo) when Istio service mesh is deployed in your cluster, this is likely due to Istio’s protocol detection requirements. Istio requires Kubernetes Service port names to be grpc or start with grpc- (for example, grpc-otlp) for proper gRPC protocol detection. Without following this naming convention, Istio cannot identify the port as using the gRPC protocol, and trace data from your applications will not be properly routed to the OpenTelemetry Collector.

To resolve this issue, ensure your Kubernetes Service port names follow Istio’s protocol naming convention when configuring OpenTelemetry trace collection with Istio. For example, when configuring OTLP gRPC receivers:

    alloy-receiver:
      enabled: true
      alloy:
        extraPorts:
          - name: grpc-otlp # Must be "grpc" or "grpc-<suffix>" for Istio
            port: 4317
            targetPort: 4317
            protocol: TCP
          - name: otlp-http # HTTP ports don't require special naming
            port: 4318
            targetPort: 4318
            protocol: TCP

Warning

If your port name does not follow Istio’s naming convention (for example, otlp-grpc or otlp), you must rename it to either grpc or a name starting with grpc- (for example, grpc-otlp) when Istio is deployed in your Cluster.

This Istio protocol detection requirement applies to the Kubernetes Service that exposes the OTLP gRPC port for Grafana Alloy or any other OpenTelemetry Collector running under Istio service mesh. For more information about Istio protocol selection, refer to the Istio protocol selection documentation.
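The naming convention described in this section can be expressed as a small check. The following sketch covers only the rule stated here for gRPC ports (the name is grpc or starts with grpc-); the function names are hypothetical, and the check should only be applied to ports that actually carry gRPC traffic.

```python
from typing import Iterable, List


def is_istio_grpc_port_name(name: str) -> bool:
    """Return True if the Service port name is "grpc" or starts with "grpc-"."""
    return name == "grpc" or name.startswith("grpc-")


def grpc_ports_to_rename(port_names: Iterable[str]) -> List[str]:
    """Return the gRPC port names that do not follow the convention."""
    return [name for name in port_names if not is_istio_grpc_port_name(name)]


# Names used as examples in this section.
needs_rename = grpc_ports_to_rename(["grpc-otlp", "otlp-grpc", "otlp", "grpc"])
```

Here otlp-grpc and otlp are flagged because the grpc prefix must come first.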

Update error

If you attempted to upgrade Kubernetes Monitoring with the Update button on the Cluster configuration tab under Configuration and received an error message, complete the following instructions.

Warning

When you uninstall Grafana Alloy, this deletes its associated alert and recording rule namespace. Alerts added to the default locations are also removed. Save a copy of any customized item if you modified the provisioned version.

- Click Uninstall.

- Click Install to reinstall.

- Complete the instructions in Configure with Grafana Kubernetes Monitoring Helm chart.