Get started with Grafana Alerting - Group alert notifications

This tutorial is a continuation of the Get started with Grafana Alerting - Alert routing tutorial.

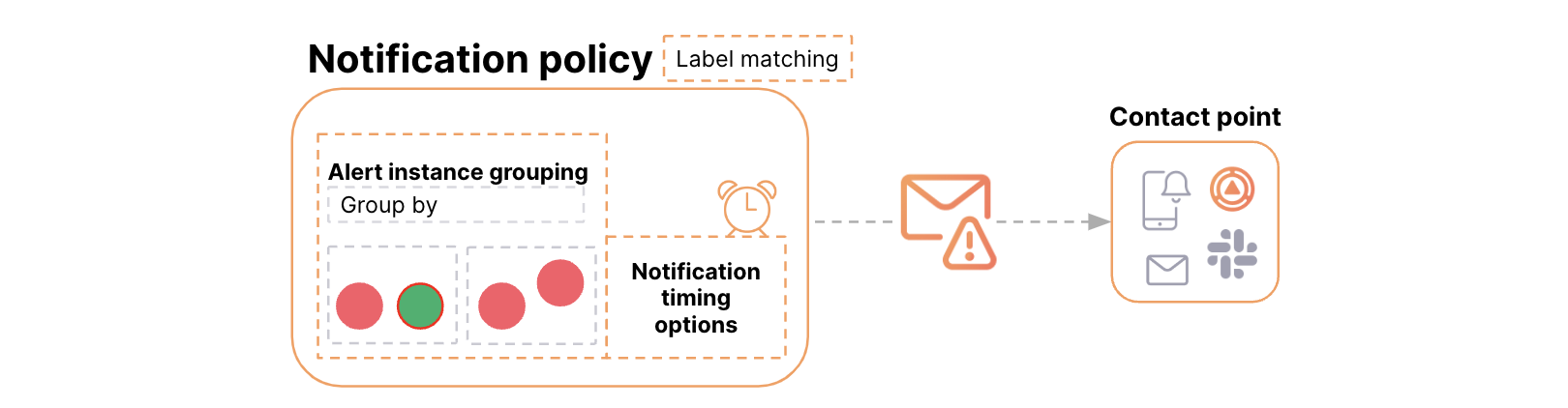

Grouping in Grafana Alerting reduces notification noise by combining related alert instances into a single, concise notification. This is useful for on-call engineers, ensuring they focus on resolving incidents instead of sorting through a flood of notifications.

Grouping is configured using labels in the notification policy. These labels reference those generated by alert instances or configured by the user.

Notification policies also allow you to define how often notifications are sent for each group of alert instances.

In this tutorial, you will:

- Learn how alert rule grouping works.

- Create a notification policy to handle grouping.

- Define alert rules for a real-world scenario.

- Receive and review grouped alert notifications.

Before you begin

There are different ways you can follow along with this tutorial.

Grafana Cloud

- As a Grafana Cloud user, you don’t have to install anything. Create your free account.

Continue to How alert rule grouping works.

Interactive learning environment

- Alternatively, you can try out this example in our interactive learning environment: Get started with Grafana Alerting - Grouping. It’s a fully configured environment with all the dependencies already installed.

Grafana OSS

If you opt to run a Grafana stack locally, ensure you have the following applications installed:

Docker Compose (included in Docker for Desktop for macOS and Windows)

Set up the Grafana stack (OSS users)

To demonstrate the observation of data using the Grafana stack, download and run the following files.

Clone the tutorial environment repository.

    git clone https://github.com/grafana/tutorial-environment.git

Change to the directory where you cloned the repository:

    cd tutorial-environment

Run the Grafana stack:

    docker compose up -d

The first time you run docker compose up -d, Docker downloads all the necessary resources for the tutorial. This might take a few minutes, depending on your internet connection.

Note

If you already have Grafana, Loki, or Prometheus running on your system, you might see errors, because the Docker image is trying to use ports that your local installations are already using. If this is the case, stop the services, then run the command again.

How alert rule grouping works

Alert notification grouping is configured with labels and timing options:

- Labels map the alert rule with the notification policy and define the grouping.

- Timing options control when and how often notifications are sent.

Types of Labels

Reserved labels (default):

- Automatically generated by Grafana, e.g., alertname, grafana_folder.

- Example: alertname="High CPU usage".

User-configured labels:

- Added manually to the alert rule.

- Example: severity, priority.

Query labels:

- Returned by the data source query.

- Example: region, service, environment.

Timing Options

- Group wait: Time before sending the first notification.

- Group interval: Time between notifications for a group.

- Repeat interval: Time before resending notifications for an unchanged group.

Alerts sharing the same label values are grouped together, and timing options determine notification frequency.
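As a rough illustration of how the choice of grouping labels changes the number of notifications, the following Python sketch groups a few alert instances by different label sets. It is a simplified model, not Grafana's implementation: the function name group_alerts and the sample label values are assumptions made for this example.

```python
from collections import defaultdict


def group_alerts(alerts, group_by):
    """Group alert instances (dicts of labels) by the values of the group_by labels."""
    groups = defaultdict(list)
    for labels in alerts:
        # Assumption of this sketch: a missing label counts as an empty value.
        key = tuple(labels.get(name, "") for name in group_by)
        groups[key].append(labels)
    return dict(groups)


# Hypothetical alert instances, described only by their labels.
alerts = [
    {"alertname": "High CPU usage", "region": "us-west", "instance": "server-02"},
    {"alertname": "High CPU usage", "region": "us-east", "instance": "server-03"},
    {"alertname": "High memory usage", "region": "us-west", "instance": "server-10"},
]

by_region = group_alerts(alerts, ["region"])
by_rule_and_region = group_alerts(alerts, ["alertname", "region"])

print(len(by_region))           # 2 groups: us-west and us-east
print(len(by_rule_and_region))  # 3 groups: every instance is in its own group
```

Grouping by fewer labels produces fewer, larger notifications. Adding labels to the grouping splits the same alert instances into more notifications.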


A real-world example of alert grouping in action

Scenario: monitoring a distributed application

You’re monitoring metrics like CPU usage, memory utilization, and network latency across multiple regions. Some of these alert rules include labels such as region: us-west and region: us-east. If multiple alert rules trigger across these regions, they can result in notification floods.

How to manage grouping

To group alert rule notifications:

- Define labels: Use region, metric, or instance labels to categorize alerts.

- Configure Notification policies:

  - Group alerts by the query label “region”.

  - Example:

    - Alert notifications for region: us-west go to the West Coast team.

    - Alert notifications for region: us-east go to the East Coast team.

- Specify the timing options for sending notifications to control their frequency.

  - Example:

    - Group interval: This setting determines how often updates for the same alert group are sent. By default, this interval is set to 5 minutes, but you can customize it to be shorter or longer based on your needs.

Setting up alert rule grouping

Notification Policy

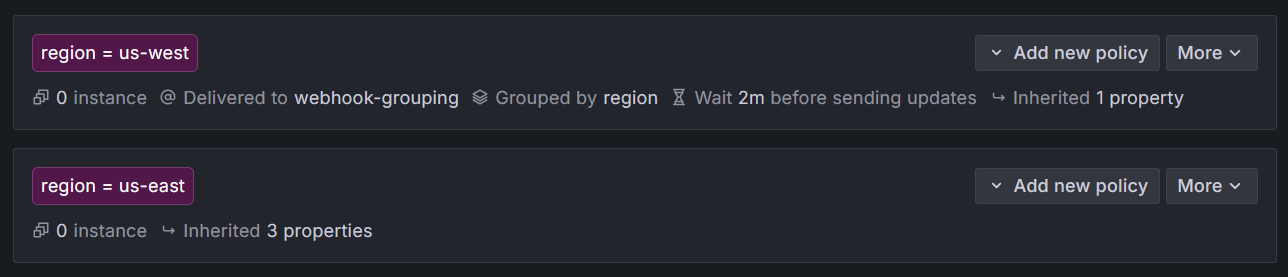

Following the above example, notification policies are created to route alert instances that have a region label to a specific contact point. The goal is to receive one consolidated notification per region. To demonstrate how grouping works, alert notifications for the East Coast team are not grouped by region. Regarding timing, a custom group interval is defined for the us-west policy only. This setup overrides the parent’s settings to fine-tune the behavior for specific labels (i.e., regions).

Sign in to Grafana:

- Grafana Cloud users: Log in via Grafana Cloud.

- OSS users: Go to http://localhost:3000.

Navigate to Notification Policies:

- Go to Alerts & IRM > Alerting > Notification Policies.

Add a child policy:

In the Default policy, click + New child policy.

Label: region

Operator: =

Value: us-west

This label matches alert rules where the region label is us-west.

Choose a Contact point:

- Select Webhook.

If you don’t have any contact points, add a Contact point.

Enable Continue matching:

- Turn on Continue matching subsequent sibling nodes so the evaluation continues even after one or more labels (i.e. region label) match.

Override grouping settings:

Toggle Override grouping.

Group by: Add region as label. Remove any existing labels.

Group by consolidates alerts that share the same grouping label into a single notification. For example, all alerts with region=us-west will be combined into one notification, making it easier to manage and reducing alert fatigue.

Set custom timing:

Toggle Override general timings.

Group interval: 2m. This ensures follow-up notifications for the same alert group will be sent at intervals of 2 minutes. While the default is 5 minutes, we chose 2 minutes here to provide faster feedback for demonstration purposes.

Timing options control how often notifications are sent and can help balance timely alerting with minimizing noise.

Save and repeat:

- Repeat the steps above for region = us-east but without overriding grouping and timing options. Use a different webhook endpoint as the contact point.

![Two nested notification policies to route and group alert notifications]()

These nested policies should route alert instances where the region label is either us-west or us-east. Only the us-west region team should receive grouped alert notifications.
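The effect of Continue matching can be sketched in Python as follows. This is a simplified model of how sibling policies are evaluated, not Grafana's code. It supports only equality matchers, and the Web-Team contact point, its service matcher, and the Default-Contact-Point name are hypothetical additions used to make the difference visible.

```python
def route(labels, sibling_policies, default_contact_point):
    """Return the contact points that would receive an alert with these labels."""
    matched = []
    for policy in sibling_policies:
        key, value = policy["matcher"]  # equality matcher only, for brevity
        if labels.get(key) != value:
            continue
        matched.append(policy["contact_point"])
        if not policy["continue_matching"]:
            break  # stop at the first match unless told to continue
    # Alerts that match no child policy are handled by the default policy.
    return matched or [default_contact_point]


policies = [
    {"matcher": ("region", "us-west"), "contact_point": "US-West-Alerts", "continue_matching": True},
    {"matcher": ("region", "us-east"), "contact_point": "US-East-Alerts", "continue_matching": True},
    # Hypothetical third sibling policy, not part of this tutorial.
    {"matcher": ("service", "web-server-1"), "contact_point": "Web-Team", "continue_matching": False},
]
# Same policies, but with Continue matching turned off on the first one.
policies_no_continue = [dict(policies[0], continue_matching=False)] + policies[1:]

alert = {"region": "us-west", "service": "web-server-1"}
print(route(alert, policies, "Default-Contact-Point"))
# ['US-West-Alerts', 'Web-Team']
print(route(alert, policies_no_continue, "Default-Contact-Point"))
# ['US-West-Alerts']
print(route({"region": "eu-central"}, policies, "Default-Contact-Point"))
# ['Default-Contact-Point']
```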

Note

Label matchers are combined using the AND logical operator. This means that all matchers must be satisfied for a rule to be linked to a policy. If you attempt to use the same label key (e.g., region) with different values (e.g., us-west and us-east), the condition will not match, because it is logically impossible for a single key to have multiple values simultaneously.

However, region!=us-east && region!=us-west can match. For example, it would match a label set where region=eu-central. Alternatively, for identical label keys use regular expression matchers (e.g., region=~us-west|us-east).
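The matcher behavior described in this note can be checked with a small Python sketch. It is an illustrative model rather than Grafana's matcher code: the function names are made up for this example, and it assumes that a regular expression must match the whole label value, as with Prometheus-style matchers.

```python
import re


def matches(labels, matcher):
    """Evaluate one (key, operator, value) matcher against a label set."""
    key, op, value = matcher
    actual = labels.get(key, "")
    if op == "=":
        return actual == value
    if op == "!=":
        return actual != value
    if op == "=~":
        return re.fullmatch(value, actual) is not None
    if op == "!~":
        return re.fullmatch(value, actual) is None
    raise ValueError(f"unknown operator: {op}")


def policy_matches(labels, matchers):
    """All matchers of a policy must hold (logical AND)."""
    return all(matches(labels, m) for m in matchers)


both_equal = [("region", "=", "us-west"), ("region", "=", "us-east")]
both_not_equal = [("region", "!=", "us-east"), ("region", "!=", "us-west")]
regex = [("region", "=~", "us-west|us-east")]

print(policy_matches({"region": "us-west"}, both_equal))         # False: one key cannot hold two values
print(policy_matches({"region": "eu-central"}, both_not_equal))  # True
print(policy_matches({"region": "us-east"}, regex))              # True
```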

Create an alert rule

In this section we configure an alert rule based on our application monitoring example.

- Navigate to Alerts & IRM > Alerting > Alert rules.

- Click + New alert rule.

Enter an alert rule name

Make it short and descriptive as this appears in your alert notification. For instance, High CPU usage - Multi-region.

Define query and alert condition

In this section, we use the default options for Grafana-managed alert rule creation. The default options let us define the query, an expression (used to manipulate the data; the WHEN field in the UI), and the condition that must be met for the alert to be triggered (in default mode, the threshold).

Grafana includes a test data source that creates simulated time series data. This data source is included in the demo environment for this tutorial. If you’re working in Grafana Cloud or your own local Grafana instance, you can add the data source through the Connections menu.

From the drop-down menu, select TestData data source.

From Scenario select CSV Content.

Copy in the following CSV data:


    region,cpu-usage,service,instance
    us-west,35,web-server-1,server-01
    us-west,81,web-server-1,server-02
    us-east,79,web-server-2,server-03
    us-east,52,web-server-2,server-04
    us-west,45,db-server-1,server-05
    us-east,77,db-server-2,server-06
    us-west,82,db-server-1,server-07
    us-east,93,db-server-2,server-08

The returned data simulates a data source returning multiple time series, each leading to the creation of an alert instance for that specific time series.

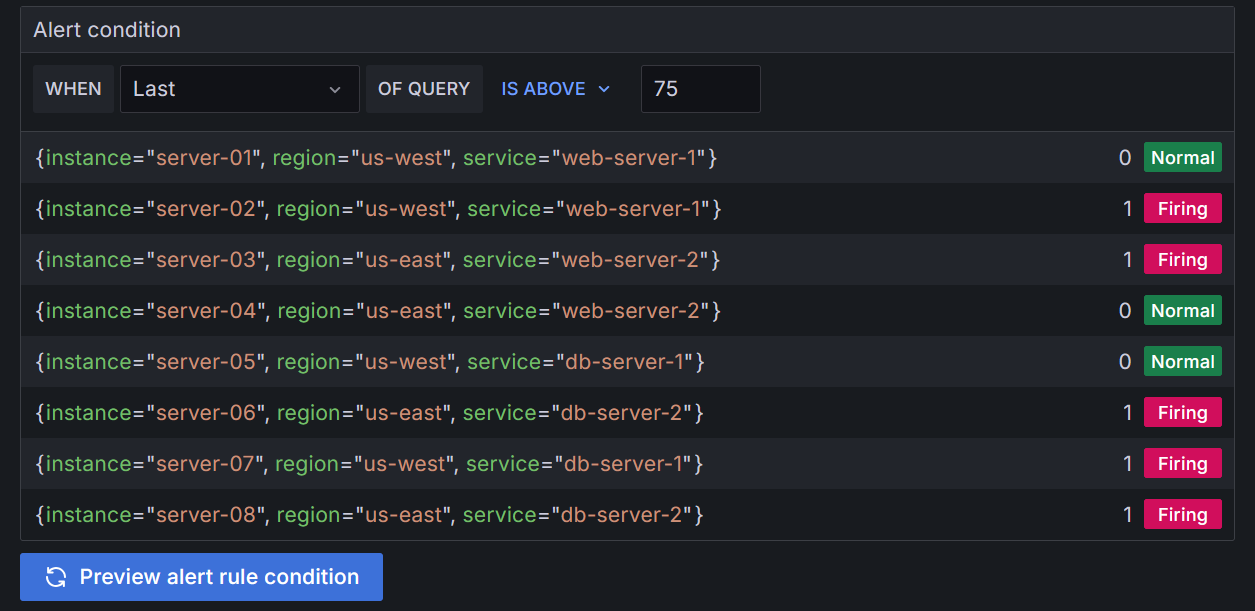

In the Alert condition section:

- Keep Last as the value for the reducer function (WHEN), and IS ABOVE 75 as the threshold value. This is the value above which the alert rule should trigger.

Click Preview alert rule condition to run the queries.

It should return 5 series in Firing state: two firing instances from the us-west region and three from the us-east region.

![Preview of a query returning alert instances.]()
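To see where the five firing instances come from, the threshold can be applied to the CSV data outside Grafana. The Python sketch below does only that: it parses the CPU table from this tutorial and counts the rows above 75 per region. It does not reproduce how Grafana evaluates the rule, and the variable names are chosen for this example.

```python
import csv
import io
from collections import Counter

CSV_DATA = """region,cpu-usage,service,instance
us-west,35,web-server-1,server-01
us-west,81,web-server-1,server-02
us-east,79,web-server-2,server-03
us-east,52,web-server-2,server-04
us-west,45,db-server-1,server-05
us-east,77,db-server-2,server-06
us-west,82,db-server-1,server-07
us-east,93,db-server-2,server-08
"""

THRESHOLD = 75  # IS ABOVE 75

rows = list(csv.DictReader(io.StringIO(CSV_DATA)))
# Each row stands for one series, and therefore one potential alert instance.
firing = [row for row in rows if float(row["cpu-usage"]) > THRESHOLD]
firing_by_region = Counter(row["region"] for row in firing)

print(len(firing))             # 5
print(dict(firing_by_region))  # {'us-west': 2, 'us-east': 3}
```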

Add folders and labels

- In Folder, click + New folder and enter a name. For example: Multi-region alerts. This folder contains our alert rules.

Set evaluation behavior

Every alert rule is assigned to an evaluation group. You can assign the alert rule to an existing evaluation group or create a new one.

- In the Evaluation group and interval, enter a name. For example: Multi-region group.

- Choose an Evaluation interval (how often the alert rule is evaluated). Choose 1m.

- Set the pending period to 0s (zero seconds), so the alert rule fires the moment the condition is met (this minimizes the waiting time for the demonstration).

- Set Keep firing for to 0s, so the alert stops firing immediately after the condition is no longer true.
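The role of the pending period can be pictured with a very small Python sketch. It is a simplified model under stated assumptions: the function instance_state is hypothetical, the 5m pending period in the second call is only for contrast, and Keep firing for is not modeled.

```python
def instance_state(breach_seconds, pending_period_seconds):
    """Return the state of an alert instance.

    breach_seconds: how long the condition has been continuously true,
    or None if the condition is currently false.
    """
    if breach_seconds is None:
        return "Normal"
    if breach_seconds < pending_period_seconds:
        return "Pending"
    return "Firing"


print(instance_state(0, 0))      # Firing: with a 0s pending period there is no waiting
print(instance_state(60, 300))   # Pending: a hypothetical 5m pending period has not elapsed
print(instance_state(300, 300))  # Firing
print(instance_state(None, 0))   # Normal
```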

Configure notifications

Select who should receive a notification when an alert rule fires.

Select Use notification policy.

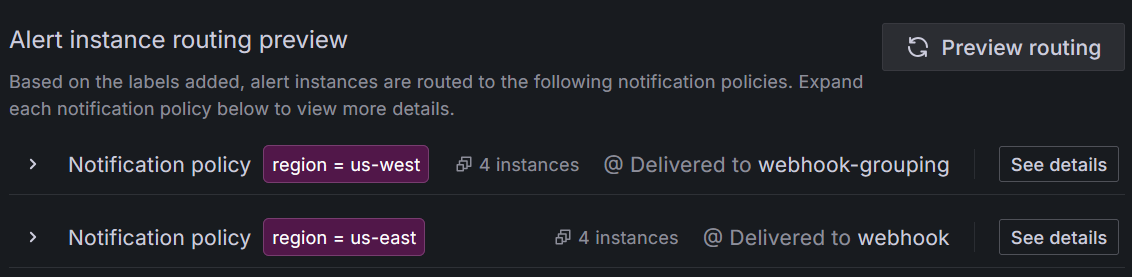

Click Preview routing to ensure correct matching.

![Preview of alert instance routing with the region label matcher]()

The preview should show that the region label from our data source is successfully matching the notification policies that we created earlier thanks to the label matcher that we configured.

Click Save rule and exit.

Create a second alert rule

Repeat the steps above to create a second alert rule that alerts on high memory usage.

Duplicate the alert rule by clicking on More > Duplicate.

Name it High Memory usage - Multi-region.

Use the below CSV data to simulate a data source returning memory usage.

    region,memory-usage,service,instance
    us-west,42,cache-server-1,server-09
    us-west,88,cache-server-1,server-10
    us-east,74,api-server-1,server-11
    us-east,90,api-server-1,server-12
    us-west,53,analytics-server-1,server-13
    us-east,81,analytics-server-2,server-14
    us-west,77,analytics-server-1,server-15
    us-east,94,analytics-server-2,server-16

Click Save rule and exit.

Receiving grouped alert notifications

Now that the alert rules have been configured, you should receive alert notifications in the contact point(s) whenever alerts trigger.

Both alert rules are evaluated every minute and fire when CPU or memory usage is higher than 75%. Firing alert instances are grouped, and the first notification is sent after a Group wait of 30 seconds. Follow-up notifications for the same alert group are sent at intervals of 2 minutes for us-west alert instances, which is more frequent than the default interval of 5 minutes that applies to us-east instances. If a group stays unchanged for an extended period, the Repeat interval of 4 hours means the notification is resent only after that time has passed and the issue persists.
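The interplay of the three timing options can be approximated with a short Python sketch. It is a simplified model, not Grafana's scheduler. It assumes that a group is checked first after the group wait and then once per group interval, and that a notification goes out when the group has changed since the last one or when the repeat interval has elapsed. The function name and the change at second 60 are hypothetical.

```python
def notification_times(change_times, horizon, group_wait=30,
                       group_interval=300, repeat_interval=4 * 3600):
    """Return the seconds (since the group was created) at which notifications are sent.

    change_times: seconds at which the group changes (instances added or resolved).
    horizon: how many seconds to simulate.
    """
    sent = []
    last_sent = None
    flush = group_wait
    while flush <= horizon:
        if last_sent is None:
            should_send = True  # first notification for a new group
        else:
            changed = any(last_sent < t <= flush for t in change_times)
            repeat_due = flush - last_sent >= repeat_interval
            should_send = changed or repeat_due
        if should_send:
            sent.append(flush)
            last_sent = flush
        flush += group_interval
    return sent


# A new alert instance joins the group 60 seconds after it was created.
us_west = notification_times([60], horizon=600, group_interval=120)
us_east = notification_times([60], horizon=600)  # default 5m group interval

print(us_west)  # [30, 150]
print(us_east)  # [30, 330]
```

In this model the us-west team learns about the new instance at second 150, while the us-east team, which uses the default group interval, waits until second 330.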

As a result, our notification policies should route three notifications: one grouped notification grouping both CPU and memory alert instances from the us-west region and two separate notifications with alert instances from the us-east region.
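The count of three notifications can be reproduced with a Python sketch that routes and groups the firing instances from both CSV tables. It is a model for illustration only. It assumes that the us-east policy inherits the default grouping by grafana_folder and alertname, and that both rules live in the Multi-region alerts folder.

```python
from collections import defaultdict

FOLDER = "Multi-region alerts"
CPU = "High CPU usage - Multi-region"
MEMORY = "High Memory usage - Multi-region"

# Instances whose value is above 75 in the two CSV tables of this tutorial.
firing = [
    (CPU, "us-west", "server-02"), (CPU, "us-west", "server-07"),
    (CPU, "us-east", "server-03"), (CPU, "us-east", "server-06"), (CPU, "us-east", "server-08"),
    (MEMORY, "us-west", "server-10"), (MEMORY, "us-west", "server-15"),
    (MEMORY, "us-east", "server-12"), (MEMORY, "us-east", "server-14"), (MEMORY, "us-east", "server-16"),
]

# Assumption: us-west overrides grouping with the region label,
# us-east keeps the grouping inherited from the default policy.
policies = {
    "us-west": {"receiver": "US-West-Alerts", "group_by": ("region",)},
    "us-east": {"receiver": "US-East-Alerts", "group_by": ("grafana_folder", "alertname")},
}

notifications = defaultdict(list)
for alertname, region, instance in firing:
    labels = {"alertname": alertname, "grafana_folder": FOLDER, "region": region}
    policy = policies[region]
    group_key = tuple(labels[name] for name in policy["group_by"])
    notifications[(policy["receiver"], group_key)].append(instance)

for (receiver, group_key), instances in notifications.items():
    print(receiver, group_key, len(instances))
```

The loop prints one line per notification: a single us-west group holding four instances from both rules, and two us-east groups (one per alert rule) holding three instances each.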

Grouped notifications example:

    {
      "receiver": "US-West-Alerts",
      "status": "firing",
      "alerts": [
        {
          "status": "firing",
          "labels": {
            "alertname": "High CPU usage - Multi-region",
            "grafana_folder": "Multi-region alerts",
            "instance": "server-05",
            ...
        {
          "status": "firing",
          "labels": {
            "alertname": "High Memory usage - Multi-region",
            "grafana_folder": "Multi-region alerts",
            "instance": "server-10",
          },
          ...}

Detail of CPU and memory alert instances grouped into a single notification for us-west contact point.

    {
      "receiver": "US-East-Alerts",
      "status": "firing",
      "alerts": [
        {
          "status": "firing",
          "labels": {
            "alertname": "High CPU usage - Multi-region",
            "grafana_folder": "Multi-region alerts",
            "instance": "server-03",
            "region": "us-east",
            "service": "web-server-2"
            ...}}}

Detail of CPU alert instances grouped into a separate notification for us-east contact point.

    {
      "receiver": "US-East-Alerts",
      "status": "firing",
      "alerts": [
        {
          "status": "firing",
          "labels": {
            "alertname": "High memory usage - Multi-region",
            "grafana_folder": "Multi-region memory alerts",
            "instance": "server-12",
            "region": "us-east"
            ...}}}

Detail of memory alert instances grouped into a separate notification for us-east contact point.
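On the receiving side, a webhook consumer could summarize such a payload along these lines. The Python sketch below is a minimal example that relies only on the fields shown above (receiver, status, alerts, labels). The payload is a trimmed-down, hypothetical sample rather than a complete Grafana webhook body, and summarize is a name chosen for this example.

```python
import json

# Trimmed-down sample payload using only the fields shown in this tutorial.
payload_text = """
{
  "receiver": "US-West-Alerts",
  "status": "firing",
  "alerts": [
    {"status": "firing",
     "labels": {"alertname": "High CPU usage - Multi-region", "region": "us-west", "instance": "server-02"}},
    {"status": "firing",
     "labels": {"alertname": "High Memory usage - Multi-region", "region": "us-west", "instance": "server-10"}}
  ]
}
"""


def summarize(payload):
    """Build a one-line summary of a grouped notification."""
    firing = [alert for alert in payload["alerts"] if alert["status"] == "firing"]
    rules = sorted({alert["labels"]["alertname"] for alert in firing})
    return f'{payload["receiver"]}: {len(firing)} firing alert(s) from {len(rules)} rule(s)'


summary = summarize(json.loads(payload_text))
print(summary)  # US-West-Alerts: 2 firing alert(s) from 2 rule(s)
```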

Conclusion

By configuring notification policies and using labels (such as region), you can group alert notifications based on specific criteria and route them to the appropriate teams. Fine-tuning timing options, including group wait, group interval, and repeat interval, can further reduce noise and ensure notifications remain actionable without overwhelming on-call engineers.

Learn more in Grafana Alerting: Template your alert notifications

Tip

In Get started with Grafana Alerting: Template your alert notifications you learn how to use templates to create customized and concise notifications.