Falcon LogScale

Falcon LogScale data source for Grafana

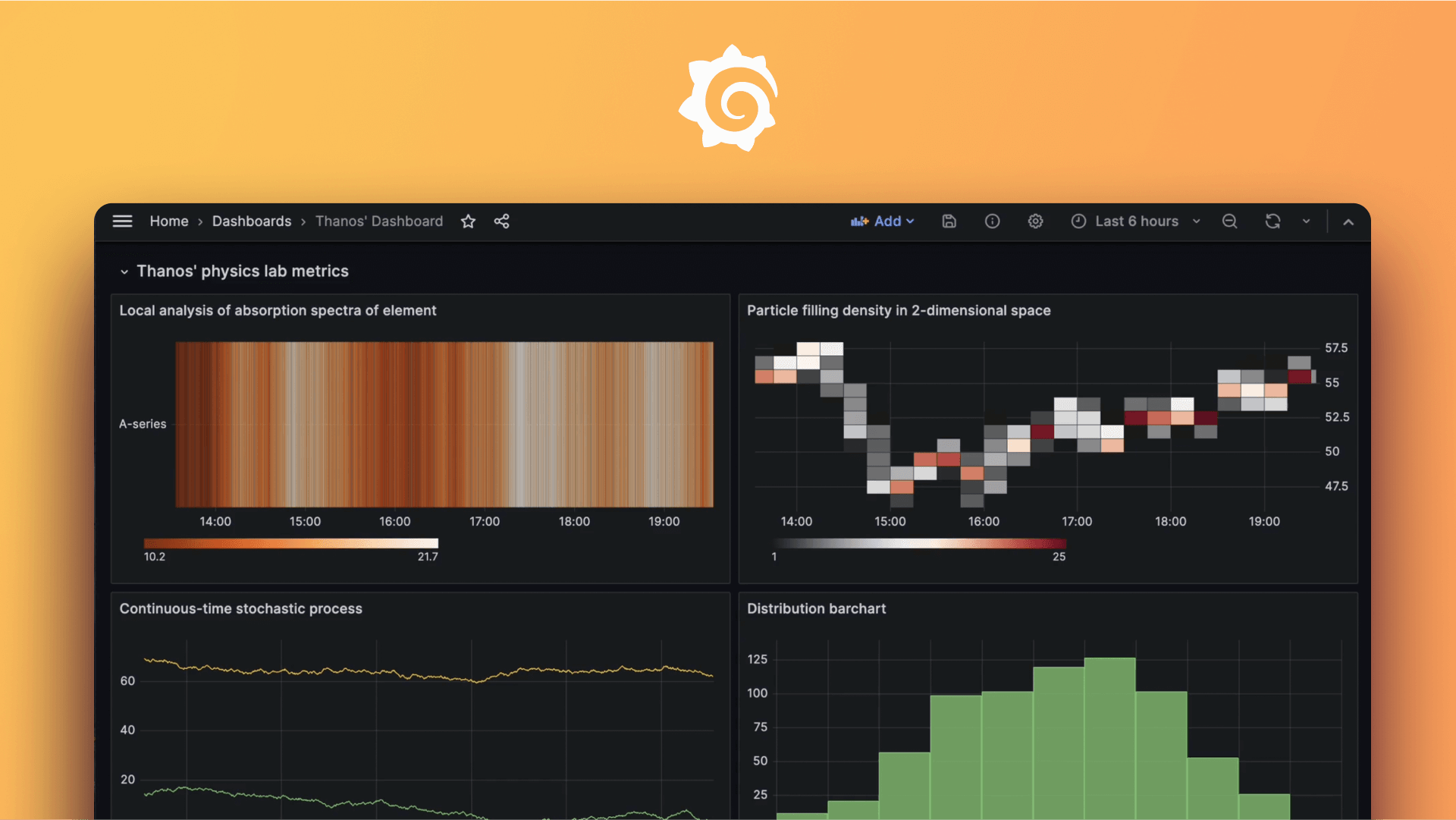

The CrowdStrike Falcon LogScale data source plugin allows you to query and visualize Falcon LogScale data from within Grafana.

Install the plugin

To install the data source, refer to Installation.

Configure the data source in Grafana

Add a data source by filling in the following fields:

Basic fields

| Field | Description |
|---|---|
| Name | A name for this particular Falcon LogScale data source. |
| URL | Where Falcon LogScale is hosted, for example, https://cloud.community.humio.com. |
| Timeout | HTTP request timeout in seconds. |
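When you provision the data source instead of using the UI, the basic fields above map onto a data source entry. The fragment below is a sketch that assumes the plugin reads the request timeout from Grafana's standard jsonData `timeout` key (in seconds); the value `30` is only an illustration, and authentication settings are omitted.

```yaml
apiVersion: 1
datasources:
  - name: Falcon LogScale                      # Name
    type: grafana-falconlogscale-datasource
    url: https://cloud.community.humio.com     # URL
    jsonData:
      # Assumption: Grafana's standard HTTP timeout key, in seconds.
      timeout: 30
```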

Authentication fields

| Field | Description |
|---|---|
| Basic auth | Enter a Falcon LogScale username and password. |
| TLS Client Auth | Built-in option for authenticating using Transport Layer Security. |
| Skip TLS Verify | Enable to skip TLS verification. |
| With Credentials | Enable to send credentials such as cookies or auth headers with cross-site requests. |
| With CA Cert | Enable to verify self-signed TLS certificates. |
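If you provision these settings rather than entering them in the UI, they most likely correspond to Grafana's generic provisioning keys. The sketch below assumes the plugin honors those standard keys (`basicAuth`, `basicAuthUser`, `withCredentials`, `tlsAuth`, `tlsAuthWithCACert`, `tlsSkipVerify`, and the matching `secureJsonData` entries). The URL, the user name, and the placeholder values are hypothetical.

```yaml
apiVersion: 1
datasources:
  - name: Falcon LogScale
    type: grafana-falconlogscale-datasource
    url: https://logscale.example.com     # hypothetical self-hosted URL
    # Basic auth: the username is stored here, the password in secureJsonData.
    basicAuth: true
    basicAuthUser: grafana-reader         # hypothetical user
    # With Credentials
    withCredentials: false
    jsonData:
      tlsAuth: true                       # TLS Client Auth
      tlsAuthWithCACert: true             # With CA Cert
      tlsSkipVerify: false                # Skip TLS Verify
    secureJsonData:
      basicAuthPassword: <password>
      tlsCACert: <PEM-encoded CA certificate>
      tlsClientCert: <PEM-encoded client certificate>
      tlsClientKey: <PEM-encoded client key>
```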

Custom HTTP Header

Data sources managed by provisioning within Grafana can be configured to add HTTP headers to all requests going to that data source. The header name is configured in the jsonData field, and the header value should be configured in secureJsonData. For more information about custom HTTP headers, refer to Custom HTTP Headers.
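Grafana's provisioning convention pairs numbered keys: `httpHeaderName1` in `jsonData` holds the header name and `httpHeaderValue1` in `secureJsonData` holds the value (further headers use `2`, `3`, and so on). A minimal sketch, assuming this plugin forwards custom headers the same way as other HTTP-based data sources; the header name and value are hypothetical.

```yaml
apiVersion: 1
datasources:
  - name: Falcon LogScale
    type: grafana-falconlogscale-datasource
    url: https://cloud.community.humio.com
    jsonData:
      # Header name (stored in plain text). Hypothetical header.
      httpHeaderName1: X-Example-Header
    secureJsonData:
      # Header value (stored encrypted by Grafana).
      httpHeaderValue1: <header value>
```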

LogScale Token Authentication

You can authenticate using your personal LogScale token. To generate a personal access token, log in to LogScale and navigate to User Menu > Manage Account > Personal API Token. Set or reset your token, then copy and paste it into the token field of the data source configuration.

Forward OAuth Identity

Note: This feature is experimental, which means it may not work as expected, may cause Grafana to behave unexpectedly, and may receive breaking changes in the future.

Prerequisites

OAuth identity forwarding is only possible with a self-hosted LogScale instance appropriately configured with the same OAuth provider as Grafana. Not all OAuth/OIDC configurations may be supported currently.

With this authentication method enabled, you do not need to provide a token to use a LogScale data source. Instead, users who are logged in to Grafana with the same OAuth provider as the LogScale instance have their token forwarded to the data source, and that token is used to authenticate all requests.

Note: Some Grafana features, such as alerting, will not function as expected. Grafana backend features require credentials to always be in scope, which is not the case with this authentication method.
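For provisioning, Grafana's built-in data sources store the Forward OAuth Identity toggle as `jsonData.oauthPassThru`. The sketch below assumes this plugin uses the same key, which is not confirmed here. No `accessToken` is set, because the logged-in user's OAuth token is forwarded instead. The data source name and URL are hypothetical.

```yaml
apiVersion: 1
datasources:
  - name: Falcon LogScale (OAuth)
    type: grafana-falconlogscale-datasource
    # Hypothetical self-hosted LogScale instance configured with the same OAuth provider as Grafana.
    url: https://logscale.example.com
    jsonData:
      # Assumption: the same key Grafana's built-in data sources use for "Forward OAuth Identity".
      oauthPassThru: true
```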

Default LogScale Repository

You can set a default LogScale repository to use for your queries. If you do not specify a default repository, you must select a repository for each query.

Configure data links

Data links allow you to link to other data sources from your Grafana panels. For more information about data links, refer to Data links.

To configure a data link, click the add button in the data links section of the data source configuration page. Fill out the fields as follows:

| Field | Description |
|---|---|
| Field | The field that you want to link to. It can be the exact name or a regex pattern. |
| Label | A meaningful name for the data link. |
| Regex | A regular expression to match the field value. If you want the entire value, use (.*). |
| URL or Query | A URL link or query provided to a selected data source. You can use variables in the URLs or queries. For more information on data link variables, refer to Configure data links. |
| Internal link | Select this option to link to a Grafana data source. |

Configure the data source with provisioning

You can configure data sources using configuration files with Grafana’s provisioning system. To read about how it works, including all the settings that you can set for this data source, refer to Provisioning Grafana data sources.

Here is a provisioning example for this data source using token authentication:

```yaml
apiVersion: 1
datasources:
  - name: Falcon LogScale
    type: grafana-falconlogscale-datasource
    url: https://cloud.us.humio.com
    jsonData:
      defaultRepository: <defaultRepository or blank>
      authenticateWithToken: true
    secureJsonData:
      accessToken: <accessToken>
```

Query the data source

The query editor allows you to write LogScale Query Language (LQL) queries. For more information about writing LQL queries, refer to Query Language Syntax. Select the repository to query from the drop-down menu. You will only see repositories that your data source account has access to.

Selecting $defaultRepo from the Repository drop-down automatically maps the query to the default repository configured for the data source. Because the repository is resolved per data source, this makes it easy to switch between multiple LogScale data sources.
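To illustrate the switch, the sketch below provisions two LogScale data sources with different default repositories, using the same keys as the provisioning example in this document. A panel whose Repository is set to `$defaultRepo` would then query `web-logs` or `audit-logs` depending on which data source is selected, for example through a data source template variable. The data source names and repository names are hypothetical, and the token values are placeholders.

```yaml
apiVersion: 1
datasources:
  - name: LogScale Production            # hypothetical name
    type: grafana-falconlogscale-datasource
    url: https://cloud.us.humio.com
    jsonData:
      defaultRepository: web-logs        # $defaultRepo resolves to this repository
      authenticateWithToken: true
    secureJsonData:
      accessToken: <production token>
  - name: LogScale Security              # hypothetical name
    type: grafana-falconlogscale-datasource
    url: https://cloud.us.humio.com
    jsonData:
      defaultRepository: audit-logs      # $defaultRepo resolves to this repository
      authenticateWithToken: true
    secureJsonData:
      accessToken: <security token>
```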

You can use your LogScale saved queries in Grafana. For more information about saved queries, refer to User Functions.

Here are some useful LQL functions to get you started with Grafana visualizations:

| Function | Description | Example |

|---|---|---|

| timeChart | Groups data into time buckets. This is useful for time series panels. | timeChart(span=1h, function=count()) |

| table | Returns a table with the provided fields. | table([statuscode, responsetime]) |

| groupBy | Group results by field values. This is useful for bar chart, stat, and gauge panels. | groupBy(_field, function=count()) |

Explore view

The Explore view allows you to run LQL queries and visualize the results as logs or charts. For more information about Explore, refer to Explore. For more information about Logs in Explore, refer to Explore logs.

Grafana v9.4.8 and later allows you to create data links in Tempo, Grafana Enterprise Traces, Jaeger, and Zipkin that target Falcon LogScale.

Templates and variables

To add a new Falcon LogScale query variable, refer to Add a query variable. Select your Falcon LogScale data source as the data source, fill out the query field with your LQL query, and select a repository from the drop-down menu. The template variable is populated with the first column of your LQL query's results.

After creating a variable, you can use it in your Falcon LogScale queries using Variable syntax. For more information about variables, refer to Templates and variables.

Import a dashboard for Falcon LogScale

Follow these instructions for importing a dashboard.

To see the available pre-made dashboards, go to Configuration > Data Sources, select your Falcon LogScale data source, and select the Dashboards tab. Imported dashboards are also listed there.

Learn more

- Add Annotations.

- Configure and use Templates and variables.

- Add Transformations.

- Set up alerting; refer to Alerts overview.

Grafana Cloud Free

- Free tier: Limited to 3 users

- Paid plans: $55 / user / month above included usage

- Access to all Enterprise Plugins

- Fully managed service (not available to self-manage)

Self-hosted Grafana Enterprise

- Access to all Enterprise plugins

- All Grafana Enterprise features

- Self-manage on your own infrastructure

Installing Falcon LogScale on Grafana Cloud:

Installing plugins on a Grafana Cloud instance is a one-click install; same with updates. Cool, right?

Note that it could take up to 1 minute to see the plugin show up in your Grafana.

For more information, visit the docs on plugin installation.

Installing on a local Grafana:

For local instances, plugins are installed and updated via a simple CLI command. Plugins are not updated automatically; however, you will be notified within Grafana when updates are available.

1. Install the Data Source

Use the grafana-cli tool to install Falcon LogScale from the command line:

grafana-cli plugins install grafana-falconlogscale-datasource

The plugin will be installed into your Grafana plugins directory; the default is /var/lib/grafana/plugins. More information on the CLI tool.

Alternatively, you can manually download the .zip file for your architecture and unpack it into your Grafana plugins directory.

2. Configure the Data Source

Newly installed data sources can be added immediately from the Data Sources section, which you can access from the Grafana main menu.

Next, click the Add data source button in the upper right. The data source will be available for selection in the Type select box.

To see a list of installed data sources, click the Plugins item in the main menu. Both core data sources and installed data sources will appear.

Changelog

1.7.0

- Feature: Upgrade VariableEditor to remove usage of deprecated APIs and add repository variable query type. #303

- Dependency updates.

1.6.0

- Fix: State bug in VariableEditor.

- Dependency updates.

1.5.0

- Experimental: Support OAuth token forwarding for authentication. See here for further details.

- Dependency updates.

1.4.1

- Prepend @timestamp field to ensure it is always used as the timestamp value in the logs visualization.

- Dependency updates.

1.4.0

- Add $defaultRepo option to Repository dropdown.

- Other minor dependency updates

1.3.1

- Error message is more descriptive when a repository has not been selected.

1.3.0

- Bump github.com/grafana/grafana-plugin-sdk-go from 0.180.0 to 0.195.0

- Other minor dependency updates

1.2.0

- Bug: Fixed an issue where users were unable to select a default repository.

1.1.0

- Minimum Grafana required version is now 9.5.0

- Logs in explore view can be filtered by a value or filtered out by a value.

- The settings UI has been overhauled to use the new Grafana form and authentication components.

1.0.1

- Bug: TLS options are now correctly passed to the LogScale client.

1.0.0

- Documentation

- A default repository can be selected in the data source config.

- Support added for abstract queries.

- Fields are ordered according to the metadata response from LogScale. '@rawString' is always the first field.

- Log view is the default option in Explore.

- Grafana data frames are converted to wide format when using a LogScale group by function to support multiline time series.

- Bug: Data links do not throw an error if there is not a matching log.

0.1.0 (Unreleased)

- Logs in explore view

- Data links from LogScale logs to traces

- Bug: Remove unused auth options

- Bug: DataFrame types will be correctly converted when the first field is nil

0.0.0 (Unreleased)

Initial release.