ISP-Checker

Blame your ISP with evidence.

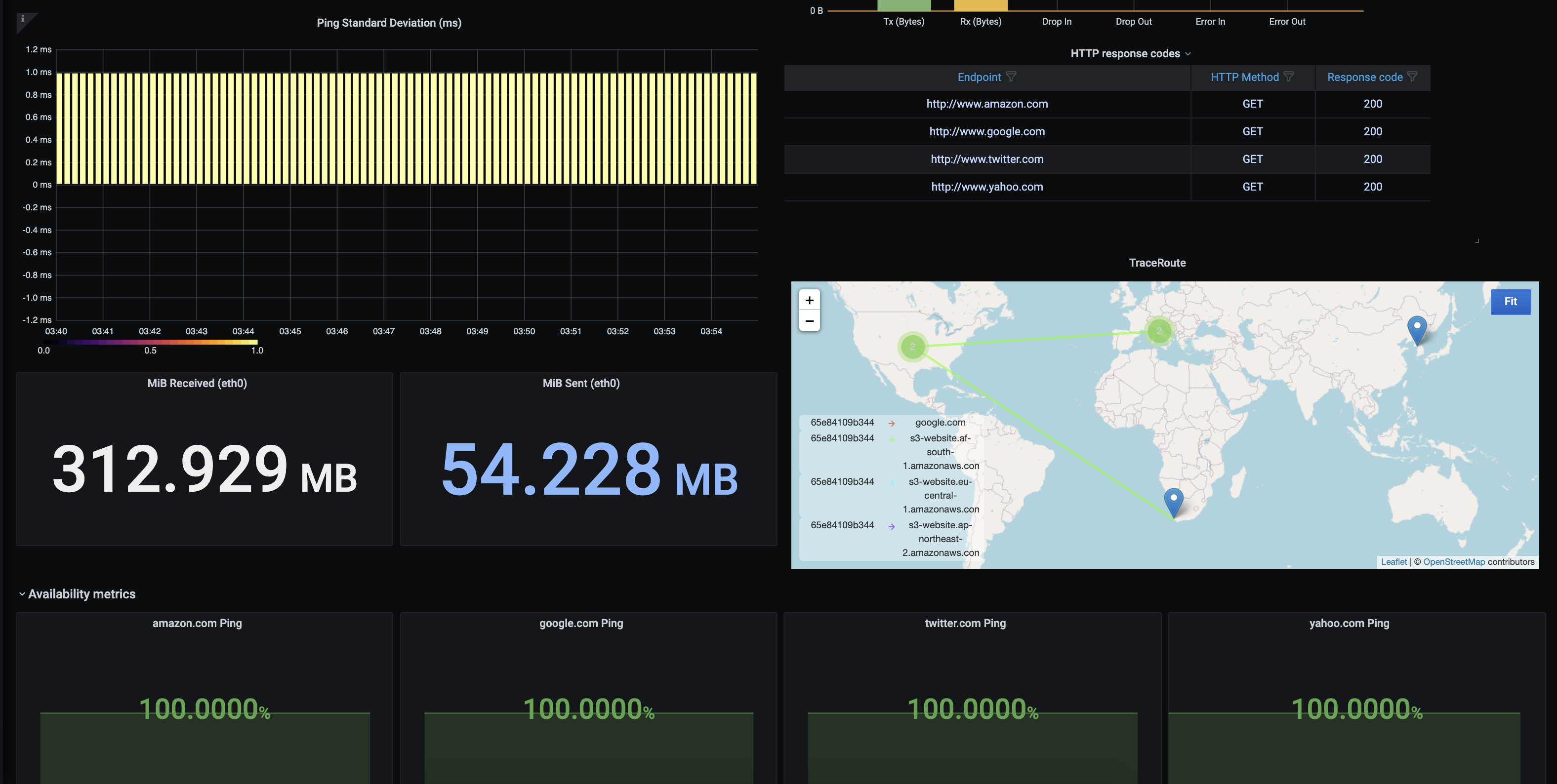

ISP-Checker implements a set of Telegraf checks that send metrics to InfluxDB (an open-source time-series database). It runs several kinds of metric collectors that compute averages, aggregations, and integrals of the collected values, so that service quality can be assessed at a glance.

ISP-Checker tests ICMP packet loss, average DNS query resolution time, HTTP response times, ICMP latency and its standard deviation, and upload/download speed (using Speedtest-CLI). It also provides a graphical MTR/traceroute view.
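To make the ICMP metrics concrete, here is a minimal Python sketch of how packet loss, average latency, and standard deviation could be derived from a batch of ping round-trip times. It is an illustration only, not ISP-Checker's code: in ISP-Checker these values come from Telegraf, and the function name `summarize_ping` and the convention of marking a lost packet with `None` are assumptions made for this example.

```python
import statistics
from typing import Optional, Sequence


def summarize_ping(rtts_ms: Sequence[Optional[float]]) -> dict:
    """Summarize one batch of ping probes.

    Hypothetical helper: each entry is a round-trip time in milliseconds,
    or None when no reply was received for that probe.
    """
    sent = len(rtts_ms)
    replies = [rtt for rtt in rtts_ms if rtt is not None]

    # Packet loss is the share of probes that never got a reply.
    loss_pct = 100.0 * (sent - len(replies)) / sent if sent else 0.0

    # Latency statistics only make sense when at least one reply arrived.
    avg_ms = statistics.fmean(replies) if replies else None
    # Population standard deviation of the observed round-trip times.
    stddev_ms = statistics.pstdev(replies) if replies else None

    return {
        "packets_sent": sent,
        "packets_received": len(replies),
        "loss_pct": loss_pct,
        "avg_ms": avg_ms,
        "stddev_ms": stddev_ms,
    }


if __name__ == "__main__":
    # Four probes, one of them lost.
    print(summarize_ping([10.0, 20.0, None, 30.0]))
```

A high standard deviation with low packet loss points to jitter rather than dropped traffic, which is why the two values are worth tracking separately.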

It is easily extensible and built on top of Docker, which makes it portable and easy to run anywhere. All components needed to perform the checks are imported automatically.

MTR and Speedtest-CLI must be installed as dependencies.

Check https://github.com/fmdlc/ISP-Checker for more information.
