Important: This documentation is about an older version. It's relevant only to the release noted; many of the features and functions have been updated or replaced. Please view the current version.

Caching

Caching is mainly used to improve query performance by storing bloom filters of all backend blocks which are accessed on every query.

Tempo uses an external cache to improve query performance. The supported implementations are Memcached and Redis.

Memcached

Memcached is one of the cache implementations supported by Tempo. It is used by default in the Tanka and Helm examples; see Deploying Tempo.

Connection limit

As a cluster grows in size, the number of Tempo instances connecting to the cache servers also increases. By default, Memcached has a connection limit of 1024. If this limit is surpassed, new connections are refused. To resolve this, increase the connection limit of Memcached.
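As a rough illustration of why a growing cluster runs into the limit, the sketch below estimates the worst-case number of connections a single Memcached server sees. The function names and the per-instance connection count are hypothetical; the real figure depends on the client settings of each Tempo component.

```python
# Default Memcached connection limit, as stated above.
MEMCACHED_DEFAULT_CONN_LIMIT = 1024


def estimated_connections(tempo_instances: int, conns_per_instance: int) -> int:
    """Worst-case connections seen by one Memcached server.

    Assumes every Tempo instance connects to every cache server and
    opens up to `conns_per_instance` connections (a hypothetical figure).
    """
    return tempo_instances * conns_per_instance


def exceeds_limit(
    tempo_instances: int,
    conns_per_instance: int,
    limit: int = MEMCACHED_DEFAULT_CONN_LIMIT,
) -> bool:
    """Return True if the estimate is above the configured connection limit."""
    return estimated_connections(tempo_instances, conns_per_instance) > limit
```

With these assumed numbers, 20 instances at 16 connections each stay well under the default limit, while 80 instances would exceed it.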

These errors can be observed using the cortex_memcache_request_duration_seconds_count metric.

For example, by using the following query:

sum by (status_code) (

rate(cortex_memcache_request_duration_seconds_count{}[$__rate_interval])

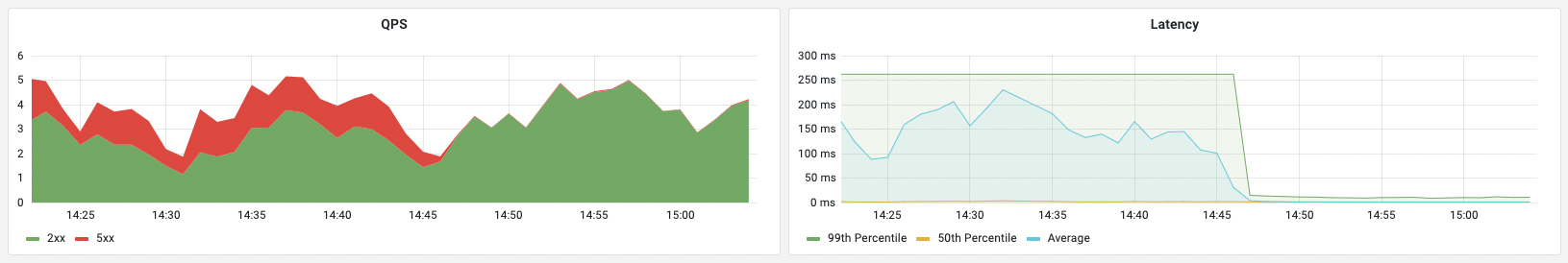

)This metric is also shown in the monitoring dashboards (the left panel):

Note that already open connections continue to function; only new connections are refused.

Additionally, Memcached will log the following errors when it can’t accept any new connections:

accept4(): No file descriptors available
Too many open connections

When using the memcached_exporter, the number of open connections can be observed at memcached_current_connections.

Cache size control

The Tempo querier accesses the bloom filters of all blocks while searching for a trace. This essentially requires the cache to be at least as large as the total size of the bloom filters (the working set). However, in larger deployments, the working set might be larger than the desired cache size. When that happens, the eviction rate on the cache grows high and the hit rate drops. Not nice!

Tempo provides two config parameters to filter which items are stored in the cache.

# Min compaction level of block to qualify for caching bloom filter
# Example: "cache_min_compaction_level: 2"
[cache_min_compaction_level: <int>]

# Max block age to qualify for caching bloom filter
# Example: "cache_max_block_age: 48h"
[cache_max_block_age: <duration>]

Using a combination of these config options, we can narrow down on which bloom filters are cached, thereby reducing our cache eviction rate, and increasing our cache hit rate. Nice!
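To make the effect of the two parameters concrete, here is a minimal sketch of the filtering rule as the parameter descriptions suggest it: a block's bloom filter is cached only if its compaction level is at least the minimum and the block is no older than the maximum age. The function name is hypothetical, and two details are assumptions rather than facts from this page: that a zero value disables a filter, and that age is measured from a single block timestamp.

```python
from datetime import datetime, timedelta, timezone


def should_cache_bloom(
    compaction_level: int,
    block_time: datetime,
    now: datetime,
    min_compaction_level: int = 0,
    max_block_age: timedelta = timedelta(0),
) -> bool:
    """Return True if a block's bloom filter qualifies for caching.

    Assumption: a value of 0 (or a zero duration) means the
    corresponding filter is disabled.
    """
    # Filter 1: the block must have reached the minimum compaction level.
    if min_compaction_level > 0 and compaction_level < min_compaction_level:
        return False
    # Filter 2: the block must not be older than the maximum age.
    if max_block_age > timedelta(0) and now - block_time > max_block_age:
        return False
    return True


# Example: settings equivalent to "cache_min_compaction_level: 2"
# and "cache_max_block_age: 48h".
now = datetime(2024, 1, 10, tzinfo=timezone.utc)
recent_compacted = should_cache_bloom(
    compaction_level=2,
    block_time=now - timedelta(hours=24),
    now=now,
    min_compaction_level=2,
    max_block_age=timedelta(hours=48),
)
```

Under these assumptions, a level-2 block that is 24 hours old is cached, while a level-1 block of the same age, or a level-2 block that is 72 hours old, is not.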

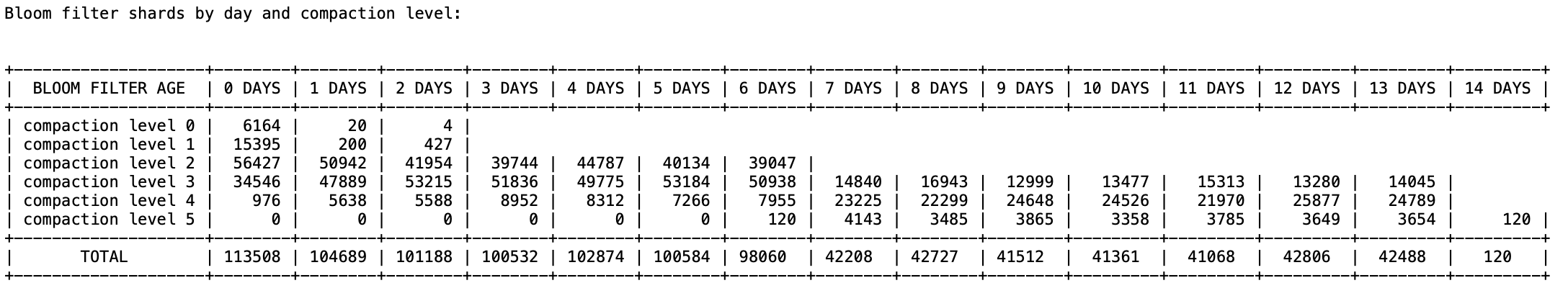

So how do we decide the values of these config parameters? We have added a new command to tempo-cli that prints a summary of bloom filter shards per day and per compaction level. The result looks something like this:

The above image shows the bloom filter shards over 14 days and 6 compaction levels. This can be used to decide the above configuration parameters.
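One way to turn such a summary into concrete values is to count how many bloom filter shards would still be cached for a candidate pair of settings and compare that with the cache capacity. The sketch below assumes the summary has been copied by hand into a nested list; the function names, the sample numbers, and the shard size are all hypothetical and are not output from tempo-cli.

```python
def cached_shards(summary, min_compaction_level, max_block_age_days):
    """Count bloom filter shards that pass both filters.

    summary[day][level] is the shard count for blocks that are `day`
    days old at compaction level `level` (day 0 is today). Whole-day
    buckets make this an approximation of the real age cutoff.
    """
    total = 0
    for day, per_level in enumerate(summary):
        if day >= max_block_age_days:
            continue  # blocks in this bucket are too old to cache
        for level, shards in enumerate(per_level):
            if level >= min_compaction_level:
                total += shards
    return total


def estimated_cache_bytes(shard_count, shard_size_bytes):
    """Approximate cache footprint, assuming uniformly sized shards."""
    return shard_count * shard_size_bytes


# Hypothetical summary: 3 days x 3 compaction levels.
example_summary = [
    [40, 10, 2],  # day 0
    [5, 20, 6],   # day 1
    [0, 8, 12],   # day 2
]

# Candidate settings: min compaction level 1, max block age 2 days.
candidate = cached_shards(example_summary, min_compaction_level=1, max_block_age_days=2)
# Assumed shard size of 100 KiB; check the value configured in your deployment.
candidate_bytes = estimated_cache_bytes(candidate, 100 * 1024)
```

Repeating the calculation for a few candidate pairs shows which combination keeps the estimated working set below the size of the cache.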
