Databricks datasource for Grafana

The Databricks datasource allows a direct connection to Databricks to query and visualize Databricks data in Grafana.

This datasource provides a SQL editor to format and color code your SQL statements.

Installation

For detailed instructions on how to install the plugin on Grafana Cloud or locally, please checkout the Plugin installation docs.

Note: This plugin uses dynamic links for Credentials authentication (deprecated). We suggest using Token authentication. If you run the plugin on bare Alpine Linux, using Credentials authentication it will not work. If for some reason Token based auth is not an option and Alpine Linux is a requirement, we suggest using our Alpine images.

Manual configuration

Once the plugin is installed on your Grafana instance, follow these instructions to add a new Databricks data source, and enter configuration options.

With a configuration file

It is possible to configure data sources using configuration files with Grafana’s provisioning system. To read about how it works, including all the settings that you can set for this data source, refer to Provisioning Grafana data sources.

Here are some provisioning examples for this data source using basic authentication:

apiVersion: 1

datasources:

- name: Databricks

type: grafana-databricks-datasource

jsonData:

host: community.cloud.databricks.com

httpPath: path-from-databricks-odbc-settings

secureJsonData:

token: password/personal-tokenTime series

Time series visualization options are selectable after adding a datetime

field type to your query. This field will be used as the timestamp. You can

select time series visualizations using the visualization options. Grafana

interprets timestamp rows without explicit time zone as UTC. Any column except

time is treated as a value column.

Multi-line time series

To create multi-line time series, the query must return at least 3 fields in the following order:

- field 1:

datetimefield with an alias oftime - field 2: value to group by

- field 3+: the metric values

For example:

SELECT log_time AS time, machine_group, avg(disk_free) AS avg_disk_free

FROM mgbench.logs1

GROUP BY machine_group, log_time

ORDER BY log_timeTemplates and variables

To add a new Databricks query variable, refer to Add a query variable.

After creating a variable, you can use it in your Databricks queries by using Variable syntax. For more information about variables, refer to Templates and variables.

Macros in Databricks Query

| Macro example | Description |

|---|---|

$____interval_long | Converts Grafana’s interval to INTERVAL DAY TO SECOND literal. Applicable to Spark SQL window grouping expression. |

$__time(dateColumn) | Will be replaced by an expression to convert to a UNIX timestamp and rename the column to time_sec. For example, UNIX_TIMESTAMP(dateColumn) as time_sec |

$__timeEpoch(dateColumn) | Will be replaced by an expression to convert to a UNIX timestamp and rename the column to time_sec. For example, UNIX_TIMESTAMP(dateColumn) as time_sec |

$__timeFilter(dateColumn) | Will be replaced by a time range filter using the specified column name. For example, dateColumn BETWEEN FROM_UNIXTIME(1494410783) AND FROM_UNIXTIME(1494410983) |

$__timeFrom() | Will be replaced by the start of the currently active time selection. For example, FROM_UNIXTIME(1494410783) |

$__timeTo() | Will be replaced by the end of the currently active time selection. For example, FROM_UNIXTIME(1494410983) |

$__timeGroup(dateColumn,'5m') | Will be replaced by an expression usable in GROUP BY clause. For example, *cast(cast(UNIX_TIMESTAMP(dateColumn)/(300) as signed)*300 as signed),* |

$__timeGroup(dateColumn,'5m', 0) | Same as above but with a fill parameter so missing points in that series will be added by grafana and 0 will be used as value. |

$__timeGroup(dateColumn,'5m', NULL) | Same as above but NULL will be used as value for missing points. |

$__timeGroup(dateColumn,'5m', previous) | Same as above but the previous value in that series will be used as fill value if no value has been seen yet NULL will be used (only available in Grafana 5.3+). |

$__timeGroupAlias(dateColumn,'5m') | Will be replaced identical to $__timeGroup but with an added column alias (only available in Grafana 5.3+). |

$__unixEpochFilter(dateColumn) | Will be replaced by a time range filter using the specified column name with times represented as Unix timestamp. For example, dateColumn > 1494410783 AND dateColumn < 1494497183 |

$__unixEpochFrom() | Will be replaced by the start of the currently active time selection as Unix timestamp. For example, 1494410783 |

$__unixEpochTo() | Will be replaced by the end of the currently active time selection as Unix timestamp. For example, 1494497183 |

$__unixEpochNanoFilter(dateColumn) | Will be replaced by a time range filter using the specified column name with times represented as nanosecond timestamp. For example, dateColumn > 1494410783152415214 AND dateColumn < 1494497183142514872 |

$__unixEpochNanoFrom() | Will be replaced by the start of the currently active time selection as nanosecond timestamp. For example, 1494410783152415214 |

$__unixEpochNanoTo() | Will be replaced by the end of the currently active time selection as nanosecond timestamp. For example, 1494497183142514872 |

$__unixEpochGroup(dateColumn,'5m', [fillmode]) | Same as $__timeGroup but for times stored as Unix timestamp (only available in Grafana 5.3+). |

$__unixEpochGroupAlias(dateColumn,'5m', [fillmode]) | Same as above but also adds a column alias (only available in Grafana 5.3+). |

$__interval_long macro

In some cases, you may want to use window grouping with Spark SQL.

Example:

SELECT window.start, avg(aggqueue) FROM a17

GROUP BY window(_time, '$__interval_long')will be translated into the following query based on dashboard interval.

SELECT window.start, avg(aggqueue) FROM a17

GROUP BY window(_time, '2 MINUTE')Macro examples

Below are examples when grafana has a 1m interval.

| Format | Expands to |

|---|---|

$__interval_long | 1 MINUTE |

Oauth Configuration

Oauth Passthrough with Microsoft Extra ID (Azure Active Directory)

Gather information

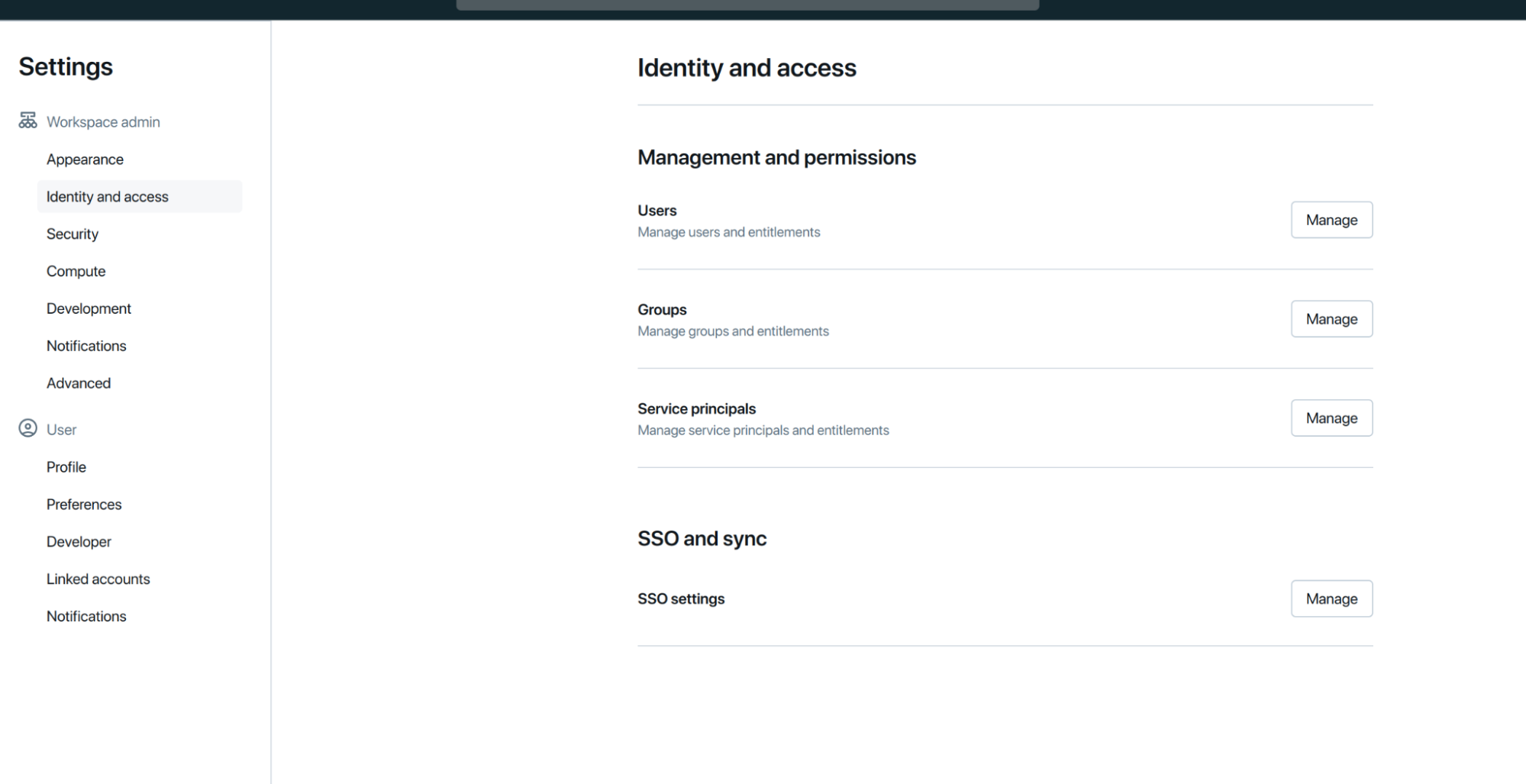

- Go to your Databricks Dashboard as an administrator under Settings > Identity and access > SSO and sync > SSO Settings > Manage:

![Databricks dashboard]()

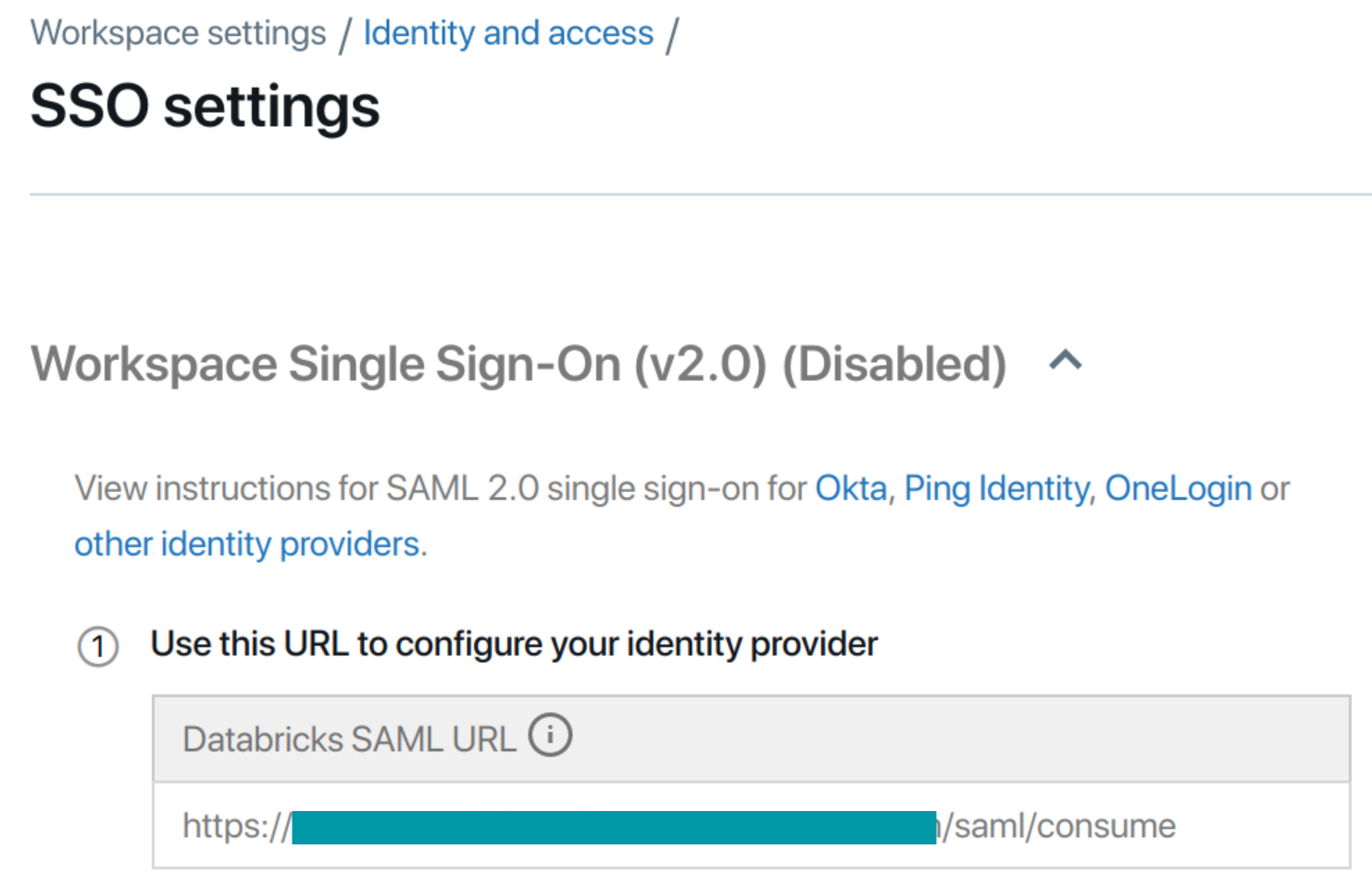

- Copy the Databricks SAML URL. (Don’t close the tab)

![Databricks dashboard]()

- Create your App Registration:

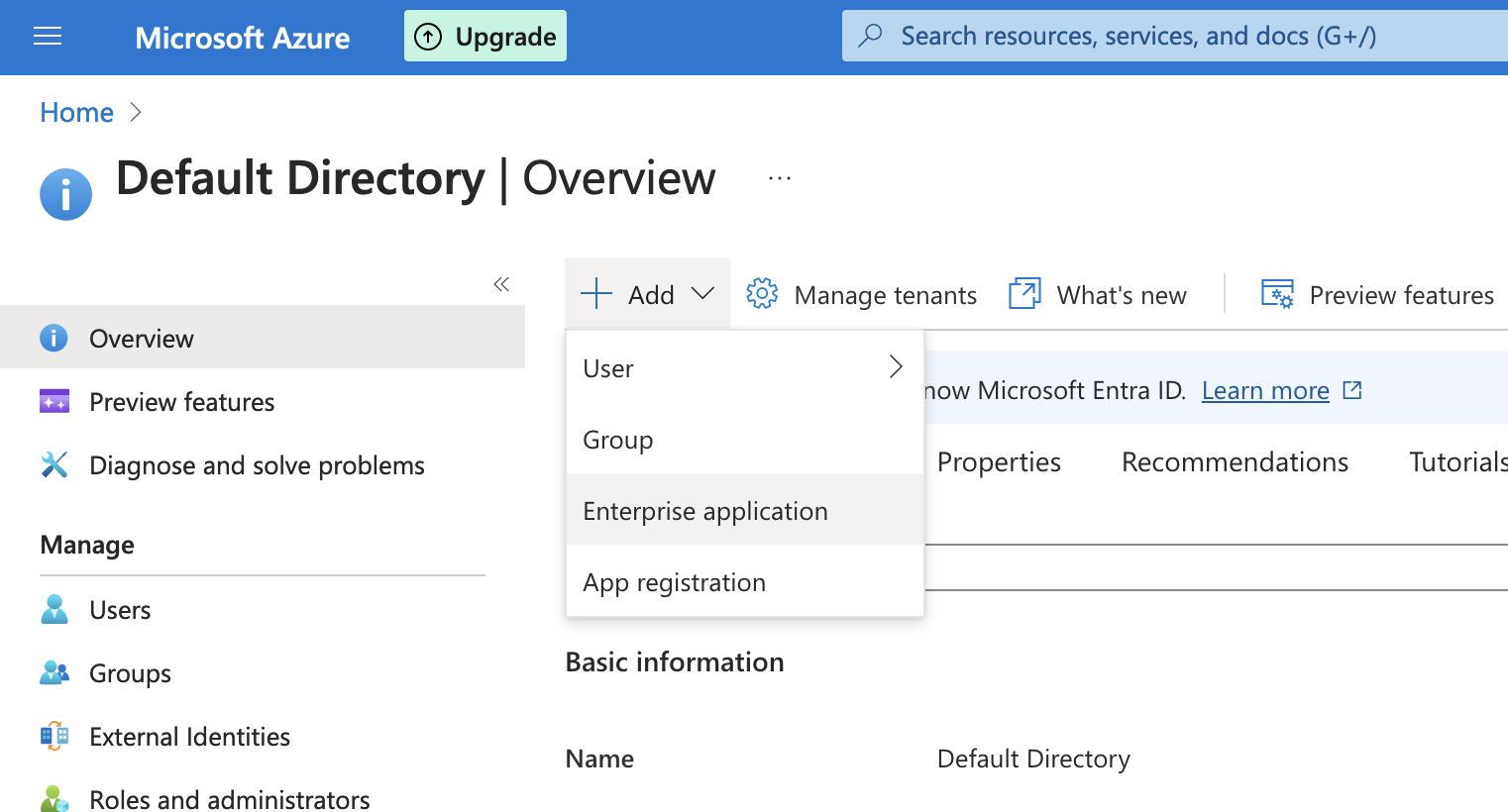

- Go to the Azure Portal: https://portal.azure.com/

- Microsoft Extra ID > Add > Enterprise Application

![Microsoft Extra ID Add Enterprise Application screen]()

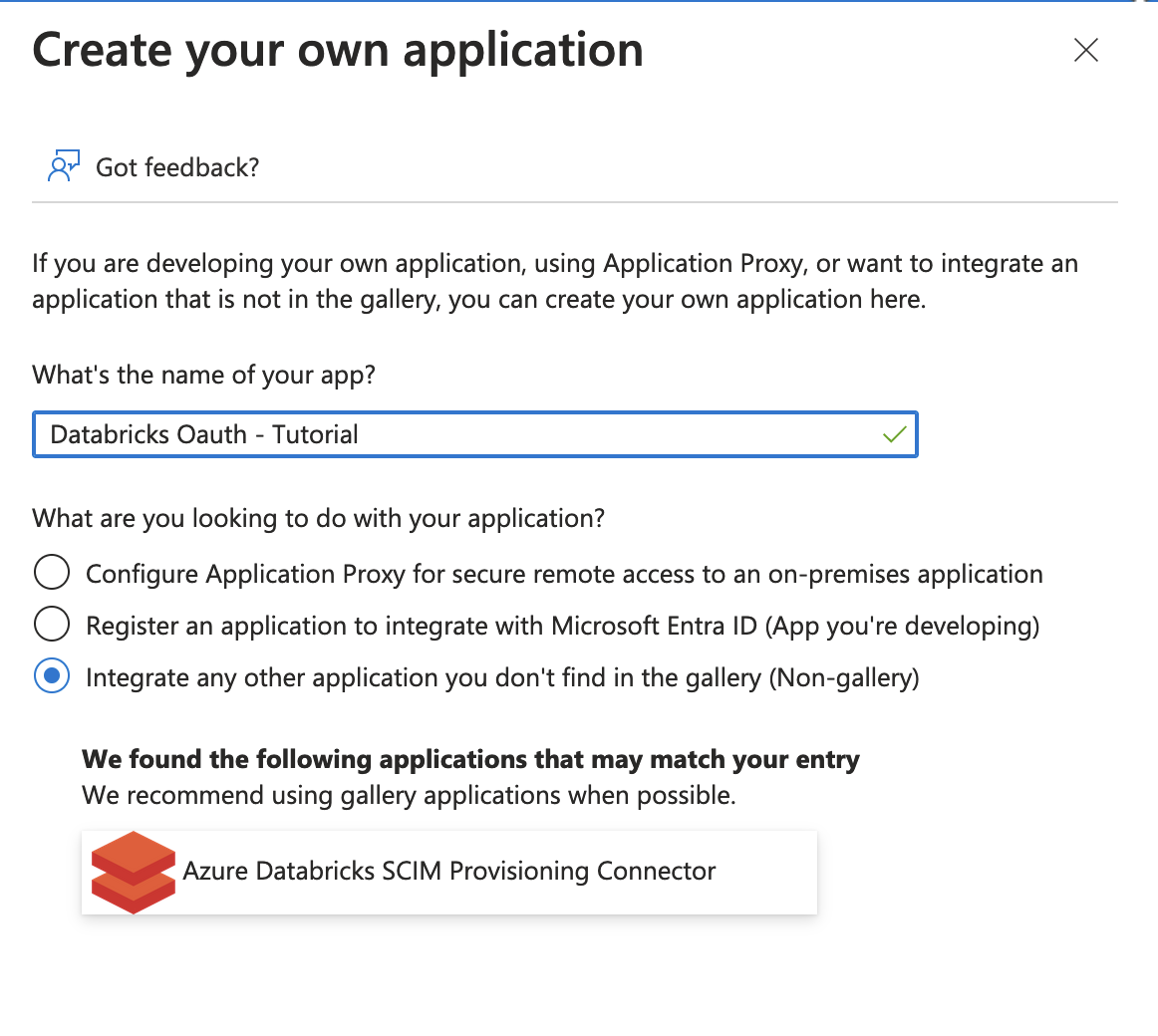

- Enter a name for the application. When asked “What are you looking to do with your application?”, choose Integrate any other application you don’t find in the gallery.

![Databricks create application screen]()

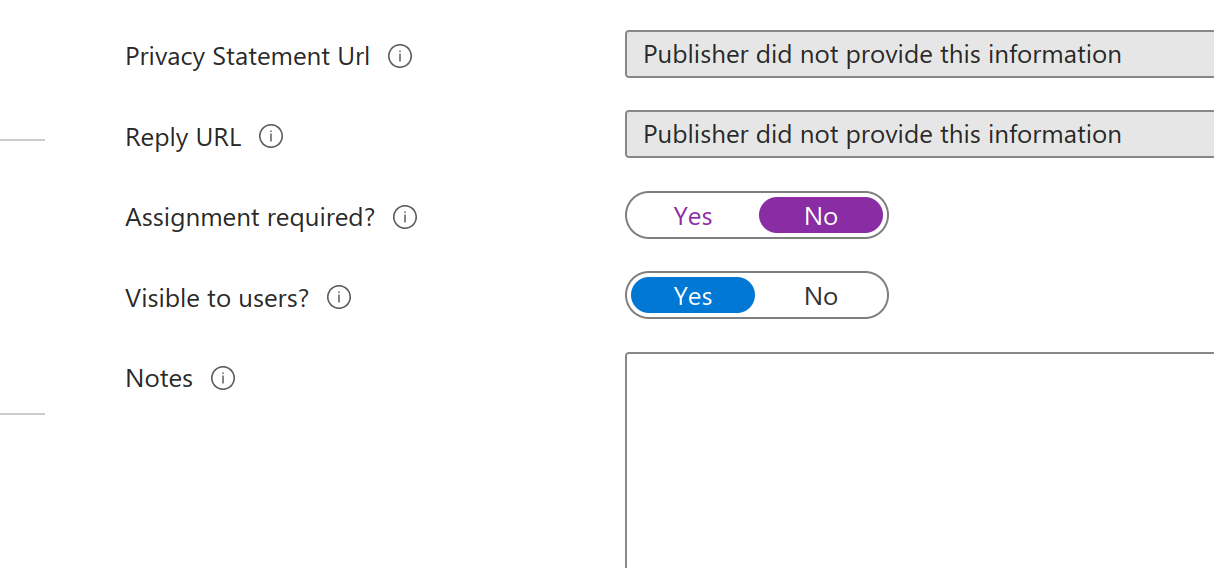

- Under Properties set Assignment Required to NO:

![Databricks create application screen]()

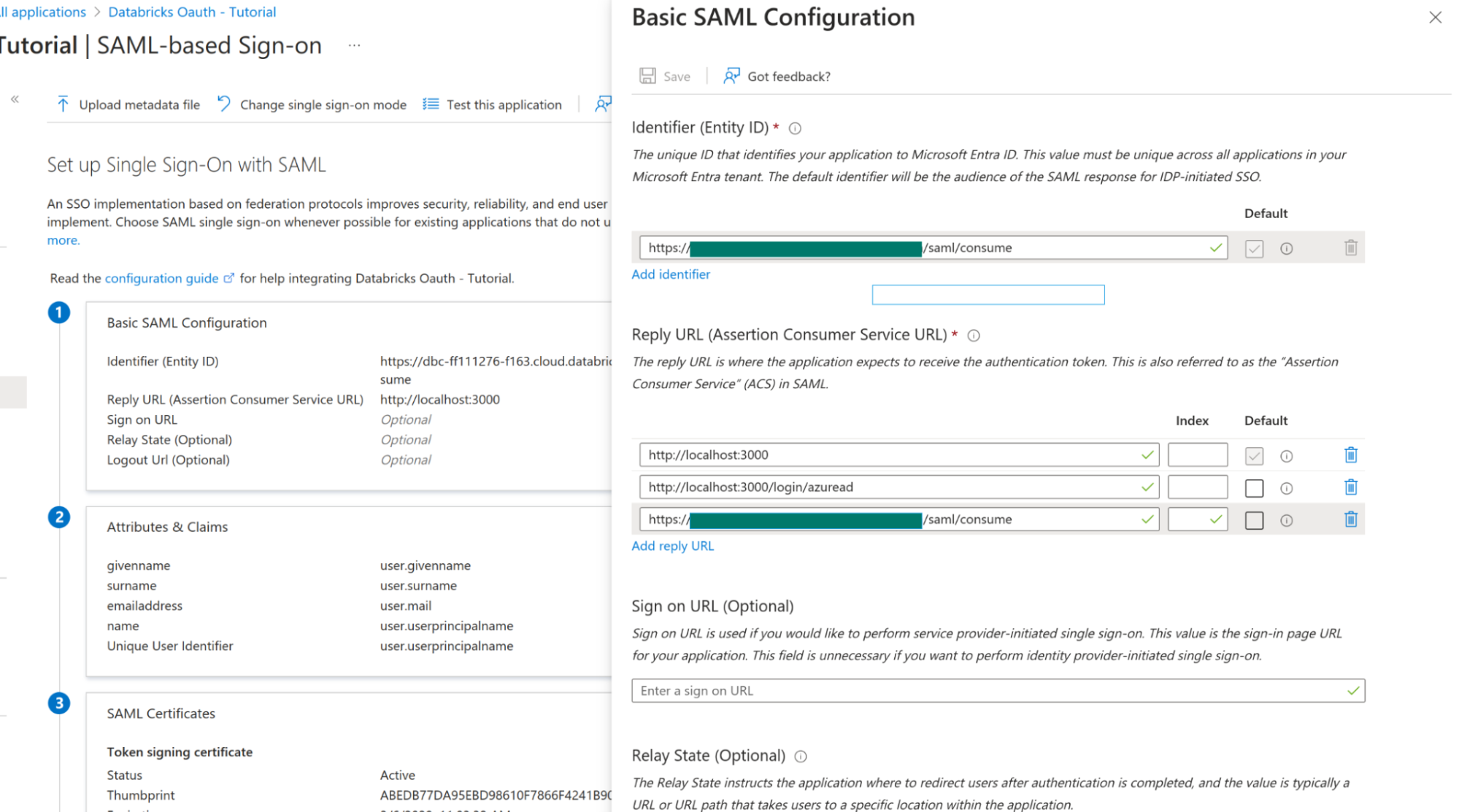

- Under Single-sign on click on SAML and under Basic SAML Configuration click edit:

- Set Identifier ID to the Databricks SAML URL saved from earlier and for Reply URLS set it also to the Databricks SAML URL as well as the Grafana redirect URLs from this guide

![Basic SAML Configuration]()

- Under SAML Signin Certificate click Edit and set Signin Option to Sign SAML response and assertion. Click Save.

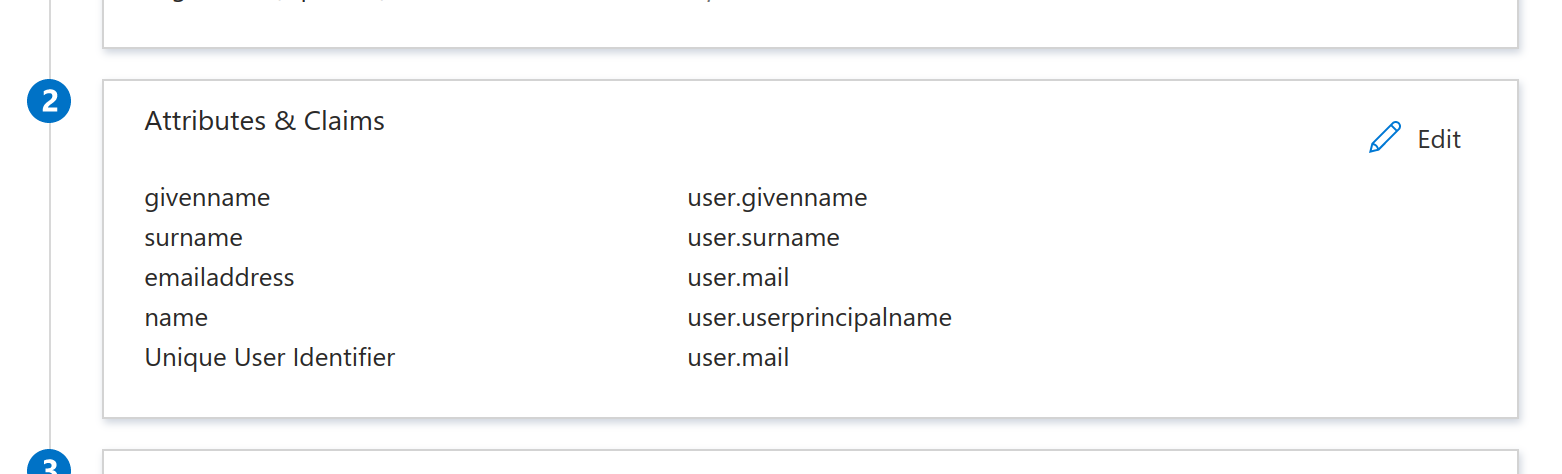

- Under Attributes & Claims click edit. Set the Unique User Identifier (Name ID) field to user.mail

![Basic SAML Configuration]()

- Under SAML Certificates next to Certificate Base 64 click Download. This will download a

.cerfile. Copy the contents for further steps in Databricks. - Also save Login URL and Microsoft Extra ID Identifier for Databricks

- Back in your Databricks SSO Settings tab

- Single Sign-On URL => Login URL (from previous step)

- Identity Provider Entity ID => Microsoft Extra Identifier (from previous step)

- X.509 Certificate => Contents from downloaded certificate

Complete Grafana Oauth Setup

- On the Azure Portal go to App Registrations > All Applications and find your application name

- On the overview page note the Application (client) ID. For config in your

conf.inifile this is the Oauth client ID as well of Directory (tenant) ID. - Click on Endpoints:

- Note the OAuth 2.0 authorization endpoint (v2) URL. This is the authorization URL.

- Note the OAuth 2.0 token endpoint (v2). This is the token URL.

- Click Certificates & secrets, then add a new entry under Client secrets with the following configuration.

- Description: Grafana OAuth

- Expires: Never

- Click Add then copy the key value. This is the OAuth client secret.

Define Required Roles

- Go to Azure Active Directory and then to Enterprise Applications.

- Search for your application and click it.

- Click Users and Groups.

- Click Add user/group to add a user or group to the Grafana roles.

- Now go back to the Azure Active Directory and then to App Registrations. Search for your app and click it.

- Go to App Roles and configure roles like described in here.

- Now Configure AD Oauth in Grafana Configuration file as described in here.

Forward Token to Users

In your grafana.ini file set:

[azure]

forward_settings_to_plugins = grafana-azure-monitor-datasource, prometheus, grafana-azure-data-explorer-datasource, mssql, grafana-databricks-datasourceFinally, under the datasource settings page set the Authentication Type to OAuth Passthrough.

Learn more

- Add Annotations.

- Configure and use Templates and variables.

- Add Transformations.

- Set up alerting; refer to Alerts overview.