Introduction to Kubernetes Monitoring

Kubernetes presents challenges for both of the following approaches to operations:

- Reactive problem solving: When you react to issues without a monitoring system, you must guess the probable sources, then use trial and error to test fixes. This increases the workload, especially for newcomers who are unfamiliar with the system. The more difficult it is to troubleshoot, the more downtime increases and the more burden is placed on experienced staff.

- Proactive management: Resources that are not optimized can significantly impact both budget and performance. If a fleet is underprovisioned, the performance and availability of applications and services are at serious risk. Underprovisioning leads to applications that lag, underperform, are unstable, or do not function at all. Fleets that are overprovisioned waste money and resources.

Reactive response benefits

Quick issue identification, alerts, data correlation, and other features are built into Kubernetes Monitoring to streamline troubleshooting.

Priority issues at forefront

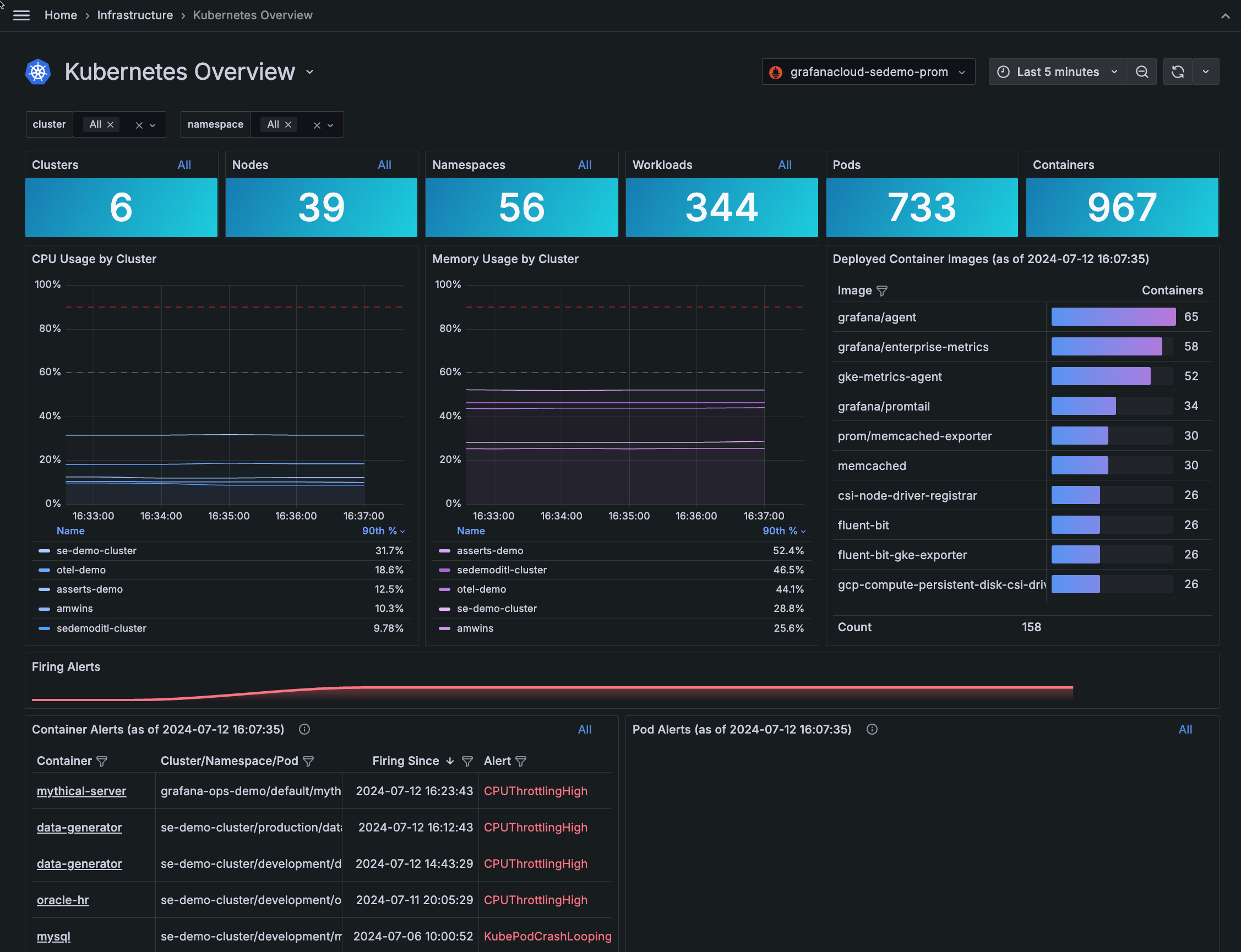

The Kubernetes Overview page provides a high-level look at counts for Kubernetes objects, CPU and memory usage by Cluster, and firing alerts for containers and Pods.

You can filter this view by Clusters and namespaces, then identify issues that require attention to begin your problem solving.

Real-time alerts

Real-time alerts inform you as soon as problems begin, so your users are not the first to find an issue. Alerts and alert rules are available out of the box, and you can customize them to fit your environment.

Logs and metrics correlation

As with metrics, Kubernetes doesn’t provide a native storage solution for logs. Logs help you identify the root cause of an issue more quickly, and troubleshooting without them is incomplete. Logs from your application and from Kubernetes components are often the best way to find reproduction steps and work toward the root cause.

Kubernetes Monitoring uses Grafana Loki as its log aggregator, built to be compatible with Prometheus. Since Loki and Prometheus share labels, you can correlate metrics and logs to identify root causes faster. This also removes the burden of setting up and configuring multiple technologies.
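As a rough illustration of why shared labels help, the following sketch builds one label selector and reuses it in a PromQL query and a LogQL query. The label names (`namespace`, `pod`), their values, and the helper `label_selector` are assumptions made for this example; the labels actually present in your Loki and Prometheus data depend on how collection is configured.

```python
def label_selector(labels: dict[str, str]) -> str:
    """Render a label matcher set that is valid in both PromQL and LogQL."""
    parts = []
    for name, value in sorted(labels.items()):
        # Escape backslashes and double quotes so the matcher stays well-formed.
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        parts.append(f'{name}="{escaped}"')
    return "{" + ", ".join(parts) + "}"


# Hypothetical label values for a single Pod.
labels = {"namespace": "checkout", "pod": "cart-7d9f"}
selector = label_selector(labels)

# PromQL: per-second CPU usage for the containers that match the selector.
promql = f"rate(container_cpu_usage_seconds_total{selector}[5m])"

# LogQL: log lines from the same Pod that contain the string "error".
logql = f'{selector} |= "error"'

print(promql)
print(logql)
```

Because both queries are derived from the same selector, a spike seen in the metric query can be followed directly to the log lines of the same Pod.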

Proactive management benefits

The features of Kubernetes Monitoring enable you to create and implement a strategy for proactive management.

Early error detection

Log files, traces, and performance metrics provide visibility into what’s happening in your Cluster. When you proactively monitor your Kubernetes Clusters, you have advance warning of usage spikes and increasing error rates. With early error detection, you can solve issues before they affect your users.

Cost visibility and management

Nodes, load balancers, and Persistent Volumes usually incur separate costs from your provider. Kubernetes Monitoring provides visibility into these costs so you can manage and reduce them.

Resource efficiency management

The insight you gain into real-world Cluster usage means you can monitor your Kubernetes Cluster for resource contention or uneven application Pod distribution across your Nodes. Then you can make simple scheduling adjustments, such as setting affinities and anti-affinities, to significantly enhance performance and reliability.
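One such scheduling adjustment is a Pod anti-affinity rule that keeps replicas of the same application on different Nodes. The sketch below builds that stanza as a Python dictionary and prints it as JSON. The `app` label key, the value `cart`, and the helper `pod_anti_affinity` are assumptions for illustration; the field names follow the Kubernetes Pod spec.

```python
import json


def pod_anti_affinity(app_label: str) -> dict:
    """Build an affinity stanza that keeps Pods with the same app label on separate Nodes."""
    return {
        "podAntiAffinity": {
            # Hard requirement: the scheduler will not place two matching Pods
            # in the same topology domain.
            "requiredDuringSchedulingIgnoredDuringExecution": [
                {
                    "labelSelector": {"matchLabels": {"app": app_label}},
                    # One topology domain per Node.
                    "topologyKey": "kubernetes.io/hostname",
                }
            ]
        }
    }


# "cart" is a hypothetical application label.
affinity = pod_anti_affinity("cart")
print(json.dumps({"affinity": affinity}, indent=2))
```

The printed structure belongs under `spec.template.spec` of a Deployment. A hard requirement can leave Pods unschedulable when there are fewer Nodes than replicas, so `preferredDuringSchedulingIgnoredDuringExecution` may be the safer choice in small Clusters.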

You can mitigate the threat of an unstable infrastructure by monitoring resource usage of CPU, RAM, and storage:

- Ensure that there are enough allocated resources. This decreases the risk of Pod or container eviction as well as degraded performance of your microservices and applications.

- Eliminate unused or stranded resources.
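The two checks above can be sketched as a comparison of observed usage against requested resources. This is a minimal illustration, not how Kubernetes Monitoring computes efficiency: the `ContainerUsage` type, the sample values, and the 30% and 90% thresholds are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class ContainerUsage:
    name: str
    cpu_request_cores: float  # CPU requested in the container spec
    cpu_used_cores: float     # observed average CPU usage


def classify(c: ContainerUsage, low: float = 0.3, high: float = 0.9) -> str:
    """Label a container by how much of its CPU request it actually uses."""
    if c.cpu_request_cores <= 0:
        return "no request set"
    ratio = c.cpu_used_cores / c.cpu_request_cores
    if ratio > high:
        return "underprovisioned"  # little headroom: risk of throttling or eviction
    if ratio < low:
        return "overprovisioned"   # most of the request sits unused
    return "ok"


# Hypothetical sample data.
containers = [
    ContainerUsage("api", cpu_request_cores=0.5, cpu_used_cores=0.48),
    ContainerUsage("batch", cpu_request_cores=2.0, cpu_used_cores=0.2),
    ContainerUsage("web", cpu_request_cores=1.0, cpu_used_cores=0.6),
]

for c in containers:
    print(f"{c.name}: {classify(c)}")
```

The same comparison applies to memory and storage by swapping in the corresponding request and usage values.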

Node health and resource management

Kubernetes Nodes are the machines in a Cluster that run your applications and store your data. Unhealthy Nodes can cause cascading errors, unhealthy Deployments, or other events that may be frequent or infrequent. There are two types of Nodes in a Kubernetes Cluster:

- Worker Nodes: To host your application containers, grouped as Pods

- Control plane Nodes: To run the services that are required to control the Kubernetes Cluster

While Clusters act as the spine of your Kubernetes architecture, Nodes form the vertebrae. A healthy backbone of efficient Nodes is required for your Clusters to stay up and your applications to run fast. One way to keep Nodes healthy is to rely on expensive autoscalers that purchase ever more cloud resources and span more Nodes. That gives you seemingly endless resources, but doesn’t pinpoint where the actual issues are. With Kubernetes Monitoring, you can take a data-driven approach for better capacity utilization, resource management, and Pod placement.

Resource usage forecasts

You need to know the number of Nodes, load balancers, and Persistent Volumes that are currently deployed in your cloud account. Each of these objects usually incurs a separate cost from your provider. Auto-scaling architectures let you adapt in real time to changing demand, but this can also create rapidly spiraling costs.

By looking at a prediction of resource usage, you have more information to forecast how much of a particular resource is required for a given project or activity. This insight allows for better planning, budgeting, and cost estimations.
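To make the idea of a usage prediction concrete, the sketch below fits a straight line to evenly spaced usage samples and extrapolates it. This is a deliberately simple stand-in (ordinary least squares on a linear trend), not the machine-learning model that Kubernetes Monitoring uses; the function name and the sample values are assumptions for illustration.

```python
def linear_forecast(samples: list[float], steps_ahead: int) -> float:
    """Extrapolate a least-squares line fitted to evenly spaced samples."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples to fit a trend")
    mean_x = (n - 1) / 2          # mean of the sample indexes 0..n-1
    mean_y = sum(samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(samples))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    intercept = mean_y - slope * mean_x
    # The last observed sample has index n - 1.
    return intercept + slope * (n - 1 + steps_ahead)


# Hypothetical daily CPU usage in cores for one Cluster.
daily_cpu_cores = [10.0, 12.0, 14.0, 16.0]
forecast = linear_forecast(daily_cpu_cores, steps_ahead=2)
print(f"Projected usage in 2 days: {forecast:.1f} cores")
```

A linear trend ignores daily and weekly seasonality, so treat a result like this as a rough planning figure rather than a capacity guarantee.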

What is out of the box

Kubernetes Monitoring out-of-the-box features include:

- Drilling into your data using a single interface

- Kubernetes Overview, showing a snapshot of Cluster, Node, Pod, and container counts, as well as any issues that need attention and the alerts associated with them

- Efficiency data throughout the app for examining and refining resource usage

- Cost data globally available for analyzing and managing your infrastructure costs and potential savings

- Embedded Alerts page where you can respond to and troubleshoot alerts, and select alerting rules to view and customize them

- Immediate, in-context access to other Grafana Cloud applications for quicker analysis and resolution

- Curated set of metrics to assist in preventing cardinality issues, and in-app Cardinality page for analyzing metrics

- Recording rules to increase the speed of queries and the evaluation of alerting rules

- Predictions for CPU and memory usage powered by machine learning

Get started

Get started easily by using a quick configuration process with the Grafana Kubernetes Monitoring Helm chart. When you configure with the Helm chart, there’s no manual setup, and the chart includes automatic updates for all components that it installs.

Other configuration methods

Other methods are available to configure Kubernetes Monitoring for your infrastructure data.

To configure data about an application running in Kubernetes, refer to Application metrics.