Introduction to Kubernetes Monitoring

Kubernetes environments are complex and change constantly. These moving parts make it hard for teams to catch issues quickly before they cause problems, or to manage their infrastructure efficiently. Kubernetes Monitoring gives you visibility into your infrastructure and the tools to fix problems fast and prevent them from happening again.

Reactive response benefits

Reactive problem solving helps teams respond to issues as they appear. Quick issue identification, alerts, data correlation, and other features are built into Kubernetes Monitoring to shorten troubleshooting time.

Priority issues at forefront

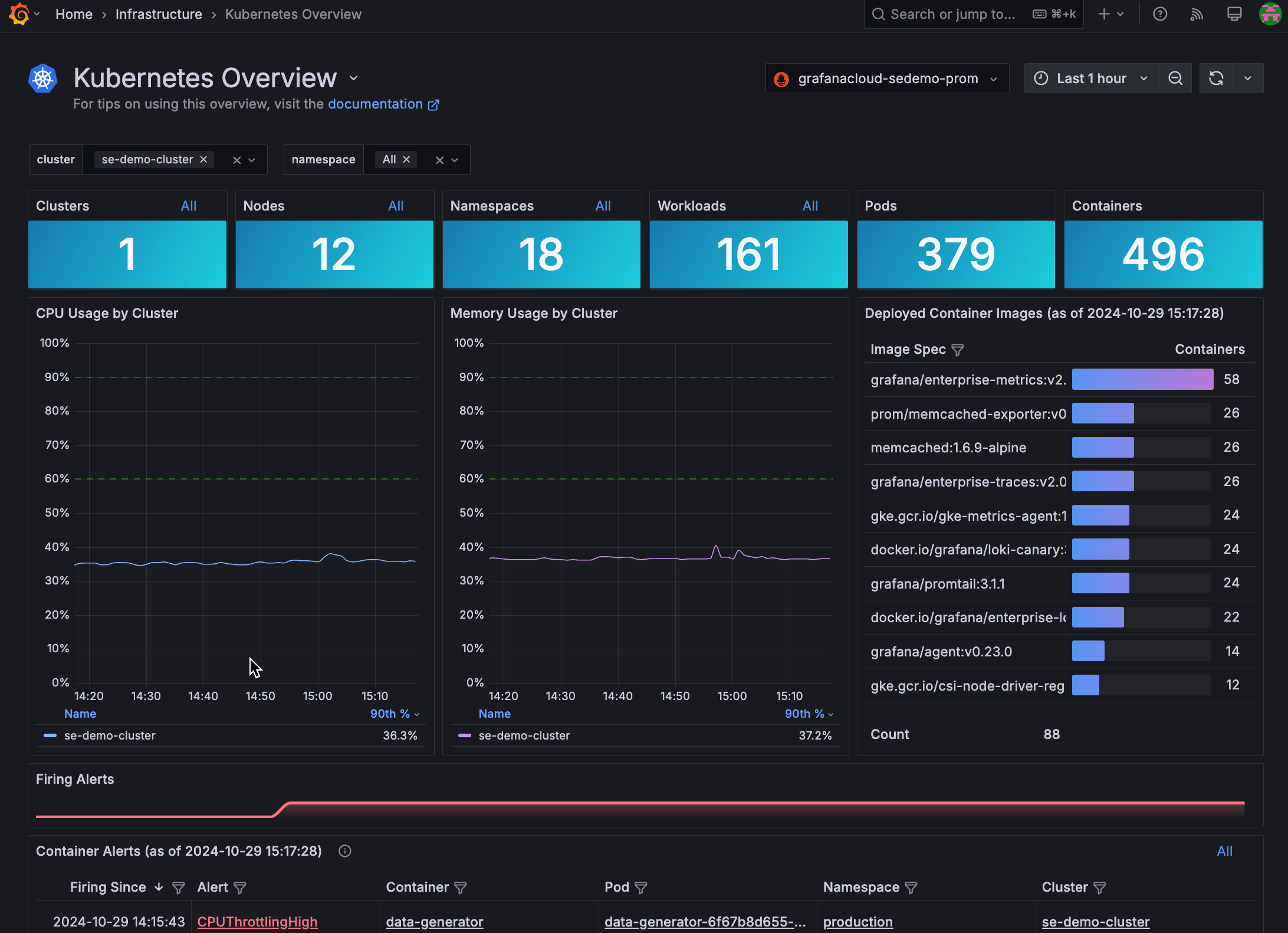

The Kubernetes Overview page provides a high-level look at counts for Kubernetes objects, CPU and memory usage by Cluster, and firing alerts for containers and Pods. You can filter this view by Clusters and namespaces, then identify issues that require attention to begin your problem solving.

Real-time alerts

Real-time alerts inform you as soon as problems begin. You can jump from alert to runbook for a quick solution, create your own alerts, and copy a built-in alert to customize it.

Logs and metrics correlation

While Kubernetes doesn’t provide a native storage solution for logs, Kubernetes Monitoring uses Grafana Loki as its log aggregator. Since Loki and Prometheus share labels, you can correlate metrics and logs to identify root causes faster without configuring and using multiple technologies.
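To illustrate why shared labels make correlation easier, the following minimal sketch builds a metrics query and a logs query from one label set. The label names and values (`cluster`, `namespace`, `pod`, `prod-1`, and so on) and the `"error"` line filter are assumptions chosen for illustration, not values taken from Kubernetes Monitoring itself.

```python
def label_selector(labels: dict[str, str]) -> str:
    """Render a label set as a selector that is valid in both PromQL and LogQL."""
    # Note: this sketch does not escape quotes or backslashes in label values.
    parts = [f'{name}="{value}"' for name, value in sorted(labels.items())]
    return "{" + ", ".join(parts) + "}"


def correlated_queries(labels: dict[str, str]) -> tuple[str, str]:
    """Return a (PromQL, LogQL) pair that targets the same workload."""
    selector = label_selector(labels)
    # CPU usage rate from the cAdvisor counter, restricted to the shared labels.
    promql = f"sum(rate(container_cpu_usage_seconds_total{selector}[5m]))"
    # Log lines from the same workload that contain the word "error".
    logql = f'{selector} |= "error"'
    return promql, logql


# Hypothetical label values for one Pod.
shared = {"cluster": "prod-1", "namespace": "checkout", "pod": "cart-7d9f"}
promql, logql = correlated_queries(shared)
print(promql)
print(logql)
```

Because both queries reuse the same selector, a spike seen in the metric can be checked against logs from exactly the same Pod without translating between naming schemes.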

Proactive management benefits

The features available in Kubernetes Monitoring help you create and implement a proactive strategy with a data-driven approach.

Early error detection

You can use built-in alerting for issues such as CPU throttling to learn which settings need fine-tuning. Network bandwidth and saturation are available by object. The time range selector in Kubernetes Monitoring provides a look into the history of an object, which shows patterns such as spikes. Outlier Pod detection can find Pods with CPU usage differences that may lead to issues.
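As a rough illustration of the two ideas above, the sketch below computes a CPU throttling ratio and flags Pods whose CPU usage differs sharply from their peers. It is not the method Kubernetes Monitoring uses: the median-absolute-deviation score, the `3.5` threshold, and the Pod names and usage figures are all assumptions made for this example.

```python
from statistics import median


def throttling_ratio(throttled_periods: float, total_periods: float) -> float:
    """Fraction of CFS scheduling periods in which a container was throttled."""
    if total_periods <= 0:
        return 0.0
    return throttled_periods / total_periods


def outlier_pods(cpu_by_pod: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return Pods whose CPU usage is far from the group median.

    Uses a modified z-score based on the median absolute deviation (MAD),
    which is less sensitive to the outliers themselves than a mean-based score.
    """
    values = list(cpu_by_pod.values())
    if len(values) < 3:
        return []  # too few Pods to say what "typical" looks like
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # at least half the Pods share the median value; skip scoring
    return sorted(
        pod
        for pod, value in cpu_by_pod.items()
        if 0.6745 * abs(value - med) / mad > threshold
    )


# Hypothetical CPU usage (in cores) for replicas of one workload.
usage = {"api-a": 0.21, "api-b": 0.19, "api-c": 0.20, "api-d": 0.22, "api-e": 0.95}
print(outlier_pods(usage))
print(throttling_ratio(250, 1000))
```

In practice, the inputs to `throttling_ratio` could come from counters such as `container_cpu_cfs_throttled_periods_total` and `container_cpu_cfs_periods_total`; a consistently high ratio suggests a CPU limit that is set too low.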

Cost visibility and management

Nodes, load balancers, and Persistent Volumes usually cost extra from your provider, making it important to keep track of them. Auto-scaling architectures let you adapt in real time to changing demand, but can lead to rapidly rising costs. Kubernetes Monitoring shows these costs so you can decide which costs can be reduced. With cost prediction, you can view potential future costs.

Resource efficiency management

You can reduce the risk of an unstable infrastructure by monitoring resource usage to:

- Ensure that there are enough allocated resources and decrease the risk of Pod eviction, as well as prevent declining performance of your microservices and applications.

- Eliminate unused or stranded resources.

Then you can make scheduling adjustments, such as setting affinities and anti-affinities, to improve performance and reliability.
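The comparison behind this kind of efficiency review can be sketched as follows: divide observed usage by the requested amount and flag containers at either extreme. The `ContainerCpu` type, the `0.3` and `0.9` thresholds, and the sample figures are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContainerCpu:
    name: str
    requested_cores: float  # CPU request from the Pod spec
    used_cores: float       # observed average CPU usage


def classify(container: ContainerCpu, low: float = 0.3, high: float = 0.9) -> str:
    """Label a container by how its CPU usage compares to its request."""
    if container.requested_cores <= 0:
        return "no-request"  # nothing to compare against
    utilization = container.used_cores / container.requested_cores
    if utilization < low:
        return "over-provisioned"   # requested capacity is mostly unused
    if utilization > high:
        return "under-provisioned"  # little headroom; performance may degrade
    return "ok"


# Hypothetical workloads.
containers = [
    ContainerCpu("cart", requested_cores=1.0, used_cores=0.1),
    ContainerCpu("search", requested_cores=0.5, used_cores=0.49),
    ContainerCpu("auth", requested_cores=0.5, used_cores=0.3),
    ContainerCpu("batch", requested_cores=0.0, used_cores=0.2),
]
report = {c.name: classify(c) for c in containers}
print(report)
```

Containers flagged as over-provisioned are candidates for reclaiming unused resources, while under-provisioned ones are candidates for larger requests or different scheduling.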

Resource usage forecasts

By looking at a prediction of resource usage, you can forecast more accurately the resources that a project or activity will need.

What is out of the box

The out-of-the-box features that are part of Kubernetes Monitoring include:

- The ability to explore and troubleshoot your Kubernetes infrastructure in a single interface

- A built-in, easy deployment process using a Helm chart that includes the option to use eBPF technology to automatically instrument applications with Grafana Beyla. This collects telemetry data for applications on the Cluster without adding SDKs.

- Kubernetes Overview, showing a snapshot of Cluster, Node, Pod, and container counts, as well as any issues that need attention and the alerts associated with them

- Efficiency data throughout the app for checking and improving resource usage

- Cost data globally available for analyzing and managing your infrastructure costs and potential savings

- Alerts page where you can respond to and troubleshoot alerts, and select built-in alerting rules to view and copy for customization

- Energy usage data provided by Kepler

- Immediate, in-context access to other Grafana Cloud applications for quicker analysis and resolution

- Recording rules to increase the speed of queries and the evaluation of alerting rules

- Predictions for CPU and memory usage, powered by machine learning

- Selected set of metrics to assist in providing only the metrics you need

Get started

Get started by using a simple configuration process with the Grafana Kubernetes Monitoring Helm chart. When you configure with the Helm chart, there’s no manual setup, and the chart includes automatic updates for all components that it installs.

Other configuration methods

There are other available methods you can use to configure Kubernetes Monitoring for your infrastructure data.

To configure data about an application running in Kubernetes, refer to Application metrics.