Compare tests

To find regressions, you can compare data from multiple test runs. Grafana Cloud k6 provides three graphical ways to compare tests over time:

- Between a recent run and a baseline.

- Between two selected runs.

- Across all runs for a certain script.

Warning

Test comparison only works on runs from the same test script. You can’t compare two different test scripts.

Use a test as a baseline

Comparing results against a known baseline is a core part of the general methodology for automated performance testing. A baseline acts as a control: comparing later runs against it helps you find differences.

A baseline test should produce enough load to generate meaningful data while still yielding ideal results. In other words, a heavy stress test isn’t a good baseline; think much smaller.
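To illustrate what comparing against a baseline involves, here is a minimal TypeScript sketch that flags a regression when a run's aggregated response time exceeds the baseline's by more than a tolerance. Grafana Cloud k6 performs this comparison in its UI. The RunSummary type, the p95Ms field, and the 10% default tolerance are hypothetical choices for this example, not part of the product.

```typescript
// Hypothetical summary of one test run: a single aggregated value
// (for example, p95 response time in milliseconds).
interface RunSummary {
  id: string;
  p95Ms: number;
}

// Relative change of `run` compared to `baseline`
// (0.25 means 25% slower than the baseline).
function relativeChange(baseline: RunSummary, run: RunSummary): number {
  if (baseline.p95Ms <= 0) {
    throw new Error("baseline value must be positive");
  }
  return (run.p95Ms - baseline.p95Ms) / baseline.p95Ms;
}

// Flags a regression when the run is slower than the baseline by more
// than `tolerance` (an assumed 10% by default).
function isRegression(baseline: RunSummary, run: RunSummary, tolerance = 0.1): boolean {
  return relativeChange(baseline, run) > tolerance;
}

const baseline: RunSummary = { id: "baseline", p95Ms: 200 };
const latest: RunSummary = { id: "latest", p95Ms: 250 };

console.log(relativeChange(baseline, latest)); // 0.25
console.log(isRegression(baseline, latest)); // true
```

A small, stable baseline keeps the denominator meaningful: if the baseline itself was captured under stress, every later run looks artificially healthy.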

To set your baseline, follow these steps:

- Open the results for the test run you wish to be your baseline.

- Select the three dots in the top-right corner, then select Set as Baseline.

Baseline tests are exempt from data-retention policies.

Select test runs to compare

To compare two test runs, follow these steps:

- Open a test run.

- In the top right, select Compare result.

- Select the test run to compare it with.

Test comparison mode

When you compare tests, the layout of the performance-overview section switches to comparison mode. Comparison mode has controls for selecting which tests to display on the left and right, and the performance-overview chart renders time series for both compared test runs.

Solid lines represent the base run, and dashed lines represent the comparison. To make a certain time series more visible, select the appropriate element in the interactive legend.

Compare scenarios

If a test has multiple scenarios, k6 presents a performance overview for each one. If the test script uses multiple protocols, k6 categorizes the overview data by protocol.

Compare thresholds

To compare thresholds, select the Thresholds tab. You can add extra data to the table for the base and target test runs.

You can compare the following threshold data from the current and previous runs:

- The value of the threshold.

- Pass/fail statuses.

- Previous and current test-run values for each threshold and its pass/fail status.

To display a separate threshold chart for each test run, select a threshold.
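A threshold comparison amounts to evaluating the same threshold against each run's aggregated value. This sketch assumes a simplified, hypothetical representation of a k6 threshold expression such as p(95)<500, already split into an aggregation name, an operator, and a limit. It does not parse k6's threshold syntax and isn't how Grafana Cloud k6 evaluates thresholds internally.

```typescript
type Operator = "<" | "<=" | ">" | ">=";

// Hypothetical structured form of a k6 threshold expression like "p(95)<500".
interface Threshold {
  expression: string; // for display only
  aggregation: string; // key into a run's aggregated values
  op: Operator;
  limit: number;
}

interface Evaluation {
  value: number;
  passed: boolean;
}

const comparators: Record<Operator, (value: number, limit: number) => boolean> = {
  "<": (v, l) => v < l,
  "<=": (v, l) => v <= l,
  ">": (v, l) => v > l,
  ">=": (v, l) => v >= l,
};

// Evaluates one threshold against a run's aggregated metric values.
function evaluate(t: Threshold, values: Record<string, number>): Evaluation {
  const value = values[t.aggregation];
  if (value === undefined) {
    throw new Error(`run has no value for ${t.aggregation}`);
  }
  return { value, passed: comparators[t.op](value, t.limit) };
}

// One comparison row: the same threshold evaluated for the base and target runs.
function compareThreshold(t: Threshold, base: Record<string, number>, target: Record<string, number>) {
  return { expression: t.expression, base: evaluate(t, base), target: evaluate(t, target) };
}

const threshold: Threshold = { expression: "p(95)<500", aggregation: "p(95)", op: "<", limit: 500 };
const row = compareThreshold(threshold, { "p(95)": 420 }, { "p(95)": 530 });
console.log(row.base.passed, row.target.passed); // true false
```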

Compare checks

To compare checks, use the Checks tab. Here, k6 shows extra data in the table for the base and target test runs.

You can compare the following metrics from the current and previous runs:

- Success Rate

- Success Count

- Fail Count

To display separate check charts for each test run, select a check.
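The success rate of a check is the share of its evaluations that passed, so these values are related. A minimal sketch, assuming hypothetical per-check counters for the base and target runs; reporting a check that never ran as 0 is an arbitrary choice made here, not documented product behavior.

```typescript
// Hypothetical per-check counters for one run.
interface CheckStats {
  successCount: number;
  failCount: number;
}

// Success rate = successes / all evaluations (0 if the check never ran).
function successRate(stats: CheckStats): number {
  const total = stats.successCount + stats.failCount;
  return total === 0 ? 0 : stats.successCount / total;
}

// Change in success rate between two runs, in percentage points.
function rateDelta(base: CheckStats, target: CheckStats): number {
  return (successRate(target) - successRate(base)) * 100;
}

const baseRun: CheckStats = { successCount: 980, failCount: 20 };
const targetRun: CheckStats = { successCount: 900, failCount: 100 };

console.log(successRate(baseRun)); // 0.98
console.log(successRate(targetRun)); // 0.9
console.log(rateDelta(baseRun, targetRun).toFixed(1)); // -8.0
```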

Compare HTTP requests

To compare HTTP requests, use the HTTP tab. Here, k6 shows extra data in the table for the base and target test runs.

You can compare the following data from the current and previous runs:

- Metrics such as request count, avg, and p95 response time.

- Other data for individual HTTP requests.

To show separate charts, select the rows for each test run that you want to compare. You can add extra charts, such as timing breakdowns for each HTTP request.
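Per-request metrics come from grouping individual request samples, for example by the request's name tag, and aggregating each group. The sketch below computes request count, average, and p95 duration per request for two hypothetical runs. It uses a nearest-rank percentile, which is an assumption; k6 may calculate percentiles differently.

```typescript
// Hypothetical HTTP sample: the request's name and its duration.
interface Sample {
  name: string;
  durationMs: number;
}

interface RequestStats {
  count: number;
  avg: number;
  p95: number;
}

// Nearest-rank percentile over an ascending, non-empty array.
function percentile(sorted: number[], p: number): number {
  const rank = Math.max(1, Math.ceil((p * sorted.length) / 100));
  return sorted[rank - 1];
}

// Groups samples by request name, then computes count, avg, and p95 per group.
function statsByRequest(samples: Sample[]): Record<string, RequestStats> {
  const groups: Record<string, number[]> = {};
  for (const s of samples) {
    if (!groups[s.name]) groups[s.name] = [];
    groups[s.name].push(s.durationMs);
  }
  const result: Record<string, RequestStats> = {};
  for (const name of Object.keys(groups)) {
    const sorted = groups[name].slice().sort((a, b) => a - b);
    const sum = sorted.reduce((acc, d) => acc + d, 0);
    result[name] = { count: sorted.length, avg: sum / sorted.length, p95: percentile(sorted, 95) };
  }
  return result;
}

function samplesFor(name: string, durations: number[]): Sample[] {
  return durations.map((durationMs) => ({ name, durationMs }));
}

const base = statsByRequest(
  samplesFor("GET /login", [100, 120, 140, 160, 480]).concat(samplesFor("GET /products", [50, 70])),
);
const target = statsByRequest(samplesFor("GET /login", [110, 130, 150, 170, 690]));

console.log(base["GET /login"]); // { count: 5, avg: 200, p95: 480 }
console.log(target["GET /login"]); // { count: 5, avg: 250, p95: 690 }
```

With only five samples, p95 equals the slowest request, which is why a single outlier can dominate this column in short tests.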

Compare scripts

To compare scripts, use the Scripts tab. You can view the scripts for each test run in the read-only editor mode.

At the top of the tab, you can:

- See the total number of changes between the test runs.

- Use the up and down arrows to move to the next or previous script change in the editor.

- Select a Split or Inline view mode.

The editor works similarly to other code editors, highlighting any lines that were updated, added, or removed.
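The change count at the top of the tab is the kind of number a line-based diff produces. As an illustration only, the sketch below counts added and removed lines with a longest-common-subsequence (LCS) table. The diff algorithm the Grafana Cloud k6 editor actually uses isn't documented here, so treat this as a stand-in; in particular, it counts a modified line as one removal plus one addition, whereas the editor may highlight it as a single updated line.

```typescript
interface DiffSummary {
  added: number;
  removed: number;
}

// Counts added and removed lines between two script versions via LCS.
function diffLines(oldText: string, newText: string): DiffSummary {
  const a = oldText.split("\n");
  const b = newText.split("\n");
  // lcs[i][j] holds the LCS length of a[i..] and b[j..].
  const lcs: number[][] = [];
  for (let i = 0; i <= a.length; i++) {
    const row: number[] = [];
    for (let j = 0; j <= b.length; j++) row.push(0);
    lcs.push(row);
  }
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      lcs[i][j] = a[i] === b[j] ? lcs[i + 1][j + 1] + 1 : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }
  // Lines outside the common subsequence were removed (old) or added (new).
  const common = lcs[0][0];
  return { added: b.length - common, removed: a.length - common };
}

const oldScript = [
  'import http from "k6/http";',
  "export const options = { vus: 10 };",
  "export default function () {",
  '  http.get("https://test.k6.io");',
  "}",
].join("\n");

const newScript = [
  'import http from "k6/http";',
  'import { sleep } from "k6";',
  "export const options = { vus: 20 };",
  "export default function () {",
  '  http.get("https://test.k6.io");',
  "  sleep(1);",
  "}",
].join("\n");

console.log(diffLines(oldScript, newScript)); // { added: 3, removed: 1 }
```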

Comparing scripts helps you determine whether modifications to your test script caused differences in the test results, much like comparing other test data such as checks or thresholds. Because test scripts evolve over time, consider creating a new test whenever you make significant changes to a script; this lets you evaluate the performance of your systems more accurately.

Explore test trends

To compare runs for a test across time, use the performance-trending chart. The chart displays test-run metrics, using colors to signal the status of a specific run.

To view the performance-trending chart for multiple tests that belong to the same project, open the Project page.

Additionally, to view the performance-trending chart for an individual test, open the test’s page.

This last chart shows more data points over time. For more information about an individual run, hover over any bar in the chart.

By default, the performance-trending chart displays the p95 of the HTTP response time (http_req_duration).

The chart displays a summary trending metric: an aggregated value for all metric data points in a test run. k6 produces a single value for each test run using the trending metric, then plots each value on the chart. You can customize the trending metric on a per-test basis.
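Conceptually, the trending chart reduces each run to one number with an aggregation function and plots those numbers in run order. A minimal sketch under stated assumptions: each run is a hypothetical, non-empty array of response-time samples (such as values of k6's built-in http_req_duration metric), the data is synthetic, and p95 uses the nearest-rank method, which may differ from k6's own percentile calculation.

```typescript
// Hypothetical per-run data: response-time samples in milliseconds.
interface TestRun {
  id: number;
  samples: number[];
}

type Aggregation = (values: number[]) => number;

// Nearest-rank p95; assumes a non-empty array.
const p95: Aggregation = (values) => {
  const sorted = values.slice().sort((a, b) => a - b);
  const rank = Math.max(1, Math.ceil((95 * sorted.length) / 100));
  return sorted[rank - 1];
};

// One point per run, in run order: what the trending chart plots.
function trend(runs: TestRun[], aggregate: Aggregation): number[] {
  return runs.map((run) => aggregate(run.samples));
}

// Synthetic run with 20 samples: scaleMs, 2 * scaleMs, ..., 20 * scaleMs.
function syntheticRun(id: number, scaleMs: number): TestRun {
  const samples: number[] = [];
  for (let i = 1; i <= 20; i++) samples.push(i * scaleMs);
  return { id, samples };
}

const runs = [syntheticRun(1, 10), syntheticRun(2, 11), syntheticRun(3, 12)];
console.log(trend(runs, p95)); // [ 190, 209, 228 ]
```

Passing the aggregation as a parameter mirrors the customization described next: swapping p95 for an average changes every plotted point without touching the per-run data.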

Customize the trending metric

To customize the trending metric used for a test:

- Navigate to the Project page.

- Select the three dots in the top-right corner of the test’s performance-trending chart.

- Select Customize trend.

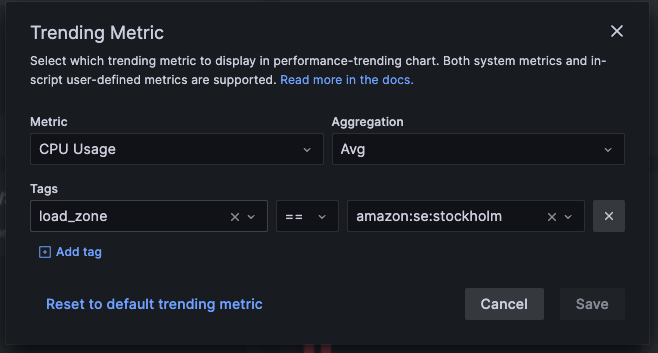

This brings up a window from which you can:

- Select the metric to use in the performance-trending chart. Note that both standard (created by all k6 test runs) and custom (user-defined) metrics are listed.

- Select the aggregation function to apply to the metric. In this case, “Avg” (Average) is selected.

- Select or add one or more sets of tags and tag values. In this case, only values from instances in the amazon:se:stockholm load zone are selected.
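The choices in this window (metric, aggregation, and tags) combine into one value per run: filter the metric's data points by tag, then aggregate what remains. The sketch below assumes a hypothetical Point type and a single tag set, and mirrors the example above by averaging only the points tagged with the amazon:se:stockholm load zone. How the product combines several tag sets isn't covered here, so this sketch handles only one.

```typescript
// Hypothetical metric data point with its tags, such as the load zone.
interface Point {
  value: number;
  tags: Record<string, string>;
}

// A set of tag/value pairs; a point matches only if it has every pair.
type TagFilter = Record<string, string>;

function matches(point: Point, filter: TagFilter): boolean {
  return Object.keys(filter).every((key) => point.tags[key] === filter[key]);
}

// "Avg" aggregation. Returns NaN when no points match the filter.
function average(values: number[]): number {
  if (values.length === 0) return NaN;
  return values.reduce((sum, v) => sum + v, 0) / values.length;
}

// One run's trend value: filter by tags, then aggregate.
function trendValue(points: Point[], filter: TagFilter): number {
  const selected = points.filter((p) => matches(p, filter)).map((p) => p.value);
  return average(selected);
}

const points: Point[] = [
  { value: 100, tags: { load_zone: "amazon:se:stockholm" } },
  { value: 140, tags: { load_zone: "amazon:se:stockholm" } },
  { value: 400, tags: { load_zone: "amazon:us:ashburn" } },
];

console.log(trendValue(points, { load_zone: "amazon:se:stockholm" })); // 120
```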

After you select the desired parameters, select Save to apply the changes. The Save button is enabled only after you change the configuration.

To reset the configuration to the default trending metric, select Reset to default trending metric in the bottom-left corner.

k6 calculates the required values, then plots them in the performance-trending chart.