Store, query, and alert on data

Grafana Cloud gives you a centralized, high-performance, long-term data store for your metrics, logs, and traces. Endpoints for Prometheus, Graphite, Tempo, and Loki let you ship data from multiple sources to Grafana Cloud. There you can build dashboards that aggregate and query data across all of these sources, and alert on it. Grafana Cloud Metrics and Logs offers fast query performance, tuned and optimized by the Mimir and Loki maintainers, and horizontally scalable alerting and rule evaluation with Grafana Alerting.

Get started with Grafana Cloud Metrics and Logs if you have existing Prometheus, Graphite, or Loki instances

If you are moving to Grafana Cloud and already have data sources set up, here's how to start.

This section assumes your data sources are already set up and running.

Ship your Prometheus series to Grafana Cloud

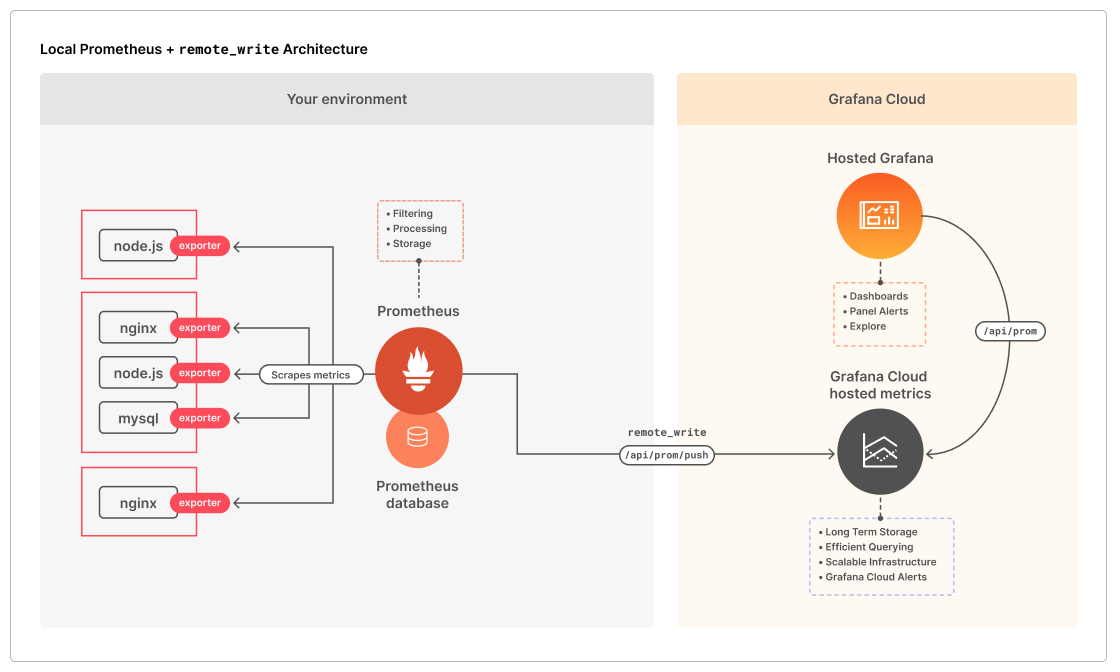

Prometheus is pull-based: it scrapes metrics from a set of defined targets. It also offers a bulk push mechanism, remote_write. Grafana Cloud uses remote_write so that metrics you scrape locally are pushed to Grafana Cloud, instead of Grafana Cloud polling (pulling) metrics from your targets directly. We created Grafana Agent to make this setup even simpler.

Using Prometheus’s remote_write feature, you can ship copies of scraped samples to your Grafana Cloud Prometheus metrics service. To learn how to enable remote_write, see Prometheus metrics in the Grafana Cloud documentation. If you’re using Helm to manage Prometheus, configure remote_write in the Helm chart’s values file. For more information on configuring Helm charts, see Values files in the Helm documentation.
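The remote_write section of a Prometheus configuration file is a short YAML list. The minimal sketch below renders one such entry from Python so its shape is easy to see. The helper name `remote_write_yaml` is hypothetical, and the URL, username, and password-file path are placeholders: copy the real push URL and instance ID from your Grafana Cloud stack. It uses Prometheus's `basic_auth` block with `password_file`, which keeps the API key out of `prometheus.yml` itself.

```python
def remote_write_yaml(url: str, username: str, password_file: str) -> str:
    """Render a minimal prometheus.yml remote_write section as YAML text.

    Hypothetical helper for illustration; it is not part of Prometheus or
    Grafana Cloud tooling. All three arguments are caller-supplied placeholders.
    """
    lines = [
        "remote_write:",
        f"  - url: {url}",
        "    basic_auth:",
        f"      username: {username}",
        # Reading the API key from a file keeps the secret out of prometheus.yml.
        f"      password_file: {password_file}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Placeholder values; replace them with the details of your own stack.
    print(
        remote_write_yaml(
            url="https://<your-prometheus-host>/api/prom/push",
            username="<your-instance-id>",
            password_file="/etc/prometheus/grafana_cloud_api_key",
        ),
        end="",
    )
```

Paste the printed block into `prometheus.yml` at the top level, then reload Prometheus so it starts forwarding scraped samples.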

The Monitoring a Linux host using Prometheus and node_exporter quickstart provides a complete, start-to-finish example that installs a local Prometheus instance and then uses remote_write to send its metrics to your Grafana Cloud Prometheus instance.

Ship your Graphite metrics to Grafana Cloud

carbon-relay-ng lets you aggregate, filter, and route your Graphite metrics to Grafana Cloud. To learn how to configure a local carbon-relay-ng instance to ship Graphite data to Grafana Cloud, see How to Stream Graphite Metrics to Grafana Cloud using carbon-relay-ng.
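carbon-relay-ng accepts metrics in the Graphite plaintext protocol, where each line is `<metric path> <value> <unix timestamp>`. The minimal sketch below formats such lines and writes them over TCP. It assumes a carbon-relay-ng instance listening on 127.0.0.1:2003, the conventional Carbon plaintext port; check the listen address in your own relay configuration. The function names and the metric path are hypothetical.

```python
import socket
import time
from typing import List, Optional


def graphite_line(path: str, value: float, timestamp: Optional[int] = None) -> str:
    """Format one line of the Graphite plaintext protocol."""
    # The protocol is space-separated, so a path containing whitespace would corrupt the line.
    if any(ch.isspace() for ch in path):
        raise ValueError("Graphite metric paths must not contain whitespace")
    ts = int(time.time()) if timestamp is None else int(timestamp)
    return f"{path} {value} {ts}\n"


def send_lines(lines: List[str], host: str = "127.0.0.1", port: int = 2003) -> None:
    """Write plaintext lines to a carbon-relay-ng (or Carbon) TCP listener.

    host and port are assumptions; use the listen address from your relay config.
    """
    payload = "".join(lines).encode("utf-8")
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)


if __name__ == "__main__":
    # Hypothetical metric; this requires a relay listening on 127.0.0.1:2003.
    send_lines([graphite_line("servers.web01.cpu.load1", 0.42)])
```

The relay, not this script, is where aggregation, filtering, and routing to Grafana Cloud happen; the sender only needs to speak the plaintext protocol.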

Ship your Loki logs to Grafana Cloud

The Loki log aggregation stack uses Promtail as the agent that ships logs to either a Loki instance or Grafana Cloud. To learn more, see Logs and Collect logs with Promtail.
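Promtail delivers batches of log lines to Loki's HTTP push endpoint, `/loki/api/v1/push`. Promtail itself uses a compressed protobuf encoding, but the endpoint also accepts JSON, which is easier to read. The minimal sketch below builds that JSON body to show how lines are grouped into a stream by label set and stamped with nanosecond timestamps. The helper names, labels, URL, and credentials are hypothetical; for Grafana Cloud Logs, `user` and `token` stand for your stack's user ID and an API token. Promtail remains the recommended way to ship logs.

```python
import base64
import json
import time
import urllib.request
from typing import Dict, Iterable, Tuple


def loki_push_body(labels: Dict[str, str], entries: Iterable[Tuple[int, str]]) -> str:
    """Build a JSON body for Loki's POST /loki/api/v1/push endpoint.

    All entries share one label set, so they form a single stream.
    Each timestamp is Unix epoch nanoseconds, sent as a string.
    """
    stream = {
        "stream": labels,
        "values": [[str(ts_ns), line] for ts_ns, line in entries],
    }
    return json.dumps({"streams": [stream]})


def push(url: str, body: str, user: str, token: str) -> int:
    """POST a push body with HTTP basic auth and return the HTTP status code.

    url, user, and token are placeholders for your own Loki or Grafana Cloud details.
    urlopen raises urllib.error.HTTPError on error responses.
    """
    auth = base64.b64encode(f"{user}:{token}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {auth}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    # Hypothetical labels and log line; this only prints the body and sends nothing.
    print(loki_push_body({"job": "demo", "host": "web01"}, [(time.time_ns(), "hello from a sketch")]))
```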

Trace program execution information with Tempo

The Tempo tracing service tracks the lifecycle of a request as it passes through applications. For more information on Tempo, see the Tempo documentation.
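To follow a request across applications, each service passes a trace context along with its outgoing calls, so every span it records shares the request's trace ID. One widely used format for that context is the W3C Trace Context `traceparent` header, which instrumentation such as OpenTelemetry propagates before the resulting spans reach a backend like Tempo. The minimal sketch below generates and parses version-00 `traceparent` values with the standard library. It is illustrative only; it is not Tempo's API, and the function names are hypothetical.

```python
import re
import secrets
from typing import Optional, Tuple

# Version-00 layout: 00-<32 hex trace-id>-<16 hex parent-id>-<2 hex flags>
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(trace_id: Optional[str] = None, sampled: bool = True) -> str:
    """Start a span: continue the caller's trace if trace_id is given, else begin a new trace."""
    trace_id = trace_id or secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)  # 8 random bytes -> 16 hex chars
    flags = "01" if sampled else "00"  # bit 0 of the flags means "sampled"
    return f"00-{trace_id}-{span_id}-{flags}"


def parse_traceparent(header: str) -> Tuple[str, str, bool]:
    """Return (trace_id, parent_span_id, sampled), rejecting malformed or all-zero IDs."""
    m = _TRACEPARENT.match(header.strip())
    if not m or set(m.group(1)) == {"0"} or set(m.group(2)) == {"0"}:
        raise ValueError(f"invalid traceparent: {header!r}")
    trace_id, parent_id, flags = m.groups()
    return trace_id, parent_id, bool(int(flags, 16) & 0x01)


if __name__ == "__main__":
    incoming = new_traceparent()  # e.g. created by a frontend service
    trace_id, parent_span, sampled = parse_traceparent(incoming)
    outgoing = new_traceparent(trace_id, sampled)  # a backend continues the same trace
    print(incoming)
    print(outgoing)
```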

Get started with Grafana Cloud Metrics and Logs if you’re starting from scratch

If you are new to Grafana Cloud and would like to use Prometheus, the Monitoring a Linux host using Prometheus and node_exporter quickstart provides a complete remote_write example.

Install and configure Prometheus

Prometheus scrapes, stores, ships, and alerts on metrics collected from one or more monitoring targets. Using its remote_write feature, you can ship these collected samples to a remote endpoint like Grafana Cloud for long-term storage and aggregation. To learn how to install Prometheus, see Installation in the Prometheus documentation.

Prometheus relies on exporters to expose Prometheus-style metrics for the systems in your environment. For example, Node exporter exposes hardware and OS metrics for *NIX systems. To get started with exporters, see Exporters and Integrations.
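An exporter is essentially an HTTP endpoint, conventionally `/metrics`, that returns metrics in the Prometheus text exposition format for Prometheus or Grafana Agent to scrape. The minimal sketch below serves one gauge using only the Python standard library, to show what a scrape actually sees. The metric name, label, sample values, and port 8000 are hypothetical; in practice you would run an existing exporter such as Node exporter, or use an official client library, rather than hand-writing the format.

```python
import http.server
from typing import Dict


def _escape_label_value(value: str) -> str:
    # Label values escape backslash, double quote, and newline.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_gauge(name: str, help_text: str, samples: Dict[str, float], label: str) -> str:
    """Render one gauge family in the Prometheus text exposition format."""
    help_escaped = help_text.replace("\\", "\\\\").replace("\n", "\\n")
    lines = [f"# HELP {name} {help_escaped}", f"# TYPE {name} gauge"]
    for label_value, value in sorted(samples.items()):
        lines.append(f'{name}{{{label}="{_escape_label_value(label_value)}"}} {value}')
    return "\n".join(lines) + "\n"


class MetricsHandler(http.server.BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_gauge(
            "demo_queue_depth",
            "Items waiting in each queue (hypothetical).",
            {"email": 3, "billing": 0},
            label="queue",
        ).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


if __name__ == "__main__":
    # Hypothetical port; add host:8000 as a target in a Prometheus scrape_config.
    http.server.HTTPServer(("0.0.0.0", 8000), MetricsHandler).serve_forever()
```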

Deploy Grafana Agent

Grafana Agent is a lightweight, push-style, Prometheus-based metrics and log data collector. It is a pared-down version of Prometheus without any querying or local storage. The agent can reduce the scraper’s memory footprint by up to 40% relative to a Prometheus instance.

With Grafana Agent, you can avoid maintaining and scaling a Prometheus instance in your environment and split collection workloads across the nodes in your fleet. It currently supports Prometheus metrics, Promtail-style log collection, and several built-in integrations. To learn more about configuring and deploying Grafana Agent, see the Grafana Agent documentation.
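One common way to split scrape targets across several collectors is hash-based sharding: each collector hashes a target's address and keeps only the targets whose hash, modulo the number of collectors, equals its own index. Prometheus exposes the same idea through its `hashmod` relabel action. The minimal sketch below shows the technique with MD5 from the standard library. It is not a description of how Grafana Agent distributes work internally, and the function names, agent names, and target addresses are hypothetical.

```python
import hashlib
from typing import Dict, List


def shard_for(target: str, num_shards: int) -> int:
    """Map a target address to a shard index in [0, num_shards)."""
    if num_shards < 1:
        raise ValueError("num_shards must be >= 1")
    digest = hashlib.md5(target.encode("utf-8")).digest()
    # Use the last 8 bytes of the digest as a big-endian integer, similar in spirit to hashmod.
    return int.from_bytes(digest[8:], "big") % num_shards


def assign(targets: List[str], num_shards: int) -> Dict[int, List[str]]:
    """Group targets by the shard (collector) responsible for scraping them."""
    shards: Dict[int, List[str]] = {i: [] for i in range(num_shards)}
    for target in targets:
        shards[shard_for(target, num_shards)].append(target)
    return shards


if __name__ == "__main__":
    targets = [f"10.0.0.{i}:9100" for i in range(1, 11)]  # hypothetical node_exporter targets
    for shard, owned in assign(targets, num_shards=3).items():
        print(f"agent-{shard}: {owned}")
```

Because the assignment depends only on the target address and the shard count, every collector can compute its own share independently, without coordinating with the others. The trade-off is that changing the number of collectors reshuffles most targets.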

To roll out Grafana Agent inside a Kubernetes cluster, use Kubernetes Monitoring. Kubernetes Monitoring provides preconfigured dashboards and alerts so you can start monitoring quickly.
