Loki 3.0 release: Bloom filters, native OpenTelemetry support, and more!

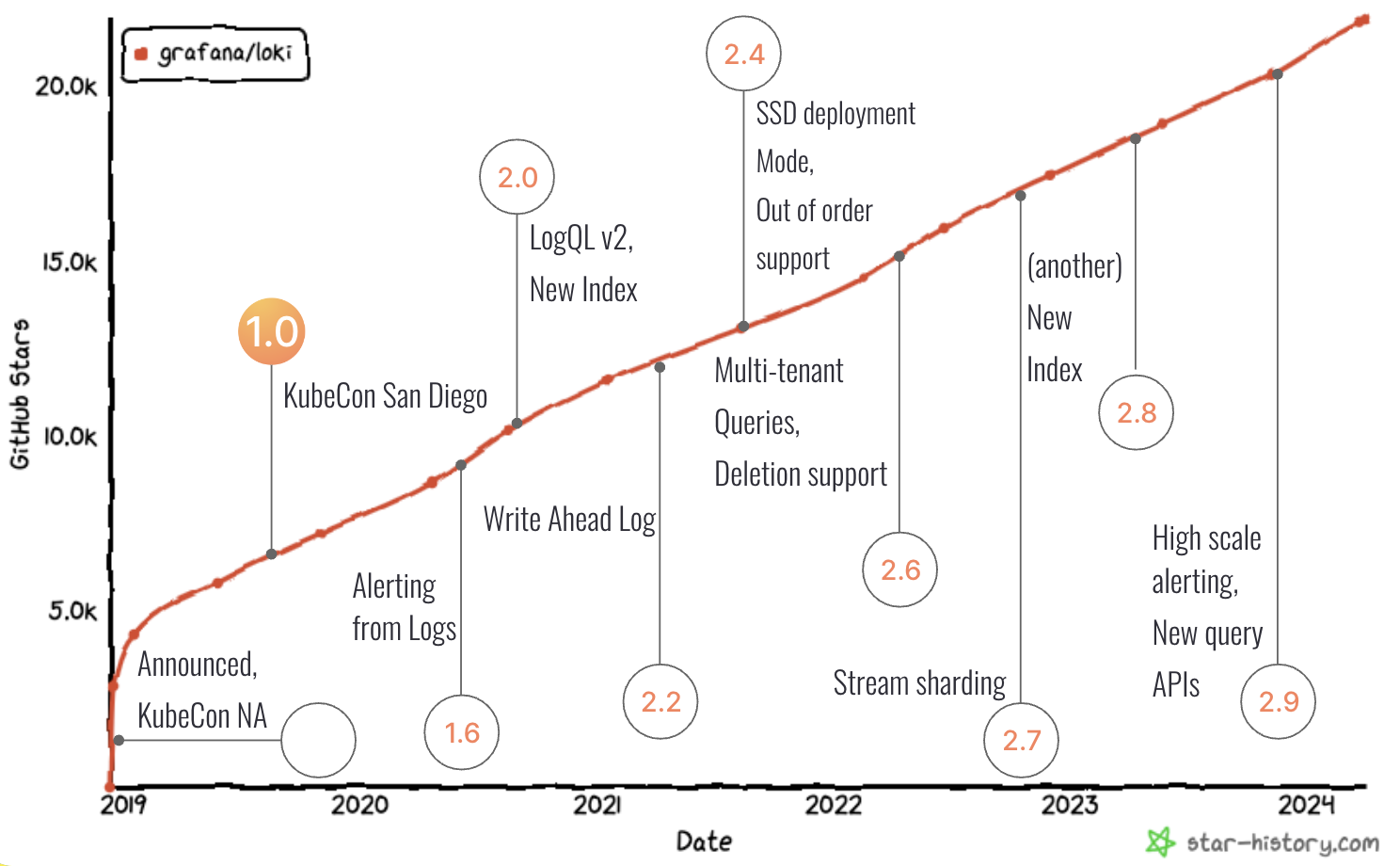

Welcome to the next chapter of Grafana Loki! After five years of dedicated development, countless hours of refining, and the support of an incredible community, we are thrilled to announce that Grafana Loki 3.0 is now generally available.

The journey from 2.0 to 3.0 saw a lot of impressive changes to Loki. Loki is now more performant, and it’s capable of handling larger scales — all while remaining true to its roots of efficiency and simplicity.

The progress has been tremendously well-received by the community (who, by the way, we cannot thank enough for helping us get here!). We hit, and blew right past, some very cool milestones over the past year — 20,000 GitHub stars and more than 100,000 active clusters, to name a few.

With Loki 3.0, we’re excited to build on the project’s success and introduce some very cool features. Read on, and you’ll see how we are staying true to our Loki roots (simplicity at scale and making the right tradeoffs for developers) while also making further strides to make Loki the easiest log aggregation platform for developers (and more!) to use.

Let’s dive in!

Query acceleration with Bloom filters

We’re excited to share one of the coolest new additions to Loki 3.0: query acceleration with Bloom filters, an experimental feature that allows you to find the specific log data you’re looking for faster than ever.

Imagine trying to find a single, specific message hidden among millions of messages. It’s like looking for a needle in a haystack. Loki was built to find your log data very quickly when you know roughly where to look (in other words, when you have some label selectors to filter on). Now, with the query acceleration that comes with Bloom filters, we will be able to support this “needle in a haystack” use case much better.

Before Bloom filters, Loki had to look at every. single. log. line. within all matching streams to find what you needed. If you wanted to find one piece of information from yesterday’s data, Loki would have to load everything from that day and check each log line one by one. As the amount of log data stored increases, Loki would require more compute power to process the data at query time. Because Grafana Labs operates Loki at scale, we saw an opportunity to optimize filtering specific data amidst a whole sea of data.

This is where Bloom filters come in, making Loki a lot smarter and more efficient when searching through log data. Instead of checking every log line, Loki can use Bloom filters to search for strings, such as an order ID or user ID, while skipping large chunks of data that don’t contain the information you’re seeking. It’s like having a map that shows where the needle might be in the haystack, so you don’t have to search the whole thing.
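To make the idea concrete, here is a minimal Python sketch of chunk skipping. It is not Loki’s implementation: it assumes one small Bloom filter per chunk, built from whitespace-separated tokens, and the names `BloomFilter`, `build_filter`, and `search` are hypothetical. Loki’s real tokenization, filter sizing, and storage layout differ.

```python
import hashlib


class BloomFilter:
    """A tiny Bloom filter: never a false negative, occasionally a false positive."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0  # a Python int used as a bit array

    def _positions(self, item: str):
        # Derive several bit positions by salting a single hash function.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item: str) -> bool:
        # False means "definitely absent"; True means "possibly present".
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_filter(lines):
    """Index every whitespace-separated token of a chunk (a simplification)."""
    bf = BloomFilter()
    for line in lines:
        for token in line.split():
            bf.add(token)
    return bf


def search(chunks, needle):
    """Return (matching lines, number of chunks skipped without scanning)."""
    matches, skipped = [], 0
    for lines, bf in chunks:
        if not bf.might_contain(needle):
            skipped += 1  # the filter rules this chunk out entirely
            continue
        # A "maybe" still needs a real scan to weed out false positives.
        matches.extend(line for line in lines if needle in line.split())
    return matches, skipped


raw_chunks = [
    ["GET /cart user_id=7", "GET /home user_id=8"],
    ["POST /pay order_id=42 user_id=7"],
    ["GET /health", "GET /metrics"],
]
chunks = [(lines, build_filter(lines)) for lines in raw_chunks]
matches, skipped = search(chunks, "order_id=42")
print(matches, skipped)
```

The key property is that a “no” from the filter is always trustworthy, so a skipped chunk never hides a match. A “maybe” can be wrong, which is why the sketch still scans the chunks that pass the filter.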

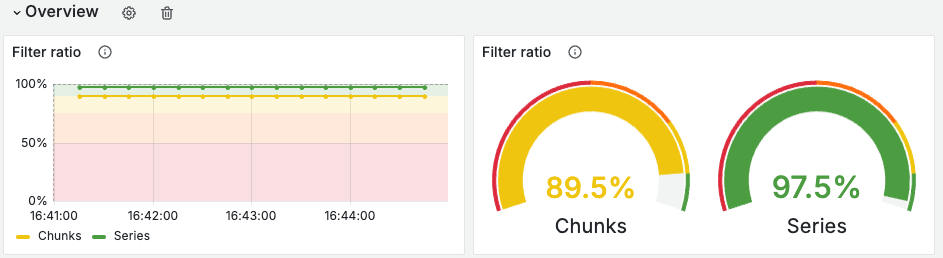

And all signs point to Bloom filters being really effective. Early internal tests suggest that with Bloom filters, Loki can skip a significant percentage of log data while running queries. Our dev environment tests show that we can now filter out between 70% and 90% of the chunks we previously needed to process a query.

Here is an example of the results we’re seeing when running one of these “needle in a haystack” searches with Bloom filters. This query includes several filtering conditions and represents a typical use case we’ve seen our customers run on Grafana Cloud Logs, which is powered by Loki. You’ll see the number of chunks and streams we are bypassing:

The results are fantastic! Touching less data means faster search return times, and less strain on resources.

This is an extremely promising experimental feature, with an even more promising future as we make it simpler and even faster in Loki! To read more about query acceleration, and learn about how to enable Bloom filters in your Loki cluster, check out our accelerated queries documentation.

Native OpenTelemetry support

The Loki team recognizes that OpenTelemetry is quickly gaining adoption. In our 2024 Observability Survey, 54% of the respondents said they were using OpenTelemetry more than they did last year. OpenTelemetry aims to provide a vendor-agnostic way to easily instrument applications or systems, regardless of their language, infrastructure, or runtime environment. This vision aligns strongly with Loki’s principle of meeting users where they are.

While it was already possible to ingest OTel logs into Loki using the Loki Exporter, it didn’t provide an optimal querying experience. This previous method serialized data as JSON, which then had to be deserialized at query time to interact with OTel attributes and metadata in log records. Doable, but not simple.

To provide a native OTel logging experience to users opting for OpenTelemetry, we have added native OpenTelemetry ingestion support to Loki 3.0.

Here are some of the benefits that Loki’s native OpenTelemetry endpoint brings over the Loki Exporter:

- Simplified ingestion pipeline: By eliminating the need to put the Loki Exporter between an OpenTelemetry collector (such as Grafana Alloy) and Grafana Cloud, your log ingestion pipeline becomes much simpler. You can easily switch from any other OTel-compatible log storage to Loki by just updating the endpoint.

- Improved querying experience: By leveraging structured metadata, Loki’s native OpenTelemetry implementation lets you interact with all the OpenTelemetry attributes and log event metadata at query time without having to do any deserialization. Here are some example screen captures showing how it’s much easier to query OTel logs ingested via Loki’s native OTel ingestion endpoint vs. the Loki Exporter:

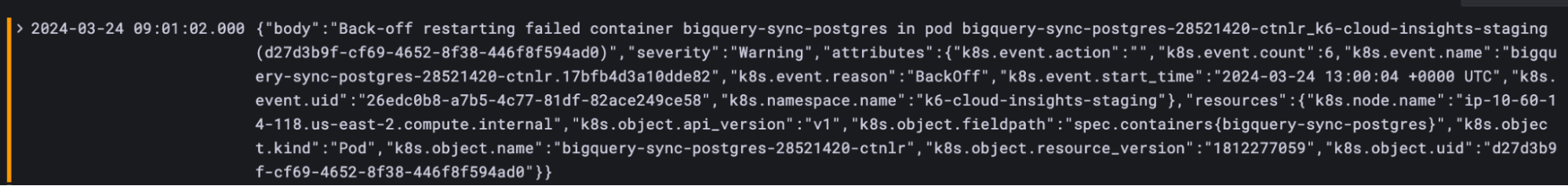

Here is how OpenTelemetry logs are stored in Loki:

- Index: Resource attributes map well to index labels in Loki since both usually identify the source of the logs. Because Loki works well with a limited set of index labels, Loki, by default, selects a few key attributes to be stored as index labels, while the remaining resource attributes are stored as structured metadata with each log entry.

- Timestamp: One of LogRecord.TimeUnixNano or LogRecord.ObservedTimestamp, based on which one is set. If neither is set, the ingestion timestamp will be used.

- LogLine: LogRecord.Body holds the log’s body. However, since Loki only supports log bodies in string format, we will stringify non-string values using the AsString method from the OTel Collector library.

- Structured metadata: Anything that can’t be stored in index labels or LogLine is stored as structured metadata.
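The mapping described in this list can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Loki’s code: the `LogRecord` dataclass, the `to_loki_entry` function, and the `INDEX_LABEL_ATTRS` allow-list are hypothetical, `json.dumps` stands in for the collector’s AsString method, and the sketch assumes TimeUnixNano is preferred when both timestamps are set.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Any, Optional

# Hypothetical allow-list for this sketch; see the Loki docs for the real defaults.
INDEX_LABEL_ATTRS = {"service.name", "service.namespace"}


@dataclass
class LogRecord:
    """Simplified stand-in for an OTel log record (0 means 'not set')."""
    body: Any
    time_unix_nano: int = 0
    observed_time_unix_nano: int = 0
    attributes: dict = field(default_factory=dict)


def to_loki_entry(record: LogRecord, resource_attrs: dict,
                  now_ns: Optional[int] = None) -> dict:
    # Timestamp: whichever record timestamp is set, else the ingestion time.
    ts = record.time_unix_nano or record.observed_time_unix_nano
    if not ts:
        ts = now_ns if now_ns is not None else time.time_ns()

    # Index: only a few key resource attributes become index labels.
    labels = {k: str(v) for k, v in resource_attrs.items()
              if k in INDEX_LABEL_ATTRS}

    # Structured metadata: remaining resource attributes plus record attributes.
    metadata = {k: str(v) for k, v in resource_attrs.items()
                if k not in INDEX_LABEL_ATTRS}
    metadata.update({k: str(v) for k, v in record.attributes.items()})

    # LogLine: string bodies pass through; other values are stringified.
    body = record.body
    line = body if isinstance(body, str) else json.dumps(body)

    return {"timestamp_ns": ts, "labels": labels,
            "structured_metadata": metadata, "line": line}


record = LogRecord(body={"msg": "hi"}, observed_time_unix_nano=5,
                   attributes={"http.method": "GET"})
entry = to_loki_entry(record, {"service.name": "checkout", "host.name": "node-1"})
print(entry)
```

Keeping the index small while pushing everything else into structured metadata is what lets the attributes stay queryable without inflating the number of streams.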

Check out our docs for more details on how OTel logs are stored in Loki and how to change the way data is stored in Loki with a per-tenant OTLP config.

Loki documentation improvements

Loki is all about simplicity, and we believe that better documentation makes our products easier to use. Both the Documentation and Engineering teams have been working on updating the Loki documentation to make it easier for new users to get started.

In addition to refreshing content in the Get Started section, we’ve introduced a new Quickstart topic that walks you through several sample queries so that you can see your log data in Grafana. We’ve also polished a few of our most visited sections: the API docs, Configuration reference, and Storage documentation have all been updated to reflect deprecations, and outdated configuration options have been removed.

Grafana Enterprise Logs (GEL) documentation has also been reorganized, and stale Loki content has been updated.

‘I don’t want to learn LogQL’

Oops… wrong blog! You’ll have to check out our other post about Explore Logs to learn what this is all about.

Cleaning up the campsite, and a word about upgrading

We took the opportunity of this major release to make several quality-of-life improvements for both users and maintainers to help make Loki easier to use. This includes improvements to our Helm chart, so that it can be easier than ever to run Loki. Please carefully review our release notes and upgrade guide as you go try Loki 3.0 out for yourself!

We’ve also used this Loki 3.0 release to align the semantic versioning of Loki, our open source offering, and Grafana Enterprise Logs (GEL), our enterprise offering that is powered by Loki. GEL 3.0 will be released on April 11. If you are using GEL, please check out the release notes, when available, and the related documentation before upgrading to GEL 3.0.

Thank you to the Grafana Loki community!

Getting to Loki 3.0 was a community effort. On behalf of Grafana Labs and the Loki team, we sincerely want to thank all of our users and contributors for their efforts and commitment.

We’d love to hear your feedback! Drop into the #loki-3 channel on Grafana Labs Community Slack or find Loki on GitHub and let us know what you think.
