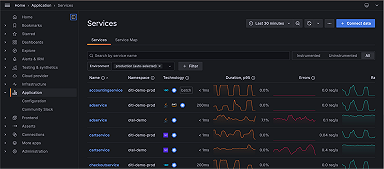

OpenTelemetry distributed tracing with eBPF: What’s new in Grafana Beyla 1.3

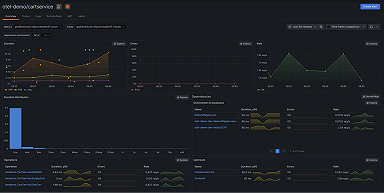

Grafana Beyla, an open source eBPF auto-instrumentation tool, has been able to produce OpenTelemetry trace spans since we introduced the project. However, the traces produced by the initial versions of Grafana Beyla were single-span OpenTelemetry traces, which means the trace context information was limited to a single-service view. Beyla was able to ingest TraceID information passed to the instrumented service, but was unable to propagate it upstream to other services.

Full distributed tracing is a great debugging tool for production, so we’ve made significant progress toward the goal of automatic distributed tracing in Beyla 1.2 and, most recently, in the Beyla 1.3 release. We’ve used two different approaches, automatic header injection and black-box context propagation, that work in tandem to provide distributed tracing capabilities that are as complete as possible.

In this blog post, we’ll outline these two approaches, including how they work, their current limitations, and how they’ll evolve.

Approach 1: Trace context propagation with automatic header injection

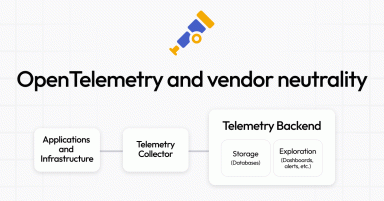

This approach should be familiar to anyone who has worked on instrumentation of services with the OpenTelemetry SDKs. The general idea is that the instrumentation intercepts incoming requests, reads any supplied trace information, and stores this trace information in a request context. Then, this request context is injected into the outgoing calls the application makes to upstream services. The W3C Trace Context standard defines what the trace information context should look like, as shown in Figure 1.

According to the W3C standard, the trace information shown in Figure 1 is propagated through the HTTP/HTTP2/gRPC request headers. It contains a unique TraceID, which identifies all trace spans that belong to the trace. Each individual request of the trace has a unique SpanID, which is used as the Parent SpanID to create the client-server relationships in the trace. The pseudocode in Figure 2 shows how this is typically done.
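To make the header handling concrete, here is a minimal Go sketch of reading a W3C `traceparent` header from an incoming request and building the header for an outgoing call. It is an illustration under assumptions, not Beyla's code or an OpenTelemetry SDK API: the names `TraceContext`, `ParseTraceparent`, `ChildHeader`, and `NewSpanID` are hypothetical, and only version `00` of the header is handled.

```go
package main

import (
	"crypto/rand"
	"encoding/hex"
	"errors"
	"fmt"
	"strings"
)

// TraceContext holds the fields of a version-00 traceparent header.
// (Hypothetical type for this sketch.)
type TraceContext struct {
	TraceID  string // 32 lowercase hex chars, shared by every span in the trace
	ParentID string // 16 lowercase hex chars, the SpanID of the caller
	Flags    string // 2 hex chars, for example "01" for sampled
}

// validField reports whether s is exactly n lowercase hex characters.
func validField(s string, n int) bool {
	if len(s) != n || s != strings.ToLower(s) {
		return false
	}
	_, err := hex.DecodeString(s)
	return err == nil
}

// allZero reports whether s consists only of '0' characters,
// which the standard treats as an invalid TraceID or SpanID.
func allZero(s string) bool { return strings.Trim(s, "0") == "" }

// ParseTraceparent reads "version-traceid-parentid-flags" from an incoming request.
func ParseTraceparent(h string) (TraceContext, error) {
	parts := strings.Split(h, "-")
	if len(parts) != 4 || parts[0] != "00" {
		return TraceContext{}, errors.New("malformed or unsupported traceparent")
	}
	if !validField(parts[1], 32) || !validField(parts[2], 16) || !validField(parts[3], 2) {
		return TraceContext{}, errors.New("invalid traceparent field")
	}
	if allZero(parts[1]) || allZero(parts[2]) {
		return TraceContext{}, errors.New("all-zero trace or parent ID")
	}
	return TraceContext{TraceID: parts[1], ParentID: parts[2], Flags: parts[3]}, nil
}

// NewSpanID generates a random 8-byte SpanID for the current request.
func NewSpanID() string {
	b := make([]byte, 8)
	if _, err := rand.Read(b); err != nil {
		panic(err)
	}
	return hex.EncodeToString(b)
}

// ChildHeader builds the header for an outgoing call: the TraceID is kept,
// and the current span's ID becomes the parent ID seen by the next service.
func ChildHeader(tc TraceContext, spanID string) string {
	return fmt.Sprintf("00-%s-%s-%s", tc.TraceID, spanID, tc.Flags)
}

func main() {
	incoming := "00-4bf92f3577b34da6a3ce929d0e0e4736-00f067aa0ba902b7-01"
	tc, err := ParseTraceparent(incoming)
	if err != nil {
		fmt.Println("no usable trace context:", err)
		return
	}
	fmt.Println("outgoing traceparent:", ChildHeader(tc, NewSpanID()))
}
```

The key property is that the TraceID passes through unchanged while the SpanID changes at every hop, which is what lets a tracing backend rebuild the client-server tree.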

Beyla uses the same approach described above, except it uses eBPF probes, carefully injected, to read the incoming trace information and inject the trace header in outgoing calls. This kind of automatic instrumentation and context propagation is currently implemented only for Go programs and has been available since the Beyla 1.2 release.

Beyla understands Go internals and tracks Go goroutine creation for context propagation of the incoming request to any outgoing calls made. This tracking works even for asynchronous requests, because Beyla is able to track the goroutine parent-child relationships and their lifecycle. In Figure 3, we show the equivalent of the manual instrumentation shown in Figure 2, but done automatically by Beyla.

This approach works well for all Go applications, and we’ve implemented this automatic context propagation for HTTP(S), HTTP2, and gRPC. There’s one limitation, however: the eBPF helper we use to write the header information in the outgoing request is protected when the Linux kernel is in lockdown mode. This typically happens when the kernel has Secure Boot enabled or has been manually configured in integrity or confidentiality lockdown mode. Although these locked configurations are uncommon in cloud VM/container configurations, we automatically detect whether the Linux kernel allows us to use the helper and enable the propagation support accordingly.
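As an illustration of what such a check can look like, the Go sketch below reads the kernel lockdown state from `/sys/kernel/security/lockdown`, where the active mode is the bracketed entry (for example `none [integrity] confidentiality`). This is an assumption about one possible detection method, not a description of how Beyla performs its check; the function names `activeLockdownMode` and `canInjectHeaders` are hypothetical, and treating every mode other than `none` as blocking is a deliberately conservative choice for the sketch.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// activeLockdownMode extracts the bracketed entry from the contents of
// /sys/kernel/security/lockdown, e.g. "none [integrity] confidentiality".
// It returns "unknown" if no bracketed entry is present.
func activeLockdownMode(contents string) string {
	for _, field := range strings.Fields(contents) {
		if strings.HasPrefix(field, "[") && strings.HasSuffix(field, "]") {
			return strings.Trim(field, "[]")
		}
	}
	return "unknown"
}

// canInjectHeaders is a conservative policy for this sketch: only attempt
// header injection when the kernel reports that lockdown is off.
func canInjectHeaders(mode string) bool {
	return mode == "none"
}

func main() {
	data, err := os.ReadFile("/sys/kernel/security/lockdown")
	if err != nil {
		// The file may be missing if the lockdown LSM is not enabled
		// or securityfs is not mounted; the sketch only reports it.
		fmt.Println("lockdown state unavailable:", err)
		return
	}
	mode := activeLockdownMode(string(data))
	fmt.Printf("lockdown mode: %s, header injection allowed: %v\n", mode, canInjectHeaders(mode))
}
```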

We plan to extend the support for automatic header injection to more languages in future versions of Beyla.

Approach 2: Black-box context propagation

The idea for black-box context propagation is very simple. If a single Beyla instance monitors two or more services that talk to each other, we can use the TCP connection tuples to uniquely identify each individual connection request. Essentially, Beyla doesn’t need to propagate the context in the HTTP/gRPC headers; it can “remember” it for a given connection tuple and look it up when the request is received on the other end.

Figure 4 shows how this connection tracking works.

This approach is the same for all programming languages, so this context propagation works for any application, regardless of the programming language it is written in. Since the “remember” part of the context information is done in locally stored Beyla eBPF maps, this approach is currently limited to a single node. Connecting Beyla to external storage can eliminate this restriction and we are considering support for that in the future.
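The "remember and look up" idea can be sketched in user-space Go with an ordinary map standing in for the eBPF map. Everything here is hypothetical and simplified for illustration: the types `ConnTuple`, `TraceInfo`, and `ConnTable` are not Beyla's data structures, and deleting the entry on lookup is a design choice of this sketch to avoid handing stale context to a later request on a reused connection.

```go
package main

import "fmt"

// ConnTuple identifies one TCP connection, written from the client's point of view.
// The server sees the same four values with local and remote swapped.
type ConnTuple struct {
	SrcIP   string
	SrcPort uint16
	DstIP   string
	DstPort uint16
}

// TraceInfo is the context that would otherwise travel in a request header.
type TraceInfo struct {
	TraceID string
	SpanID  string
}

// ConnTable plays the role of the node-local map shared by both sides.
type ConnTable struct {
	entries map[ConnTuple]TraceInfo
}

func NewConnTable() *ConnTable {
	return &ConnTable{entries: make(map[ConnTuple]TraceInfo)}
}

// Remember is called when an outgoing client request is observed.
func (t *ConnTable) Remember(c ConnTuple, ti TraceInfo) {
	t.entries[c] = ti
}

// Lookup is called when the request is observed arriving at the server.
// The entry is removed once read.
func (t *ConnTable) Lookup(c ConnTuple) (TraceInfo, bool) {
	ti, ok := t.entries[c]
	if ok {
		delete(t.entries, c)
	}
	return ti, ok
}

func main() {
	table := NewConnTable()
	conn := ConnTuple{SrcIP: "10.0.0.1", SrcPort: 43210, DstIP: "10.0.0.2", DstPort: 8080}

	// Client side: service A calls service B while handling a traced request.
	table.Remember(conn, TraceInfo{TraceID: "4bf92f3577b34da6a3ce929d0e0e4736", SpanID: "00f067aa0ba902b7"})

	// Server side: service B receives a request on the same connection.
	if ti, ok := table.Lookup(conn); ok {
		fmt.Println("continuing trace", ti.TraceID, "with parent span", ti.SpanID)
	}
}
```

Because the table lives in one process's memory here, the sketch also shows why the technique stops at the node boundary: a second node would have no entry for the tuple.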

While we explained how connections are tracked between services in the black-box context propagation feature, we still have to do a bit more work to track incoming-to-outgoing requests within a service. When an incoming request is handled by the same thread as any future outgoing calls, the request tracking is straightforward. We connect the incoming requests to the outgoing requests by the thread ID.

But for asynchronous requests, we have to add more sophisticated tracking. For Go applications, we already track goroutine lifecycles and parent relationships, but for other programming languages, we added support for tracking sys_clone calls. By tracking sys_clone, we track the parent-child relationship of request threads, similarly to how we track goroutines. This works well up to a point: it doesn’t cover applications that use outgoing client thread pools. Typically, the thread pools have threads pre-created, and the asynchronous handoff of the client request isn’t captured by tracking sys_clone. We plan to work on mitigating this constraint by adding more library/language-specific request tracking in future versions of Beyla.
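The thread-based correlation, including the thread pool limitation, can be modeled with a small Go sketch. It is a user-space simplification with hypothetical names (`ThreadTracker`, `OnClone`, `OnRequestStart`, `TraceFor`), not Beyla's eBPF implementation; the depth limit of 8 is an arbitrary assumption to keep the parent walk bounded.

```go
package main

import "fmt"

const maxParentDepth = 8 // arbitrary bound for this sketch

// ThreadTracker links outgoing calls to incoming requests by thread ancestry.
type ThreadTracker struct {
	parent map[int]int    // child thread ID -> parent thread ID, recorded on clone
	active map[int]string // thread ID -> TraceID of the incoming request it handles
}

func NewThreadTracker() *ThreadTracker {
	return &ThreadTracker{parent: make(map[int]int), active: make(map[int]string)}
}

// OnClone records that parentTID created childTID.
func (t *ThreadTracker) OnClone(parentTID, childTID int) {
	t.parent[childTID] = parentTID
}

// OnRequestStart records that tid started handling an incoming request.
func (t *ThreadTracker) OnRequestStart(tid int, traceID string) {
	t.active[tid] = traceID
}

// TraceFor finds the trace for an outgoing call made on tid: first the thread
// itself (the synchronous case), then its ancestors (the asynchronous case).
func (t *ThreadTracker) TraceFor(tid int) (string, bool) {
	for depth := 0; depth < maxParentDepth; depth++ {
		if id, ok := t.active[tid]; ok {
			return id, true
		}
		p, ok := t.parent[tid]
		if !ok {
			return "", false
		}
		tid = p
	}
	return "", false
}

func main() {
	tr := NewThreadTracker()

	// A pool worker is created at startup by the main thread (TID 1),
	// before any request exists.
	tr.OnClone(1, 50)

	// Thread 10 handles an incoming request and spawns thread 11 for async work.
	tr.OnRequestStart(10, "trace-a")
	tr.OnClone(10, 11)

	for _, tid := range []int{10, 11, 50} {
		id, ok := tr.TraceFor(tid)
		fmt.Printf("outgoing call on thread %d: trace=%q found=%v\n", tid, id, ok)
	}
}
```

Threads 10 and 11 resolve to the request's trace, while the pre-created pool thread 50 does not, because its only ancestor never handled the request.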

Next steps

Beyla 1.3 delivers OpenTelemetry distributed tracing support for many scenarios without any effort from the developer side. We believe that addressing the current limitations in future versions of Beyla will deliver distributed tracing capabilities that are equal to or better than what’s possible with manual instrumentation.

We’d also love to hear about your own experiences with Grafana Beyla. Please feel free to join the #beyla channel in our Community Slack. We also run a monthly Beyla community call on the second Wednesday of each month. You can find all the details on the Grafana Community Calendar.

To learn more about distributed tracing in Grafana Beyla, you can check out our technical documentation. And for step-by-step guidance to get started with Beyla, you can reference this tutorial.