How to monitor Kubernetes clusters with the Prometheus Operator

Kubernetes has become the preferred tool for DevOps engineers to deploy and manage containerized applications on one or multiple servers. These compute nodes are also known as clusters, and their performance is crucial to the success of an application.

If a Kubernetes cluster isn’t performing optimally, the application’s availability and performance will suffer, leading to unhappy users and even revenue loss. Fortunately, several tools in the Kubernetes ecosystem can help you effectively monitor your Kubernetes cluster, including Prometheus.

In this guide, you’ll learn about the benefits of using Kubernetes operators. You’ll also learn how to deploy and use the Prometheus Operator to configure and manage Prometheus instances in your Kubernetes cluster. And finally, you’ll discover how to deploy Grafana in your Kubernetes cluster to help analyze and visualize the health of your Kubernetes clusters.

Why monitor Kubernetes clusters with Prometheus?

Prometheus is an open source tool that provides monitoring and alerting for cloud native environments. It collects metrics data from these environments and stores them as time series data, recording information with a timestamp in memory or local disk.

You can install and configure Prometheus on your Kubernetes cluster using a Kubernetes operator like Prometheus Operator.

What are Kubernetes operators?

Kubernetes operators are software extensions that extend the functionalities of the Kubernetes API in configuring and managing other Kubernetes applications. They make use of custom resources to manage these applications and their components.

Kubernetes controllers

In Kubernetes, there are control loops called Kubernetes controllers that monitor the state of the Kubernetes cluster to make sure it’s similar to or equal to the desired state. For instance, if you want to deploy a new containerized application, you create a deployment object in the cluster with the necessary details using the kubectl client or the Kubernetes dashboard. The Kubernetes controller receives information about this new object and then performs the required action to deploy your application to the cluster. Likewise, when you edit the object, the controller receives the update and performs the necessary action.

Kubernetes operators

Kubernetes operators use this Kubernetes controller pattern in a slightly different way. They’re application-specific, meaning different applications can have their own operators (which can be found on OperatorHub.io). The operators monitor the applications in the cluster and perform the required tasks to ensure the application functions in the desired state, which you configure in your custom resource definition (CRD).

Deploying Prometheus instances manually without using the Prometheus Operator or a Prometheus Helm chart can be hectic and time-consuming. You’d need to configure certain Kubernetes objects like ConfigMap, secrets, deployments, and services, which the Prometheus Operator abstracts on your behalf. The Prometheus Operator also helps you manage dynamic updates of resources, such as Prometheus rules with zero downtime, among other benefits.

Benefits of using Kubernetes operators

Operators provide multiple benefits to the Kubernetes ecosystem.

1. Packaging, deploying, and managing applications

Kubernetes operators provide smoother application deployment and management. For instance, cloud native applications with complex installation procedures and maintenance steps can be handled more easily, because you can create custom resources to automate and simplify complex installation procedures. Operators also improve the management of stateful applications like databases, so it’s easier to perform database backups and configure database applications. Using operators enables you to automate these and other complex processes.

2. Performing basic operations using kubectl

Since Kubernetes operators extend the functionality of the Kubernetes API server, they also allow you to use the kubectl client to run basic commands for custom resources such as GET and LIST, letting you manage your custom resources with commands you are already familiar with in the terminal.

3. Facilitating resource monitoring

Because Kubernetes operators perform custom tasks on an application based on the CRDs, they continuously monitor the application and cluster to check for anything that is out of place. If there is a misalignment in the application, like if the application isn’t in its expected state, the operators correct it automatically. This saves the Kubernetes administrator the trouble of identifying and correcting problems on the cluster.

How to deploy and configure the Prometheus Operator in Kubernetes

As noted earlier, Prometheus works well for monitoring your application and resources in your Kubernetes cluster. Below, we’ll show you how to use the Prometheus Operator to deploy and configure the Prometheus instance in your Kubernetes cluster.

Prerequisites

You need the following for this guide:

- A running Kubernetes cluster. You can use minikube, which supports Linux, Mac, and Windows, to set up a local cluster on your computer.

- A kubectl client installed on your computer.

Deploying the Prometheus Operator

You’re going to deploy Prometheus Operator in your cluster, then configure permission for the deployed operator to scrape targets in your cluster.

You can deploy by applying the bundle.yaml file from the Prometheus Operator GitHub repository:

kubectl apply -f https://raw.githubusercontent.com/prometheus-operator/prometheus-operator/main/bundle.yamlIf you run into the error The CustomResourceDefinition "prometheuses.monitoring.coreos.com" is invalid: metadata.annotations: Too long: must have at most 262144 bytes, then run the following command:

kubectl apply -f https://raw.githubusercontent.com/prometheus-operator/prometheus-operator/main/bundle.yaml --force-conflicts=true --server-side=trueThis command allows you to deploy Prometheus Operator CRD without Kubernetes altering the deployment when it encounters any conflicts while installing the operator in your cluster. In this scenario your cluster complains of a long metadata annotation that is beyond the limit provided by Kubernetes. However, --force-conflicts=true allows the installation to continue without the warning stopping it. Additionally, --force-conflicts only works with --server-side. For more information on –server-side and --force-conflicts click here.

Once you’ve entered the command, you should get an output similar to this:

customresourcedefinition.apiextensions.k8s.io/alertmanagerconfigs.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/alertmanagers.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/podmonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/probes.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheuses.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/prometheusrules.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/servicemonitors.monitoring.coreos.com created

customresourcedefinition.apiextensions.k8s.io/thanosrulers.monitoring.coreos.com created

clusterrolebinding.rbac.authorization.k8s.io/prometheus-operator created

clusterrole.rbac.authorization.k8s.io/prometheus-operator created

deployment.apps/prometheus-operator created

serviceaccount/prometheus-operator created

service/prometheus-operator createdWhen you run kubectl get deployments, you can see that the Prometheus Operator is deployed:

NAME READY UP-TO-DATE AVAILABLE AGE

prometheus-operator 1/1 1 1 6m1sNext, you’ll install role-based access control (RBAC) permissions to allow the Prometheus server to access the Kubernetes API to scrape targets and gain access to the Alertmanager cluster. To achieve this, you’ll deploy a ServiceAccount. Create a file named prometheus_rbac.yaml and paste the following content into it:

apiVersion: v1

kind: ServiceAccount

metadata:

name: prometheus

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

name: prometheus

rules:

- apiGroups: [""]

resources:

- nodes

- nodes/metrics

- services

- endpoints

- pods

verbs: ["get", "list", "watch"]

- apiGroups: [""]

resources:

- configmaps

verbs: ["get"]

- apiGroups:

- networking.k8s.io

resources:

- ingresses

verbs: ["get", "list", "watch"]

- nonResourceURLs: ["/metrics"]

verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: prometheus

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: prometheus

subjects:

- kind: ServiceAccount

name: prometheus

namespace: defaultThen, apply the file to your cluster:

kubectl apply -f prometheus_rbac.yamlYou should get the following response:

serviceaccount/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus createdDeploying Prometheus

Now that you have deployed the Prometheus Operator into your cluster, you’re going to create a Prometheus instance using a Prometheus CRD defined in a YAML file. Create a file named prometheus_instance.yaml and paste the following:

apiVersion: monitoring.coreos.com/v1

kind: Prometheus

metadata:

name: prometheus

spec:

serviceAccountName: prometheus

serviceMonitorSelector: {}

resources:

requests:

memory: 300MiThen, run the following:

kubectl apply -f prometheus_instance.yamlIn the YAML file above, the serviceAccountName references the ServiceAccount you created earlier for the ClusterRoleBinding. serviceMonitorSelector points to a ServiceMonitor that allows Prometheus to monitor specific services in your cluster. If you wanted Prometheus to select all existing ServiceMonitors, you’d assign {} to the serviceMonitorSelector key; if you didn’t want it to select any ServiceMonitor, you wouldn’t include it in the Prometheus CRD.

To select a particular ServiceMonitor, use something like this:

serviceMonitorSelector:

matchLabels:

app: frontendAfter you apply the prometheus_instance.yaml file, a prometheus-operated service is created, which you can see when you run kubectl get svc.

Now, access the server by forwarding a local port to the Prometheus service:

kubectl port-forward svc/prometheus-operated 9090:9090Open the URL http://localhost:9090 in your browser to see a page similar to the image below:

Configuring Prometheus to monitor services and applications

In a cluster with many different applications running simultaneously, you might not want a single Prometheus instance scraping metrics from all of them. You likely have specific applications in mind that you want to scrape metrics from, and you can specify them with the ServiceMonitor CRD.

The ServiceMonitor CRD enables you to customize how you want Prometheus to operate in terms of scraping metrics. You can configure Prometheus to scrape metrics from all services that match the label Frontend or Staging. You can even configure the interval rate at which Prometheus scrapes metrics from a set of services. The ServiceMonitor CRD gives you enormous power over how Prometheus monitors services in your cluster.

Create a ServiceMonitor CRD

Next, you’re going to create an example ServiceMonitor CRD. Create a file named service_monitor.yaml and paste the following:

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

name: prometheus

name: prometheus

spec:

endpoints:

- interval: 30s

targetPort: 9090

path: /metrics

namespaceSelector:

any: true

selector:

matchLabels:

operated-prometheus: "true"The code tells Prometheus to scrape services with the operated-prometheus: "true" label every thirty seconds, which you can access on the /metrics endpoint. Additionally, this means that Prometheus will scrape its own data and monitor its own health. It is also important to note that you should change the key-values of the matchLabels which is operated-prometheus: “true” to the service labels you want to monitor. The matchLabels key-value is just an example for this article.

Apply it to your Kubernetes cluster by running the following command:

kubectl apply -f service_monitor.yamlConfiguring Prometheus Operator using CRD objects

The Prometheus Operator provides different types of CRDs you can apply in your Kubernetes cluster to manage your Prometheus application. Some already mentioned in this guide include the Prometheus CRD and the ServiceMonitor CRD. Other options include PrometheusRules, Alertmanager, and PodMonitor. You can read more on the other CRD objects in the Github documentation.

Deploying Grafana in your Kubernetes cluster

Next, let’s review how to deploy Grafana in Kubernetes. Grafana allows you to query, visualize, alert on, and understand your metrics no matter where they are stored.

In your terminal, run the following command:

kubectl create deployment grafana --image=docker.io/grafana/grafana:latest You can run kubectl get deployments to confirm that Grafana has been deployed:

NAME READY UP-TO-DATE AVAILABLE AGE

grafana 1/1 1 1 7m27sNext, you’ll create a service for the Grafana deployment:

kubectl expose deployment grafana --port 3000Forward the port 3000 to the service by running the command below:

kubectl port-forward svc/grafana 3000:3000Open http://localhost:3000 in your browser to access your Grafana dashboard. Log in with admin as the username and password. This redirects you to a page where you’ll be asked to change your password; afterward, you’ll see the Grafana homepage:

Click Data Sources, then select Prometheus and fill in your configuration details, such as your Prometheus Server URL, access, auth type, and scrape intervals.

You can’t use http://localhost:9090 as your HTTP URL because Grafana won’t have access to it. You must expose prometheus using a NodePort or LoadBalancer.

Create a file named expose_prometheus.yaml and paste the following:

apiVersion: v1

kind: Service

metadata:

name: prometheus

spec:

type: NodePort

ports:

- name: web

nodePort: 30900

port: 9090

protocol: TCP

targetPort: web

selector:

prometheus: prometheusRun kubectl apply -f expose_prometheus.yaml. Grafana will be able to pull the metrics from http://<node_ip>:30900. To view the <node_ip>, run kubectl get nodes -o wide.

Enter http://<node_ip>:30900 in the URL box, then click Save & Test:

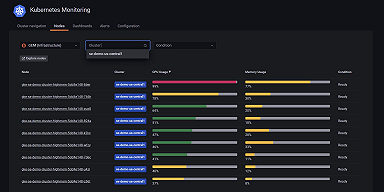

Creating a Grafana dashboard to monitor Kubernetes events

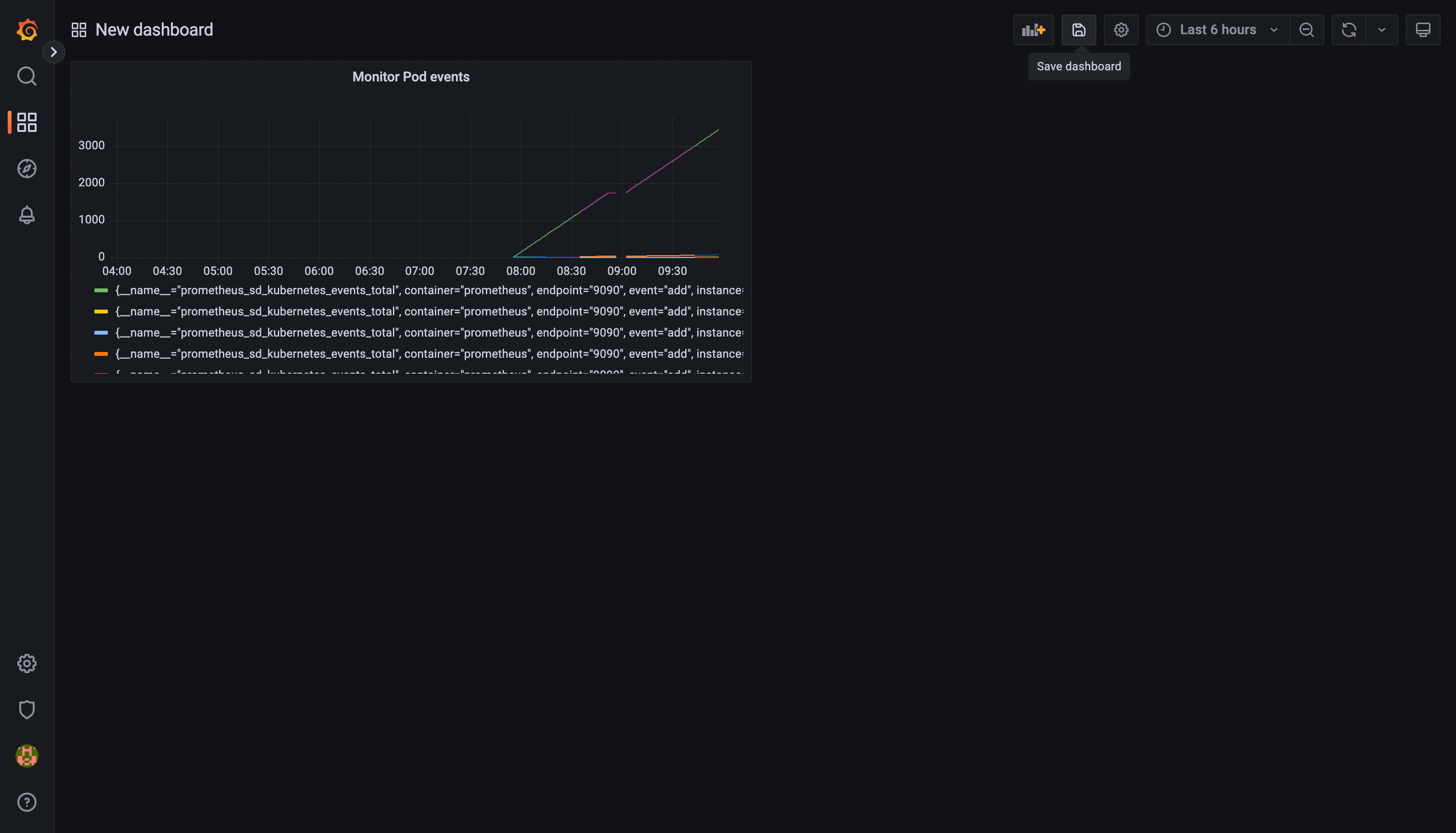

Let’s create a dashboard that shows a graph for the total number of Kubernetes events handled by a Prometheus pod. Hover over the panel on the left of the screen and select Dashboards > New dashboard, then select Add a new panel.

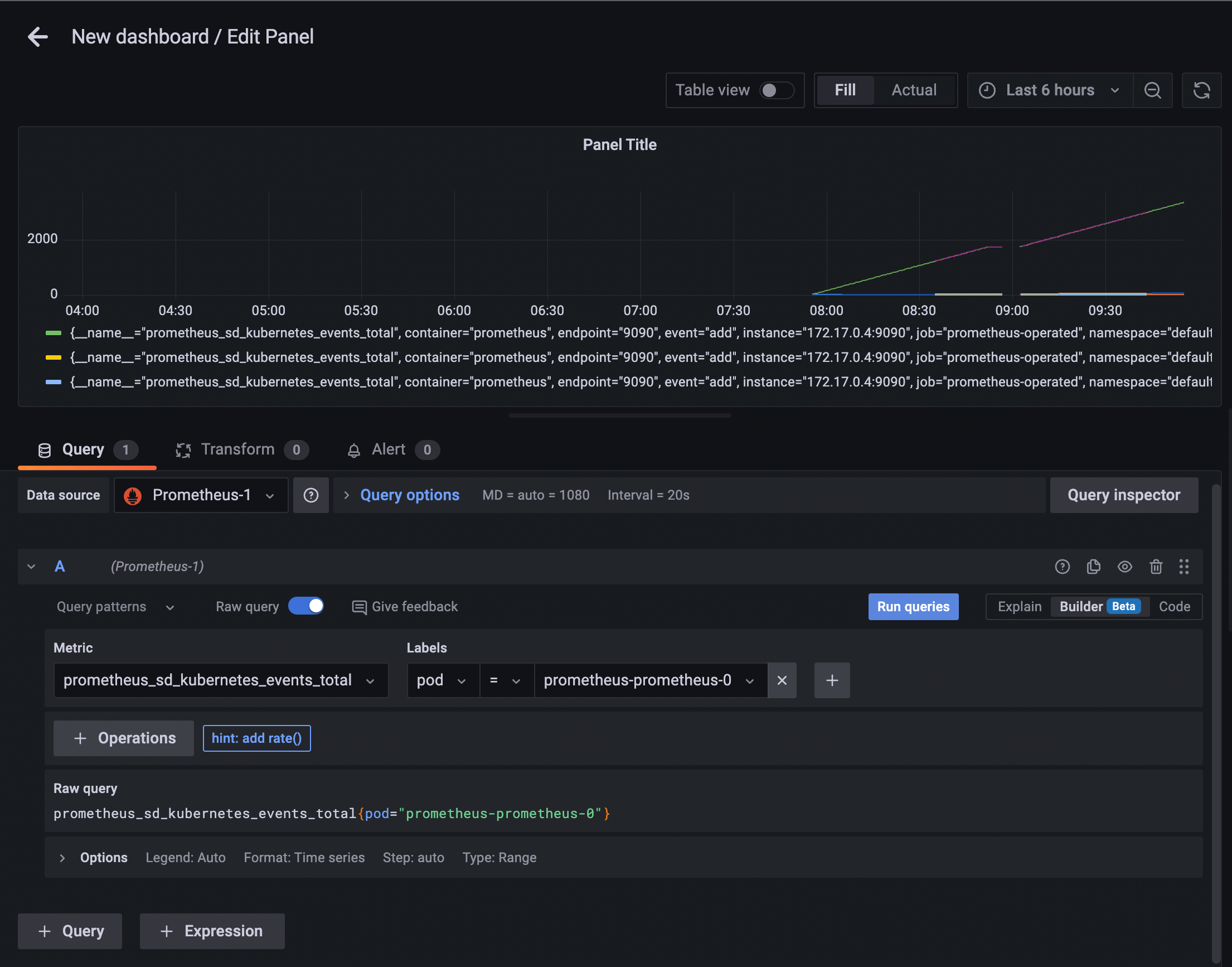

Select Prometheus as your Data source, choose prometheus_sd_kubernetes_events_total for the metric and prometheus-prometheus-0 in the labels input or you can choose any metric and labels you want to monitor. Then, click Run queries:

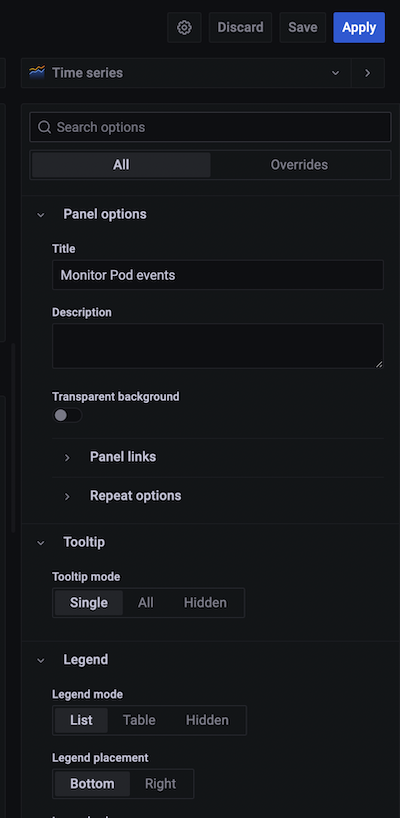

On the right-hand side of the dashboard panel configuration, you can configure details such as graph styles, the position of legends, and the title of the graph:

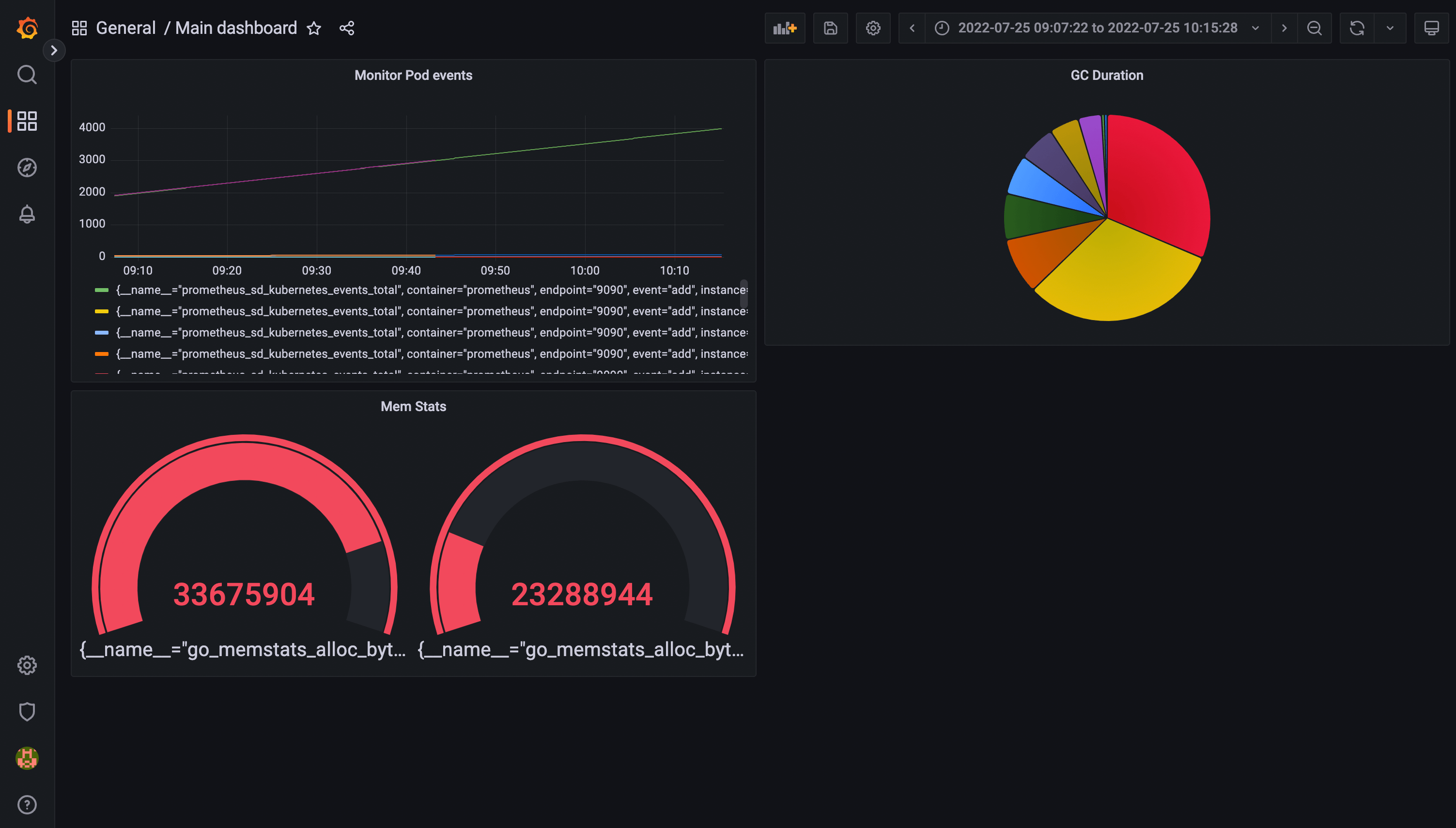

Click Apply once you’re done. You’ll be redirected to a new page containing your dashboard. You can add more dashboard panels by clicking the Add panel button or finish creating your dashboard by clicking the Save dashboard button:

Click the Save button to save your dashboard with a custom name:

Now that your dashboard is configured, you can add as many panels as you want to monitor different metrics scraped from your Prometheus instance in your cluster:

Conclusion

Kubernetes administrators and users often struggle to deploy and manage complex applications, but Kubernetes operators help. Application-specific operators simplify the deployment and management processes from the Kubernetes administrator to the Kubernetes API. The Prometheus Operator in particular makes managing Prometheus easier, with its CRDs that enable you to more easily scrape cluster and application metrics for monitoring.

While you could view an operator as yet another abstract layer to maintain in an already complex system, the efficiency it offers is not to be lightly dismissed. Automating configuration and operational processes frees up time that can be put to better, more innovative use.

If you’re interested in monitoring your Kubernetes clusters but don’t want to do it all on your own, we offer Kubernetes Monitoring in Grafana Cloud — the full solution for all levels of Kubernetes usage that gives you out-of-the-box access to your Kubernetes infrastructure’s metrics, logs, and Kubernetes events as well as prebuilt dashboards and alerts. Kubernetes Monitoring is available to all Grafana Cloud users, including those in our generous free tier. If you don’t already have a Grafana Cloud account, you can sign up for a free account today!