Introducing programmable pipelines with Grafana Agent Flow

The Grafana Agent team is excited to announce Grafana Agent Flow, our newest experimental feature in today’s Grafana Agent v0.28.0 release.

Flow is an easier and more powerful way to configure, run, and debug the Grafana Agent! 🎉

Why we built Grafana Agent Flow

Grafana Agent is a telemetry collector, optimized for the Grafana LGTM (Loki, Grafana, Tempo, Mimir) stack.

When Grafana Agent first launched, it worked like a stripped-down Prometheus, keeping only the functionality needed for scraping and remote_write. The Agent was later extended to embed support for logs and traces using Promtail and the OpenTelemetry Collector, respectively. It was also the basis for what is now the Prometheus Agent.

Grafana Agent has been successful in unifying the collection of all telemetry signals in one place, adding useful scaling mechanisms and general-purpose integrations. However, the Agent team wanted to provide a more cohesive user experience that matches the mental model behind building telemetry pipelines.

Flow aims to achieve two goals at the same time:

- Reduce the learning curve: Allow new users to easily set up, inspect, and iterate on their observability pipelines.

- Empower experienced users: Allow power users to combine components in novel ways, enabling them to achieve complex workflows without the need for dedicated Agent features.

How Grafana Agent Flow works

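Imagine you want to monitor a remote server. On a piece of paper, you might sketch the pipeline as three connected steps: node_exporter exposes the server's metrics, a scrape job collects them, and remote_write sends them to your metrics backend.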

How would we go about expressing this as an Agent configuration?

In the current “static” Agent mode, the YAML might look like:

```yaml
metrics:
  global:
    remote_write:
      - url: "<url>"
        basic_auth:
          username: "<user>"
          password: "<pass>"
  configs:
    - name: default
      scrape_configs:
        - job_name: local_scrape
          static_configs:
            - targets: ['127.0.0.1:12345']
              labels:
                cluster: 'localhost'

integrations:
  node_exporter:
    enabled: true
    set_collectors:
      - "cpu"
      - "diskstats"
```

The Agent's YAML subsections are compatible with the Prometheus configuration format, but they have problems. It's easy to make mistakes when copying and pasting, and the hierarchy only makes sense if you're already familiar with the scheme. Most importantly, the YAML doesn't represent the flow of data that we sketched above at all.

Here’s where Flow comes into play. Flow introduces the concept of components — small, reusable blocks that work together to form programmable pipelines.

The previous workflow can be defined in a more intuitive way by chaining the three components together:

```river
prometheus.integration.node_exporter {
  set_collectors = ["cpu", "diskstats"]
}

prometheus.scrape "my_scrape_job" {
  targets    = prometheus.integration.node_exporter.targets
  forward_to = [prometheus.remote_write.default.receiver]
}

prometheus.remote_write "default" {
  endpoint {
    url = "<url>"

    http_client_config {
      basic_auth {
        username = "<user>"
        password = "<pass>"
      }
    }
  }
}
```

Let's look at how this works in more detail.

Component arguments for modifying behavior

The backbone of Flow is River, a new Terraform-inspired declarative configuration language built around expressions that tie components together.

Each component has a set of arguments that modify its behavior. Here, the set_collectors argument defines the data we want the component to report:

```river
prometheus.integration.node_exporter {
  set_collectors = ["cpu", "diskstats"]
}
```

Arguments are not restricted to literal values. They can also be dynamic, computed with River's standard library of utility functions such as env("HOME"), concat(list1, list2), or json_decode(local.file.scheme.content).
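As a rough sketch of dynamic arguments, the blocks below would replace the corresponding remote_write and scrape blocks in the example above. They rest on two assumptions that are not part of the original example. First, the remote_write URL and username come from environment variables, whose names REMOTE_WRITE_URL and REMOTE_WRITE_USER are hypothetical. Second, you also want to scrape the Agent's own endpoint at 127.0.0.1:12345, as the local_scrape job in the static-mode YAML does. In the sketch, env() reads an environment variable and concat() joins the node_exporter targets with a hand-written target list.

```river
// Sketch only: REMOTE_WRITE_URL and REMOTE_WRITE_USER are hypothetical
// environment variable names chosen for this example.
prometheus.remote_write "default" {
  endpoint {
    url = env("REMOTE_WRITE_URL")

    http_client_config {
      basic_auth {
        username = env("REMOTE_WRITE_USER")
        password = "<pass>"
      }
    }
  }
}

prometheus.scrape "my_scrape_job" {
  // concat() appends a static target for the Agent's own HTTP server,
  // roughly mirroring the local_scrape job (and its cluster label) from the YAML.
  targets    = concat(prometheus.integration.node_exporter.targets, [{"__address__" = "127.0.0.1:12345", "cluster" = "localhost"}])
  forward_to = [prometheus.remote_write.default.receiver]
}
```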

Export fields for building pipelines

Components can also emit a number of exports as output values. In this case, the node_exporter component exports a targets field that can be used to collect the reported metrics:

```river
prometheus.scrape "my_scrape_job" {
  scrape_interval = "10s"
  targets         = prometheus.integration.node_exporter.targets
  forward_to      = [prometheus.remote_write.default.receiver]
}
```

References to these export fields are what bind components together into a pipeline.
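Because forward_to takes a list, the same export-binding mechanism can plausibly fan metrics out to more than one destination. The sketch below would replace the scrape block above. It is illustrative only: the second prometheus.remote_write component, labeled "backup", and its <backup_url> placeholder are hypothetical additions, not part of the original example.

```river
prometheus.scrape "my_scrape_job" {
  scrape_interval = "10s"
  targets         = prometheus.integration.node_exporter.targets
  // Scraped metrics are forwarded to every receiver listed here.
  forward_to = [
    prometheus.remote_write.default.receiver,
    prometheus.remote_write.backup.receiver,
  ]
}

// Hypothetical second destination for the same metrics.
prometheus.remote_write "backup" {
  endpoint {
    url = "<backup_url>"
  }
}
```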

Event-driven evaluation for dynamic updates

Under the hood, Flow orchestrates components through the component controller. The controller is responsible for scheduling components, reporting their health and debug status, and evaluating their arguments and exports in an event-driven way.

The components are wired together in a directed acyclic graph (DAG). Whenever a component's exports change for any reason, every dependent component that references those exports is re-evaluated and updated in place.
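To make the dependency graph more concrete, here is a sketch in which the scrape targets come from a JSON file instead of node_exporter. It assumes a hypothetical file at /etc/agent/targets.json containing a JSON array of objects with string values, such as [{"__address__": "127.0.0.1:9100"}]. The component labels "targets" and "file_targets" are illustrative, and the sketch also assumes the prometheus.remote_write "default" component from the example above is present. In this graph, the scrape component depends on both local.file.targets (through its content export) and prometheus.remote_write.default (through its receiver export). Editing the file should therefore cause json_decode() to be re-evaluated and the scrape targets to be updated in place.

```river
// Hypothetical path; the file is expected to hold a JSON array of objects
// whose values are all strings, e.g. [{"__address__": "127.0.0.1:9100"}].
local.file "targets" {
  filename = "/etc/agent/targets.json"
}

prometheus.scrape "file_targets" {
  // Re-evaluated whenever local.file.targets.content changes.
  targets    = json_decode(local.file.targets.content)
  forward_to = [prometheus.remote_write.default.receiver]
}
```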

For example, we could change the prometheus.remote_write component definition so that it uses a new component to fetch its password from a file (local.file.token.content):

```river
prometheus.remote_write "default" {
  endpoint {
    url = "<url>"

    http_client_config {
      basic_auth {
        username = "<user>"
        password = local.file.token.content
      }
    }
  }
}
```

Each time the file contents are updated, the change is propagated to prometheus.remote_write so that it authenticates with the new password. It also reaches prometheus.scrape, so that it keeps forwarding metrics correctly.
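The remote_write snippet references local.file.token.content but does not show where that export comes from. Below is a minimal sketch of the missing component. The file path is a hypothetical placeholder, and only the filename argument is set.

```river
// Reads the file and exposes its contents as local.file.token.content.
// The path is a placeholder; point it at your own password file.
local.file "token" {
  filename = "/var/run/secrets/remote_write_password"
}
```

With this block in the same file, the label "token" is what makes the reference local.file.token.content resolve.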

How to run Grafana Agent Flow

If you have Docker installed, you can run this example with the following commands:

```shell
$ mkdir flow_example && cd flow_example
$ curl https://raw.githubusercontent.com/grafana/agent/main/docs/sources/flow/tutorials/assets/runt.sh -O && bash ./runt.sh example.river
```

Otherwise, feel free to fetch the binary for your platform from the v0.28.0 release page and run:

$ curl https://raw.githubusercontent.com/grafana/agent/main/docs/sources/flow/tutorials/assets/flow_configs/example.river -O

$ export EXPERIMENTAL_FLOW_ENABLED=true

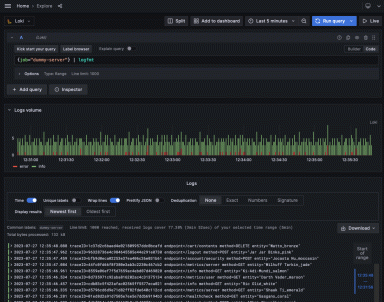

$ ./agent run example.riverOnce the pipeline is up and running, navigate to http://localhost:12345. The new web UI contains both the high-level status of all components and more detailed health and debug information for each one.

In the current “static” Agent mode, it's often difficult to get immediate feedback on the effect of configuration changes. With the component-based approach, each Agent subsystem exposes its own fine-grained health and debug information, giving you a real-time view of what's happening at each step of your pipeline.

You can play around by changing the example.river configuration file and sending a request to http://localhost:12345/-/reload to pick up the configuration changes.

What’s next?

Because Flow is experimental, the current proof of concept only supports Prometheus metrics pipelines; support for logs and traces is on the way. We believe that Flow can serve as the foundation of a more powerful Grafana Agent, and there's a lot to be excited about. Rest assured, we'll continue to maintain and improve the current “static” Agent experience, which remains the default mode.

We’ve prepared a number of Grafana Agent Flow tutorials for you to go through. You might also want to check out the extensive Grafana Agent Flow documentation or dive into the config language reference to get a more complete picture of what it’s all about.

As part of Grafana's “big tent” philosophy, OpenTelemetry Collector components are coming soon. These will allow users to seamlessly integrate the Prometheus and OpenTelemetry Collector ecosystems.

Stay tuned for more news, as we'll gradually expand the set of available components. Examples include components that read from Prometheus Operator CRDs and components that integrate with HashiCorp Vault. Our long-term roadmap also includes new clustering mechanisms and exploring a thin abstraction for sharing component setups, à la Terraform Modules.

Questions? Join the Grafana Agent community call!

You’re all welcome to come meet us on the next Agent Community Call to share your feedback and discuss everything about Flow!

You can also let us know what you think on our dedicated GitHub discussion for Grafana Agent Flow feedback.

If you’re not already using Grafana Cloud — the easiest way to get started with observability — sign up now for a free 14-day trial of Grafana Cloud Pro, with unlimited metrics, logs, traces, and users, long-term retention, and access to one Enterprise plugin.