Grafana Tempo 1.3 released: backend datastore search, auto-forget compactors, and more!

Grafana Tempo 1.3 has been released! We are proud to add the capability to search the backend datastore. This feature will also appear soon in Grafana Cloud Traces.

If you want to dig through the nitty-gritty details, you can always check out the v1.3 changelog. If that's too much, this post covers the big-ticket items.

You can also check out a live demo of Tempo 1.3 in our recent webinar, “Distributed tracing in Grafana: From Tempo OSS to Enterprise,” which is available to watch on demand.

New features in Grafana Tempo 1.3

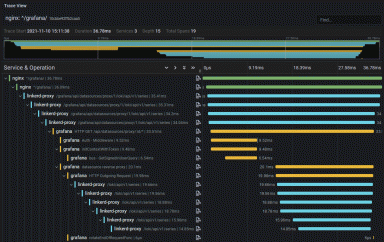

Backend datastore search

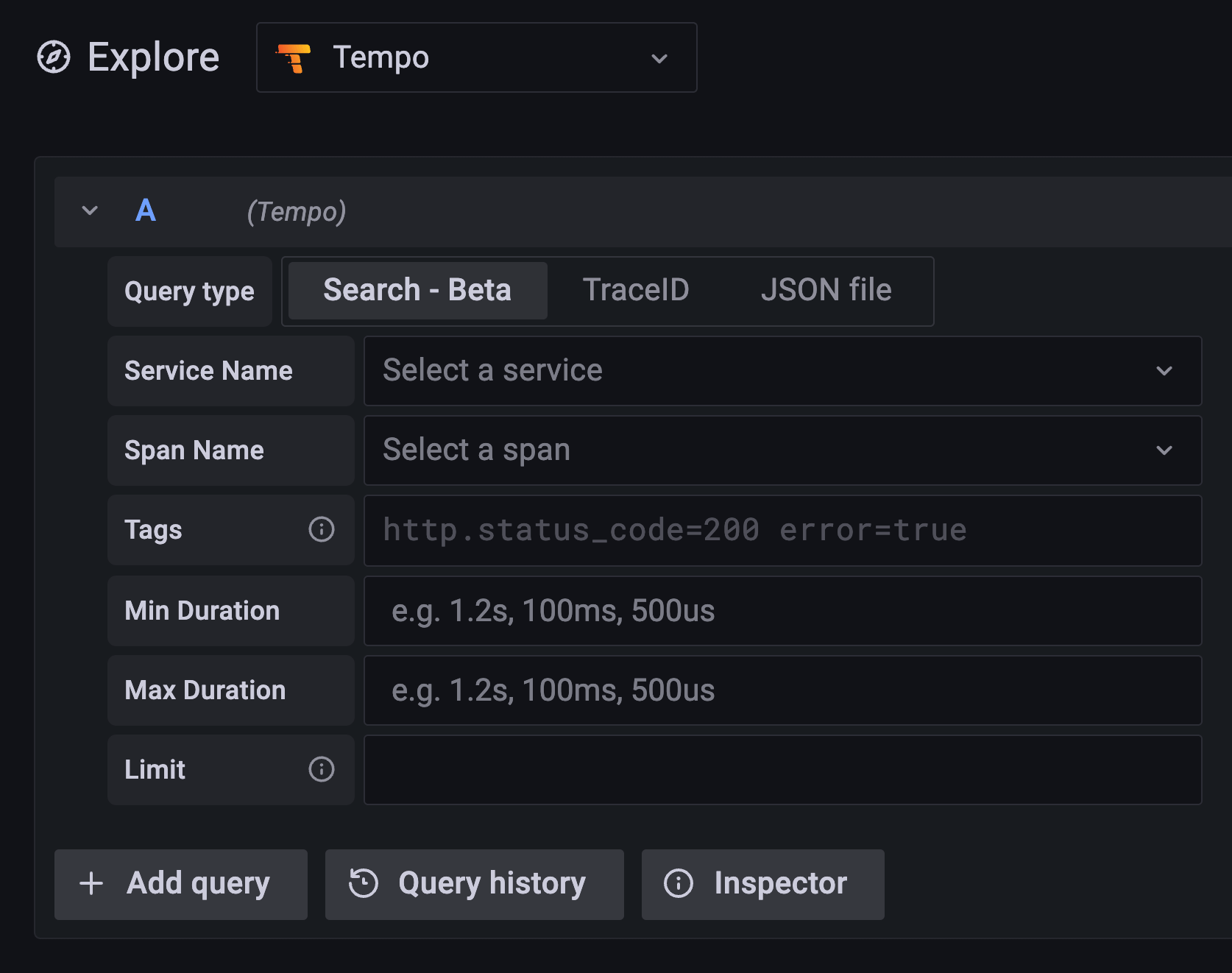

Backend datastore search is now available in Tempo 1.3! Refer to the documentation to set it up with Grafana. The Grafana UI is the same one used to search recent traces.

In our internal Tempo cluster, we currently ingest ~180MB/s. Unfortunately, our searches can feel more like batch jobs: searching for a few parameters can sometimes take 10-20 seconds, which is not ideal. We are committed to reducing this by an order of magnitude to provide the snappy searches you are used to in projects such as Loki. Our main constraint is our backend format. (Expect improvements in upcoming Tempo releases!)

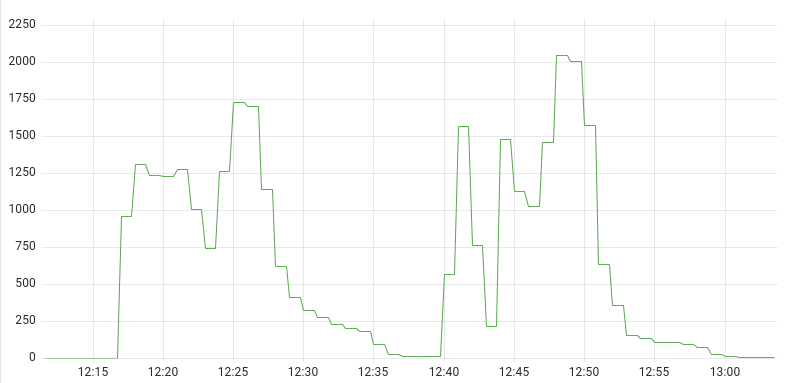

Internally, we are using serverless technologies to spawn thousands of jobs per query, which crack open your blocks and pore through the trace data underneath. Check out how to set up a serverless environment with Tempo in your installation. Internally we are hitting thousands of serverless instances:

Above: Count of serverless instances during search.

Note that serverless is not required for backend datastore search. If you have a smaller load, the queriers themselves may be able to get the job done efficiently.
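The fan-out idea (one query split into many independent per-block jobs whose results are merged) can be sketched in a few lines of Python. This is a toy model, not Tempo's implementation: `Block`, `search_block`, and `search` are hypothetical names, a thread pool stands in for serverless functions or queriers, and blocks hold plain tag dictionaries instead of encoded trace data.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical in-memory stand-in for one backend block."""
    block_id: str
    # Each entry is (trace_id, tags); a real block would hold encoded trace data.
    traces: list = field(default_factory=list)


def search_block(block, tags):
    """One job: scan a single block and return IDs of traces matching every tag."""
    return [
        trace_id
        for trace_id, trace_tags in block.traces
        if all(trace_tags.get(k) == v for k, v in tags.items())
    ]


def search(blocks, tags, limit, workers=8):
    """Fan one query out as one job per block and merge results up to `limit`."""
    results = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map yields results in block order. Breaking out early stops
        # collecting; jobs that are already running still run to completion.
        for matches in pool.map(lambda b: search_block(b, tags), blocks):
            results.extend(matches)
            if len(results) >= limit:
                break
    return results[:limit]
```

Because each job only needs its own block, the number of jobs grows with the number of blocks searched, which is why a large cluster benefits from an elastic pool of workers while a small one can rely on its queriers.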

Auto-forget compactors

Some Tempo users have seen compactors remain in the consistent hash ring in an unhealthy state, even after exiting gracefully. We have not encountered this at Grafana Labs, which has made the issue difficult to diagnose and fix.

In Tempo 1.3, we updated our ring code to auto-forget compactors after two heartbeat timeout periods pass without a heartbeat. This defaults to two minutes (twice the one-minute heartbeat timeout shown below), and the timeout is configurable with:

```yaml
compactor:
  ring:
    heartbeat_timeout: 1m0s
```

This change should reduce the operational burden of running Tempo for those who were seeing the issue.
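The auto-forget rule can be modeled as a simple time comparison: a compactor that misses one timeout period is unhealthy, and one that misses two is dropped from the ring. This is a hypothetical sketch, not Tempo's ring code; `RingMember`, `is_healthy`, `auto_forget`, and `AUTO_FORGET_PERIODS` are illustrative names, and the only values taken from this post are the two periods and the one-minute timeout.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Number of heartbeat timeout periods an unhealthy member may linger before
# it is forgotten; the post describes this as two.
AUTO_FORGET_PERIODS = 2


@dataclass
class RingMember:
    """Hypothetical ring entry: a compactor and the time of its last heartbeat."""
    name: str
    last_heartbeat: datetime


def is_healthy(member, now, heartbeat_timeout):
    """A member is healthy if it sent a heartbeat within one timeout period."""
    return now - member.last_heartbeat <= heartbeat_timeout


def auto_forget(members, now, heartbeat_timeout=timedelta(minutes=1)):
    """Split members into (kept, forgotten) names.

    A member is forgotten once more than AUTO_FORGET_PERIODS * heartbeat_timeout
    has passed since its last heartbeat; with a 1m timeout that is 2 minutes.
    """
    cutoff = AUTO_FORGET_PERIODS * heartbeat_timeout
    kept, forgotten = [], []
    for member in members:
        if now - member.last_heartbeat > cutoff:
            forgotten.append(member.name)
        else:
            kept.append(member.name)
    return kept, forgotten
```

In this model, a compactor whose last heartbeat was 90 seconds ago is unhealthy but still in the ring, while one silent for three minutes is removed, so a stale entry no longer lingers indefinitely after a compactor exits.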

Better protection from large traces

Traces that approach 100MB or more can be challenging for Tempo in several ways. Ingesting, compacting, and querying them can all tax the various components, because combining traces of this size requires an extreme amount of memory.

Tempo attempts to restrict trace size using the following configuration, which defaults to 50MB:

```yaml
overrides:
  max_bytes_per_trace: 50000000
```

In Tempo 1.2 and earlier versions, we stopped guarding against ingesting a large trace once the trace dropped out of memory. This generally happens when a few seconds pass without any new spans received for the trace.

In Tempo 1.3, we updated the code to keep refusing the large trace until the head block is cut, which can take 15-30 minutes. Because teams pushing enormous traces over the course of hours or days can still circumvent this, we have additional ideas in the works (such as conversations around max trace size and improving compactors).
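The change from 1.2 to 1.3 can be modeled as a limiter whose memory of per-trace sizes lasts until the head block is cut, rather than until the trace goes idle and leaves memory. This is a hypothetical sketch, not Tempo code: `TraceSizeLimiter`, `push`, and `cut_head_block` are illustrative names, treating a limit of 0 as "disabled" is an assumption of this sketch, and refusing every later span of a trace once it has crossed the limit is a simplifying choice.

```python
class TraceSizeLimiter:
    """Hypothetical per-ingester guard against oversized traces (toy model)."""

    def __init__(self, max_bytes_per_trace):
        self.max_bytes = max_bytes_per_trace  # 0 disables the check in this sketch
        self.sizes = {}         # trace ID -> bytes accepted since the last block cut
        self.too_large = set()  # trace IDs refused since the last block cut

    def push(self, trace_id, span_bytes):
        """Return True if the span is accepted, False if it is refused."""
        if trace_id in self.too_large:
            return False
        new_size = self.sizes.get(trace_id, 0) + span_bytes
        if self.max_bytes and new_size > self.max_bytes:
            self.too_large.add(trace_id)
            return False
        self.sizes[trace_id] = new_size
        return True

    def cut_head_block(self):
        # State is only reset when the head block is cut, not when a trace
        # goes idle, so a large trace stays refused for the block's lifetime.
        self.sizes.clear()
        self.too_large.clear()
```

The 1.2 behavior would correspond to clearing a trace's entry as soon as it is flushed from memory, which is exactly the window that let large traces back in.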

Breaking changes

Tempo 1.3 includes a few minor breaking changes. For most Tempo operators and users, they will have no impact.

- Support for the `Push` gRPC endpoint was removed from ingesters. This method has been deprecated since Tempo v1.0. If you are running a 1.x version, this does not affect you. If you are upgrading from a 0.x version, we recommend updating to Tempo v1.2 first and then upgrading to Tempo v1.3.

- Upgrading our OTel Collector dependency changed the default OTel gRPC port from 55680 to 4317. If you explicitly specify the port in your configuration, this change will not affect you.

- Two search-related configuration settings were relocated during the addition of full backend search. These settings:

  ```yaml
  query_frontend:
    search_default_result_limit: <int>
    search_max_result_limit: <int>
  ```

  were relocated to:

  ```yaml
  query_frontend:
    search:
      default_result_limit: <int>
      max_result_limit: <int>
  ```

What's next?

We need to improve our backend trace format. I repeat: We really need to improve our backend trace format. We currently store traces marshalled to protobuf and batched together as giant blocks in object storage. This has worked well for trace-by-ID lookup, but it's not quite the right shape for efficient search.
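A toy model makes the mismatch concrete: when encoded traces are sorted by trace ID, finding one trace means a binary search and a single decode, but a tag lives inside each encoded payload, so a tag search has to decode every record. Assumptions: `ToyBlock` and its methods are hypothetical, JSON stands in for protobuf, and the sorted ID list stands in for whatever index a real block keeps; this is not Tempo's block format.

```python
import bisect
import json
from dataclasses import dataclass, field


@dataclass
class ToyBlock:
    """Toy stand-in for a block: encoded traces sorted by trace ID."""
    ids: list = field(default_factory=list)
    payloads: list = field(default_factory=list)
    decodes: int = 0  # counts how many records have been decoded

    @classmethod
    def build(cls, traces):
        block = cls()
        for trace_id in sorted(traces):
            block.ids.append(trace_id)
            block.payloads.append(json.dumps(traces[trace_id]))
        return block

    def _decode(self, i):
        self.decodes += 1
        return json.loads(self.payloads[i])

    def find_by_id(self, trace_id):
        # Binary search over the sorted IDs, then decode a single record.
        i = bisect.bisect_left(self.ids, trace_id)
        if i < len(self.ids) and self.ids[i] == trace_id:
            return self._decode(i)
        return None

    def search_tag(self, key, value):
        # Tags are only visible after decoding, so every record is decoded.
        return [
            self.ids[i]
            for i in range(len(self.ids))
            if self._decode(i).get(key) == value
        ]
```

With three traces, an ID lookup decodes one record while a tag search decodes all three; at billions of traces, that full decode is what makes search feel like a batch job.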

There are a lot of options on the table for the new backend format, and the team is hard at work evaluating and testing them. We need to move toward solutions that can retrieve one trace from among billions while also supporting tag-based search and, eventually, the more expressive TraceQL. You can attend our community calls for up-to-date news on search and other Tempo progress!

If you are interested in more Tempo news or search progress, please join us on the Grafana Labs Community Slack channel #tempo, post a question in the forums, reach out on Twitter, or join our monthly community call. See you there!

The easiest way to get started with Grafana Tempo is with Grafana Cloud, and our free forever tier now includes 50GB of traces along with 50GB of logs and 10K series of metrics. You can sign up for free.