A (de)bug’s life: Diagnosing and fixing performance issues in Grafana Loki's read path

Beep, beep, beeeeeeeep. Read path SLO page, again. And I’ve almost found the noisy neighbor!

That was me. And will probably be me again at some point in the future. As we continue to scale up the team that builds and runs Grafana Loki at Grafana Labs, I’ve decided to record how I find and diagnose problems in Loki. This will knock out a few birds with one stone: It forces me to write down the patterns I see in my investigations, and I can share that knowledge with our growing team and our community — those who run Loki at work, at home, or wherever.

Here, I’m going to walk through an actual investigation I did for Grafana Cloud Logs to illustrate how we can regularly use metrics to point us in the right direction, and then how to use logs to validate our guesses.

In the scenario outlined below, one tenant overconsumes resources, slowing things down for everyone. This case study uses Grafana dashboards, Prometheus, and Loki, which runs in a Kubernetes cluster.

Identify the problem

When our team gets paged, the first thing we’ll want to do is figure out what type of problem we’re looking at. Basically, are the requests finishing or are they just slooow? A bunch of HTTP 5xx’s is a lot scarier than some slow queries, so let’s attack the problem from that angle.

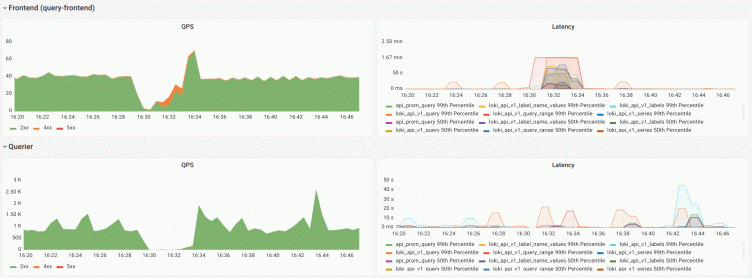

We’ll start by checking the Loki / Reads dashboard, which is included in the loki-mixin:

It looks like that’s a period of time in which our QPS (queries per second) nearly halted at the same time that our latencies skyrocketed. This makes me think that our read path is congested, queueing up queries and causing the queries that do execute to be very slow. We notice that all the query types see increased latencies during this surge, which supports the idea that either there aren’t enough queriers or that the queriers are overloaded.

Next, let’s pivot over to the Loki / Reads Resources dashboard, which is also included in the loki-mixin. This dashboard shows resource usage:

Oof, that’s a lot of memory! It looks like the querier pods jumped straight to their limits during this high-resource usage time period. Pivoting again to the Loki / Operational dashboard, we can also see this caused a bunch of the queriers to restart!

Well, that’s one way to go from bad to worse.

At this point, I feel pretty confident that our read path was entirely saturated with expensive queries that caused queriers to restart due to running out of memory. This, in turn, prevented other tenants from using these resources until queriers restarted. Afterward, we see more queries waiting in queue in the query scheduler:

Immediate action

We’ve identified the symptom as congestion, so what response should we take? Looking at the queue of waiting requests, it’s a good idea to scale out our read path, particularly the queriers. That will increase the query processing power for this Loki cluster and should help alleviate some of the load in the short term.

Tracking down the culprit

After adding some querier nodes, we use the same dashboards to notice that the problem hasn’t dissipated. Even though we’ve added queriers, the number of successfully processed QPS hasn’t changed. This is a big indication that if we increase the lanes on the highway but more cars can’t make it through, there is likely a traffic jam.

My first thought (simply because I’ve experienced this before) is that perhaps one tenant submitted queries which escape our noisy-neighbor controls. How can we identify the problem in a multi-tenant environment where we can’t distinguish how much memory or CPU is allocated to one tenant versus another?

We’ll first go to our Grafana dashboard to select the appropriate time range of our Prometheus metrics and then use “Explore” to capture the time range:

With the appropriate time range, switch to the Loki data source to extract some metrics from the querier logs for this same time period. Those metrics will help us answer the question: Which tenants consume most of the processing time in the cluster’s read path? Here’s the LogQL query:

# Who is eating the read path >:(

topk(10, sum(sum_over_time(

{cluster="<cluster>", namespace="<namespace>", container="querier"}

|= "metrics.go"

| logfmt

| unwrap duration(duration)

[5m]

)) by (org_id))The query results show us the following. The tenant identifiers are omitted:

As we can see in this graph, one tenant stands out dramatically from the others! I then ran this LogQL query with the < cluster >, < namespace >, and < offending_tenant > fields filled in, to see what type of queries were being run:

{cluster="<cluster>", namespace="<namespace>", container="query-frontend"}

|= "metrics.go"

| logfmt

| org_id="<offending_tenant>"It turns out that there were many expensive queries with extremely long lookback intervals. Think quantile_over_time(0.99, {foo=”bar”} | json | unwrap duration(response_time) [7d]). Loki splits this query by time and then needs to process 7 days worth of data for each data point, where much of this is reused. Even though we split intervals of approximately 30 minutes, each 30-minute period needs to grab the last 7 days worth of logs.

Voilà! We have our root cause.

Mitigation

In the short term, we can:

- Scale up the read path.

- Reduce the offending tenant’s

max_query_parallelismso they won’t be able to schedule as many queries at once, freeing up more of the read path for neighboring tenants. - Suggest they use recording rules for these recurring, expensive queries.

In the long term, we can:

- Scale up the read path.

- Better instrument the read path to identify/understand these issues faster and with more depth. I opened this PR after this debugging session!

- Find more effective ways to parallelize expensive queries like these, especially ones that query over long intervals.

- Implement better QoS (quality of service) controls, which make it harder for one tenant to overconsume their fair share of resources when a cluster is under load.

Parting thoughts

Phew, that was a lot to write. Thanks for making it this far! I always underestimate how difficult it is to document issues and solutions, but I hope that it helps others understand my thought processes and troubleshoot log issues faster.

As a bonus, I’ll leave one more fun query. (Please don’t mock my definition of the word “fun.”)

# out of slo instant queries by tenant

sum(count_over_time({cluster="prod-us-central-0",job="loki-prod/query-frontend"} |= "metrics.go" | logfmt | range_type="instant" and duration > 10s [1h])) by (org_id)

/ on () group_left

sum(count_over_time({cluster="prod-us-central-0",job="loki-prod/query-frontend"} |= "metrics.go" | logfmt | range_type="instant" and duration > 10s [1h]))

* 100This query tells us which percent of out-of-SLO instant queries are attributable to which tenant. I’ve used this to great effect today to discover that we had one tenant who was responsible for 98% of the out-of-SLO instant queries in an hour!

The easiest way to get started with Grafana Loki is with Grafana Cloud. We have a generous free forever tier that comes with 50GB of logs and plans for every use case. Sign up for free now!