A guide to deploying Grafana Loki and Grafana Tempo without Kubernetes on AWS Fargate

Zach is a Principal Cloud Platform Engineer working for Seniorlink on the Vela product team. He is a U.S. Army veteran, an avid rock climber, and an obsessive-compulsive systems builder.

At Seniorlink, we provide services and technology to support families caring for their loved ones at home. In the past two years we’ve expanded our programs across the United States, and so our need to observe our application systems has grown too.

We watched the development of Grafana Loki and Grafana Tempo closely as the products were announced. We planned our integration with Loki to replace an expensive and difficult-to-maintain Graylog (Elasticsearch) cluster, and to introduce distributed tracing with Tempo to increase our service observability.

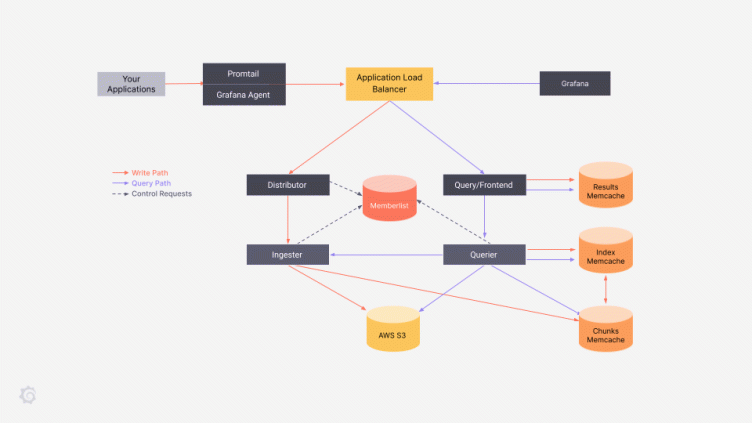

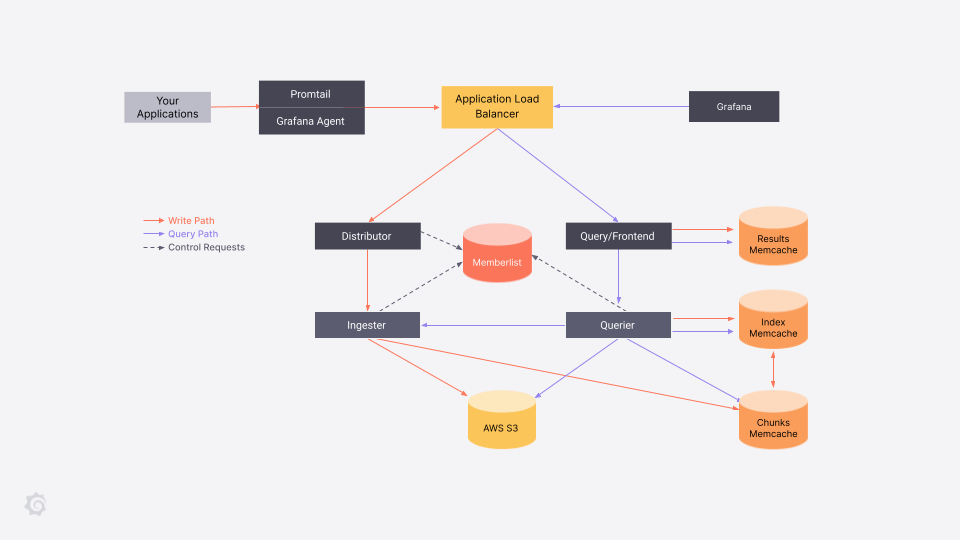

Loki and Tempo both inherit core pieces from Cortex and follow a similar architecture. Data is written through distributors and ingesters to a storage backend, and read through a query frontend and queriers (query workers). Each component scales independently. Understanding the system architecture, and how the write and read paths flow, will help greatly when implementing an AWS Fargate deployment.

Our applications are still primarily deployed on EC2. We're not yet at a scale or complexity where Kubernetes is a good fit, but we wanted our Loki and Tempo deployments to be as ephemeral as possible. We settled on a pure AWS ECS Fargate deployment for both systems. Fargate is AWS's serverless compute engine for containers, which lets us run container workloads without managing hosts.

The Grafana Labs team develops primarily for Kubernetes, though, so this post covers some of the challenges of deploying Loki or Tempo to AWS Fargate. There are no Helm or Jsonnet configurations to pull and deploy off the shelf for Fargate; we had to study the Grafana Labs team's system and rebuild it in our AWS infrastructure from scratch.

Task configuration

The first challenge of deploying to Fargate is configuration of the services.

Kubernetes offers many options for populating data into a pod (ConfigMaps, Secrets, and InitContainers, to name a few), but on Fargate, storage is ephemeral unless you mount EFS (NFS).

Fargate task definitions accept environment variables and secrets, but everything else must be built into the image or pulled at runtime. We wanted to avoid the cost and clunkiness of using EFS for just a few config files, or of installing the AWS CLI to pull config from S3. The Loki and Tempo configurations are complex enough that specifying everything as CLI arguments would be tedious and error-prone. We chose to use the Grafana Docker images as a base layer, adding a templated config file (using Go template syntax) and Gomplate, a small utility that renders Go templates from a variety of data sources.

The images are then published to our container registry (Amazon ECR). Loki and Tempo do not natively support configuration through environment variables, but Gomplate lets us work around that: the Fargate task injects values from AWS as environment variables, and Gomplate renders them into the config file.
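Because every templated value arrives through the task's environment, a misconfigured task definition could render an incomplete config. A minimal POSIX shell sketch of a guard that an entrypoint could run before gomplate; `require_env` is a hypothetical helper, not part of gomplate or the Grafana images:

```sh
# Hypothetical helper: fail fast if any named environment variable is unset or empty.
# Returns 0 when all are set, 1 if any are missing, 2 on an invalid variable name.
require_env() {
  status=0
  for name in "$@"; do
    # Only accept plain shell variable names, since the name is passed to eval
    case "$name" in
      ''|[0-9]*|*[!A-Za-z0-9_]*)
        echo "invalid variable name: $name" >&2
        return 2
        ;;
    esac
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required environment variable: $name" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `require_env REDIS_ENDPOINT || exit 1` placed before the gomplate command stops the container with a clear message instead of starting Loki with a half-rendered Redis endpoint.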

All of the Loki and Tempo services can share the same configuration file as long as the `target` microservice is specified by command-line parameter or environment variable, so you only need to maintain a single configuration file.
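As a sketch of how one image could serve every component: each ECS task definition sets a target-name variable (here the hypothetical `LOKI_TARGET`), and the entrypoint validates it before passing it to Loki's `-target` flag. The accepted list below is an assumption for a Loki 2.x microservices deployment; adjust it for the version and components you run.

```sh
# Hypothetical helper: validate the Loki target requested by the task definition.
# The accepted list is an assumption for a Loki 2.x microservices deployment.
resolve_target() {
  target="${1:-all}"   # "all" runs Loki in monolithic mode
  case "$target" in
    all|distributor|ingester|querier|query-frontend|table-manager|compactor|ruler)
      printf '%s\n' "$target"
      ;;
    *)
      echo "unknown Loki target: $target" >&2
      return 1
      ;;
  esac
}
```

In the entrypoint, `target=$(resolve_target "${LOKI_TARGET:-}") || exit 1` followed by `exec loki -config.file /etc/loki/loki-config.yml -target="$target"` keeps the config file identical across services while each task runs its own component.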

```yaml
chunk_store_config:
  chunk_cache_config:
    redis:
      endpoint: "{{ .Env.REDIS_ENDPOINT }}:6379"
      expiration: 6h
```

On container startup, an entrypoint script runs gomplate to render the config file with values from AWS Parameter Store (injected into the task as environment variables), then starts Loki or Tempo as the primary container process. We add tini to the image to run as PID 1, reap zombie processes, and forward signals such as SIGTERM to the application. (SIGKILL cannot be trapped or forwarded; it always terminates the process immediately.) We use this same pattern in both our Loki and Tempo images.

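```sh
#!/bin/sh
set -e

# Render the config template using values from the task's environment
/etc/loki/gomplate -f /etc/loki/loki.tmpl -o /etc/loki/loki-config.yml --chmod 640

# exec replaces the shell, so Loki receives the signals that tini forwards
exec loki -config.file /etc/loki/loki-config.yml
```

Service discovery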

When Loki and Tempo run as a distributed system, they must coordinate data and activities among their peers. The underlying Cortex library supports several ways of doing this, such as etcd, Consul, or a gossip-based memberlist.

In Kubernetes, the memberlist typically finds its peers through a headless Service, but on Fargate you need to bring in a few additional pieces. ECS Service Discovery (backed by AWS Cloud Map) provides a similar mechanism for a Fargate deployment: it associates an ECS service with a Route 53 A record (or an SRV record, if you want the port discoverable) and automatically registers and deregisters tasks as they start and stop.

First, create a private DNS namespace for service discovery, then a discovery service for each Loki or Tempo component. Finally, each Fargate (ECS) service registers with its discovery service through a `service_registries` block. Below is a sample Terraform snippet of the namespace and the ingester's discovery service. When complete, the memberlist configuration references a Cloud Map DNS record that contains the IPv4 addresses of all the registered Fargate tasks.

```hcl
resource "aws_service_discovery_private_dns_namespace" "this" {
  name = "ecs.yourdomain.com"
  vpc  = data.aws_vpc.this.id
}

resource "aws_service_discovery_service" "ingester" {
  name = "ingester"

  dns_config {
    namespace_id   = aws_service_discovery_private_dns_namespace.this.id
    routing_policy = "MULTIVALUE"

    dns_records {
      ttl  = 5
      type = "A"
    }
  }

  health_check_custom_config {
    failure_threshold = 5
  }
}
```

It is important to note that several Loki and Tempo services inspect the container's network interfaces to discover their own IP address and register it with the memberlist. The Cortex libraries perform this lookup and by default look for the `eth0` device. Fargate platform version 1.4.0 (now selected as the default “latest” in AWS) uses `eth0` as an internal AWS device and has moved the container's external network interface to `eth1`. As a result, containers join the memberlist but are completely unable to communicate with each other, because the registered address is the internal container IP rather than the task's VPC address. If you see memberlist entries that don't match your VPC subnet CIDRs, you've hit this problem! The solution is to set the preferred network device in the Loki or Tempo configuration when running on Fargate 1.4.0.
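If you want to confirm the symptom before changing anything, you can compare the addresses your memberlist (or ingester ring) reports against your VPC CIDR. A small POSIX shell sketch of that check; `ip_to_int` and `ip_in_cidr` are hypothetical helpers, the code assumes well-formed dotted-quad IPv4 input and 64-bit shell arithmetic (as in bash or dash on 64-bit systems), and `10.0.0.0/16` stands in for your VPC's CIDR block.

```sh
# Hypothetical helper: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  a=${1%%.*}; rest=${1#*.}
  b=${rest%%.*}; rest=${rest#*.}
  c=${rest%%.*}; d=${rest#*.}
  echo $(( ($a << 24) + ($b << 16) + ($c << 8) + $d ))
}

# Hypothetical helper: succeed if IPv4 address $1 lies within CIDR block $2.
ip_in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  # Network mask: the top $bits bits set, e.g. /16 -> 0xFFFF0000
  mask=$(( 0xFFFFFFFF ^ ((1 << (32 - $bits)) - 1) ))
  [ $(( $ip & $mask )) -eq $(( $net & $mask )) ]
}
```

For example, `ip_in_cidr 10.0.3.17 10.0.0.0/16 || echo "outside the VPC: 10.0.3.17"` prints nothing for a correctly registered task; addresses that fail the check point to the `eth0` problem that the `interface_names` setting addresses.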

```yaml
ingester:
  lifecycler:
    # For Fargate platform version 1.4.0 use eth1; use the default on other platforms
    interface_names: ["eth1"]
```

API ingress

Once Loki or Tempo is up and running, you'll need a way to route traffic to the right services so that Grafana, Promtail, or the Grafana Agent can reach the microservice backends. This requires distributing traffic by path, so we turn to the AWS Application Load Balancer. The configuration differs slightly for Loki and Tempo because their APIs differ; refer to each project's API documentation for the details. Below is the Loki routing configuration that lets Grafana use the distributed query-frontend and querier services from a single entry point.

```hcl
resource "aws_alb_listener_rule" "query_frontend" {
  listener_arn = aws_alb_listener.https.arn

  action {
    type             = "forward"
    target_group_arn = aws_alb_target_group.query_frontend.arn
  }

  condition {
    path_pattern {
      values = [
        "/loki/api/v1/query",
        "/loki/api/v1/query_range",
        "/loki/api/v1/label*",
        "/loki/api/v1/series"
      ]
    }
  }
}

resource "aws_alb_listener_rule" "querier_tail_websocket" {
  listener_arn = aws_alb_listener.https.arn

  action {
    type             = "forward"
    target_group_arn = aws_alb_target_group.querier.arn
  }

  condition {
    path_pattern {
      values = [
        "/loki/api/v1/tail"
      ]
    }
  }
}
```

Startup and graceful shutdown

If you need to modify the official Loki or Tempo images to run initialization and configuration scripts, you also need to make sure signals are handled correctly. As mentioned earlier, tini is a great lightweight option for this, but you could also write your own SIGTERM handler in a script if that suits your organization better.

```sh
#!/bin/sh
pid=0

# On SIGTERM, forward the signal to the application and wait for it to exit
sigterm_handler() {
  if [ "$pid" -ne 0 ]; then
    kill -TERM "$pid"
    wait "$pid"
  fi
  exit 143 # 128 + 15 (SIGTERM)
}

trap 'sigterm_handler' TERM

# Add your tasks here; start the main process in the background, for example:
loki -config.file /etc/loki/loki-config.yml &
pid="$!"
echo "PID=$pid"

# Block until the application exits; a trapped SIGTERM interrupts this wait
wait "$pid"
```

Graceful shutdown is a particular concern for the Loki and Tempo ingesters, which may hold unfilled chunks that haven't yet been flushed to the backend. Depending on your log and trace volume and your configuration, these chunks could sit in an ingester's memory for an hour or longer. In a pure Fargate deployment without persistent storage, any chunks not flushed before the task stops are lost.

A Kubernetes ingester can use persistent volume claims to recover data across ingester restarts or shutdowns without loss. On Fargate, trapping and forwarding the SIGTERM is the first step in ensuring the ingesters have enough time to flush chunks to storage. The second is increasing the `stopTimeout` parameter in the ECS container definition. The default is 30 seconds, and the maximum AWS permits on Fargate is 120 seconds, after which Fargate sends a final SIGKILL to terminate the task.

Recent changes to the Loki and Tempo service APIs also include a flush endpoint that forces the ingesters to commit chunks to the backing storage (AWS S3 in our case) and shut down the ingester. We strongly recommend using this endpoint as part of your init system or automation to prevent data loss.
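One way to act on this is to call the flush endpoint from the SIGTERM handler before forwarding the signal, with a timeout that fits inside the task's `stopTimeout`. The sketch below assumes Loki 2.x's `POST /ingester/flush_shutdown` endpoint on HTTP port 3100 and that `curl` is installed in the image; `flush_ingester`, `FLUSH_URL`, and `FLUSH_TIMEOUT` are hypothetical names. Endpoint paths differ between Loki releases and in Tempo, so check the API documentation for the version you run.

```sh
# Hypothetical helper: ask the local Loki ingester to flush its chunks and shut down.
# Assumptions: Loki 2.x POST /ingester/flush_shutdown on port 3100, curl in the image.
flush_ingester() {
  url="${FLUSH_URL:-http://127.0.0.1:3100/ingester/flush_shutdown}"
  # Bound the request so it finishes inside stopTimeout (assumed raised to 120 s)
  timeout="${FLUSH_TIMEOUT:-90}"
  if curl --silent --show-error --fail --max-time "$timeout" -X POST "$url"; then
    echo "flush requested: $url"
  else
    echo "flush request failed: $url" >&2
    return 1
  fi
}
```

Inside `sigterm_handler`, calling `flush_ingester || true` before `kill -TERM "$pid"` requests the flush first while ensuring a failed request never blocks the rest of the shutdown.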

Conclusion

At Seniorlink, we have been using Loki in production with AWS Fargate for over a year, and began deploying Tempo about four months ago while integrating OpenTelemetry tracing into our applications. Our Loki services currently ingest around 1,000 lines per second, and Tempo ingests an average of 250 spans and 50 traces per second from the current set of instrumented applications. Adoption of Grafana, Loki, and Tempo has enabled a single unified view into application behavior, and reduced analysis time for application troubleshooting.