How I fell in love with logs thanks to Grafana Loki

As part of my job as a Senior Solutions Engineer here at Grafana Labs, I can usually find my way out of technical trouble pretty easily. However, I recently had some Wi-Fi issues at home that needed troubleshooting. The experience changed my whole opinion of logs, and I want to share my story in hopes of opening some other people’s eyes as well. (I originally posted a version of this story on my personal blog in January.)

First, some background info:

I’ve always been a metrics person. I love charts and graphs through and through. My limited exposure to logging was mostly back in my enterprise IT days grepping through a fair share of application logs across hundreds of endpoints. Do you remember the days of creating shared NAS exports and just writing out logs until they filled up the filesystems? (Yeah, me neither . . . ahem . . .)

My home computing footprint is filled with 10Gb switches, multiple wireless access points, trays of render-farm servers, and terabytes of storage. I was having a hard time figuring out some new issues with my network, and it was difficult to see what my gear was doing all the time.

My challenge was to sort out why my wireless devices were having intermittent connection problems and which (if any) of my wireless access points were having the most issues. But all I had to work with was syslog, a standard network-based logging protocol that devices use to send messages and log events. Metrics weren’t going to save me. I needed a way to get at all of those messages!

An Internet search for “Syslog Collector” turned up 342,000 results. Most of the attention-grabbing “6 Free Syslog Servers” links led to Windows utilities, and each of those was limited to just a few hosts at a time. The problem? I needed to collect data from more than a dozen systems, and I run Linux and macOS. I was looking for something easy, and I knew some open source goodness might fit the bill.

This now becomes a tale of how I came to love logs. And Loki. <3

My initial exposure to Grafana Loki came during my first days here at Grafana Labs. Loki is an amazing solution when you want to discover and consume logs alongside Prometheus and Kubernetes for microservices, and it provides a great file and application endpoint logging aggregation system.

When I was learning to utilize Loki in some of its core use cases, I didn’t immediately make the connection that I could also use it to capture standalone network logs. However, once I understood that, I jumped right in with a new deployment of Loki.

Even though Loki’s roots come from Prometheus and Kubernetes, my goal was to build out a quick-start standalone syslog ingester. Discovering just how easy Loki was to deploy as a single binary, either via the command line or in Docker, meant I was able to get started on my project right away. After deploying Promtail (which ingests logs into Loki) I felt I was close to unlocking the mysteries of my network with just a few minutes of effort.

A look through Loki’s documentation on configuring Promtail with syslog made me realize that Promtail by itself only works with IETF syslog (RFC 5424). That is also how I found out that my devices were limited to the older BSD syslog format (RFC 3164). Thankfully, I also discovered how best to solve my syslog dilemma: syslog-ng.

What’s useful about syslog-ng in my situation is that it can listen for RFC 3164 messages on UDP port 514 and forward them to Promtail as RFC 5424 over TCP port 1514. Since most of my devices only output the older style of syslog, that relay was exactly what I needed. A few quick changes to the default configurations were all it took to get syslog-ng and Promtail happily talking to each other.
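To make that relay concrete, here is a minimal Python sketch of the translation. It assumes a single well-formed RFC 3164 line and is not how syslog-ng works internally. The function names are hypothetical. Because RFC 3164 timestamps carry no year or time zone, the caller supplies the year and the sketch assumes UTC. The octet_frame helper shows RFC 6587 octet-counting framing (“LEN MSG”), one of the framings used for syslog over TCP.

```python
import re
from datetime import datetime, timezone

# <PRI>Mmm dd hh:mm:ss HOSTNAME TAG[PID]: MSG   (the [PID] part is optional)
RFC3164 = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<ts>[A-Z][a-z]{2} [ \d]\d \d{2}:\d{2}:\d{2}) "
    r"(?P<host>\S+) "
    r"(?P<tag>[^:\[\s]+)(?:\[(?P<pid>\d+)\])?: ?"
    r"(?P<msg>.*)$"
)


def rfc3164_to_rfc5424(line: str, year: int) -> str:
    """Re-emit one BSD syslog line in IETF syslog form (simplified sketch)."""
    m = RFC3164.match(line)
    if m is None:
        raise ValueError(f"not an RFC 3164 line: {line!r}")
    # Assumption: RFC 3164 has no year or zone, so use the given year and UTC.
    ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
    stamp = ts.replace(tzinfo=timezone.utc).isoformat()
    pid = m["pid"] or "-"  # "-" is the RFC 5424 nil value
    # VERSION is 1; MSGID and STRUCTURED-DATA are left nil ("-").
    return f"<{m['pri']}>1 {stamp} {m['host']} {m['tag']} {pid} - - {m['msg']}"


def octet_frame(msg: str) -> bytes:
    """RFC 6587 octet counting: byte length, a space, then the message."""
    body = msg.encode("utf-8")
    return str(len(body)).encode("ascii") + b" " + body
```

Take the sample line from RFC 3164 itself, `<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8`. With `year=2021`, the function returns `<34>1 2021-10-11T22:14:15+00:00 mymachine su - - - 'su root' failed for lonvick on /dev/pts/8`.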

syslog-ng configuration

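```
# syslog-ng.conf

# syslog-ng's own internal messages
source s_local {
    internal();
};

# default-network-drivers() listens on UDP and TCP port 514 (RFC 3164)
# and on TCP port 601 (RFC 5424)
source s_network {
    default-network-drivers();
};

# Forward everything to Promtail as RFC 5424 over TCP port 1514
destination d_loki {
    syslog("promtail" transport("tcp") port("1514"));
};

log {
    source(s_local);
    source(s_network);
    destination(d_loki);
};
```

Promtail configuration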

```yaml
# promtail-config.yml
server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://loki:3100/loki/api/v1/push

scrape_configs:
  - job_name: syslog
    syslog:
      # Receives the RFC 5424 messages forwarded by syslog-ng
      listen_address: 0.0.0.0:1514
      idle_timeout: 60s
      label_structured_data: yes
      labels:
        job: "syslog"
    relabel_configs:
      # Turn the sender's syslog hostname into an indexed "host" label
      - source_labels: ['__syslog_message_hostname']
        target_label: 'host'
```

The relabeling in Promtail takes the hostname of the device sending messages into syslog-ng and turns it into a host label for Loki to index. Within a few minutes, I had all of my hosts streaming syslog from my network into Loki, and it was explorable within Grafana!
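The relabel step can be sketched in a few lines of Python. This is a simplified illustration, not Promtail’s actual parser, which is written in Go. The sketch splits the RFC 5424 header on spaces and treats the HOSTNAME field as the __syslog_message_hostname value. It then copies that value into a host label alongside the static job label. The relabel function name is hypothetical.

```python
from typing import Dict


def relabel(message: str, static_labels: Dict[str, str]) -> Dict[str, str]:
    """Build Loki stream labels for one RFC 5424 message (simplified sketch)."""
    # RFC 5424: <PRI>VERSION SP TIMESTAMP SP HOSTNAME SP APP-NAME SP PROCID SP MSGID SP SD [SP MSG]
    fields = message.split(" ", 6)
    if len(fields) < 7 or not fields[0].startswith("<"):
        raise ValueError("not an RFC 5424 message")
    # Stand-in for the internal double-underscore label; labels with a "__"
    # prefix are not kept on the final stream, so only relabeled values survive.
    internal = {"__syslog_message_hostname": fields[2]}
    labels = dict(static_labels)  # e.g. {"job": "syslog"} from the 'labels' block
    # relabel_configs: copy __syslog_message_hostname into 'host'.
    # In this sketch, a nil hostname ("-") simply produces no host label.
    hostname = internal["__syslog_message_hostname"]
    if hostname != "-":
        labels["host"] = hostname
    return labels
```

Each distinct label set becomes its own Loki stream. Every device therefore ends up as a separate {job="syslog", host="<device>"} stream that you can select in Grafana.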

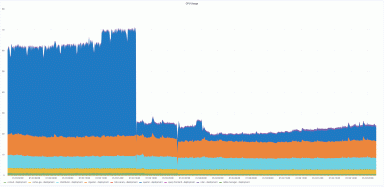

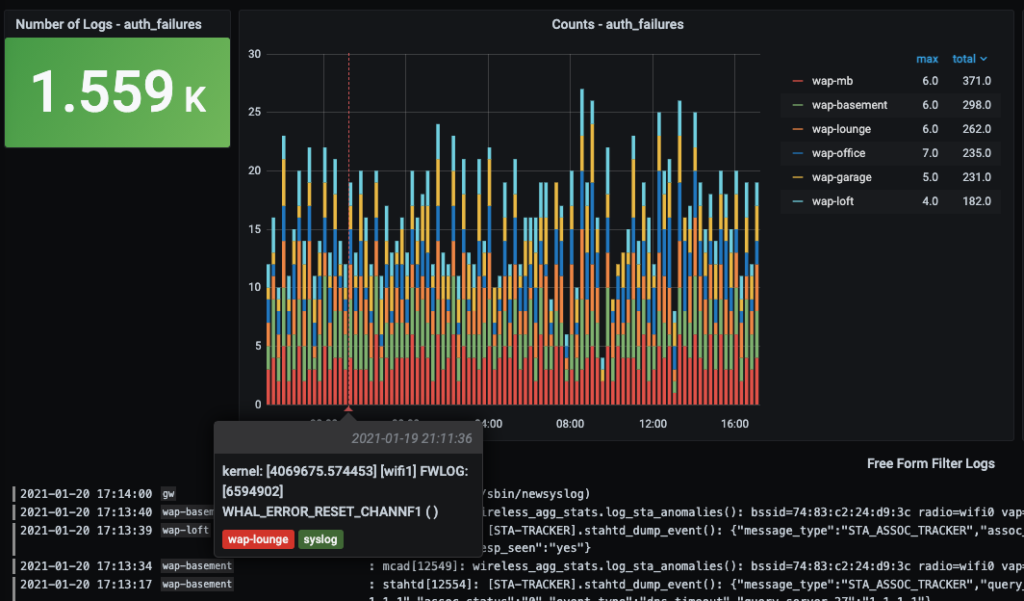

An example dashboard showing Loki-ingested syslog messages forwarded from syslog-ng.

A dashboard of my dreams

Around the same time as all of my network troubleshooting, Ward Bekker, one of Grafana Labs’ Lead Solutions Engineers, gave our team a presentation on the upcoming Loki 2.0 release. He showed us some of the new dashboarding examples and, without hesitation, said to me, “Dave, look how easy it is to turn logs into metrics.” He had my attention!

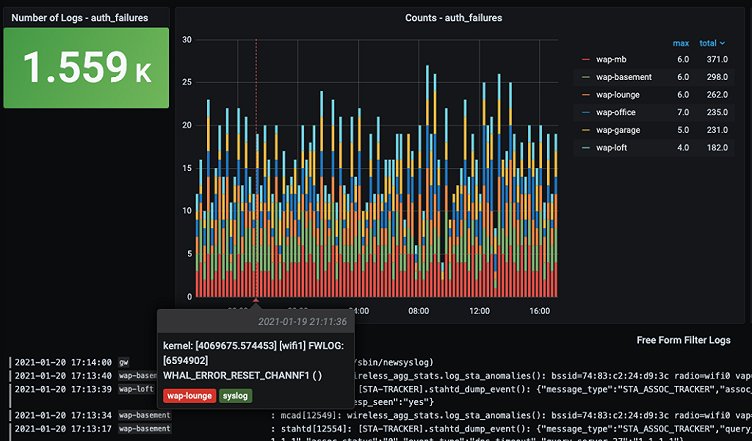

I went back to building out my dashboard, took all of my (now easily gathered) device logs, and applied the “logs to metrics” magic newly released in Loki. The result was summary counts over time, grouped by wireless access point!

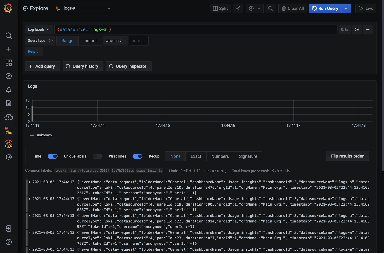

This was the first LogQL query of my logs-to-metrics journey:

```
count_over_time({host=~"$hostname", job="syslog"} |= "$filter" [$__interval])
```

This query counts log lines over time for the hosts selected by my Grafana variable $hostname, coming from my Promtail syslog job (job="syslog"). It keeps only the lines that contain the free-text search string in my Grafana variable $filter (in this example, auth_failures).
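For readers who think in code, here is a rough in-memory Python analogue of that query. It rests on a few assumptions. Entries are hypothetical (timestamp, labels, line) tuples with integer-second timestamps. Each evaluation step equals the range ($__interval), so windows don’t overlap, and each window is (t - range, t]. Loki actually evaluates this server-side. The sketch only illustrates the stream selector, the |= line filter, and counting per window.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

# (unix_seconds, labels, log line): a hypothetical in-memory stand-in for Loki.
Entry = Tuple[int, Dict[str, str], str]


def count_over_time(entries: Iterable[Entry], host_regex: str, line_filter: str,
                    start: int, end: int, interval: int) -> Dict[str, List[int]]:
    """Count matching lines per host in each window (t - interval, t]."""
    if interval <= 0:
        raise ValueError("interval must be positive")
    steps = list(range(start, end + 1, interval))  # evaluation timestamps
    host_re = re.compile(host_regex)
    counts: Dict[str, List[int]] = defaultdict(lambda: [0] * len(steps))
    for ts, labels, line in entries:
        # Stream selector {host=~"...", job="syslog"}; LogQL regex matchers are anchored.
        if labels.get("job") != "syslog":
            continue
        host = labels.get("host", "")
        if not host_re.fullmatch(host):
            continue
        # Line filter |= "...": keep lines containing the substring (empty keeps all).
        if line_filter not in line:
            continue
        for i, t in enumerate(steps):
            if t - interval < ts <= t:
                counts[host][i] += 1
                break  # windows don't overlap when step == range
    # With job fixed, one series per host mirrors one series per stream here.
    return dict(counts)
```

Swapping the regex and filter arguments plays the same role as changing $hostname and $filter in the Grafana dashboard.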

With a bit more dashboard tweaking, I was able to visualize other types of syslog messages from some additional network devices, such as my network gateway, my server IPMI stats, and NAS details. I could now scroll back through my log history, which up until that point was invisible to me. I also had a way of visually understanding the frequency and type of messages being collected, as well as an easy way to free-text filter all of my logs. Truly, logs AND metrics!

Bringing together Grafana, Loki, and Syslog into an all-in-one project

How I got started with Loki and kicked off my logging journey is pretty simple, and I believe it represents how quick and easy it is to connect open source solutions to solve immediate problems, even in a home lab situation.

I wanted to share the configurations so other log-averse people could see the light, so I created an “all-in-one” docker-compose project that I call Loki Syslog AIO.

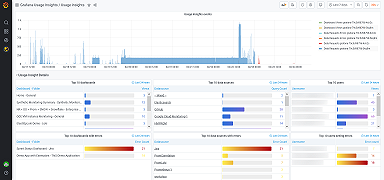

The example project lets you run all of the services mentioned above with docker-compose on a Linux server. Point your network devices at hostname:514, log into Grafana at hostname:3000, and you’ll be presented with the “Loki Syslog AIO – Overview” dashboard.

For those of you who want to see some of the behind-the-scenes details, I’ve included some prebuilt performance overview dashboards for each of the main services (Grafana, Loki, MinIO, Docker, and host metrics). You’ll see dropdown links to the “Performance Overview” dashboards at the top of the Loki Syslog AIO – Overview dashboard, including links to get you back to the starting dashboard. If you don’t have syslog devices immediately available but want to try the dashboard out, I also built an optional syslog generator container.
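If you just want to poke at the stack from a script, a hypothetical Python test sender like the one below can send RFC 3164 lines to syslog-ng over UDP. It is not the generator container from the project. The facility, severity, host name, and tag are placeholders you would choose yourself. Month names come from %b, which assumes Python’s default C locale so they come out in English.

```python
import socket
import time
from typing import Optional


def format_rfc3164(facility: int, severity: int, hostname: str, tag: str,
                   msg: str, when: Optional[time.struct_time] = None) -> bytes:
    """Build one RFC 3164 line: <PRI>Mmm dd hh:mm:ss HOSTNAME TAG: MSG."""
    if not (0 <= facility <= 23 and 0 <= severity <= 7):
        raise ValueError("facility must be 0-23 and severity 0-7")
    pri = facility * 8 + severity  # PRI = facility * 8 + severity
    t = when if when is not None else time.localtime()  # RFC 3164 uses local time
    # Day of month is space-padded to two characters, e.g. "Jan  5".
    stamp = f"{time.strftime('%b', t)} {t.tm_mday:2d} {time.strftime('%H:%M:%S', t)}"
    return f"<{pri}>{stamp} {hostname} {tag}: {msg}".encode("utf-8")


def send(payload: bytes, host: str, port: int = 514) -> None:
    """Fire-and-forget UDP send, matching the RFC 3164-over-UDP setup above."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

For example, call `send(format_rfc3164(1, 5, "test-host", "loki-test", "hello from Python"), "your-server")`, where your-server is a placeholder for the machine running docker-compose. The line should then be queryable in Grafana under job="syslog".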

For more setup details and downloads, check out my Grafana Loki Syslog AIO GitHub repository. My Loki Dashboard example is also available in Grafana’s Community Dashboards.

And in case you’re wondering if Loki helped me figure out what was causing the dropped connections on my home lab servers, the answer is yes! They were related to high DHCP retries and too-aggressive settings on my minimum data rate controls. Thanks, Loki!

The easiest way to get started with Grafana, Prometheus, Loki, and Tempo for tracing is Grafana Cloud, and we’ve recently added a new free plan and upgraded our paid plans. If you’re not already using Grafana Cloud, sign up today for free and see which plan meets your use case.