The essential config settings you should use so you won’t drop logs in Loki

In this post, we’re going to talk about tips for securing the reliability of Loki’s write path (where Loki ingests logs). More succinctly, how can Loki ensure we don’t lose logs? This is a common starting point for those who have tried out the single binary Loki deployment and decided to build a more production-ready deployment. Now, let’s look at the two tools Loki uses to prevent log loss.

Replication factor

Loki stores multiple copies of logs in the ingester component, based on a configurable replication factor, generally 3. Loki will only accept a write if a quorum (replication_factor / 2 + 1) accepts it (2 in this case). This means that generally, we could lose up to 2 ingesters without seeing data loss. Note, in the worst case we could only lose 1 ingester without suffering data loss if only 2 of the 3 replica writes succeeded. Let’s look at a few components involved in this process.

Distributor

The distributor is responsible for receiving writes, validating them, and preprocessing them. It’s a stateless service that can be scaled to handle these initial steps in order to minimize the ingesters workload. It also uses the ring to figure out which replication_factor ingesters to forward data to.

Ring

The ring is a subcomponent that handles coordinating responsibility between ingesters. It can be backed by an external service like consul or etcd, or via a gossip protocol (memberlist) between the ingesters themselves. We won’t be covering ring details in this post, but it creates hash ring using consistent hashing to help schedule log streams onto a replication_factor number of ingesters.

ring

/ ^

/ \

/ \

v v

distributor -> ingester -> storageIngester

The ingesters receive writes from the distributors, buffer them in memory, and eventually flush compressed “chunks” of logs to object storage. This is the most important component, as it holds logs that have been acknowledged but not yet flushed to a durable object storage. By buffering logs in memory before flushing them, Loki de-amplifies writes to our object storage, gaining both performance and cost reduction benefits.

Essential configuration settings

Here are a few configuration settings in the ingester_config that are important to maintain Loki’s uptime and durability guarantees.

replication_factorThe replication factor, which has already been mentioned, is how many copies of the log data Loki should make to prevent losing it before flushing to storage. We suggest setting this to3.heartbeat_timeoutThe heartbeat timeout is the period after which an ingester will be skipped over for incoming writes. An important consequence is that this also ends up being the maximum time that the ring can be unresponsive before all ingesters are skipped over for writes, essentially halting cluster-wide ingestion. We suggest setting this to10m, which is longer than the ring is expected to possibly be unresponsive (i.e., a consul could be killed and revived well within this time).

Now, let’s look at a few less essential, but nice to have, configs:

chunk_target_sizeThe chunk target size is the ideal size a “chunk” of logs will reach before it is flushed to storage. We suggest setting this to 1.5MB (1572864bytes), which is a good size for querying fully utilized chunks.chunk_encodingThe chunk encoding determines which compression algorithm is used for our chunks in storage.gzipis the default and has the best compression ratio, but we suggestsnappyfor its faster decompression rate, which results in better query speeds.max_chunk_ageThe max chunk age is the maximum time a log stream (set of logs with the same label names and label values) can be buffered by an ingester in memory before being flushed. This threshold is generally hit for low-throughput log streams. In order to find a good balance between not having chunks cover too long of a time range and not creating too many small chunks, we suggest setting this to2h.chunk_idle_periodThe chunk idle period is the maximum time Loki will wait for a log entry before considering a stream to be idle, or stale, and therefore the chunk to be flushed. Setting this too low on slowly written log streams results in too many small chunks being flushed; setting this too high might result in streams that have churned for cardinality reasons to consume memory longer than necessary. We suggest setting this in the1hto2hrange. It can be the same asmax_chunk_age, but setting it longer means streams will always flush at themax_chunk_agesetting and will make some metrics less accurate.

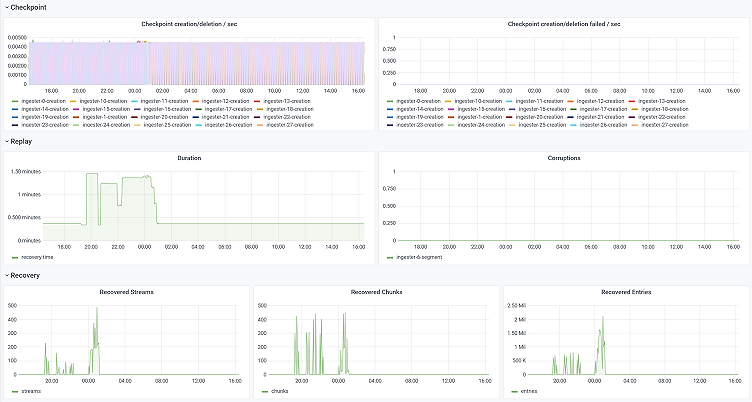

WAL

The second technique we’ll talk about is the Write Ahead Log (WAL). This is a new feature, available starting in the 2.2 release, which helps ensure Loki doesn’t drop logs by writing all incoming data to disk before acknowledging the write. If an ingester dies for some reason, it will replay this log upon startup, safely ensuring all the data it previously had in memory has been recovered.

Pro tip: When using the WAL, we suggest giving it an isolated disk if possible. Doing so can help prevent the WAL from being adversely affected by unrelated disk pressure, noisy neighbors, etc. Also make sure the disk write performance can handle the rate of incoming data into an ingester. Loki can be scaled horizontally to reduce this pressure.

Essential configuration settings

Here are a few WAL configurations we suggest that reside in a subsection of the ingester_config:

enabledWe suggest enabling the WAL as a second layer of protection against data loss.dirWe suggest specifying a directory on an isolated disk for the WAL to use.replay_memory_ceilingIt’s possible that after an outage scenario, a WAL is larger than the available memory of an ingester. Fortunately, the WAL implements a form of backpressure, allowing large replays even when memory is constrained. Thisreplay_memory_ceilingconfig is the threshold at which the WAL will pause replaying and signal the ingester to flush its data before continuing. Because this is a less efficient operation, we suggest setting this threshold to a high, but reasonable, bound, or about75%of the ingester’s normal memory limits. For our deployments, this is often around10GB.

Parting notes

That wraps up our short guide to ensuring that Loki doesn’t lose any logs. We find that between the combined assurances of the WAL and our replication_factor, we can safely say that no logs will be left behind.

The easiest way to get started with Grafana, Prometheus, Loki, and Tempo for tracing is Grafana Cloud, and we’ve recently added a new free plan and upgraded our paid plans. If you’re not already using Grafana Cloud, sign up today for free and see which plan meets your use case.