How to use LogQL range aggregations in Loki

In my ongoing Loki how-to series, I have already shared my best tips for creating fast filter queries that can filter terabytes of data in seconds, as well as how to escape special characters.

In this blog post, we’ll cover how to use metric queries in Loki to aggregate log data over time.

Existing operations

In Loki, there are two types of aggregation operations.

The first type uses log entries as a whole to compute values. Supported functions for operating over log ranges are:

- rate(log-range): calculates the number of entries per second
- count_over_time(log-range): counts the entries for each log stream within the given range
- bytes_rate(log-range): calculates the number of bytes per second for each stream
- bytes_over_time(log-range): counts the number of bytes used by each log stream within the given range
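To make these four definitions concrete, here is a minimal Python sketch of what each function computes for a single log stream over one range window. It is a simplified model, not Loki's implementation: it assumes a half-open window `(end - range, end]`, evaluates one point in time instead of every query step, and counts bytes as the UTF-8 length of each line. The `LogEntry` type and the sample stream are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LogEntry:
    timestamp: float  # seconds since the epoch
    line: str


def entries_in_range(entries, end, range_seconds):
    """Entries inside the (assumed) half-open window (end - range_seconds, end]."""
    start = end - range_seconds
    return [e for e in entries if start < e.timestamp <= end]


def count_over_time(entries, end, range_seconds):
    # Number of log entries in the window.
    return len(entries_in_range(entries, end, range_seconds))


def rate(entries, end, range_seconds):
    # Entries per second: the count divided by the window length.
    return count_over_time(entries, end, range_seconds) / range_seconds


def bytes_over_time(entries, end, range_seconds):
    # Total size of the log lines in the window, in bytes.
    return sum(len(e.line.encode("utf-8")) for e in entries_in_range(entries, end, range_seconds))


def bytes_rate(entries, end, range_seconds):
    # Bytes per second: the byte total divided by the window length.
    return bytes_over_time(entries, end, range_seconds) / range_seconds


stream = [
    LogEntry(1.0, "error: disk full"),
    LogEntry(30.0, "ok"),
    LogEntry(59.0, "error: timeout"),
]
print(count_over_time(stream, end=60.0, range_seconds=60))  # 3
print(rate(stream, end=60.0, range_seconds=60))             # 0.05
print(bytes_over_time(stream, end=60.0, range_seconds=60))  # 32
```

Both rate-style functions are their `_over_time` counterparts divided by the range length, which is why a `[1m]` range yields per-second values.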

Example:

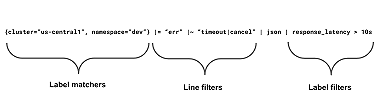

```logql
sum by (host) (rate({job="mysql"} |= "error" != "timeout" | json | duration > 10s [1m]))
```

The second type is unwrapped ranges, which use extracted labels as sample values instead of log lines.

To select which label is used as the sample value in the aggregation, the log query must end with an unwrap expression, optionally followed by a label filter expression to discard errors.
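The unwrap step can be pictured as turning each log line into a numeric sample. The Python sketch below approximates `| json | unwrap <label>` followed by an `__error__` filter for flat JSON lines. It reads one top-level key, converts it to a float, and drops any line that fails to parse or convert. The function name `unwrap` and the sample lines are hypothetical. Loki's own parser also flattens nested keys, and it records failures in the `__error__` label instead of silently skipping them.

```python
import json


def unwrap(lines, label):
    """Turn JSON log lines into float samples taken from `label`.

    Lines that are not JSON objects, lack the label, or hold a value that
    cannot be converted to a float are dropped, approximating a
    `| __error__ = ""` filter.
    """
    samples = []
    for line in lines:
        try:
            value = float(json.loads(line)[label])
        except (ValueError, KeyError, TypeError):
            # json.JSONDecodeError is a subclass of ValueError.
            continue
        samples.append(value)
    return samples


lines = [
    '{"request_time": "0.25"}',  # numeric string: converted
    '{"request_time": "oops"}',  # not a number: dropped
    "not json",                  # parse error: dropped
    '{"path": "/"}',             # label missing: dropped
    '{"request_time": 1.5}',     # JSON number: converted
]
print(unwrap(lines, "request_time"))  # [0.25, 1.5]
```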

Supported functions for operating over unwrapped ranges are:

- rate(unwrapped-range): calculates the per-second rate of the sum of all values in the specified interval
- sum_over_time(unwrapped-range): the sum of all values in the specified interval
- avg_over_time(unwrapped-range): the average value of all points in the specified interval
- max_over_time(unwrapped-range): the maximum value of all points in the specified interval
- min_over_time(unwrapped-range): the minimum value of all points in the specified interval
- stdvar_over_time(unwrapped-range): the population variance of the values in the specified interval
- stddev_over_time(unwrapped-range): the population standard deviation of the values in the specified interval
- quantile_over_time(scalar,unwrapped-range): the φ-quantile (0 ≤ φ ≤ 1) of the values in the specified interval
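Once the samples in a window have been extracted, most of the `*_over_time` functions in this list are ordinary statistics over those numbers. Here is a minimal Python sketch for one series and one window, using the standard `statistics` module. `stdvar_over_time` and `stddev_over_time` are population measures that divide by N, so the sketch uses `pvariance` and `pstdev` rather than `variance` and `stdev`. The sample values are made up.

```python
import statistics


def sum_over_time(values):
    return sum(values)


def avg_over_time(values):
    return statistics.fmean(values)


def max_over_time(values):
    return max(values)


def min_over_time(values):
    return min(values)


def stdvar_over_time(values):
    # Population variance: mean squared deviation, dividing by N (not N - 1).
    return statistics.pvariance(values)


def stddev_over_time(values):
    # Population standard deviation: square root of the population variance.
    return statistics.pstdev(values)


window = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # unwrapped samples in one [1m] window
print(sum_over_time(window))                         # 40.0
print(avg_over_time(window))                         # 5.0
print(min_over_time(window), max_over_time(window))  # 2.0 9.0
print(stdvar_over_time(window))                      # 4.0
print(stddev_over_time(window))                      # 2.0
```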

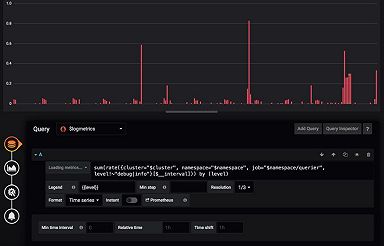

Example:

```logql
quantile_over_time(0.99,
  {cluster="ops-tools1",container="ingress-nginx"}
    | json
    | __error__ = ""
    | unwrap request_time [1m]) by (path)
```

Note that quantile_over_time, like its Prometheus counterpart, is not an estimation. All values in the range are sorted, and the 99th percentile is computed from them.
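Because the quantile is computed from every value rather than from a histogram or sketch, it can be reproduced exactly. The Python sketch below sorts a window's values and interpolates linearly between the two nearest ranks, which is how Prometheus's `quantile_over_time` works. The sketch assumes that Loki uses the same interpolation. The latency values are hypothetical.

```python
import math


def quantile_over_time(phi, values):
    """Exact phi-quantile of all values in one range window.

    Every value is sorted (no estimation); the result is interpolated
    linearly between the two closest ranks, Prometheus-style.
    """
    if not values:
        return math.nan
    if phi < 0:
        return -math.inf
    if phi > 1:
        return math.inf
    ordered = sorted(values)
    rank = phi * (len(ordered) - 1)
    lower = math.floor(rank)
    upper = min(len(ordered) - 1, lower + 1)
    weight = rank - lower
    return ordered[lower] * (1 - weight) + ordered[upper] * weight


request_times = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 10.0]
print(quantile_over_time(0.5, request_times))   # median, about 0.55
print(quantile_over_time(0.99, request_times))  # about 9.181, pulled up by the 10s outlier
```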

Rate for unwrapped expressions is new and very useful. For instance, if you log the number of bytes fetched on every request, rate over that unwrapped value gives you the throughput of your system.
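Here is a rough sketch of that throughput calculation. `rate` over an unwrapped range divides the sum of the unwrapped values in the window by the window length in seconds. The Python below assumes that each request is logged with the number of bytes it fetched, as in a hypothetical query like `sum(rate({app="fetcher"} | logfmt | unwrap bytes_fetched [1m]))`. The `app` label, the `bytes_fetched` field, and the half-open window boundary are all assumptions.

```python
def unwrapped_rate(samples, end, range_seconds):
    """Per-second rate of the sum of unwrapped values in (end - range_seconds, end].

    `samples` holds (timestamp_seconds, value) pairs, here the bytes fetched
    by each logged request.
    """
    start = end - range_seconds
    total = sum(value for ts, value in samples if start < ts <= end)
    return total / range_seconds


bytes_fetched = [
    (5.0, 1_200_000),
    (20.0, 2_400_000),
    (55.0, 2_400_000),
    (70.0, 9_999_999),  # outside the window ending at t=60, so ignored
]
print(unwrapped_rate(bytes_fetched, end=60.0, range_seconds=60))  # 100000.0 bytes/s
```

Unlike `rate` over a log range, which counts lines, this version sums the extracted values. Three requests in one minute therefore still produce a throughput in bytes per second.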

A word on grouping

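Unlike Prometheus, some of these range operations let you use grouping without a vector aggregation. This is the case for avg_over_time, max_over_time, min_over_time, stdvar_over_time, stddev_over_time, and quantile_over_time.

This is very useful for aggregating data along specific dimensions, and for some of these functions it would not be possible otherwise. Averaging per-stream averages, or combining per-stream quantiles, with a vector operation does not give the true average or quantile of the underlying values, so the grouping has to happen inside the range aggregation.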
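A small worked example shows why grouping inside the range aggregation matters for averages. The Python sketch below compares two approaches. The first pools the raw samples per cluster, which is what `avg_over_time(...) by (cluster)` computes. The second averages the per-stream averages, which is what `avg by (cluster) (avg_over_time(...))` would compute. The streams and latency values are hypothetical: one busy pod with fast responses and one idle pod with a single slow response.

```python
from collections import defaultdict
from statistics import fmean

# Hypothetical latency samples (seconds) in one [1m] window,
# keyed by each stream's (cluster, pod) labels.
samples = {
    ("us-east", "pod-a"): [0.1, 0.1, 0.1, 0.1],  # busy pod, fast responses
    ("us-east", "pod-b"): [0.9],                  # idle pod, one slow response
}
CLUSTER = 0  # position of the cluster label in each key


def grouped_avg(samples):
    """avg_over_time(...) by (cluster): pool the raw samples, then average."""
    pooled = defaultdict(list)
    for labels, values in samples.items():
        pooled[labels[CLUSTER]].extend(values)
    return {cluster: fmean(values) for cluster, values in pooled.items()}


def avg_of_stream_avgs(samples):
    """avg by (cluster) (avg_over_time(...)): average each stream, then average those."""
    per_stream = defaultdict(list)
    for labels, values in samples.items():
        per_stream[labels[CLUSTER]].append(fmean(values))
    return {cluster: fmean(avgs) for cluster, avgs in per_stream.items()}


print(grouped_avg(samples))         # us-east: about 0.26, the true mean of all 5 requests
print(avg_of_stream_avgs(samples))  # us-east: about 0.5, skewed by the idle pod
```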

For example, to get the average latency by cluster, you could use:

```logql
avg_over_time({container="ingress-nginx",service="hosted-grafana"} | json | unwrap response_latency_seconds | __error__="" [1m]) by (cluster)
```

For the other operations, you can simply use a sum by (...) vector aggregation, as you would in Prometheus, to reduce the label dimensions after the range aggregation.

For example, to group the rate of requests by status code:

```logql
sum by (response_status) (
  rate({container="ingress-nginx",service="hosted-grafana"} | json | __error__="" [1m])
)
```

You may also have noticed that extracted labels can be used for grouping. Parsing log data is a powerful way to add new dimensions to your metric queries.
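To illustrate grouping on an extracted label, the Python sketch below approximates `sum by (response_status) (rate(... | json | __error__="" [1m]))` for one window. It parses each JSON line and groups it by the value of `response_status` found in the line rather than on the stream. It drops lines that fail to parse, then divides each group's count by the range. The stream selectors and lines are hypothetical, and the sketch ignores Loki's per-step evaluation.

```python
import json
from collections import Counter


def rate_by_label(streams, label, range_seconds):
    """Per-second rate of log lines, summed by a label parsed from each line.

    `streams` maps a stream selector to the JSON lines it produced in one
    window. Lines that are not JSON objects are dropped, approximating
    `| __error__ = ""`; a missing label groups under the empty string.
    """
    counts = Counter()
    for lines in streams.values():
        for line in lines:
            try:
                parsed = json.loads(line)
            except json.JSONDecodeError:
                continue
            if not isinstance(parsed, dict):
                continue
            counts[str(parsed.get(label, ""))] += 1
    return {value: count / range_seconds for value, count in counts.items()}


streams = {
    '{pod="a"}': ['{"response_status": 200}', '{"response_status": 500}'],
    '{pod="b"}': ['{"response_status": 200}', "not json"],
}
# '200' gets 2/60 requests per second, '500' gets 1/60.
print(rate_by_label(streams, "response_status", 60))
```

The `pod` stream label disappears from the result, just as `sum by (response_status)` drops every label except the one it groups on.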

You should always use grouping when building metric queries that use the logfmt or json parsers. Those parsers can implicitly extract a large number of labels, and each distinct combination of label values becomes its own series. Grouping is the only way to reduce the number of resulting series.
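You can see the cost of skipping grouping by counting series. The Python sketch below treats every distinct combination of labels extracted from a flat JSON line as one output series. It then repeats the count, keeping only the grouping labels. The field names (`request_id`, `path`, `status`) are hypothetical. The sketch also ignores stream labels and Loki's flattening of nested JSON keys.

```python
import json


def series_count(lines, group_by=None):
    """Number of distinct label sets extracted from flat JSON log lines.

    With group_by=None every extracted label is kept, so each unique combination
    becomes its own series; otherwise only the labels in `group_by` are kept.
    """
    series = set()
    for line in lines:
        labels = json.loads(line)
        if group_by is not None:
            labels = {key: value for key, value in labels.items() if key in group_by}
        # Sort so that label order does not create spurious extra series.
        series.add(tuple(sorted((key, str(value)) for key, value in labels.items())))
    return len(series)


lines = [
    json.dumps({"request_id": i, "path": "/api" if i % 2 else "/login", "status": 200})
    for i in range(100)
]
print(series_count(lines))                     # 100: request_id makes every line unique
print(series_count(lines, group_by={"path"}))  # 2
```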

Conclusion

Range vector operations are great for counting log volume. When combined with LogQL parsers and unwrapped expressions, they let you extract a whole new set of metrics from your logs, giving you visibility into your system without even changing its code.

We haven’t covered LogQL parsers or unwrapped expressions in detail yet, but you should definitely take a look at our documentation to learn more about how to extract new labels and use them in metric queries.

And if you’re interested in trying Loki, you can install it yourself or get started in minutes with Grafana Cloud. We’ve just announced new free and paid Grafana Cloud plans to suit every use case. Sign up for free now.