All the non-technical advantages of Loki: reduce costs, streamline operations, build better teams

Hi, I’m Owen, one of the Loki maintainers, and I’m putting proverbial pen to paper to convince you that Loki is important. And this isn’t because it scales (it does) or because I work at Grafana Labs (I do). It’s because of the oft-overlooked and underrepresented organizational benefits.

Organizational benefits?! What is this, some sort of cult? Why are you avoiding the technicals?

Whoa, whoa, whoa. Now, hold on. The technicals are still valid. But they’re covered pretty well elsewhere, and I think we’ve given them enough air time.

I want to talk about the things that Loki does — or better yet, what it allows you to avoid doing. The things that I learned the hard way. The things that might make sense when you’re scaling people, teams, or projects instead of datasets.

This can be split into roughly two camps: cost and process, assuming cost to be monetary and process to be organizational.

How Loki helps the bottom line

First up is a brief primer on how Loki works, which should help frame the rest. Loki is a cost-effective, scalable, unopinionated log aggregator that is largely based on the Prometheus label paradigm and stitched together with Cortex internals to scale.

Loki ingests your logs and makes them searchable. You know, those text files containing amorphous manifestations of technical debt. The fragile, tentative storyline of your application. Things which the brevity of metrics could never express. The debug logs which seem useless when all is sunshine and rainbows but are worth their weight in gold during an outage.

In essence, Loki makes two choices from which everything else follows:

- First, it indexes only a small set of metadata instead of entire log lines.

- Second, it decouples its storage into a pair of pluggable backends: one for the index and one for compressed logs.

Why Loki only indexes the metadata

So, Loki only indexes metadata. How exactly does that make it more cost-effective to run and by how much?

With full-text indexing, it’s common for the indices themselves to end up larger than the data they’re indexing. And indices are costly to run because they require pricier hardware (generally RAM-hungry instances).

Loki doesn’t index the contents of logs at all, but rather only the metadata about where they came from (labels like app=api, environment=prod, machine_id=instance-123-abc).
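
To make the idea concrete, here is a minimal Python sketch of a label-only index. It assumes a simplified in-memory model: each stream is identified by its label set, and the index maps that set to the IDs of the chunks holding the stream's logs. The `LabelIndex` class and the chunk IDs are hypothetical. Loki's real index also tracks time ranges and lives in a pluggable store. The point is only that log line contents never enter the index.

```python
from collections import defaultdict


class LabelIndex:
    """Hypothetical label-only index: label set -> chunk IDs. Log text is never indexed."""

    def __init__(self):
        # Keys are frozensets of (label, value) pairs, so label order is irrelevant.
        self._streams = defaultdict(list)

    def add_chunk(self, labels, chunk_id):
        # Record that this chunk holds logs for the stream with these labels.
        self._streams[frozenset(labels.items())].append(chunk_id)

    def lookup(self, **selector):
        # Return chunk IDs for every stream whose labels include all selector pairs.
        wanted = set(selector.items())
        return [
            chunk_id
            for labels, chunk_ids in self._streams.items()
            if wanted <= labels
            for chunk_id in chunk_ids
        ]


index = LabelIndex()
index.add_chunk({"app": "api", "environment": "prod", "machine_id": "instance-123-abc"}, "chunk-1")
index.add_chunk({"app": "api", "environment": "dev"}, "chunk-2")
index.add_chunk({"app": "web", "environment": "prod"}, "chunk-3")

print(index.lookup(app="api"))                      # ['chunk-1', 'chunk-2']
print(index.lookup(app="api", environment="prod"))  # ['chunk-1']
```

A lookup only ever walks the small set of label combinations, no matter how many log lines each chunk holds.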

So instead of maintaining fleets of expensive instances to serve large, full-text indices, Loki only has to worry about a tiny fraction of the data. Anecdotally, the index is around four orders of magnitude smaller than the data (about 1/10,000th).
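
To put the anecdotal ratio in concrete terms, here is the arithmetic for a hypothetical 1 TB of logs, using decimal units. Real ratios vary with label choices.

```latex
\text{index size} \approx \frac{\text{log volume}}{10^{4}}
  = \frac{10^{12}\,\text{bytes}}{10^{4}}
  = 10^{8}\,\text{bytes}
  = 100\,\text{MB}
```

By contrast, a full-text index that ends up larger than the data it indexes would exceed the full 1 TB.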

So right off the bat, Loki minimizes what is generally the most operationally costly part of running indexed log aggregators.

Why Loki uses object storage for log storage

We’ve just covered the indexing decision Loki makes; now let’s look at how decoupled storage helps reduce cost. Loki does, after all, need to store the logs as well. It does this by sending them off in compressed chunks to a pluggable object store like AWS S3.

Compared to the expensive, memory-hungry instances we were talking about before, object stores are cheap as dirt, er, very cost-effective. The logs live there until requested. In essence, the tiny label index is used to route requests to the compressed logs in object storage, which are then decompressed and scanned in a highly parallel fashion across commodity hardware.
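
Here is a toy version of this read path. It assumes a Python dict stands in for the object store and a thread pool stands in for Loki's fleet of commodity queriers. `put_chunk`, `scan_chunk`, and `query` are hypothetical names. Loki's actual chunk format is more involved than a plain gzip blob.

```python
import gzip
from concurrent.futures import ThreadPoolExecutor

# Hypothetical stand-in for an object store such as S3: chunk ID -> compressed bytes.
object_store = {}


def put_chunk(chunk_id, lines):
    # Compress a batch of log lines into one chunk object and "upload" it.
    object_store[chunk_id] = gzip.compress("\n".join(lines).encode("utf-8"))


def scan_chunk(chunk_id, needle):
    # Fetch one chunk, decompress it, and keep only lines containing the needle.
    text = gzip.decompress(object_store[chunk_id]).decode("utf-8")
    return [line for line in text.split("\n") if needle in line]


def query(chunk_ids, needle, workers=4):
    # Scan the chunks the label index routed us to in parallel;
    # pool.map yields results in input order, so output order is deterministic.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        per_chunk = pool.map(lambda chunk_id: scan_chunk(chunk_id, needle), chunk_ids)
        return [line for lines in per_chunk for line in lines]


put_chunk("chunk-1", ["level=info msg=started", "level=error msg=timeout"])
put_chunk("chunk-2", ["level=error msg=refused", "level=info msg=ok"])
print(query(["chunk-1", "chunk-2"], "level=error"))
# ['level=error msg=timeout', 'level=error msg=refused']
```

Only the chunks selected by labels are fetched. Adding workers (or, in Loki's case, machines) speeds up the scan without touching the index.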

To help transition us towards the more process-oriented benefits, I want to note that cheap logging removes the perverse incentive to log less. Skipping those debug logs because they’re expensive to store and retrieve is an anti-pattern. When storage is cheap, we can avoid those hard decisions and ensure we have the resources we need when combating an outage.

How Loki reduces your operational headaches

Now that we’ve covered the dollar-denominated (or your non-alliterative currency of choice) reasons why our accountants like Loki, let’s get into the nitty-gritty reasons why our operations teams favor Loki, too.

Because Loki takes an unindexed approach to logging, it avoids relying on structured logging to drive operational insights into log data. This means no coordinating schema definitions with preprocessing tools, and none of the ensuing game of whack-a-mole when trying to change them across multiple applications or teams.

The issues of building ad-hoc pipeline tools and backwards-compatible migrations don’t really apply. However, it’s important to mention the trade-off of avoiding preprocessing: at query time, we must understand how to interact with the data meaningfully.

But this is a far better place to pay that cost! Query-time technical debt can be managed in any number of ways, paid down gradually over long periods, or never managed at all (and it is a major reason why we use logfmt: it stays readable and greppable at query time).
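
As a rough illustration of query-time interpretation, the sketch below parses logfmt lines only when a query runs, so no schema has to be agreed on at ingestion. It leans on Python's `shlex.split` to honor double-quoted values, which is only an approximation. Shell quoting rules differ from logfmt in edge cases; an unquoted apostrophe, for example, makes `shlex.split` raise `ValueError`. `parse_logfmt` is a hypothetical helper, not part of Loki.

```python
import shlex


def parse_logfmt(line):
    # Split on whitespace while honoring double-quoted values, then split each
    # token on its first "=". A bare key with no "=" maps to an empty string.
    fields = {}
    for token in shlex.split(line):
        key, _, value = token.partition("=")
        fields[key] = value
    return fields


logs = [
    'level=info msg="request served" status=200',
    'level=error msg="upstream timeout" status=504',
]

# Structure is interpreted only now, at query time; nothing was enforced at ingestion.
parsed = [parse_logfmt(line) for line in logs]
errors = [fields for fields in parsed if fields.get("level") == "error"]
print(errors[0]["msg"])  # upstream timeout
```

If a team changes its log fields tomorrow, only the query changes. No ingestion pipeline has to be migrated.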

On the other hand, ingestion-time preprocessing requires immense upfront effort, is extremely brittle to changes, and causes organizational friction.

The problem will always be that there is a wide variety of use cases, formats, and expertise across internal groups. But one of these logging approaches gives us flexibility around that issue, and the other does not.

Loki’s lack of formalized schemas isn’t to say it can’t be used for analytics, either. But it’s tailored towards developers and operators and prefers to enable incident response over historical analysis. That said, the next release of Loki will bring powerful analytical capabilities for ad-hoc metrics.

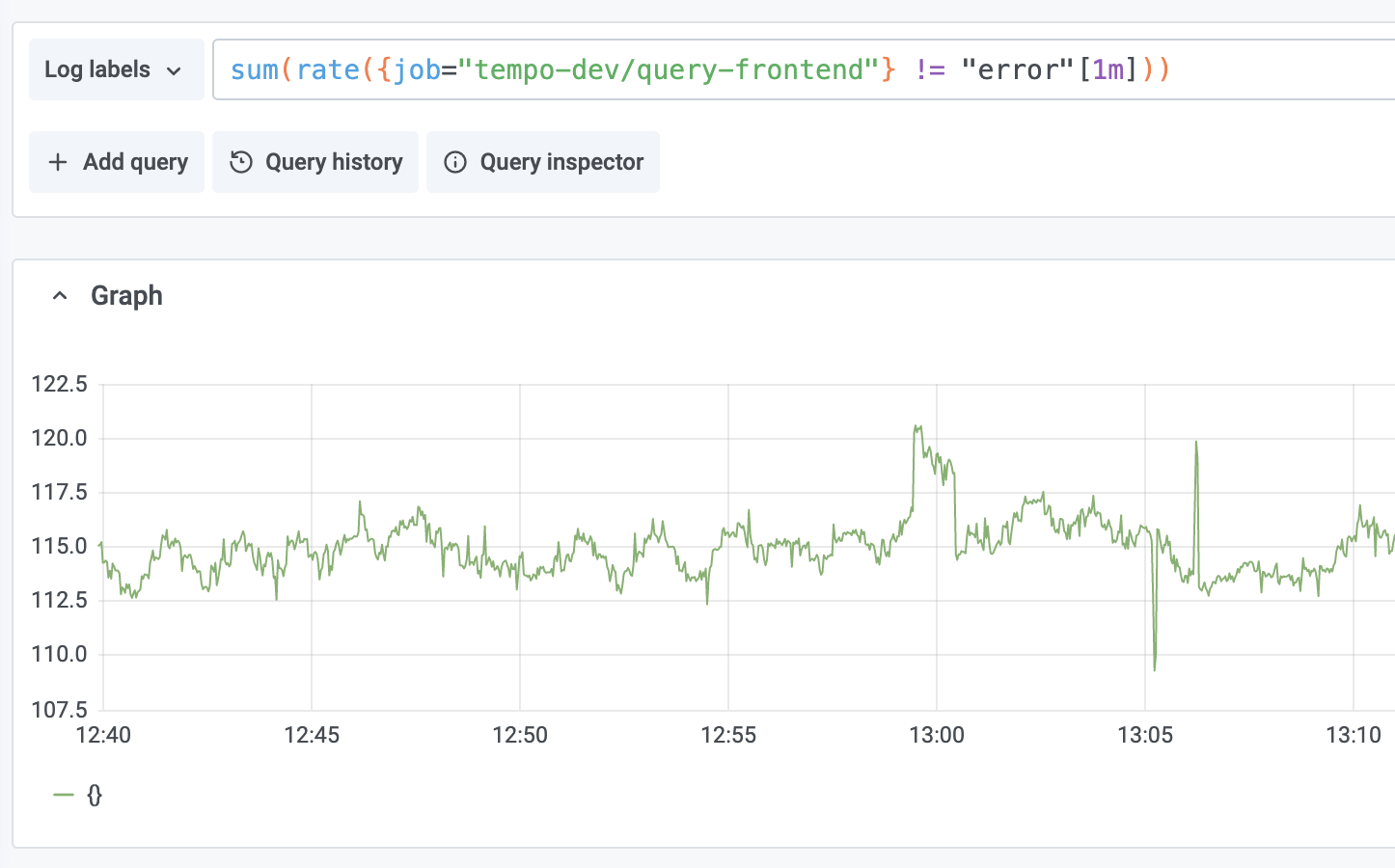

It’s not just grep, either. Its LogQL query language, modeled on Prometheus’s PromQL, enables rapid hypothesis testing and seamless switching between logs and metrics. For instance, generating error rates from log entries takes only a short query.
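
For example, a LogQL query such as `sum(rate({app="api"} |= "error" [5m]))` does three things. It selects streams by label, keeps lines containing `error`, and reports a per-second rate over a five-minute window. The Python sketch below approximates that computation for entries that have already been selected and merged. The `error_rate` helper, the sample entries, and the window-boundary handling are assumptions for illustration, not Loki's exact semantics.

```python
def error_rate(entries, now, window_seconds=300, needle="error"):
    # entries: (unix_timestamp_seconds, log_line) pairs already selected by labels.
    # Count matching lines with timestamps in (now - window_seconds, now] and divide
    # by the window length to get an approximate per-second rate.
    start = now - window_seconds
    matches = sum(1 for ts, line in entries if start < ts <= now and needle in line)
    return matches / window_seconds


entries = [
    (1000, "level=error msg=timeout"),
    (1100, "level=info msg=ok"),
    (1200, "level=error msg=refused"),
    (1250, "level=error msg=reset"),
]
print(error_rate(entries, now=1260))  # 0.01 (3 matching lines / 300 s)
```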

As mentioned before, some of my favorite things about Loki are what it enables us to avoid doing.

Remember our tiny index and schema-less data model? Loki allows us to avoid dealing with hot and cold indices, life cycle management, and one-off necromantic processes to reanimate old data when auditing concerns arise. Just ship off your old data to cheap object storage and don’t worry about managing successive tiers of performance-focused indices on expensive hardware.

Loki will create, rotate, and expire its own tiny index automatically, ensuring that it doesn’t grow too large and letting you transparently query any data within your configured retention period.
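
One way to picture automatic rotation and expiry is as a set of fixed-period index tables. Loki's index configuration does take a table `prefix` and a `period` (commonly `index_` and `24h`). However, the table naming and the expiry rule in this Python sketch are simplified assumptions. Writes go to the table for the current period, and a table becomes eligible for deletion once its whole period falls outside the retention window.

```python
DAY = 24 * 60 * 60  # one index period, in seconds


def table_for(ts, period=DAY, prefix="index_"):
    # Each table covers one fixed period; a timestamp maps to the table for its period.
    return f"{prefix}{ts // period}"


def expired_tables(tables, now, retention, period=DAY, prefix="index_"):
    # A table may be dropped once its entire period ends at or before now - retention.
    cutoff = now - retention
    expired = []
    for name in tables:
        period_number = int(name[len(prefix):])
        period_end = (period_number + 1) * period
        if period_end <= cutoff:
            expired.append(name)
    return expired


now = 100 * DAY + 3600  # hypothetical current time
tables = [table_for(now - days_ago * DAY) for days_ago in range(10)]
print(table_for(now))                                  # index_100
print(expired_tables(tables, now, retention=7 * DAY))  # ['index_92', 'index_91']
```

Because each table is small and bounded in time, rotation and expiry reduce to creating and deleting whole tables. No per-entry cleanup is needed.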

Loki also handles upgrades to its internal storage version seamlessly. Want to take advantage of some new improvements? No problem. Loki records the boundaries between storage versions, splits queries transparently across them, and stitches the results back together. No need to worry about unloading and reloading old schema versions for compatibility.
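
Loki's `schema_config` lists storage periods by `from` date, each with its own schema version. The Python sketch below works under simplified assumptions: day granularity and a hypothetical two-period config with versions `v10` and `v11`. It shows how a query range could be split at those boundaries so each piece is served by exactly one schema before the results are stitched back together.

```python
from datetime import date, timedelta

# Hypothetical schema periods, sorted by start date: (first day active, schema version).
SCHEMA_PERIODS = [
    (date(2020, 1, 1), "v10"),
    (date(2020, 6, 1), "v11"),
]


def split_by_schema(start, end, periods=SCHEMA_PERIODS):
    # Split the inclusive day range [start, end] into pieces that each fall
    # within a single schema period, tagged with that period's version.
    parts = []
    for i, (period_start, version) in enumerate(periods):
        if i + 1 < len(periods):
            period_end = periods[i + 1][0] - timedelta(days=1)
        else:
            period_end = end  # the latest period is open-ended
        lo, hi = max(start, period_start), min(end, period_end)
        if lo <= hi:
            parts.append((version, lo, hi))
    return parts


for piece in split_by_schema(date(2020, 5, 20), date(2020, 6, 10)):
    print(piece)
# ('v10', datetime.date(2020, 5, 20), datetime.date(2020, 5, 31))
# ('v11', datetime.date(2020, 6, 1), datetime.date(2020, 6, 10))
```

Old data stays in its original format. Only the query planner needs to know where each version begins.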

(If you want to reduce your operational overhead even more, check out Grafana Cloud, Grafana Labs’ fully managed observability platform for metrics, logs, and dashboards at scale.)

How Loki improves your teams

Before I leave you, I’d like to talk about devs vs. ops. It’s become increasingly popular (and with good reason) to marry these two.

There’s a distinction here, though: don’t conflate understanding how and where software is deployed with running observability systems. Let your application developers log what they want without worrying whether their logging schema will break some preprocessing pipeline feeding their observability tools.

As mentioned previously, we prefer logfmt at Grafana Labs because its simple output enables grep-friendly filtering and manipulation at query time. Point being, some level of consistency is nice, but not necessary. Empower your developers and operators to focus on the essence of what they need without worrying over the paradigms of your observability system.

Loki’s lack of a user-defined schema and its unindexed nature remove cognitive load from your developers and operators, allowing them to refocus on their core work and then pivot into querying Loki when desired.

Let your operations team understand running and scaling Loki, including the ancillary needs of configuring Promtail (or whichever agent you use). I suggest using labels to attach environmental identifiers to your logs, like application=api, env=prod, cluster=us-central, etc. Then users can mix and match label filters to quickly refine where problems occur and take advantage of the massively parallelizable nature of Loki’s read path to make arbitrary queries across a potentially huge dataset at low cost.
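
The mix-and-match filtering described above relies on Prometheus-style label matchers: `=`, `!=`, `=~`, and `!~`, where a regex must match the whole value. The following is a minimal Python sketch of selecting streams with a selector like `{application="api", env!="dev", cluster=~"us-.*"}`. The `matches` function and the sample streams are hypothetical. Treating a missing label as an empty string mirrors Prometheus behavior.

```python
import re

# LogQL/Prometheus-style matcher operators; regex matches must cover the whole value.
OPS = {
    "=": lambda actual, value: actual == value,
    "!=": lambda actual, value: actual != value,
    "=~": lambda actual, value: re.fullmatch(value, actual) is not None,
    "!~": lambda actual, value: re.fullmatch(value, actual) is None,
}


def matches(labels, matchers):
    # A stream is selected only if every (label, op, value) matcher holds.
    # A missing label is treated as the empty string.
    return all(OPS[op](labels.get(name, ""), value) for name, op, value in matchers)


streams = [
    {"application": "api", "env": "prod", "cluster": "us-central"},
    {"application": "api", "env": "dev", "cluster": "us-central"},
    {"application": "web", "env": "prod", "cluster": "eu-west"},
]

# Roughly {application="api", env!="dev", cluster=~"us-.*"}
selector = [("application", "=", "api"), ("env", "!=", "dev"), ("cluster", "=~", "us-.*")]
print([s for s in streams if matches(s, selector)])
```

Each matcher narrows the candidate streams before any log content is read. That narrowing is what keeps broad, ad-hoc queries cheap.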

And don’t worry: open source knowledge is transferable. Because Loki is open source, there’s relatively little barrier to entry for understanding it. There’s no need to hire only from other large organizations or to fear that incoming engineers won’t have experience with your chosen tooling.

Loki can be run in single binary mode as an all-in-one on a single machine (like Prometheus) and then scaled out horizontally as your needs for scale, redundancy, or availability grow. We have a wide range of users who run Loki on everything from Raspberry Pis to massive, horizontally scaled clusters.

Loki doesn’t do everything, but we think it makes excellent trade-offs for its use case: a fast, cost-effective, highly scalable log aggregator with excellent integrations to the Prometheus label model that allows for effortless switching between metrics and logs.