Where did all my spans go? A guide to diagnosing dropped spans in Jaeger distributed tracing

Nothing is more frustrating than finally finding what looks like the perfect trace, only to see that it’s missing critical spans. In fact, one of the most common questions from new users and operators of Jaeger, the popular distributed tracing system, is: “Where did all my spans go?”

In this post we’ll discuss how to diagnose and fix dropped spans at each stage of the Jaeger span ingestion pipeline. I also highly recommend reviewing the official Jaeger documentation on performance tuning, which covers some of the same territory.

Jaeger architecture

Jaeger can be deployed in a number of configurations. In this post, we’ll focus specifically on the following pipeline:

In-process client -> Jaeger Agent -> Jaeger Collector -> Kafka -> Jaeger Ingester -> Cassandra

We primarily use Go at Grafana Labs, so we’ll focus specifically on the Jaeger Go Client. All metrics are scraped, aggregated, and queried using Prometheus. For more details on how Grafana Labs uses Jaeger, check out this blog post.

F5 is magic

But first! Before you go diving into your infrastructure attempting to piece together where a span may have been lost, just refresh.

The Jaeger UI will often find traces in your backend that match your search criteria before all of their spans have been ingested. This is particularly common with very recent traces.

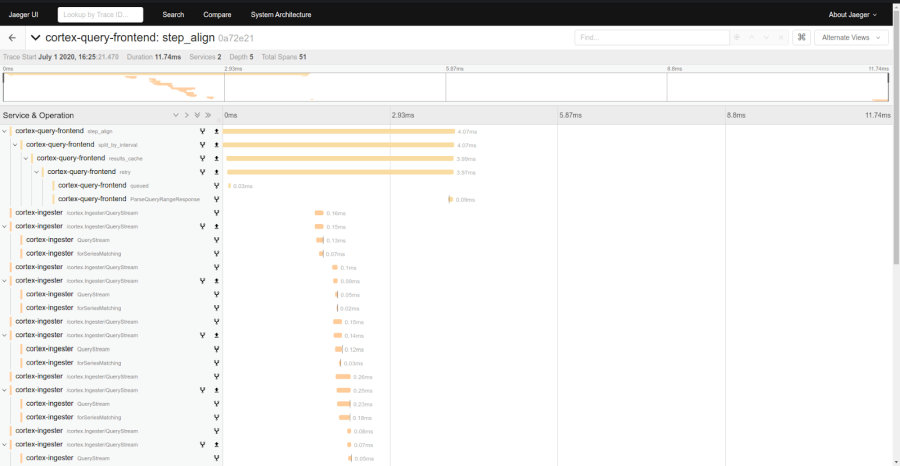

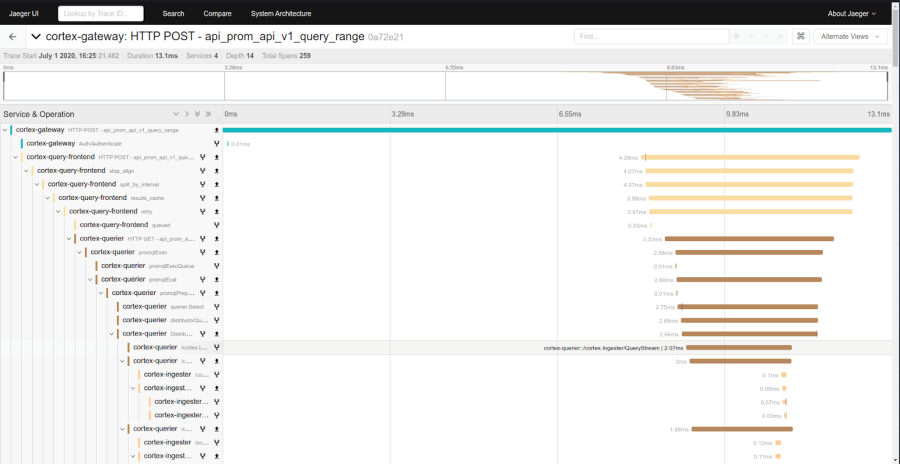

Before F5:

After F5:

If refreshing fails you, then it’s time to start looking at different elements of span ingestion and trying to figure out where spans are being dropped.

In-process client

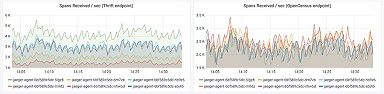

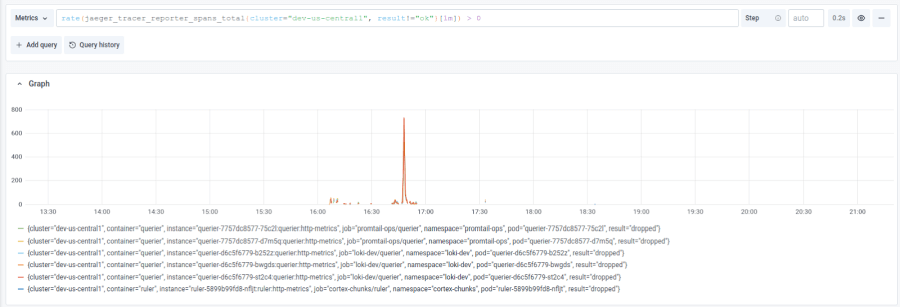

We are going to start in the process responsible for producing spans and move toward our backend, checking metrics as we go. For in-process metrics, we want to look at jaeger_tracer_reporter_spans_total. This particular metric is produced by the Jaeger Go Client and can tell us when spans are dropped before they make it off the process:

We can see here that there was a spike of dropped spans from some Loki components that resulted in broken traces.
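If you want to check a single process without going through Prometheus, you can also read the counter straight from its metrics endpoint. The sketch below parses Prometheus text-format lines and sums the dropped-span series. It assumes the Go client labels this counter with `result="dropped"` (alongside values such as `ok` and `err`); `droppedSpans` is a hypothetical helper and the sample input is made up.

```go
package main

import (
	"bufio"
	"fmt"
	"strconv"
	"strings"
)

// droppedSpans sums every sample of the reporter counter whose labels include
// result="dropped" (an assumption about the Go client's labels). It expects
// Prometheus text exposition format, one sample per line.
func droppedSpans(exposition string) (float64, error) {
	const metric = "jaeger_tracer_reporter_spans_total{"
	var total float64
	sc := bufio.NewScanner(strings.NewReader(exposition))
	for sc.Scan() {
		line := strings.TrimSpace(sc.Text())
		// Comments (# HELP / # TYPE) and other series fail this check and are skipped.
		if !strings.HasPrefix(line, metric) || !strings.Contains(line, `result="dropped"`) {
			continue
		}
		// The sample value follows the closing brace of the label set;
		// an optional timestamp may follow the value.
		end := strings.LastIndex(line, "}")
		fields := strings.Fields(line[end+1:])
		if len(fields) == 0 {
			return 0, fmt.Errorf("no value in line %q", line)
		}
		v, err := strconv.ParseFloat(fields[0], 64)
		if err != nil {
			return 0, err
		}
		total += v
	}
	return total, sc.Err()
}

func main() {
	sample := `# TYPE jaeger_tracer_reporter_spans_total counter
jaeger_tracer_reporter_spans_total{result="dropped"} 42
jaeger_tracer_reporter_spans_total{result="err"} 3
jaeger_tracer_reporter_spans_total{result="ok"} 10500
`
	n, err := droppedSpans(sample)
	if err != nil {
		panic(err)
	}
	fmt.Printf("dropped spans so far: %.0f\n", n)
}
```

Because the counter is cumulative since the process started, what matters is how fast it grows: compare two readings over time (or use `rate()` in Prometheus) rather than looking at a single absolute value.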

Clients that speak Thrift should also report their dropped spans to the agent, which exposes them as the metric jaeger_agent_client_stats_spans_dropped_total. Try both metrics and see which works better for you!

The fix!

The Jaeger client buffers spans in an internal queue while it sends them to the Jaeger Agent. Dropped spans simply mean that your process filled the queue faster than it could drain it. Two options for fixing this are:

Increase the queue size using the environment variable JAEGER_REPORTER_MAX_QUEUE_SIZE.

Move the agent closer to the process. For instance, you could run it on the same machine or in a sidecar.
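To make the failure mode concrete, here is a minimal sketch of a reporter-style queue: a buffered channel that a sender goroutine would normally drain, where `Enqueue` never blocks the caller and instead counts a drop when the buffer is full. It is modeled loosely on the behavior described above and is not the Jaeger client's code. The `spanQueue` type, the `queueSizeFromEnv` helper, and the fallback size of 100 are hypothetical; only the `JAEGER_REPORTER_MAX_QUEUE_SIZE` variable name comes from this post.

```go
package main

import (
	"fmt"
	"os"
	"strconv"
	"sync/atomic"
)

type span struct{ name string }

// spanQueue is a hypothetical bounded queue: producers never block,
// and spans are counted as dropped when the buffer is full.
type spanQueue struct {
	ch      chan span
	dropped atomic.Int64
}

func newSpanQueue(size int) *spanQueue {
	return &spanQueue{ch: make(chan span, size)}
}

// Enqueue tries to buffer s without blocking. It returns false (a drop)
// if the queue is already full because the sender has not drained it yet.
func (q *spanQueue) Enqueue(s span) bool {
	select {
	case q.ch <- s:
		return true
	default:
		q.dropped.Add(1)
		return false
	}
}

// queueSizeFromEnv reads JAEGER_REPORTER_MAX_QUEUE_SIZE, falling back to a
// hypothetical default when the variable is unset or not a positive integer.
func queueSizeFromEnv(fallback int) int {
	if v, err := strconv.Atoi(os.Getenv("JAEGER_REPORTER_MAX_QUEUE_SIZE")); err == nil && v > 0 {
		return v
	}
	return fallback
}

func main() {
	q := newSpanQueue(queueSizeFromEnv(100))
	// No sender is draining the queue here, so everything past its capacity is dropped.
	for i := 0; i < 150; i++ {
		q.Enqueue(span{name: fmt.Sprintf("op-%d", i)})
	}
	fmt.Printf("capacity=%d buffered=%d dropped=%d\n", cap(q.ch), len(q.ch), q.dropped.Load())
}
```

The non-blocking send is the key design choice: it keeps tracing from ever stalling application code, at the cost of silently losing spans once the buffer fills, which is exactly why the dropped-span counter is worth watching.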

Other possibilities

Unfortunately, there is another possibility at this point that’s more difficult to diagnose. By default, your process will be using Thrift Compact over UDP to communicate with the agent. UDP is a best-effort protocol, and it’s possible that packets are dropped without either the agent or the process being aware. In this case I’d recommend consulting network metrics published by a tool like node exporter.
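On Linux, the UDP counters that node exporter's netstat collector can report (for example `node_netstat_Udp_RcvbufErrors`, datagrams dropped because a socket's receive buffer was full) come from `/proc/net/snmp`. The minimal sketch below reads them directly, which can help on a host where you can't easily query Prometheus. The `udpCounters` helper is hypothetical, and since the set of fields varies by kernel version, it looks fields up by name rather than by position.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strconv"
	"strings"
)

// udpCounters parses the two "Udp:" lines (a header row, then a value row)
// from /proc/net/snmp-formatted text into a name -> value map.
func udpCounters(snmp string) (map[string]uint64, error) {
	var header []string
	sc := bufio.NewScanner(strings.NewReader(snmp))
	for sc.Scan() {
		fields := strings.Fields(sc.Text())
		if len(fields) == 0 || fields[0] != "Udp:" {
			continue // skips Ip:, Tcp:, UdpLite:, and so on
		}
		if header == nil {
			header = fields[1:]
			continue
		}
		values := fields[1:]
		if len(values) != len(header) {
			return nil, fmt.Errorf("udp header has %d fields, values have %d", len(header), len(values))
		}
		out := make(map[string]uint64, len(header))
		for i, name := range header {
			v, err := strconv.ParseUint(values[i], 10, 64)
			if err != nil {
				return nil, err
			}
			out[name] = v
		}
		return out, nil
	}
	if err := sc.Err(); err != nil {
		return nil, err
	}
	return nil, fmt.Errorf("no Udp: section found")
}

func main() {
	raw, err := os.ReadFile("/proc/net/snmp") // Linux only
	if err != nil {
		fmt.Println("cannot read /proc/net/snmp:", err)
		return
	}
	c, err := udpCounters(string(raw))
	if err != nil {
		fmt.Println(err)
		return
	}
	// Both counters are cumulative since boot; compare two readings over time.
	fmt.Printf("InErrors=%d RcvbufErrors=%d\n", c["InErrors"], c["RcvbufErrors"])
}
```

Run this on the agent's host: a receive-buffer error count that climbs while spans go missing points at the kernel discarding datagrams before the agent ever reads them.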

Additionally, if your clients are up to date with the latest changes to the Thrift protocol, the agent will expose jaeger_agent_client_stats_batches_received_total and jaeger_agent_client_stats_batches_sent_total. Comparing these values can show whether batches are being dropped between the client and the agent. However, not every client supports this.
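Assuming the "sent" counter reflects the batches your clients say they sent and the "received" counter counts the batches the agent actually got, the gap between their increases over the same window approximates the batches lost in transit. The sketch below does that arithmetic on two readings of each counter; the `counterWindow` type and `batchLoss` function are hypothetical, and in practice you would take these deltas with Prometheus's `increase()`.

```go
package main

import "fmt"

// counterWindow holds two readings of a monotonically increasing counter.
type counterWindow struct{ start, end float64 }

// delta returns the counter's increase over the window. A reading that went
// down means the counter reset (e.g. a restart), so we fall back to end.
func (w counterWindow) delta() float64 {
	if w.end < w.start {
		return w.end
	}
	return w.end - w.start
}

// batchLoss returns how many batches clients sent but the agent never saw,
// and that number as a fraction of the batches sent.
func batchLoss(sent, received counterWindow) (lost, ratio float64) {
	s, r := sent.delta(), received.delta()
	lost = s - r
	if lost < 0 {
		lost = 0 // counters sampled at slightly different instants
	}
	if s == 0 {
		return lost, 0
	}
	return lost, lost / s
}

func main() {
	sent := counterWindow{start: 1000, end: 1100}
	received := counterWindow{start: 990, end: 1085}
	lost, ratio := batchLoss(sent, received)
	fmt.Printf("lost %.0f batches (%.1f%%)\n", lost, ratio*100)
}
```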

Agent

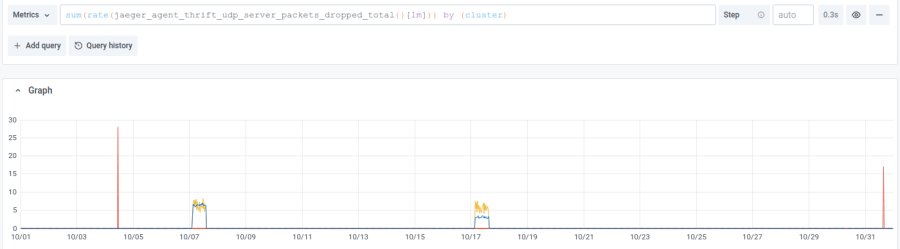

On the agent, I’d recommend paying attention to the jaeger_agent_thrift_udp_server_packets_dropped_total metric. It indicates whether the agent is failing to drain its queue fast enough as it forwards spans to the Jaeger Collector.

The fix!

If you’re seeing spikes in this metric, consider:

Increasing the queue size. Note that there are different switches for different protocols. Most people will be using jaeger-compact.

--processor.jaeger-binary.server-queue-size int length of the queue for the UDP server (default 1000)

--processor.jaeger-compact.server-queue-size int length of the queue for the UDP server (default 1000)

--processor.zipkin-compact.server-queue-size int length of the queue for the UDP server (default 1000)

Adding more Jaeger Agents to spread the load better or adding more Jaeger Collectors to consume them faster.
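How much bigger should the queue be? One back-of-envelope approach: during a burst in which packets arrive faster than the agent can forward them, the backlog grows at the difference between the two rates, so the queue must hold roughly that difference multiplied by the burst length. The sketch below assumes steady rates for the duration of the burst, which real traffic rarely has, so treat the result as a starting point. `queueSizeForBurst` is a hypothetical helper and the numbers are made up.

```go
package main

import (
	"fmt"
	"math"
)

// queueSizeForBurst estimates the queue length needed to absorb a burst
// without drops, assuming constant arrival and drain rates (items/second)
// for burstSeconds. If the drain rate keeps up, no backlog builds.
func queueSizeForBurst(arrivalPerSec, drainPerSec, burstSeconds float64) int {
	backlogGrowth := arrivalPerSec - drainPerSec
	if backlogGrowth <= 0 {
		return 0
	}
	return int(math.Ceil(backlogGrowth * burstSeconds))
}

func main() {
	// e.g. 3000 packets/s arriving for 2 s while the agent forwards 2500/s
	fmt.Println("queue size needed:", queueSizeForBurst(3000, 2500, 2))
}
```

If the estimate comes out far larger than you're comfortable holding in memory, that is a hint that the other fix, more agents or more collectors, is the better lever.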

It’s also worth checking other metrics such as jaeger_agent_client_stats_batches_received_total to determine if there is an imbalance in traffic to your agents.
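One simple way to quantify imbalance is to compare each agent's rate of received batches (for example, the per-agent `rate()` of jaeger_agent_client_stats_batches_received_total) against the fleet average. The sketch below flags agents whose rate exceeds the mean by a chosen factor. The `busyAgents` helper, the 1.5 threshold, and the agent names are illustrative assumptions.

```go
package main

import (
	"fmt"
	"sort"
)

// busyAgents returns the agents whose batch rate exceeds the fleet mean by
// more than factor (e.g. 1.5 = 50% above average), sorted by name.
func busyAgents(ratePerAgent map[string]float64, factor float64) []string {
	if len(ratePerAgent) == 0 {
		return nil
	}
	var sum float64
	for _, r := range ratePerAgent {
		sum += r
	}
	mean := sum / float64(len(ratePerAgent))
	var hot []string
	for name, r := range ratePerAgent {
		if r > mean*factor {
			hot = append(hot, name)
		}
	}
	sort.Strings(hot)
	return hot
}

func main() {
	rates := map[string]float64{"agent-a": 120, "agent-b": 110, "agent-c": 400, "agent-d": 130}
	fmt.Println("overloaded agents:", busyAgents(rates, 1.5))
}
```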

Collector

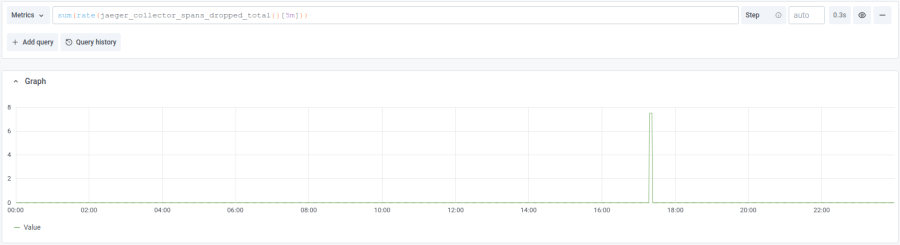

The Jaeger Collector also has a queue, but it behaves a little differently because it writes to an external backend such as Kafka, Elasticsearch, or Cassandra. Here the metric to watch is jaeger_collector_spans_dropped_total. Kafka is generally very quick to accept spans, so in our infrastructure this is one of the least likely places to see dropped spans.

The fix!

You can probably guess the pattern now. Configure that queue!

There are two primary options to adjust the performance of the collector queue. Here at Grafana Labs, we have set the queue size to 10000 and set the number of workers to 100 (YMMV).

--collector.num-workers int The number of workers pulling items from the queue (default 50)

--collector.queue-size int The queue size of the collector (default 2000)

If you’re feeling experimental, there is a new option that sets the maximum amount of memory available to the collector queue. It can cause elevated CPU usage, but comes with the added benefit of publishing span size metrics.

--collector.queue-size-memory uint (experimental) The max memory size in MiB to use for the dynamic queue.

If you are having persistent issues here, I would also review the jaeger_collector_save_latency histogram and adjust your backend accordingly.

Ingester

The ingester is one piece of Jaeger infrastructure that does not have an internal queue because it relies on Kafka for its queueing. Errors in the ingester and latency to the backend generally just slow down ingestion, but don’t necessarily result in dropped spans. For the ingester I’d primarily watch errors and latency to make sure that spans are being inserted into your backend in a timely manner. In our case, these are jaeger_cassandra_errors_total and the jaeger_cassandra_latency_ok histogram.

Another metric, jaeger_ingester_sarama_consumer_offset_lag, is also useful for determining if ingesters are falling behind on the Kafka queue.
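Consumer lag is, per partition, the distance between the newest offset in the topic and the offset the consumer group has reached; summed across partitions it tells you how many messages are waiting to be ingested. A lag that keeps growing means ingesters are falling behind rather than just absorbing a burst. The sketch below computes both; `partitionOffsets`, `totalLag`, and `lagGrowing` are hypothetical names, and the code does not talk to Kafka.

```go
package main

import "fmt"

// partitionOffsets holds, for one partition, the newest offset available in
// the topic (high watermark) and the consumer group's current position.
type partitionOffsets struct {
	highWatermark int64
	consumed      int64
}

// totalLag sums per-partition lag, clamping at zero in case the two offsets
// were read at slightly different moments.
func totalLag(parts []partitionOffsets) int64 {
	var lag int64
	for _, p := range parts {
		if d := p.highWatermark - p.consumed; d > 0 {
			lag += d
		}
	}
	return lag
}

// lagGrowing reports whether every successive lag sample is larger than the
// previous one, a sign that ingesters are steadily falling behind.
func lagGrowing(samples []int64) bool {
	if len(samples) < 2 {
		return false
	}
	for i := 1; i < len(samples); i++ {
		if samples[i] <= samples[i-1] {
			return false
		}
	}
	return true
}

func main() {
	parts := []partitionOffsets{{1500, 1480}, {1720, 1700}, {900, 905}}
	fmt.Println("total lag:", totalLag(parts))
	fmt.Println("falling behind:", lagGrowing([]int64{40, 120, 310, 700}))
}
```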

The fix!

If your ingester is failing to offload spans successfully to your backend, you will need to research your backend metrics and configuration and adjust accordingly. Maybe it’s time to scale your cluster up?

To sum up: Queues, queues, and more queues

Well, that’s it! As you can see, reducing dropped spans is generally a matter of queue management. If spans are being dropped, you can increase queue sizes, reduce latency to the next stage in the pipeline, or increase the capacity of the next stage.
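The three remedies can be seen in a toy model: a single queue that receives some items per tick, forwards up to a fixed number per tick to the next stage, and drops whatever doesn't fit. This simulation is an illustrative assumption, not a model of any specific Jaeger component. `simulate` and its parameters are hypothetical, and the per-tick drain stands in for both lower latency to the next stage and more capacity in it.

```go
package main

import "fmt"

// simulate runs a discrete-time queue for len(arrivals) ticks. Each tick,
// arrivals[t] items arrive, anything beyond queueSize is dropped, and then
// up to drainPerTick items are forwarded downstream. It returns total drops.
func simulate(arrivals []int, queueSize, drainPerTick int) int {
	queued, dropped := 0, 0
	for _, a := range arrivals {
		queued += a
		if queued > queueSize {
			dropped += queued - queueSize
			queued = queueSize
		}
		if queued > drainPerTick {
			queued -= drainPerTick
		} else {
			queued = 0
		}
	}
	return dropped
}

func main() {
	// A burst: quiet, then three heavy ticks, then quiet again.
	burst := []int{50, 50, 180, 180, 180, 50, 50}
	fmt.Println("baseline:         ", simulate(burst, 200, 100))
	fmt.Println("bigger queue:     ", simulate(burst, 1000, 100))
	fmt.Println("faster next stage:", simulate(burst, 200, 200))
}
```

In the bigger-queue run the backlog is still draining after the burst ends, which is why a larger queue fixes bursts but not sustained overload; for that, the next stage has to get faster or bigger.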

This guide is definitely not exhaustive. There are plenty of other metrics that Jaeger exposes to help describe your system and understand why spans may be dropping. Curl the /metrics endpoint, read the code, and build better dashboards!