The Future of Cortex: Into the Next Decade

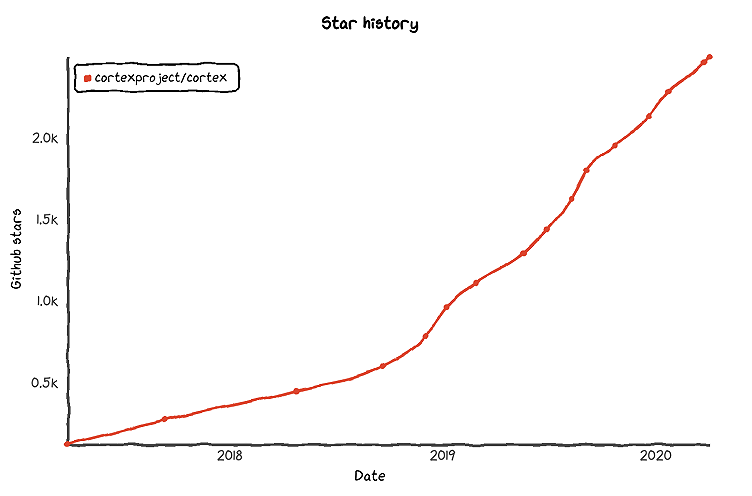

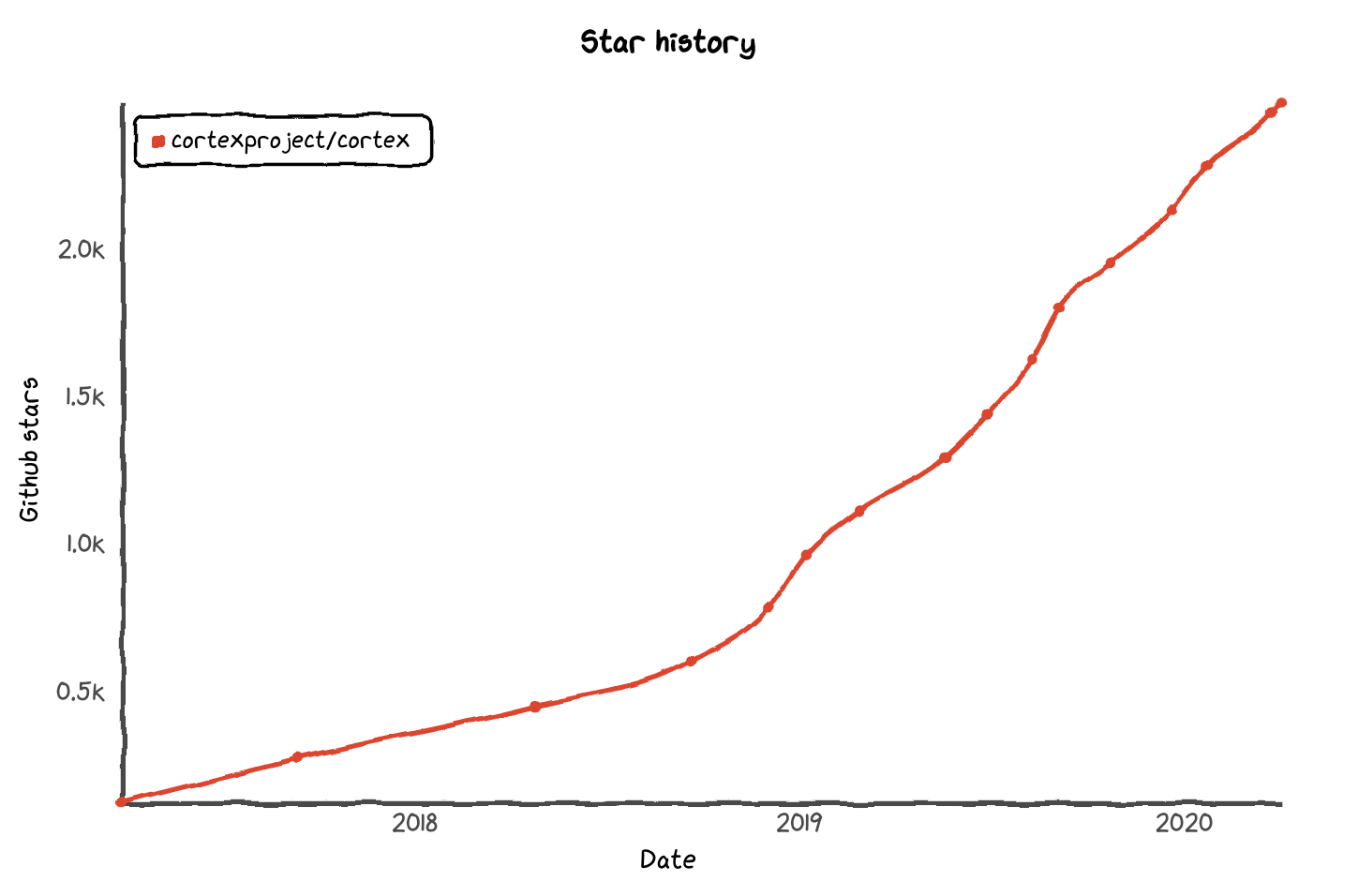

The Cortex project, a horizontally scalable Prometheus implementation and CNCF project, is more than three years old and shows no sign of slowing down. Right now, there are a lot of things going on in Cortex, but it isn’t always clear why we’re doing them. So I want to provide some clarity for both the Cortex community – and the wider Prometheus community – regarding our intentions, especially with regard to the Thanos project.

A Little Background

Prometheus is a force to be reckoned with in the monitoring space. These days, everyone from hobbyists tracking their beer or bread production to startups and enterprises is using its data model, and they’re building exporters or exposing Prometheus metrics for everything. But challenges remain in the following areas:

Long-Term Storage

Prometheus defaults to 15 days of retention, so data older than 15 days is deleted. This default is largely a side-effect of older versions: the size of the disk was unknown, and 15 days seemed like a sane default. (Changing it now would be a breaking change and would require a 3.0 release.) The 2.0 release made massive strides in reducing the memory overhead of older data, and that work continues, helping Prometheus store large amounts of data.

Even with all these improvements, gaps remain that are inherent to the single-node architecture. If we lose a node or if the data is corrupted, it’s not easy to get that data back. The recommended solution is to run a pair of Prometheus instances, but if one of them dies, you end up with only one copy of the data and potential gaps in graphs – plus, there’s no “automatic” or easy way to replicate the lost data yet.

Global View

Prometheus is a single-node system, and the way to scale it is via functional sharding (which loosely translates to each team getting its own Prometheus pair). Since Prometheus needs to be close to the systems it monitors, each cluster also needs its own Prometheus pair. Although this works, there is no seamless and efficient way to combine the data from different Prometheus servers.
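To make the gap concrete, here is a minimal Go sketch of the do-it-yourself workaround: query every server separately and merge the results on the client. The `Series` type, the `mergeGlobalView` function and the `cluster` label are hypothetical, chosen only for illustration. The point is that every query still has to fan out to every server, and nothing here deduplicates HA pairs or spreads the work.

```go
package main

import "sort"

// Series is a simplified time series (label set plus sample values), assumed
// for illustration; it is not the Prometheus or Thanos data model.
type Series struct {
	Labels  map[string]string
	Samples []float64
}

// mergeGlobalView naively combines per-server query results into one view.
// Each series gets a hypothetical "cluster" label so that identical series
// from different clusters do not collide.
func mergeGlobalView(results map[string][]Series) []Series {
	clusters := make([]string, 0, len(results))
	for c := range results {
		clusters = append(clusters, c)
	}
	sort.Strings(clusters) // map iteration order is random; sort for stable output

	var merged []Series
	for _, c := range clusters {
		for _, s := range results[c] {
			// Copy the labels so the caller's input series are not mutated.
			labels := make(map[string]string, len(s.Labels)+1)
			for k, v := range s.Labels {
				labels[k] = v
			}
			labels["cluster"] = c
			merged = append(merged, Series{Labels: labels, Samples: s.Samples})
		}
	}
	return merged
}
```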

There are many solutions out there that aim to solve these two problems, but the most serious contenders (I’m not biased at all :P) are Thanos and Cortex. Both have very similar goals, and both were initially authored and are still maintained by Prometheus maintainers, but they take drastically different approaches. Thanos has more users and is easier to use, while Cortex has been around longer and has been run in larger clusters. What’s more, Cortex has been through 18+ months of rigorous performance-improvement sprints, which have made it extremely fast for large queries. (A much deeper comparison is in the blog post “Two Households, Both Alike in Dignity: Cortex and Thanos.”)

Where Cortex Fits In

Prometheus is extremely scalable and can handle millions of series with ease. Most companies (or teams) only need one or two Prometheus servers. If what they want is just long-term storage, then Thanos is a no-brainer. It’s straightforward to set up and works pretty well for most users. It has an incremental onboarding process that gives immediate value to users and lets them add complexity as needed.

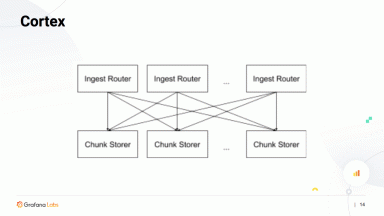

Cortex, on the other hand, requires a NoSQL store and is documented poorly in comparison – although this is something we’ve been improving. However, once you have it up and running it can scale to hundreds of millions of series and is extremely fast to query.

Cortex is more for enterprises that want a centrally managed monitoring experience for their internal teams. It’s for companies that have 25 million or more series across all their clusters and want to store that data for years. For example, GoJek, Southeast Asia’s fastest-growing transport, logistics, hyperlocal delivery and payments company, uses Cortex as their central monitoring solution and offers it to all their teams. The teams set up Prometheus and forward metrics to “Lens,” the system GoJek built on top of Cortex. From there, the teams not only get a fully compatible and super-fast PromQL endpoint, but they also get visibility into the amount of metrics they’re sending and can centrally configure their alerts. Meanwhile, operators are able to centrally manage their metrics and alerting infrastructure and help teams with best practices. With Cortex, GoJek can also enforce limits per team to make sure no single team uses too many resources, and can do “billing” for accountability. Further, the system is multi-tenant, so the teams only have access to their own metrics.

This centralization allows organizations to build “centers of excellence” through focus and domain-specific expertise. People who are competent in Prometheus handle the metrics infrastructure, rather than the maintenance burden being spread across every team in the organization. Compare this to the “one Prometheus per team” model, where the central operations team has to constantly provision, tune, and upgrade many different Prometheus servers, and has to query each server in each cluster to get usage stats out. I think Cortex is key to helping these enterprises migrate to Prometheus across their entire fleet, and this centralized model, rather than simple long-term storage, is the main use case for most adopters.

The Missing Parts

Cortex enables the enterprise workflow described above and adoption is increasing. We want to accelerate the adoption by focusing on the following:

Ending Dependence on a NoSQL Store

Nobody wants to run Cassandra or pay for Dynamo/Bigtable. One of Thanos’ many wins is that it relies only on an object store for all its data needs. Most enterprises have access to, or run, their own S3-compatible object store, and getting access to it is very easy for the monitoring team. We should also drop our reliance on NoSQL stores and make them optional.

Grafana Labs and DigitalOcean are already making a concerted effort to remove the dependency on a NoSQL store. Like Thanos, we’re planning to use Prometheus’ TSDB with an object store. We’re even using the Thanos code for it, and we’re actively collaborating with the Thanos community. The plan with this project is for users to be able to use Thanos and Cortex interchangeably (they can read data written by each other, with some caveats), which will open up new migration capabilities. The initial results are super positive, but they’re not without trade-offs.

Cortex has gone through rigorous performance improvements over the past couple of years and has become incredibly fast, especially for PromQL queries. One of Grafana Labs’ customers, which regularly runs queries that span months and touch millions of series, has been impressed with the query performance (<2s for 99.9% of their queries). Storing everything in an object store with some local caching means that data access might be slower, and we need to ensure that we don’t give up all those performance gains. Being able to ingest millions of time series isn’t useful if we can’t query them back in reasonable time. We’ll know soon whether we can make things fast enough, and if we can’t, we’ll look at building node-local storage that is scalable and performant.
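One common way to claw back that performance is a read-through cache in front of the object store. The Go sketch below shows the idea under stated assumptions: `ObjectStore` is a hypothetical one-method interface standing in for an S3-compatible bucket, `memStore` is an in-memory fake that counts fetches, and the cache never evicts. It is not how Cortex or Thanos actually cache data.

```go
package main

import (
	"errors"
	"sync"
)

// ObjectStore is a hypothetical minimal view of an S3-compatible bucket.
type ObjectStore interface {
	Get(key string) ([]byte, error)
}

// memStore is an in-memory stand-in for a remote bucket that counts fetches.
type memStore struct {
	objects map[string][]byte
	fetches int
}

func (m *memStore) Get(key string) ([]byte, error) {
	m.fetches++
	v, ok := m.objects[key]
	if !ok {
		return nil, errors.New("object not found")
	}
	return v, nil
}

// cachingStore serves repeated reads locally and only goes to the backend
// on a miss. It never evicts, and two concurrent misses for the same key
// may both reach the backend; both are simplifications for the sketch.
type cachingStore struct {
	backend ObjectStore
	mu      sync.Mutex
	cache   map[string][]byte
}

func newCachingStore(backend ObjectStore) *cachingStore {
	return &cachingStore{backend: backend, cache: map[string][]byte{}}
}

func (c *cachingStore) Get(key string) ([]byte, error) {
	c.mu.Lock()
	v, ok := c.cache[key]
	c.mu.Unlock()
	if ok {
		return v, nil // fast path: local hit
	}
	v, err := c.backend.Get(key) // slow path: remote fetch
	if err != nil {
		return nil, err
	}
	c.mu.Lock()
	c.cache[key] = v
	c.mu.Unlock()
	return v, nil
}
```

Whether this is “fast enough” depends on how often large queries hit cold data, which is exactly the open question above.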

Auth Gateway

We’ve always said that authentication is beyond the scope of Cortex, but it’s one of the basics users require. We’ve simplified things for users who don’t need multi-tenancy, but those who do need it are sent to chase obscure nginx configs. One of the reasons we’ve deemed auth out of scope is the sheer number of methods and systems used across companies. But we’re considering adding an auth component that does basic auth based on a simple config file. A file as simple as the one below would suffice and make initial onboarding much easier:

```yaml
tenants:
  - user: infra-team
    password: basic-auth-password
  - user: api-team
    password: basic-auth-password2
```

I can see organizations using this for a long time before their needs evolve and they go build their own system.

A Billing System

With Cortex, we track and store the usage per tenant but we never give out dashboards or tools that will let the administrators see this. That’s one of the first things Cortex operators build for themselves. We need to build a simple tool that puts the billing metrics not only back into the user tenant but also into an admin tenant. That way, the admins can see how much teams are using without access to their data. This would also let each team regularly monitor their usage and even alert on it.
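The routing idea can be sketched in a few lines of Go: every usage record is written back into the tenant that produced it and also into an admin tenant. The `Usage` type, the metric names and the `routeUsage` function are hypothetical; a real tool would presumably read Cortex’s own per-tenant usage metrics and write them back through the normal ingestion path.

```go
package main

// Usage is one per-tenant usage measurement, e.g. active series or samples
// ingested. Metric names used with it here are hypothetical.
type Usage struct {
	Tenant string
	Metric string
	Value  float64
}

// routeUsage returns, per destination tenant, the usage records to write:
// each team's own records go back into its tenant, so it can graph and alert
// on them, and every record also goes to adminTenant, which sees all teams'
// usage but none of their metric data.
func routeUsage(usage []Usage, adminTenant string) map[string][]Usage {
	out := map[string][]Usage{}
	for _, u := range usage {
		out[u.Tenant] = append(out[u.Tenant], u)
		if u.Tenant != adminTenant { // avoid double-counting the admin's own usage
			out[adminTenant] = append(out[adminTenant], u)
		}
	}
	return out
}
```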

In general, a solid system to monitor and control tenants and their usage would be super helpful for enterprises.

Alerting/Rules UI

Most (if not all) other monitoring systems that enterprises are migrating from let users configure alerts from a UI and an API – but not Cortex or Prometheus. Alerts are critical and everyone uses them, but very few people are okay with a config-driven workflow for alerts. We firmly believe version-controlled, config-driven alerting is a better system, but it’s a massive step up for most users and one that is sometimes prohibitive to adopt. We have the opportunity to configure alerts via a UI in Cortex because the config is API driven, and we should exploit it as much as possible. This would probably be a Grafana feature, and I think it would improve the usability and adoption of Cortex.

Downsampling and Variable Retention

If possible, users want to store things forever, and a lot of companies have use-cases and requirements to store data for five to seven years. They don’t necessarily need all the metrics, and they almost certainly don’t need them at high resolutions like 15 seconds. Downsampling and retention of specific metrics is something many other monitoring systems provide and something that enterprises expect. We’ve seen some demand for this, but I expect the requests will be stronger in 2020.
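To make the storage savings concrete, here is a minimal Go sketch of downsampling: raw samples are grouped into fixed time windows and only per-window aggregates (count, sum, min, max) are kept. Thanos’ downsampling stores similar aggregates, but the `Sample` and `Aggregate` types and the `downsample` function here are illustrative assumptions, not its code. At a 15-second scrape interval, a 5-minute window collapses 20 samples into one aggregate.

```go
package main

import "math"

// Sample is one raw data point; T is a Unix timestamp in milliseconds.
type Sample struct {
	T int64
	V float64
}

// Aggregate summarises every raw sample that falls into one window.
type Aggregate struct {
	Start         int64 // window start, ms
	Count         int
	Sum, Min, Max float64
}

// downsample groups time-ordered samples into fixed windows of width
// milliseconds (e.g. 5*60*1000 for 5 minutes) and keeps only aggregates.
// It assumes non-negative, sorted timestamps.
func downsample(samples []Sample, width int64) []Aggregate {
	var out []Aggregate
	for _, s := range samples {
		start := s.T - s.T%width // align to the window boundary
		if len(out) == 0 || out[len(out)-1].Start != start {
			out = append(out, Aggregate{Start: start, Min: math.Inf(1), Max: math.Inf(-1)})
		}
		a := &out[len(out)-1]
		a.Count++
		a.Sum += s.V
		a.Min = math.Min(a.Min, s.V)
		a.Max = math.Max(a.Max, s.V)
	}
	return out
}
```

Keeping both `Sum` and `Count` lets averages be recovered later as `Sum/Count`, which is more flexible than storing a precomputed mean.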

We should look at how to do downsampling transparently, in a way that users can write normal PromQL but have Cortex automatically adjust the resolution. We also should make deletes effective so that we can implement variable retention. Some metrics will be stored only for three months, while others will be stored for a year. Thanos already has some support for downsampling and we’re hoping to collaborate and improve on that.

Community and Documentation

First impressions of Cortex used to be that it was complicated and hard to deploy: it lacked documentation and example configurations, and it had many moving parts. Over the last 12 months we’ve addressed all of these with a new website, published production configs, and a single-process, single-binary mode. But public perception is still very much based on the old state of things, and we should work on changing this. If you remove the NoSQL store, the complexity is almost equivalent to Thanos’: for almost every component we run (rulers, ingesters, queriers, etc.), Thanos has an equivalent component. And while dropping the NoSQL store would help, current users can already use a managed solution (Bigtable/Dynamo). We should evangelize the single-process mode as a way to start with Cortex and scale it up from there. I really like the premise of Marco Pracucci’s talk: “Scaling Prometheus with Cortex: one time series at a time”.

But beyond that, I think we are missing success stories for Cortex. The only folks making noise about Cortex are vendors. We should try to do case studies and blog posts with EA (the video game company behind FIFA and other popular games), GoJek, and others who are leveraging Cortex at massive scale and seeing success. We should make sure that our users are encouraged to – and have the opportunity to – give conference talks. For those trying to compare Thanos and Cortex for an internal SaaS, seeing these other companies succeed would make a huge difference.

We should also add guides that help users get up-and-running with Cortex quickly and explain how to scale that up. Making it clear that they can also buy support contracts and services might help, and that should be done in a fair, vendor-neutral way that doesn’t antagonize the community.

On top of that, this year we need to focus on the UX and push for even more config clarity: We should make sure our config is consistent, clear, and documented well; we should try to automate the config generation as much as possible; and we should make our new website more useful for users who are looking for docs or getting started. With a consistent and strong new user experience, I am confident that adoption will skyrocket!

The Year Ahead

Everything outlined above will be my primary focus for 2020, and if we manage to do it all, I think both Thanos and Cortex will thrive, each with their own set of users. Through our investment in the object store-only mode, we’re contributing fixes and performance improvements back to Thanos, and they benefit Cortex, too. If everything goes well, I think we can build interoperability into both projects: Start with Thanos, and once you’re big enough or require multi-tenant capabilities, transparently move to Cortex with the older data written by Thanos still queryable. Maybe both projects will end up merging – who knows?!? Regardless of what transpires, I think 2020 will be the year of enterprise Prometheus adoption!

