![[PromCon Recap] A Look at TSDB, One Year In](/static/assets/img/blog/TSDB_1.jpg?w=752)

[PromCon Recap] A Look at TSDB, One Year In

This is a writeup of the talk I gave at PromCon 2019.

TSDB is the storage engine of Prometheus 2.x. Based on Gorilla compression, it started out in an independent repo, which eventually attracted 60+ contributors and 771 stars. There were 500+ commits after the Prometheus 2.0 release. The repo was archived in August 2019, and it now lives in the Prometheus repo, inside the tsdb directory.

To use it as a package, just import github.com/prometheus/prometheus/tsdb.

Here are some highlights of the development over the past year.

Enhancements and Features

1. Backfilling

Until recently, there was a blocker for backfilling: you could not have overlapping TSDB blocks. With this pull request, queries and compactions gained support for overlapping blocks, and this enabled backfilling. Overlapping blocks are eventually compacted into non-overlapping ones. Prometheus doesn’t have a recommended way to backfill yet, but the community is contributing to take this forward.
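To make "overlapping blocks" concrete, here is a minimal sketch of how overlapping time ranges could be grouped before compaction. The `BlockRange` type and `OverlappingGroups` function are hypothetical names for illustration, not the TSDB API, and the sketch assumes half-open ranges `[MinT, MaxT)`.

```go
package main

import (
	"fmt"
	"sort"
)

// BlockRange is a hypothetical stand-in for a block's time range.
// The range is treated as half-open: [MinT, MaxT).
type BlockRange struct {
	MinT, MaxT int64
}

// OverlappingGroups sorts the ranges by MinT and returns every group of
// two or more ranges that overlap, directly or transitively.
func OverlappingGroups(blocks []BlockRange) [][]BlockRange {
	if len(blocks) == 0 {
		return nil
	}
	// Copy before sorting so the caller's slice is left untouched.
	sorted := append([]BlockRange(nil), blocks...)
	sort.Slice(sorted, func(i, j int) bool { return sorted[i].MinT < sorted[j].MinT })

	var groups [][]BlockRange
	cur := []BlockRange{sorted[0]}
	maxT := sorted[0].MaxT
	for _, b := range sorted[1:] {
		if b.MinT < maxT {
			// b starts before the current group ends, so it overlaps.
			cur = append(cur, b)
			if b.MaxT > maxT {
				maxT = b.MaxT
			}
			continue
		}
		if len(cur) > 1 {
			groups = append(groups, cur)
		}
		cur = []BlockRange{b}
		maxT = b.MaxT
	}
	if len(cur) > 1 {
		groups = append(groups, cur)
	}
	return groups
}

func main() {
	blocks := []BlockRange{{0, 100}, {50, 150}, {150, 200}, {300, 400}}
	fmt.Println(OverlappingGroups(blocks))
}
```

In this toy input only the first two ranges overlap; a block that starts exactly where the previous one ends is not counted as overlapping.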

2. WAL Compression

WAL compression, which was added by Chris Marchbanks, uses Google’s Snappy algorithm. It has no visible effect on performance, and it reduces WAL size by up to 50%. This option is disabled by default and can be enabled with a flag.

3. WAL Read Optimizations

About a year ago, Brian Brazil made WAL processing up to 3.75x faster in wall time, with up to 4.25x less CPU time, through better reuse of objects and parallelization in this PR.

Recently, Chris added another optimization: WAL records are decoded from disk in one goroutine and processed in another. In some real-world tests, it improved WAL replay time by 23%. This will be included in v2.15.0.
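The decode/process split can be sketched as a two-stage pipeline connected by a channel. This is only an illustration of the pattern, not the Prometheus code: the `sample` type, the toy `series=value` text format, and the `Replay` function are all assumptions made for the example (real WAL records are binary).

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// sample is a hypothetical decoded record.
type sample struct {
	series string
	value  float64
}

// decode parses a toy "series=value" record.
func decode(raw string) (sample, error) {
	name, val, ok := strings.Cut(raw, "=")
	if !ok {
		return sample{}, fmt.Errorf("malformed record %q", raw)
	}
	v, err := strconv.ParseFloat(val, 64)
	if err != nil {
		return sample{}, fmt.Errorf("bad value in %q: %w", raw, err)
	}
	return sample{series: name, value: v}, nil
}

// Replay decodes records in one goroutine while the calling goroutine
// applies them to an in-memory map, so the two stages run concurrently.
func Replay(records []string) (map[string]float64, error) {
	decoded := make(chan sample, 64)
	errc := make(chan error, 1)

	go func() {
		defer close(decoded)
		for _, r := range records {
			s, err := decode(r)
			if err != nil {
				errc <- err // buffered, so this never blocks
				return
			}
			decoded <- s
		}
	}()

	head := make(map[string]float64)
	for s := range decoded {
		head[s.series] = s.value // last write wins, in record order
	}

	// The decoder sends its error before closing the channel, so any
	// error is already waiting here once the loop above has finished.
	select {
	case err := <-errc:
		return nil, err
	default:
		return head, nil
	}
}

func main() {
	head, err := Replay([]string{"up=1", "errors=0", "up=0"})
	if err != nil {
		fmt.Println("replay failed:", err)
		return
	}
	fmt.Println(head)
}
```

The buffered channel lets decoding run ahead of processing, which is where the overlap in work comes from.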

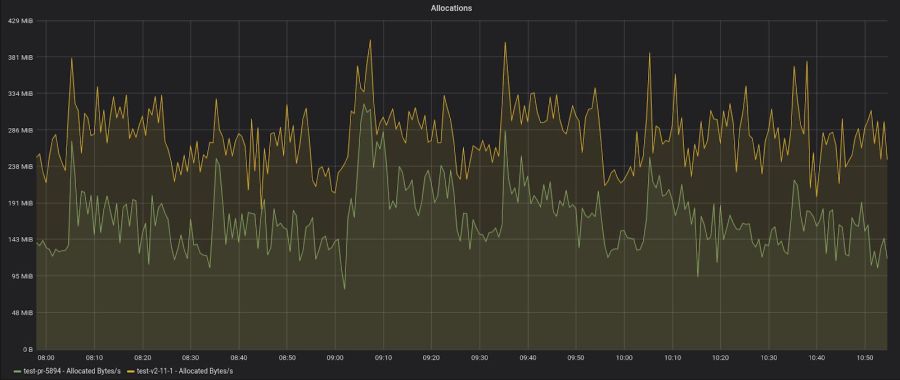

4. Allocation/Memory Optimization for Compaction

Before this optimization, we used to re-open the persistent blocks separately every time we had to compact them. This caused a spike in memory usage, as we were holding duplicate copies of the block indices. With this PR, we re-use the blocks that are already open in the database and roughly halve the memory requirement of compaction.

Recently I was trying to reduce the memory requirement of the compaction by changing the way we write the index. Though that was a failed attempt, it led to the following allocation improvements for the compaction. (The numbers are for the allocated objects.)

5. Various Optimizations for the Queries

When your query hits multiple TSDB blocks, the postings coming from them have to be intersected. With this PR, that intersection was optimized.
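For readers unfamiliar with postings, intersecting two of them comes down to walking two sorted lists of series IDs in lockstep. The sketch below shows that basic idea; the `Intersect` function is illustrative and is not the optimized implementation from the PR.

```go
package main

import "fmt"

// Intersect returns the IDs present in both inputs.
// Both slices must be sorted in ascending order.
func Intersect(a, b []uint64) []uint64 {
	var out []uint64
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			i++ // a is behind, advance it
		case a[i] > b[j]:
			j++ // b is behind, advance it
		default:
			out = append(out, a[i]) // present in both lists
			i++
			j++
		}
	}
	return out
}

func main() {
	fmt.Println(Intersect([]uint64{1, 3, 5, 7}, []uint64{3, 4, 7, 9}))
}
```

Each list is visited once, so the cost is linear in the combined length of the two lists.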

This particular optimization converts regex queries of the form {foo=~"bar|baz"} to {foo="bar"} or {foo="baz"}. Regex matching is generally more expensive than matching a fixed label value, so breaking it down like this speeds up the query. Such queries are commonly found in Grafana dashboards, so this optimization will help load the dashboards a little quicker.
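A rough way to picture the rewrite: if the regex is nothing more than literal values joined by `|`, it can be replaced by a set of exact matches. The `SetMatches` helper below is a hypothetical simplification (it bails out on any regex metacharacter) and is not the function used in Prometheus.

```go
package main

import (
	"fmt"
	"strings"
)

// SetMatches reports whether pattern is a plain alternation of literal
// values such as "bar|baz", and if so returns the individual values.
// Any other regex metacharacter makes it decline, in which case the
// caller would fall back to real regex matching.
func SetMatches(pattern string) ([]string, bool) {
	if pattern == "" {
		return nil, false
	}
	if strings.ContainsAny(pattern, `.+*?()[]{}^$\`) {
		return nil, false
	}
	return strings.Split(pattern, "|"), true
}

func main() {
	fmt.Println(SetMatches("bar|baz"))
	fmt.Println(SetMatches("ba.*"))
}
```

With the values split out, each one can be looked up directly in the index instead of testing every label value against a compiled regex.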

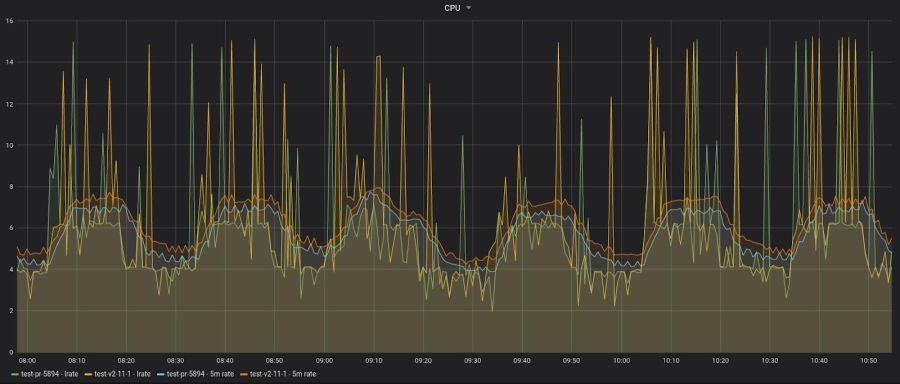

During a query, we have to iterate over all the chunks to process the samples. For this, we used to create an Iterator object per chunk, and sometimes there are millions of chunks to process. With this optimization, we now reuse the Iterator and reduce the allocations required for a query. For some heavy queries, this can noticeably reduce memory and CPU usage and make query execution a little faster.
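The reuse pattern looks roughly like this: the chunk accepts an existing iterator and resets it instead of allocating a new one. The `Chunk` and `Iterator` types here are toy stand-ins written for this post, assuming uncompressed float samples; they are not the real chunk encoding types.

```go
package main

import "fmt"

// Chunk is a toy chunk holding uncompressed samples.
type Chunk struct {
	samples []float64
}

// Iterator walks over the samples of one chunk at a time.
type Iterator struct {
	samples []float64
	pos     int
}

// Iterator returns an iterator over c. If reuse is non-nil it is reset
// and returned, so no new object is allocated.
func (c *Chunk) Iterator(reuse *Iterator) *Iterator {
	if reuse == nil {
		reuse = &Iterator{}
	}
	reuse.samples = c.samples
	reuse.pos = -1
	return reuse
}

// Next advances to the next sample and reports whether one exists.
func (it *Iterator) Next() bool {
	it.pos++
	return it.pos < len(it.samples)
}

// At returns the current sample. Call it only after Next returned true.
func (it *Iterator) At() float64 {
	return it.samples[it.pos]
}

// Sum adds up all samples using a single iterator for every chunk.
func Sum(chunks []*Chunk) float64 {
	var it *Iterator
	var total float64
	for _, c := range chunks {
		it = c.Iterator(it) // allocated once, on the first chunk only
		for it.Next() {
			total += it.At()
		}
	}
	return total
}

func main() {
	chunks := []*Chunk{{samples: []float64{1, 2}}, {samples: []float64{3}}}
	fmt.Println(Sum(chunks))
}
```

With millions of chunks, the difference is one iterator allocation per query loop instead of one per chunk.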

Thanks to Krasi Georgiev and a bunch of GSoC students, we now have something called Prombench, which we use to run heavy benchmarks on Prometheus for every release comparing it with the previous release. I happen to have graphs for the above optimization and you can see them below.

6. TSDB Analyse Command

The analyse command was added to the TSDB CLI in this PR. It helps you find useful information about churn and cardinality.

7. Read-Only TSDB

This is an interface/implementation for a read-only mode of TSDB. It’s safe to use this interface with a live TSDB. The TSDB CLI already uses it.

What’s Next

1. Isolation

TSDB has A, C, and D from ACID, but not I. Brian Brazil and Goutham Veeramachaneni have made some progress on this in the past, but didn’t complete the work. The plan is to rebase and continue their work and add it in early 2020.

2. Lifting Index Size Limit

TSDB stores recent data in memory and older data on persistent storage in the form of blocks. Each block has its own index to map the series to the actual chunks that contain the data samples. Currently the index size is limited to 64GiB because of the limit in addressable space. We use 32 bits for postings (byte sequence of the series in the index), and the posting is 16-byte aligned (hence 16*2^32=64GiB). Hitting the 64GiB limit is not very common, but it’s not hard to hit it. We’ve seen the index for 8M series, with a block spanning 9 days, take 20GB.
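The limit follows directly from the arithmetic above: a 32-bit reference multiplied by a 16-byte alignment addresses 2^36 bytes. A tiny sketch of that calculation, with `MaxIndexBytes` being a name made up for this example:

```go
package main

import "fmt"

// MaxIndexBytes returns the number of bytes addressable with references
// of refBits bits when every entry is aligned to align bytes.
func MaxIndexBytes(refBits uint, align uint64) uint64 {
	return align << refBits
}

func main() {
	limit := MaxIndexBytes(32, 16)
	fmt.Printf("%d bytes = %d GiB\n", limit, limit>>30) // 68719476736 bytes = 64 GiB
}
```

Widening the reference or the alignment raises the ceiling, which is the kind of trade-off involved in lifting the limit.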

During Google Summer of Code 2019, I mentored Alec Wang throughout this work on lifting the size limitations of the index. All the details about the solution that I mentioned in the talk are in the linked blog post.

3. Improved Checkpointing of WAL

Currently the WAL is checkpointed at regular intervals, where we pack all the records together. But replaying the checkpoint still consists of replaying the WAL records one by one. In this PR I am attempting to checkpoint the actual chunks present in the Head block (the in-memory block), inspired by ongoing WAL work in Cortex. With this, replaying the checkpoint would only consist of loading the chunks and putting them in the Head, without ingesting individual samples.

Bonus: We have extended this idea to not only make checkpointing and restarts faster, but to also reduce the memory footprint of the Head block by memory-mapping the chunks that we write to disk instead of keeping them in memory. You can subscribe to this GitHub issue to keep yourself updated on this, and a design doc will be floated soon.