How Loki Helped Paytm Insider Save 75% of Logging and Monitoring Costs

Paytm Insider’s motto is “to make every day less everyday,” says Hitesh Pachpor, Technical Manager for the popular platform for buying event tickets in India. “The platform is driven by the fan that is inside of us, who wants to have the most hassle-free experience when booking tickets online.”

So when tickets for a big cricket match or a major music artist’s concert go on sale, the company’s DevOps/SRE team knows it has to be prepared for a big surge in traffic, which on any given day already tops 1 million hits.

The company, which started as an event management business in Mumbai four years ago, runs a homegrown AWS, Kubernetes, and Grafana stack. “Our goal is to make sure that the systems are always up, so that our users on the mobile and web apps always have access to the platform,” says DevOps Engineer Aayush Anand.

Challenges Caused by Growing Scale

But as the platform grew in scale, the team started running into problems.

In order to debug any issues with the platform that affected user experience, “we needed logs at all times,” says DevOps Engineer Piyush Baderia. “With our growing scale, the cost of our logging and monitoring stack was increasing along with our scale, which was not ideal for us.”

Secondly, during those spikes in traffic caused by big on-sale events, “the volume of logs would increase exponentially, as well as the log-drop rate and the time to buffer and ship logs,” says Baderia. “So an issue could be occurring for customers, but we were not aware of it or we were not able to debug it properly.”

“With the high volume of logs, scaling the Elastic cluster while controlling costs was very difficult,” he adds, “and often nodes would run out of memory, causing restarts and dropped logs.”

Monitoring Everywhere

Perhaps most crucially, “we had no central place for all of our monitoring solutions,” says Baderia.

The team used different monitoring solutions for different services. Says Anand: “We had CloudWatch for logging our different applications that run within the AWS environment. Then we had our Kubernetes stack Prometheus monitoring, where we would track metrics such as the pod usage, CPU utilization, and such and such. And then we also had our application stack where we used ELK for tracing the application logs and for finding bugs and performance limitations of different services.”

As a result, “there were many different dashboards that you had to go to for looking at issues,” says Baderia. “It was becoming very difficult for us to correlate. And our alerts were not centralized at all. For a single issue, we would get three different alerts from three different places, which would also create a lot of noise and make it more difficult for us to debug those issues.”

Finding a Centralized Solution

The team realized it needed a way to “map all these logs together so that they could make more sense and so that we could find the problems in our production environment in a much faster and smoother way,” says Anand.

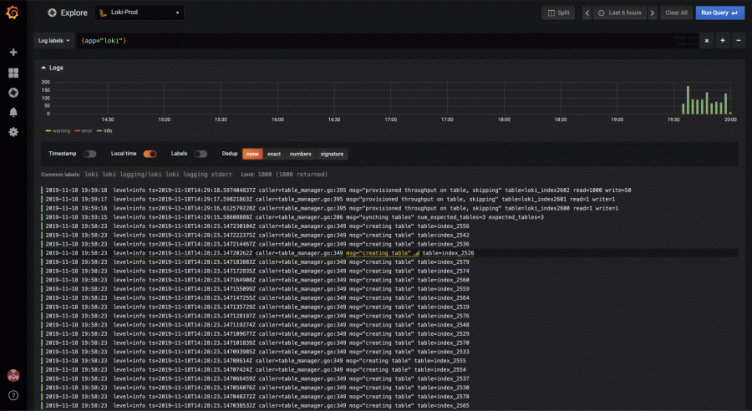

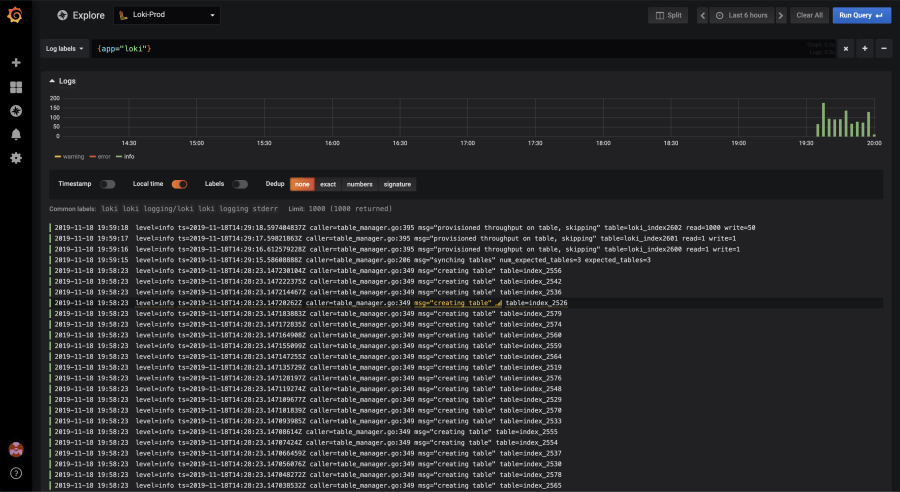

They were already using Grafana with Prometheus and began to explore Loki. “We found out that with promtail, we can actually tail application logs and have the metrics for them in the same dashboard,” says Anand. “Using Loki deployed on Kubernetes with Grafana made sense to us.”

This solution was implemented around July 2019. “Loki was fairly easy to use and integrate into our stack,” says Baderia. “It comes with a default EBS store on AWS and has a good estimation of the resource usage and everything that we might need in the default Helm chart. But after looking through the docs and a couple of blogs, we figured that we can also use AWS DynamoDB (to store indices) and AWS S3 as a backend (to store logs), and that lets us scale horizontally as much as possible without actually worrying if our logging stack will go down.”
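To make the index/chunk split concrete, here is a minimal sketch of the storage-related part of a Loki configuration that sends the index to DynamoDB and log chunks to S3. It is built as a Python dict and printed as JSON, which is a subset of YAML 1.2 and should therefore also parse as YAML. The field names follow the S3 + DynamoDB example in the Loki configuration documentation; the region, bucket name, table prefix, start date, and schema version are hypothetical placeholders, not Paytm Insider's actual settings. Newer Loki releases favor other index stores, so check the docs for the version you run.

```python
import json

# Hypothetical placeholders: NOT Paytm Insider's real values.
AWS_REGION = "ap-south-1"
CHUNK_BUCKET = "example-loki-chunks"
INDEX_TABLE_PREFIX = "loki_index_"


def build_loki_storage_config(region: str, bucket: str, index_prefix: str) -> dict:
    """Return the storage-related part of a Loki config as a plain dict.

    Index entries go to DynamoDB and log chunks go to S3, mirroring the
    split described in the text. Credentials are assumed to come from the
    pod's IAM role, so none are embedded in the URLs.
    """
    return {
        "schema_config": {
            "configs": [
                {
                    "from": "2019-07-01",    # illustrative start date
                    "store": "aws",          # index store: DynamoDB
                    "object_store": "s3",    # chunk store: S3
                    "schema": "v11",         # illustrative schema version
                    # Weekly index tables; period is illustrative.
                    "index": {"prefix": index_prefix, "period": "168h"},
                }
            ]
        },
        "storage_config": {
            "aws": {
                "s3": f"s3://{region}/{bucket}",
                "dynamodb": {"dynamodb_url": f"dynamodb://{region}"},
            }
        },
    }


if __name__ == "__main__":
    config = build_loki_storage_config(AWS_REGION, CHUNK_BUCKET, INDEX_TABLE_PREFIX)
    # Print as JSON; paste into the Loki config file alongside the other sections.
    print(json.dumps(config, indent=2))
```

Because both stores are managed AWS services, capacity for the index and chunks grows without resizing volumes on the Loki pod itself, which is the horizontal-scaling benefit Baderia describes.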

With that in place, the team began to reap the benefits. “Once we had all the logs as well as the metrics in the same dashboard, we were able to very quickly find out what caused a spike in the CPU utilization by just mapping the timeframe for the application logs with the metrics,” says Anand. “That decreased our time to response for different production usage substantially. We were able to figure out what’s causing the latencies and what’s causing the bugs in our application in a much faster, smarter way than what we were doing before.”

For example, one of Paytm Insider’s applications was experiencing a slow response time. “With Loki, we were able to find that this call is taking this much amount of time, but when we ran it locally it didn’t take as much time,” says Anand. “So by investigating further we found that the middleware was calling another API, which was taking longer.”

Stellar Results

Today, Loki centralizes logs for 25 services, each of which generates 2,000 logs per minute. The team runs a single Loki pod deployed as a StatefulSet, with Promtail deployed as a DaemonSet.
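As a rough sizing check for that single Loki pod, the per-service figure can be scaled up to an aggregate ingest rate. This sketch assumes the 2,000-logs-per-minute rate holds steadily across all 25 services; on-sale traffic spikes would push the real numbers higher.

```python
LOGS_PER_MINUTE_PER_SERVICE = 2_000
SERVICES = 25


def aggregate_log_rate(per_service_per_min: int, services: int) -> dict:
    """Scale a steady per-service log rate up to totals across all services."""
    per_minute = per_service_per_min * services
    return {
        "per_second": per_minute / 60,
        "per_minute": per_minute,
        "per_day": per_minute * 60 * 24,
    }


if __name__ == "__main__":
    rates = aggregate_log_rate(LOGS_PER_MINUTE_PER_SERVICE, SERVICES)
    print(rates)
```

Under that steady-rate assumption, this works out to 50,000 logs per minute, roughly 833 per second, or about 72 million per day.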

Before Loki, the average response time for debugging a latency issue was about 30 minutes. With Loki, used with Grafana and Prometheus, it’s down to 10 minutes. “And it’s less than that in most cases,” says Baderia.
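Taking the two quoted averages at face value, the improvement can be expressed as a percentage reduction and as a speedup factor. This is a simple illustration using only the 30- and 10-minute figures above; per Baderia, many individual cases are faster still.

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage drop going from `before` to `after`."""
    return (before - after) / before * 100


def speedup(before: float, after: float) -> float:
    """How many times faster `after` is compared with `before`."""
    return before / after


if __name__ == "__main__":
    BEFORE_MIN = 30  # average minutes to debug a latency issue before Loki
    AFTER_MIN = 10   # average minutes with Loki, Grafana, and Prometheus
    print(
        f"{percent_reduction(BEFORE_MIN, AFTER_MIN):.1f}% less time, "
        f"{speedup(BEFORE_MIN, AFTER_MIN):.0f}x faster"
    )
```

Running it prints `66.7% less time, 3x faster`.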

Plus, the team has achieved nearly a 75% reduction in cost for the logging and monitoring stack by using Loki in its environment. “That has been pretty great for us because now, even though we are scaling in terms of user base, our costs are not scaling that much at least for our monitoring and logging stack,” says Baderia.

Centralized alerts have helped save time. “Now with logs and metrics in a single data source, and a single visualization tool, we can have a centralized alerting using Grafana,” says Baderia. “All of our alerts now come from a single place, and we don’t have to look anywhere else. We can just go directly to the alert and look at the status of the metrics and logs for that duration.”

And now that there’s centralized monitoring, the team has a way to correlate infrastructure and application performance.

Looking Ahead

The Paytm Insider team is working on further cost optimizations and hopes to add more functionality. For instance, the team would like to add Loki as a data source in its dashboards, use Loki labels to enable per-API monitoring, and integrate panels into its stack to achieve horizontal scalability.

But already, Loki has “worked great for us,” says Anand.

“Since the time we installed Loki, there has not been a single incident or an instance where Loki went down for us, regardless of the volume of application logs that we were getting,” adds Baderia.

Baderia mentions in passing that the platform had just experienced a huge spike in traffic that day, because tickets for an India vs. Bangladesh T20 cricket match went on sale.

It’s no big deal now. “It went amazing,” he says. “We did not have a single log drop on Loki or a single metric drop on Prometheus.”