Lifting the Index Size Limit of Prometheus with Postings Compression

Prometheus’s TSDB (TimeSeries DataBase) stores the recent data in the memory and the old data on persistent storage in the form of blocks. Each block has its own index to map the series to the actual chunks that contain the data samples.

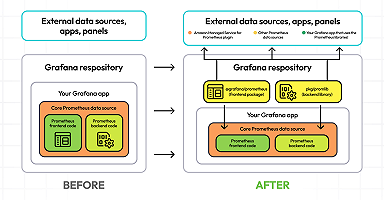

During Google Summer of Code 2019, I mentored Alec Wang throughout this work on lifting the size limitations of the index mentioned above. The work described below is up for review and should be merged soon.

Before we dive into the problem and solution, let’s look at the index format, which will introduce you to “postings.”

The Index Format

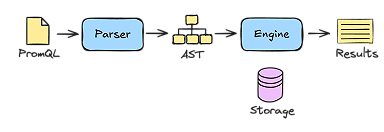

I will be talking about Prometheus’s index format and the term “posting.” The detailed format of the TSDB’s index format can be found here; I will only be focusing on the relevant part.

This is a high level view of the index format:

┌────────────────────────────┬─────────────────────┐

│ magic(0xBAAAD700) <4b> │ version(1) <1 byte> │

├────────────────────────────┴─────────────────────┤

│ ┌──────────────────────────────────────────────┐ │

│ │ Symbol Table │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Series │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Label Index 1 │ │

│ ├──────────────────────────────────────────────┤ │

│ │ ... │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Label Index N │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Postings 1 │ │

│ ├──────────────────────────────────────────────┤ │

│ │ ... │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Postings N │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Label Index Table │ │

│ ├──────────────────────────────────────────────┤ │

│ │ Postings Table │ │

│ ├──────────────────────────────────────────────┤ │

│ │ TOC │ │

│ └──────────────────────────────────────────────┘ │

└──────────────────────────────────────────────────┘The Series section is a list of info about all the series in the corresponding block. Each series has a serieID or reference which is the byte offset at which the series info starts. The series info includes its label values and also the reference of all its chunks in the block. So, as the time range of the block grows (more chunks), so does the data in a single series info.

The Postings 1..N represent the postings list, each label-value combination and the list of all the series references that have that label-value combination. This series reference is called a posting.

The Issue

Currently the posting is stored as a 32-bit unsigned integer, and it is 16-byte aligned. Hence the total addressable space is limited to 16*2^32=64GiB.

We have seen cases where a Prometheus handling 8 million series with block size limited to 9 days (calculated as 1/10th the retention period or 31 days, whichever is lower) had the index size reach up to 18 GiB. If we extrapolate this to a 31-day limit, it would be 62 GiB. If we again extrapolate to 10 million series, a scale which is possible with Prometheus, it would touch 77 GiB, a size which Prometheus currently cannot handle. Also note that 64 GiB is reachable at a lower scale too if the label values are big (or more in number) per series. This limit is also easily reachable in software like Thanos, which works with gigantic TSDB blocks.

Solution

As obvious as it looks, the solution to this is to use more than 32 bits for a posting (64 bits was the next in line). Simple, isn’t it? I wish.

Roadblocks:

Jumping to 64 bits directly would increase the index size.

Loading 64 bits per posting from disk is slower than 32 bits. Iterating over millions of postings would result in a painful performance hit. It’s all about performance when it comes to Prometheus queries, so we couldn’t compromise on that.

The increase in size for the postings list was tolerable, but not the slowdown of queries. So we had to compress the postings (decompression is usually faster than loading 32 more bits). Alec experimented with the following compression ideas for 64-bit postings:

Store the first posting, then store the delta to that (not the delta to the previous element, but the delta to the first number only). Find the minimum number of bits required to store the deltas. All the deltas will use those many bits in order to be able to do binary search.

Same as idea 1, but use blocks of postings, like 4KB blocks, after which we reset the first number and start a new block. Also be able to do binary search.

Same as idea 2, but store deltas with the previous number. Binary search to the right block and then iterate.

Same as idea 3, but use varint inside the block.

Roaring bitmaps. This needs a blog post of its own, so here you go.

After some thorough testing on synthetic data and some real-world data for the performance of iterating the postings list and seeking values in the postings list, we ended up converging on a subset of roaring bitmaps, which is prefix compression. This would consist of the blocks of postings like in Idea #2, but here in each block we store the first 48 bit of the postings as key, and the rest of the block consists of repeating 16 bits of all the postings with the common key.

Example (6-bit postings with key as 4 bits):

Original postings

| 011001 | 011010 | 101100 | 101101 | 101110 | 101111 |Prefix compressed postings

| 0110 | 01 | 10 | | 1011 | 00 | 01 | 10 | 11 |With 48 and 16 both being byte-aligned, the performance of iterating and seeking a postings list is comparable/similar to current numbers.

Epilogue

Kudos to Alec for the awesome work he did during his GSoC, and thanks to Goutham Veeramachaneni for his inputs during the process.