Deduping HA Prometheus Samples in Cortex

One of the best practices for running Prometheus in production environments is to use a highly available setup, in which multiple Prometheus instances all scrape the same targets. This means multiple instances have all your metrics data, so if one fails, the data is still available on another. Ideally, each instance would run on a separate machine.

Until recently, Cortex was not able to accept samples from an HA Prometheus setup unless users added a label with a unique value per Prometheus. This, unfortunately, resulted in storing duplicated metrics. A common workaround was to configure only one Prometheus replica to use remote write. If that replica failed, the best-case scenario was a gap in metrics while it restarted. Now, Cortex can seamlessly accept samples from multiple Prometheus replicas while deduplicating them into one series per HA setup in real time.

The Solution

We wanted a solution that would allow multiple Promethei, scraping the same jobs, to write to the same Cortex instance without any client-visible errors, while only storing a single copy of each time series so that cost wouldn’t be doubled.

To accomplish this, we rely on the client-side configuration of unique external labels for each HA instance to deduplicate before storage. The reserved labels, such as prom_ha_cluster and prom_ha_instance (referred to below as the cluster label and the replica label), are used to differentiate between replicas so that Cortex stores samples from only a single replica in a Prometheus HA cluster at a time. These labels can be configured per Cortex tenant.

How It Works

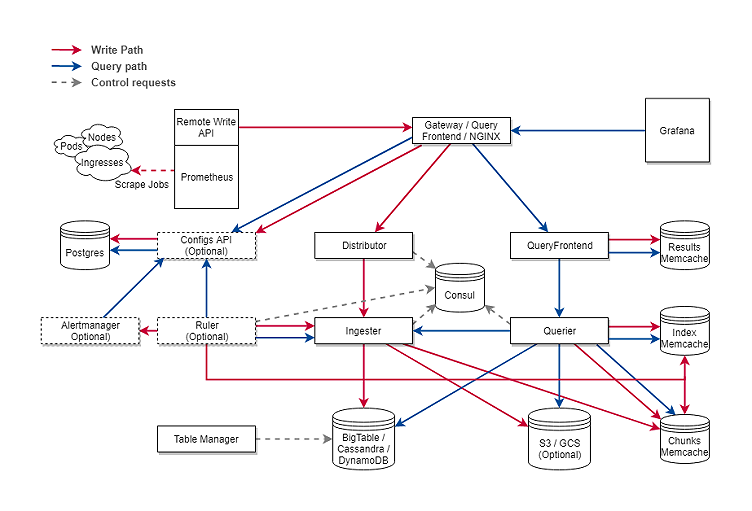

HA sample handling is done in the distributor. First, we check to see if an incoming sample contains both the previously mentioned labels. If not, we accept it by default. If both labels are found, we check the distributor’s local cache to see which replica it is currently accepting samples from for that cluster, and use that to decide what to do with the sample. Internally, these are referred to as “elected” replicas. On each successful write to the KV store for a given cluster/replica, we store the timestamp when we elected that replica.
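The first check, whether a sample carries both HA labels, could look something like the sketch below. This is an illustration in Python rather than Cortex's actual Go code; the function name `ha_labels` and the plain-dict representation of a label set are assumptions made for the example, and the label names are the ones mentioned earlier in this post.

```python
from typing import Dict, Optional, Tuple

# Label names taken from this post; in Cortex they are configurable per tenant.
CLUSTER_LABEL = "prom_ha_cluster"
REPLICA_LABEL = "prom_ha_instance"


def ha_labels(labels: Dict[str, str]) -> Optional[Tuple[str, str]]:
    """Return (cluster, replica) if both HA labels are present, else None.

    Hypothetical helper: a None result means the sample is not treated as
    an HA sample and is accepted by default.
    """
    cluster = labels.get(CLUSTER_LABEL)
    replica = labels.get(REPLICA_LABEL)
    if cluster is None or replica is None:
        return None
    return cluster, replica
```

Only samples for which this returns a pair would go on to the cache lookup.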

The decision on whether or not to accept a sample with both labels is as follows:

Is there an entry in our local cache for the cluster label?

- If not, perform a compare and swap operation (CAS) with the cluster/replica data to the KV store.

- If there is an entry, does the replica label match?

  - If not, we need to check the timeouts. If the failover timeout has passed, we’ll write this new replica into the KV store via a CAS and start accepting samples from this replica. Otherwise we don’t accept the sample.

  - If it does, check the update timeout.

    - If the update timeout hasn’t been reached, we can just accept the sample.

    - If the update timeout has passed, we should perform a CAS and use the result to decide if we want to accept the sample. There has potentially been a write to the KV store by another distributor, so we don’t want to write the timestamp from this distributor as the updated elected time.

Note that in the case where the replica labels do not match but the failover timeout hasn’t passed, we still return a successful status code via HTTP 202. This way the Prometheus replicas which aren’t the current elected replica within their HA setup won’t receive errors for all their remote write calls, and therefore won’t fill their logs with error messages. As far as each replica is concerned, their remote write calls are successful.
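The decision steps above can be condensed into a small function. The following is a rough Python sketch, not the distributor's real implementation: the names `Action`, `Elected`, and `decide` are hypothetical, the cache is modeled as a plain dict, and treating a timeout as "passed" when the elapsed time is strictly greater than it is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Action(Enum):
    ACCEPT = "accept"  # store the sample
    DROP = "drop"      # discard the sample, but still reply with HTTP 202
    CAS = "cas"        # go to the KV store; the CAS result decides


@dataclass
class Elected:
    replica: str
    elected_at: float  # time of the last successful KV write, in seconds


def decide(cache: Dict[str, Elected], cluster: str, replica: str, now: float,
           update_timeout: float, failover_timeout: float) -> Action:
    """Mirror the decision list for a sample that carries both HA labels."""
    entry = cache.get(cluster)
    if entry is None:
        return Action.CAS  # no elected replica known locally for this cluster
    elapsed = now - entry.elected_at
    if entry.replica != replica:
        # A different replica may only take over after the failover timeout.
        return Action.CAS if elapsed > failover_timeout else Action.DROP
    # Same replica: only touch the KV store once the update timeout has passed.
    return Action.CAS if elapsed > update_timeout else Action.ACCEPT
```

For example, with an entry electing replica1 at time 100, an update timeout of 15 seconds, and a failover timeout of 30 seconds (values chosen only for illustration), a sample from replica2 at time 110 is dropped, while the same sample at time 140 triggers a CAS.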

This HA tracking code was designed to minimize writes to the KV store. The assumption is that the KV store is some kind of distributed KV store with consensus, and so writes could be expensive.

The deduplication implementation makes use of an operation called Compare and Swap. Distributed KV stores such as Consul provide this functionality using consensus.

For those unfamiliar with Compare and Swap operations, they’re essentially a way to guarantee atomic updates to a key in the KV store. Cortex’s deduplication code needs this because multiple distributors may try to write the same key at the same time. For the HA tracking in Cortex, the internal CAS operation first gets the current value for the key. When we do this, we’re given not only the replica value, but also a revision number for that value. We then decide whether or not to make the actual CAS call based on the value in the KV store, and, if we do, we provide that revision number.
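To make the read-then-conditional-write pattern concrete, here is a toy in-memory store with revision numbers. It is a sketch in Python under stated assumptions: `RevisionedKV` is a hypothetical class written for this example, it is not the KV client Cortex uses, and using revision 0 to mean "key does not exist" is a convention chosen here.

```python
import threading
from typing import Dict, Optional, Tuple


class RevisionedKV:
    """Toy in-memory stand-in for a KV store that supports compare-and-swap."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._data: Dict[str, Tuple[str, int]] = {}
        self._next_revision = 1

    def get(self, key: str) -> Tuple[Optional[str], int]:
        """Return (value, revision); revision 0 means the key is absent."""
        with self._lock:
            return self._data.get(key, (None, 0))

    def cas(self, key: str, value: str, expected_revision: int) -> bool:
        """Write value only if the key's revision still equals expected_revision."""
        with self._lock:
            _, current = self._data.get(key, (None, 0))
            if current != expected_revision:
                return False  # someone else wrote since our read
            self._data[key] = (value, self._next_revision)
            self._next_revision += 1
            return True
```

A caller reads the value and revision with `get`, decides whether a write is needed, and passes the revision it saw to `cas`. If another writer got in first, `cas` returns `False` and the caller can re-read instead of overwriting.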

Finally, when storing samples from HA replicas with these cluster/replica labels, we strip the replica label when we decide to accept a sample. This means that within Cortex you’ll only have a 1:1 series mapping for your current series instead of a series per replica. The cluster label is kept.
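As a small illustration of why stripping the replica label collapses the replicas into one series, consider the sketch below. It is written in Python for brevity; `strip_replica_label` is a hypothetical helper, and label sets are modeled as plain dicts.

```python
from typing import Dict

# Replica label name from this post; in Cortex it is configurable per tenant.
REPLICA_LABEL = "prom_ha_instance"


def strip_replica_label(labels: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the label set without the replica label."""
    return {name: value for name, value in labels.items() if name != REPLICA_LABEL}


from_replica1 = strip_replica_label(
    {"__name__": "up", "prom_ha_cluster": "cluster1", "prom_ha_instance": "replica1"}
)
from_replica2 = strip_replica_label(
    {"__name__": "up", "prom_ha_cluster": "cluster1", "prom_ha_instance": "replica2"}
)
```

After stripping, `from_replica1` and `from_replica2` are the same label set, so whichever replica is elected writes to the same series.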

Deduping Using Consul

Consul is one of the backing KV stores that Cortex can use.

In Consul, a value called the ModifyIndex is used to enable the CAS operation. HTTP GET calls will give you the key/value you queried for but also the ModifyIndex. The client is allowed to update the value in the KV store if the ModifyIndex from the last time we read the key hasn’t changed.

Let’s say that from a given distributor we’ve read the key cluster1, and we get back the value replica2 and a ModifyIndex of 10. Next we check the timeouts as mentioned above (that logic is actually all passed to an internal CAS function that takes a callback). If we determine that we want to switch to a new replica, assuming the failover timeout has passed, we make a CAS call to the KV store, giving it the ModifyIndex of 10 from the previous read and the new replica for cluster1, replica1.

The KV store will accept this write if the ModifyIndex hasn’t changed. If, in the meantime, a different distributor has made the same decision (to start the failover from replica2 to replica1) and its CAS operation finished before ours, the ModifyIndex will have changed. It could now be 11 instead of 10, and the write from the distributor we’re looking at will be rejected. That distributor would then store the newly elected replica in its local cache.
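The race described above can be replayed with a toy store. This is a Python sketch under stated assumptions: `ToyConsul` is a hypothetical in-memory class that only imitates the ModifyIndex check, it is not a Consul client and makes no HTTP calls, and the `start_index` parameter exists only so the numbers line up with the example.

```python
from typing import Dict, Tuple


class ToyConsul:
    """In-memory imitation of a ModifyIndex-guarded KV store (not a real client)."""

    def __init__(self, start_index: int = 0) -> None:
        self._data: Dict[str, Tuple[str, int]] = {}
        self._index = start_index

    def put(self, key: str, value: str) -> None:
        """Unconditional write; every write bumps the index."""
        self._index += 1
        self._data[key] = (value, self._index)

    def get(self, key: str) -> Tuple[str, int]:
        """Return (value, ModifyIndex) for an existing key."""
        return self._data[key]

    def cas(self, key: str, value: str, modify_index: int) -> bool:
        """Write only if the key's ModifyIndex still equals modify_index."""
        _, current = self._data[key]
        if current != modify_index:
            return False
        self.put(key, value)
        return True


kv = ToyConsul(start_index=9)
kv.put("cluster1", "replica2")            # stored with ModifyIndex 10

# Two distributors read the same state and both decide to fail over.
_, index_a = kv.get("cluster1")
_, index_b = kv.get("cluster1")

b_ok = kv.cas("cluster1", "replica1", index_b)  # B gets there first
a_ok = kv.cas("cluster1", "replica1", index_a)  # A's index is now stale

# A re-reads and caches whatever replica is now elected.
elected, index = kv.get("cluster1")
```

Here `b_ok` is `True`, `a_ok` is `False`, and the final read returns replica1 with a ModifyIndex of 11, so both distributors end up agreeing on the elected replica even though only one write succeeded.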

What’s Next

With these changes, users of Cortex can now write from each replica in an HA Prometheus setup with only a small configuration update and without Cortex having to store twice the amount of data. In the future, we also have minor changes planned for the interaction between the deduplication code and the KV store in order to further reduce writes.