How to Get Blazin' Fast PromQL

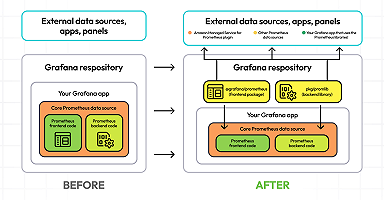

It all starts with Cortex – a horizontally scalable clustered version of Prometheus that was created three years ago and is now a CNCF Sandbox project.

Cortex uses the PromQL engine and the same chunk format as Prometheus – literally the same code base. “What we’ve really done is glue it together differently and use a bunch of distributed systems, algorithms, and consistent hashing to make it horizontally scalable,” Grafana Labs’ VP of Product Tom Wilkie explained at a recent talk. “It’s very similar in its problem statement to Thanos, but we solve it in a completely different way.”

“Cortex gives you a global view [of all your Prometheus servers],” said Wilkie. Cortex ensures there are no gaps in your charts while also providing multi-tenant, long-term storage.

In the Prometheus world the rule of thumb is that cardinality for a single query shouldn’t be too large. Within a single Prometheus server and a single query, there shouldn’t be more than about 100,000 different time series, even when working with big aggregations.

Cortex allows for sending all your metrics into one Cortex cluster from multiple Prometheus servers, which suddenly makes this rule of 100,000 time series a lot harder to stick to. “At Grafana Labs, we’ve got about 15 Kubernetes clusters spread all around the world. And so we’ve got at least 15 Prometheus servers as well,” said Wilkie. At that scale, “it’s very easy to write a query that will touch one million series in a single query and a couple of hundred gigabytes of data – and you expect that query to return quickly.”

During his talk, Wilkie showed how the Grafana Labs team tackled that problem and made large PromQL queries blazing fast.

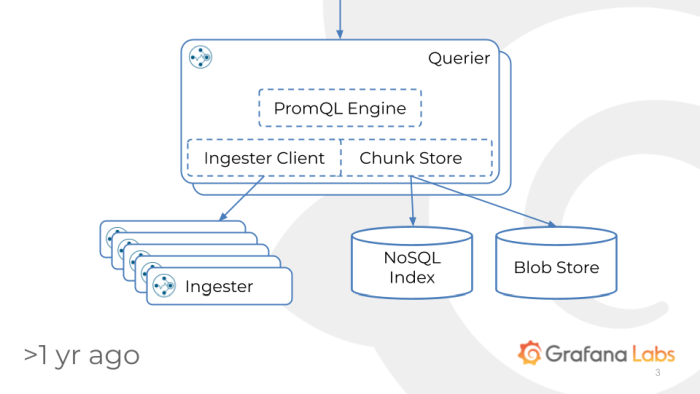

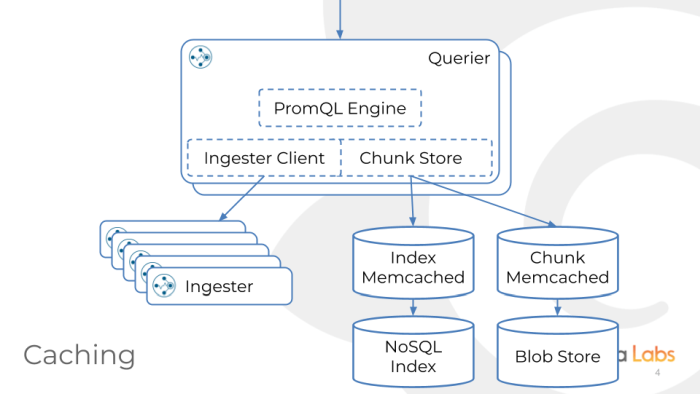

Introducing the Cortex Querier

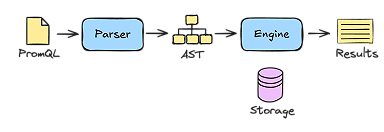

Using the same PromQL engine as Prometheus, Cortex started with the “querier.” It’s a stateless query microservice, which means “you can run as many as you like,” said Wilkie.

About a year ago, while the team was developing Cortex, the usage pattern was straightforward: if someone noticed that queries were slow, the inclination was to run more queriers. The problem was that running more didn’t actually help.

“When queries are slow, it’s very rare to see a high concurrent query load. Most people load a dashboard, it reloads its graphs every five seconds if you’re a real maniac, and you’re seeing maybe four or five panels,” said Wilkie. “You’re seeing one QPS from that dashboard. That’s not a high query load. I don’t need multiple, parallel queriers to make that fast. And adding more queriers is not really going to make it fast.”

There would also be bigger queries to face. “When you send one big query that’s looking back over 30 days of data, that’s just going to hit a single querier. Even worse, it’s actually going to hit a single goroutine within the querier. That is going to sit there and load all the data in the process and send you the result,” said Wilkie. “Effectively the longer you made the query, the slower it was going to get.”

Another factor the team considered was that users would be bound by the speed of the silicon on which they’re running. “Chips are getting slower. They’re not getting faster,” pointed out Wilkie. “They’re getting more cores so we can run more of these in parallel, but that doesn’t help because I don’t have a high concurrent load. I have a low concurrent load, but people are sending me larger queries for 30 days or more.”

The first thing the Grafana Labs team did was add caching, specifically memcached. The work was implemented by Goutham Veeramachaneni, one of the Prometheus maintainers at Grafana Labs. The goal was to make the index cache as effective as possible without over-caching and occasionally returning stale results. “That was really tricky,” said Wilkie.

Luckily, the chunks themselves are immutable. “Once we’ve written them, we can cache them forever. They’re never going to change,” said Wilkie. “Even better, they’re actually content addressed. So if you have identical chunks, they dedupe themselves.”
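To make "content addressed" concrete, here is a minimal sketch of the idea, assuming a SHA-256 digest as the key and an in-memory dict standing in for memcached. The class and method names are hypothetical and are not Cortex's actual chunk key scheme.

```python
import hashlib


class ContentAddressedCache:
    """Toy cache keyed by the hash of the chunk bytes (illustrative only)."""

    def __init__(self):
        self._store = {}  # stand-in for memcached

    def put(self, chunk_bytes):
        # The key is derived from the content, so identical chunks share a key.
        key = hashlib.sha256(chunk_bytes).hexdigest()
        # Chunks are immutable, so an existing entry never needs to be replaced.
        self._store.setdefault(key, chunk_bytes)
        return key

    def get(self, key):
        return self._store.get(key)

    def __len__(self):
        return len(self._store)


cache = ContentAddressedCache()
key_a = cache.put(b"chunk-bytes-for-series-1")
key_b = cache.put(b"chunk-bytes-for-series-1")  # same content, written twice
key_c = cache.put(b"chunk-bytes-for-series-2")
```

Because the key depends only on the bytes, the second write lands on the same entry as the first, which is the deduplication Wilkie describes. Immutability is what makes it safe to never invalidate an entry.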

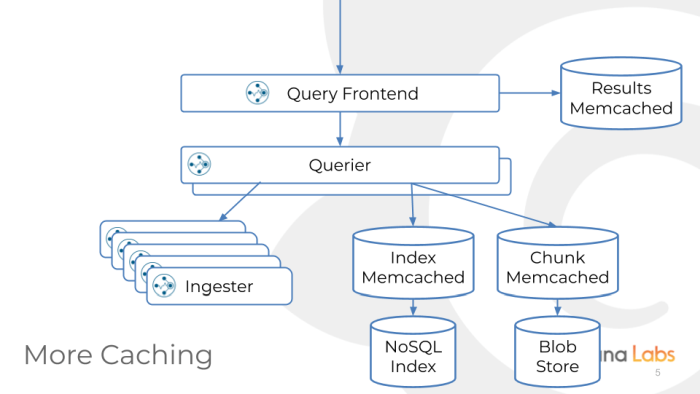

But all that didn’t really solve the problem, said Wilkie. So the team set out to build a query frontend inspired by Trickster, a project written by Comcast.

“We set out to build our own one because we wanted to do things slightly differently,” explained Wilkie. “We actually originally looked at just embedding Trickster into Cortex. One of the biggest problems I found with Trickster – and this is not a dig – is it was quite hard to embed, and they’re actually trying to fix that right now.”

“We don’t like in-process caches because we like to upgrade our software very regularly. We don’t like persistent caches on disk because disks are slow. And so are SSDs,” said Wilkie. “We have an addiction to memcached because it’s fast, and it’s separate from our processes. Our memcacheds can last for days, weeks, months before we have to upgrade them. So we added caching everywhere.”

How Caching Impacts Cortex

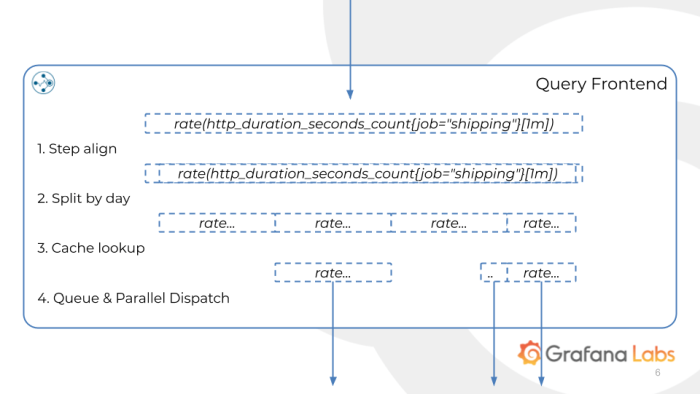

Now for an in-depth look at how the query frontend for Cortex works with caching.

The example above queries the rate of requests to a shipping job.

The first thing that happens is step alignment: the query frontend now moves the start and end times to align with the step by default. “The reason we do this is because if you ask a querier now aligned to 15 seconds, what happens is Prometheus goes back 15 seconds from now, executes an instant query, goes back 15 seconds, executes an instant query, and so on,” said Wilkie. “If you ask a query now four or five seconds later, it goes back 15 seconds and you can see they don’t align. So you’re going to get a subtly and slightly different result.”

Aligning is important because it increases the cacheability of the results. “Without this, we couldn’t cache results with any kind of accuracy,” said Wilkie. “This is the reason why in earlier versions of Grafana, if you mash the refresh, the lines jumped about a bit.”
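Step alignment is easy to picture in code. The sketch below assumes that alignment means rounding the start and end down to a multiple of the step; the function names and timestamps are made up for illustration.

```python
def align_to_step(start_s, end_s, step_s):
    """Round start and end (Unix seconds) down to a multiple of the step."""
    if step_s <= 0:
        raise ValueError("step must be positive")
    return start_s - start_s % step_s, end_s - end_s % step_s


def evaluation_times(start_s, end_s, step_s):
    """Timestamps at which a range query is evaluated, end inclusive."""
    return list(range(start_s, end_s + 1, step_s))


STEP = 15

# The same one-hour panel, refreshed twice, four seconds apart.
first_raw = (1_000_006, 1_003_606)
second_raw = (1_000_010, 1_003_610)

first_aligned = align_to_step(*first_raw, STEP)    # (1000005, 1003605)
second_aligned = align_to_step(*second_raw, STEP)  # (1000005, 1003605)

# Without alignment the two refreshes share no evaluation timestamps at all.
unaligned_overlap = set(evaluation_times(*first_raw, STEP)) & set(
    evaluation_times(*second_raw, STEP)
)
```

After alignment, both refreshes ask for exactly the same timestamps, so the second one can be served from a cached result. Without it, `unaligned_overlap` is empty and nothing from the first request could be reused.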

The next step in the query frontend is to split the query up by day. “The key thing is that these queries are now executed independently, so they are executed on multiple cores or multiple different goroutines,” said Wilkie. “Now we can scale with a multi-core processor. So if you ask me for a 30-day query, I go and issue 30 one-day queries and I execute them in parallel.”
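As a rough sketch of that split-and-fan-out step, the code below assumes half-open `[start, end)` ranges in Unix seconds, cut at UTC midnight. `run_range_query` is a hypothetical stand-in for a call to a downstream querier, and Python threads stand in for the goroutines Wilkie mentions.

```python
from concurrent.futures import ThreadPoolExecutor

DAY_S = 24 * 60 * 60


def split_by_day(start_s, end_s):
    """Split [start_s, end_s) into sub-ranges that never cross a UTC midnight."""
    ranges = []
    cursor = start_s
    while cursor < end_s:
        next_midnight = (cursor // DAY_S + 1) * DAY_S
        ranges.append((cursor, min(next_midnight, end_s)))
        cursor = next_midnight
    return ranges


def run_range_query(sub_range):
    # Hypothetical placeholder: a real frontend would issue a range query to a
    # querier here. This just echoes the range back as a one-element result.
    return [sub_range]


def query_in_parallel(start_s, end_s, max_workers=8):
    sub_ranges = split_by_day(start_s, end_s)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map returns results in input order, so days stay sorted.
        partials = list(pool.map(run_range_query, sub_ranges))
    # Stitch the per-day results back into a single response.
    return [sample for partial in partials for sample in partial]


thirty_days = split_by_day(0, 30 * DAY_S)
```

A 30-day range becomes 30 independent one-day ranges, each of which can run on a different querier. Cutting at fixed day boundaries, rather than relative to "now", also means the same day produces the same sub-query every time it is requested.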

Next, the frontend consults the results cache. “You find out you asked for this day before so you don’t need to ask Prometheus for that. You haven’t seen a certain day in the cache so maybe it got evicted or the cache forgot about it. An individual day might actually get broken down into multiple subqueries,” said Wilkie.
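One way to picture how a single day breaks down into subqueries is to compute the gaps between what the cache already holds and what was requested. This sketch assumes the cache records half-open `(start, end)` extents per query; the function name is hypothetical.

```python
def missing_ranges(start_s, end_s, cached_extents):
    """Return the parts of [start_s, end_s) not covered by any cached extent.

    cached_extents is a list of half-open (start, end) tuples in seconds.
    """
    gaps = []
    cursor = start_s  # everything before the cursor is already accounted for
    for ext_start, ext_end in sorted(cached_extents):
        if ext_end <= cursor:
            continue  # extent ends before the part we still need
        if ext_start >= end_s:
            break  # extent starts after the requested range
        if ext_start > cursor:
            gaps.append((cursor, ext_start))  # uncached hole before this extent
        cursor = max(cursor, ext_end)
        if cursor >= end_s:
            break
    if cursor < end_s:
        gaps.append((cursor, end_s))  # uncached tail
    return gaps


# Two cached extents inside a requested range leave three holes to fetch.
holes = missing_ranges(0, 100, [(20, 40), (60, 80)])  # [(0, 20), (40, 60), (80, 100)]
```

Only the holes need to go to the backend; the cached extents are merged with the fresh results afterwards. Using half-open intervals throughout is one way to avoid fetching or counting a boundary sample twice.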

“You have to be really delicate here. You have to think about inclusive and exclusive boundaries,” added Wilkie. “Cortex is a multi-tenant system, so we effectively run a single, big cluster, and have hundreds of users on a single cluster or with partitioned-off data.”

To that end, “one of the things we really wanted to avoid is one user coming along with a really big query and killing all the other users. So these results actually get queued,” said Wilkie. “Then there’s a scheduler, which is really simple. It just randomly picks a queue so at least we have a really basic quality of service between users. If a user comes and mashes refresh, does a hundred QPS in the queries, everyone gets slow evenly and that user who’s doing a hundred QPS will starve themselves.”
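The queueing scheme Wilkie describes can be sketched in a few lines, assuming one FIFO queue per tenant and a scheduler that picks uniformly at random among tenants with pending work. The `TenantQueues` class is illustrative and not the Cortex implementation.

```python
import random
from collections import defaultdict, deque


class TenantQueues:
    """One FIFO queue per tenant, drained by picking a random non-empty queue."""

    def __init__(self, rng=None):
        self._queues = defaultdict(deque)
        self._rng = rng if rng is not None else random.Random()

    def enqueue(self, tenant, query):
        self._queues[tenant].append(query)

    def dequeue(self):
        """Return (tenant, query) for a random tenant with work, or None."""
        pending = [tenant for tenant, queue in self._queues.items() if queue]
        if not pending:
            return None
        tenant = self._rng.choice(pending)
        return tenant, self._queues[tenant].popleft()


queues = TenantQueues(rng=random.Random(42))
for i in range(100):
    queues.enqueue("noisy", f"noisy-query-{i}")  # one tenant mashing refresh
queues.enqueue("quiet", "quiet-query-0")

served = []
while (item := queues.dequeue()) is not None:
    served.append(item)
```

Because the scheduler chooses between tenants rather than between queries, the quiet tenant has an even chance of being served on each pick, no matter how deep the noisy tenant's backlog is. The noisy tenant's own queries still run in order, so its extra load mostly delays itself.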

Note, though, that none of the features mentioned are Cortex-specific. “This is just a PromQL caching library process that implements the PromQL API at the front and talks to things that implement the PromQL API at the bottom. So we can make this work for everything,” Wilkie pointed out. “We can make it work for Thanos. We can make it work for Prometheus. We can make it work for anything that’s implementing the PromQL API, which is one of the things I love about the Prometheus project: More and more things seem to implement our API and our query language, which is so cool.”

The Results

So how well does this work?

If we run a smallish, 7-day query against a publicly available Prometheus server, it can take up to ~4s.

If we put the Cortex query frontend in front of it, with an empty cache (and hence just parallelizing the query), that drops to ~1s.

And when we re-run the query such that it hits the cached result, this can drop to ~100ms.

To try it for yourself, check out the demo Wilkie put together below to show how all these components come together to vastly improve your PromQL queries:

What’s Next for Cortex

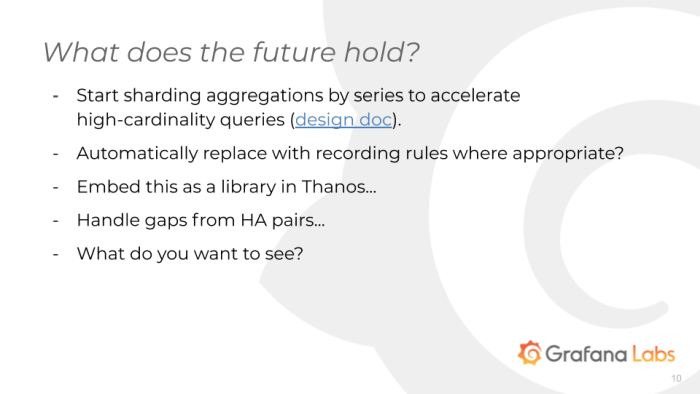

Based on the original design doc for Cortex, here are some of the big ideas for the future of the project.

“Parallelizing the queries by time is super cool and makes it faster, but what I really want to do is parallelize the queries by series as well,” explained Wilkie. “So you can think of time in one dimension and series in the other dimension. I want to split each of those days into a hundred different shards that each consider 1/100 of the series.”
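Since this is a future idea rather than a shipped feature, the following is only a sketch of what sharding by series could look like. It assumes a series is assigned to a shard by hashing its label set; the CRC32 hash and the function names are illustrative choices, not anything the talk specifies.

```python
import zlib


def shard_for(labels, num_shards):
    """Map a series (a dict of label name to value) to a shard index."""
    # Sort the labels so the same series always hashes to the same shard,
    # regardless of the order in which its labels were supplied.
    canonical = ",".join(f"{name}={value}" for name, value in sorted(labels.items()))
    return zlib.crc32(canonical.encode("utf-8")) % num_shards


def work_units(num_days, num_shards):
    """Number of independent sub-queries when splitting by day and by shard."""
    return num_days * num_shards


series = {"__name__": "http_requests_total", "job": "shipping", "instance": "a"}
shard = shard_for(series, 100)
units = work_units(30, 100)  # 3000
```

With 30 days and 100 shards, one large query becomes 3,000 small ones, each touching roughly 1/100 of the series for a single day.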

Ganesh Vernekar, one of the other Grafana Labs developers on the Cortex team, also made it possible for Cortex to automatically identify subqueries within an expression and replace them with the relevant recording rules. That feature has not been merged yet but will be coming.

Also, Wilkie says, “as we develop Cortex and break it, sometimes we have to fall back to asking our Prometheus developers why we broke Cortex so it’d be super useful if this library would handle gaps in HA pairs.”

Finally, the team wants to know: What do you want to see? Is this useful? Is this easy to deploy? Does this make things faster for you? Is it reliable? Do you spot any bugs? Check out more about the Cortex project on GitHub.