Loki’s Path to GA: Query Optimization, Part One

Launched at KubeCon North America last December, Loki is a Prometheus-inspired service that optimizes storage, search, and aggregation while making logs easy to explore natively in Grafana. Loki is designed to work easily both as microservices and as monoliths, and correlates logs and metrics to save users money

Less than a year later, Loki has almost 6,500 stars on GitHub and is now quickly approaching GA. At Grafana Labs, we’ve been working hard on developing key features to make that possible. In the coming weeks, we’ll be highlighting some of these features. This post is the first of a multi-part series on our query optimization efforts.

Foreword

Loki stores log data in something we call “chunks.” Fully compressed, Loki chunks are approximately 2.1 megabytes, and can contain more than 100,000 lines of logs. Chunks are indexed by time and the hash of the Prometheus labels they hold. Fetching, filtering, and processing those chunks without consuming too much resources can be challenging, as queries can involve more than 10,000 chunks (or 1 billion lines).

In this blog we’re going to cover the changes and techniques we’ve applied to improve Loki CPU and memory consumption when loading and processing these chunks of log data. We make heavy use of the pprof tool and go benchmarks in this optimization process. I recommend this post on getting started with pprof written by Julia Evans of Shopify, and Go team member Dave Cheney’s take on how to write good benchmarks in Go. Julia does a great job of explaining how pprof is actually profiling the memory, which is very important (and particular to Go) when you’re trying to understand the behavior of your application.

Working with Go Garbage Collector

Before diving into the optimization we’ve done, it is interesting to know from a high-level perspective how the Go garbage collector works.

From the runtime documentation:

A garbage collection is triggered when the ratio of freshly allocated data to live data remaining after the previous collection reaches this percentage.

This means the GC by default will start its collection process every time memory size has doubled. You can increase the GC pace by lowering the GOGC environment variable or even turn it off using GOGC=off. This can be useful when investigating memory as well as displaying GC traces with GODEBUG=gctraces=1.

The garbage collection will search for unused allocated memory, mark it, then sweep it. The memory is not directly released to the system (this happens very slowly) but is ready to be reused by further allocations.

Now with that in mind, there are two reasons why your application consumes too much memory: A memory leak either by retaining allocated variables or by leaking goroutines; usually the latter is more common. Too many allocations in a small period of time.

In Loki we faced both. Let’s talk about how to find them and how to solve them.

How to Find and Solve

Before searching for improvement you should always set up a go test benchmark. This way you don’t get lost and you have a reference. This also gives you a better feedback loop when attempting to optimize your code. What’s nice about golang benchmark is that it also gives you pprof traces for CPU and memory.

go test ./pkg/storage/ -bench=. -benchmem -memprofile memprofile.out -cpuprofile cpuprofile.outYou can then use those traces easily with go tool:

go tool pprof -http :8080 memprofile.outBenchmark

In Loki we have set up a benchmark that iterates through 2 gigabytes of chunks, checks the heap size every millisecond, and prints it when it has increased.

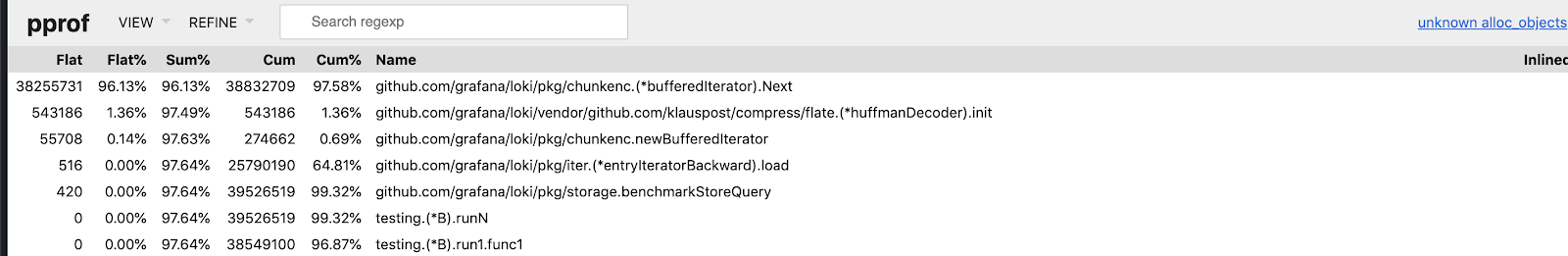

You can quickly find what is allocating memory with pprof alloc_space and alloc_object memory traces, and you want to start reducing those with the highest allocation rate. In Loki we found out that creating a gzip reader and a line buffer for every chunk was allocating a lot of objects.

This image shows an example of the top view for alloc_object (go tool pprof -http :8080 -sample_index=alloc_objects memprofile.out). Our buffered iterator’s Next function was allocating the most.

Object Pools

Thankfully Go has the concept of an object pool, which helps reuse allocated objects without retaining the memory. This is very useful for avoiding fast allocation that could potentially outrun our garbage collection.

For example, if you have a function that allocates 2 kilobytes, and your current heap size is 20 kilobytes, the garbage collection will only trigger once you have called this function 10 times, even if this was within the same goroutine. In reality, at any given time when using that function, we only need 2 kilobytes. Don’t get fooled and think that a pool will guarantee you a 2-kilobyte usage. It will most certainly do less allocations, but since objects in the pool can be garbage collected (weak references) at any time, it might still allocate multiple times.

Pools will also improve your CPU performances, as allocations can be expensive operations.

Adding pooling to our gzip compression helped. However, for our line buffer we had to push a bit more.

Since the line size is variable, we needed to find a pool that would work with a variable size of bytes. This is where we used the Prometheus pool, which is fantastic for this specific use case. It stores slices of bytes by bucket of their size, which is more efficient: If a line is very big – 10,000 bytes – it won’t fit into any bucket, and therefore will be dropped after it’s used. However, if a line is 2,000 bytes, it will fit into the 1000 to 2000 bucket and be reused any time a line fits within that bucket.

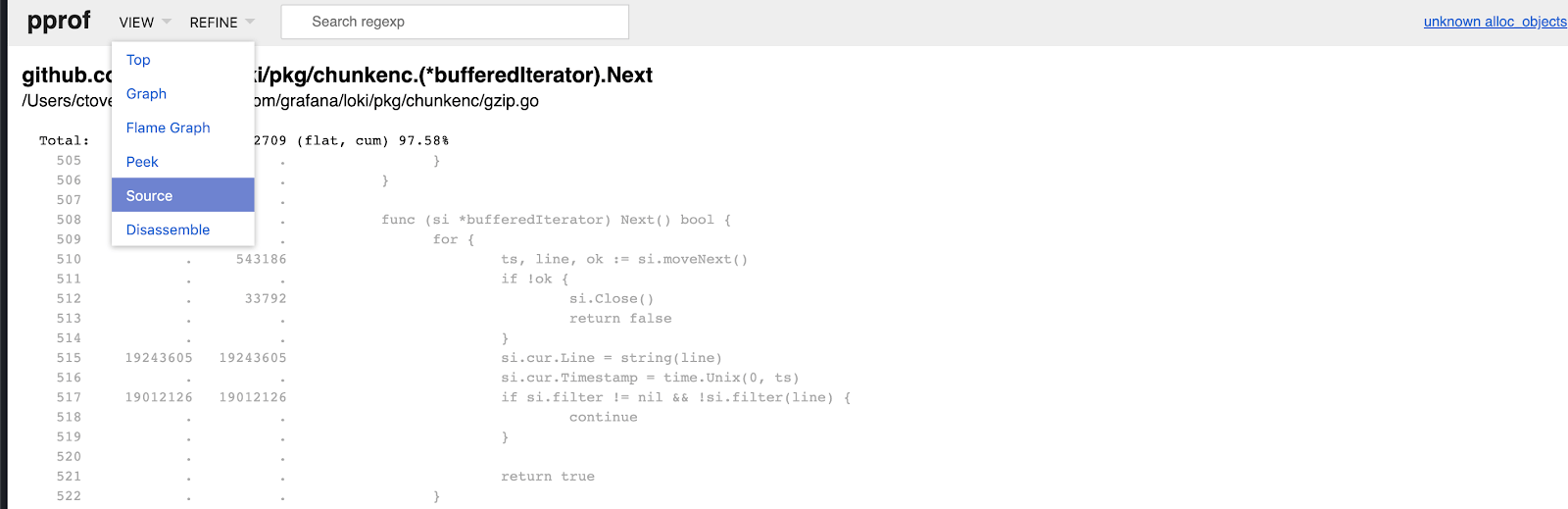

When looking at alloc_object top functions, you can select an item and use the source view to see the details of total allocations within your code.

This is how we realized that when filtering lines via regex using LogQL, a lot of allocations were wasted. The Go regex package is mostly popular when working with strings, but in our case our line buffer is a slice of bytes. Converting that buffer to a string creates a copy that we might ultimately throw away if the regex doesn’t match. Turns out there is another method: accepting slice of bytes, which helped us reduce unnecessary allocations.

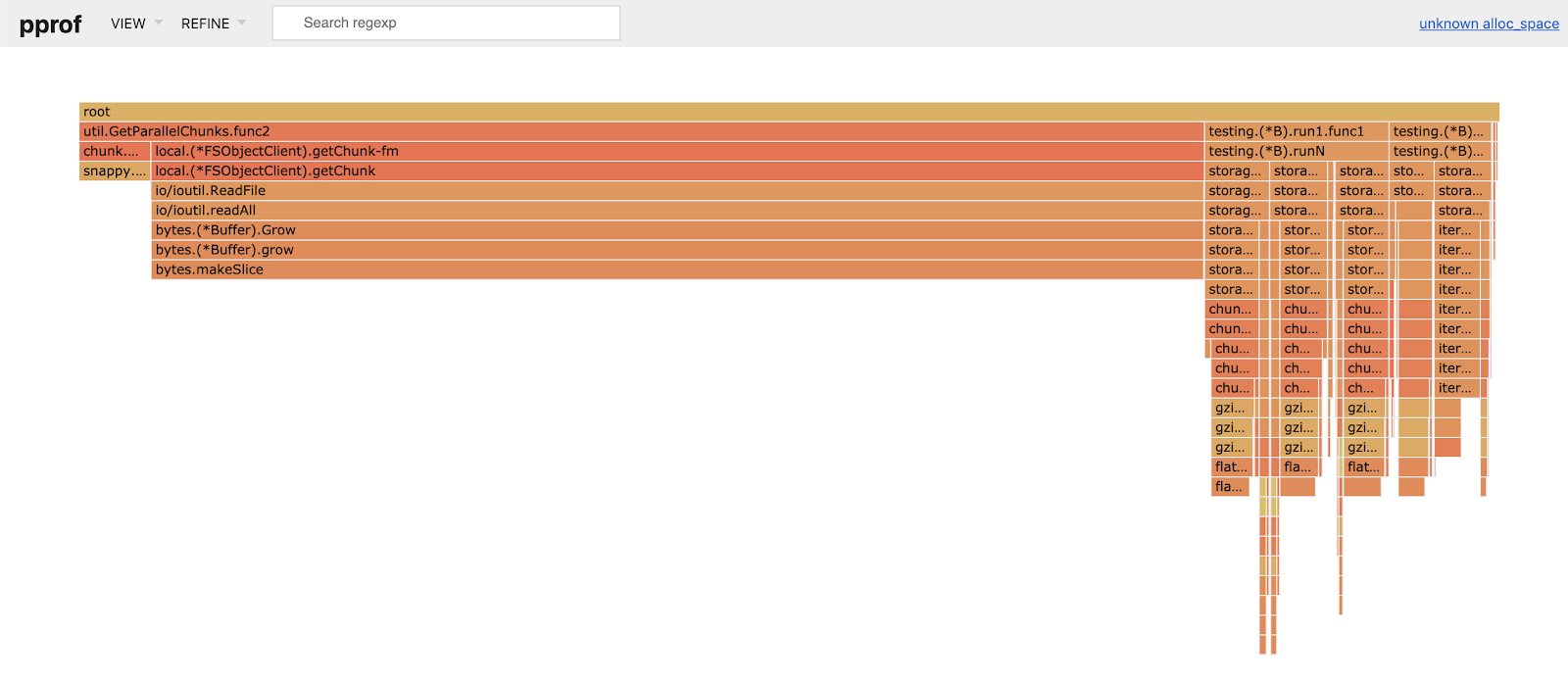

I like to use the flame graph, as it gives you a quick overview. This one is after optimization. We can clearly see that most of the allocated space now comes from fetching chunks.

Reducing the memory traffic (allocations over time) was a first step, but we could still see high usage of memory – which means we were probably leaking memory.

Finding Memory Leaks

While finding goroutine leaks is easy with pprof goroutine profile, it’s a different story to find memory retained by mistakes.

The inuse_object and inuse_space pprof traces show the memory in use by sampling allocation stack traces, as the Go runtime doc explains:

A Profile is a collection of stack traces showing the call sequences that led to instances of a particular event, such as allocation. Packages can create and maintain their own profiles; the most common use is for tracking resources that must be explicitly closed, such as files or network connections.

This means you know what allocated this memory, and that it is still in use, but you don’t know what is using it right now. This is a general shortcoming of memory profile in Go, and it is easy to be mistaken. Remember when looking at the graph view, each node represents memory allocated by the current function and still in use somewhere.

Some additional info from the go pprof docs:

The heap profile reports statistics as of the most recently completed garbage collection; it elides more recent allocation to avoid skewing the profile away from live data and toward garbage. If there has been no garbage collection at all, the heap profile reports all known allocations. This exception helps mainly in programs running without garbage collection enabled, usually for debugging purposes.

This slightly ambiguous definition makes it hard to understand how memory is held over time, as the allocation profiles do not tell you what memory is still in use if that allocation took place outside of the profile sample, and as mentions may ignore recent allocations entirely. Unfortunately there is no silver bullet here. Try to trace back those allocations by reading your code, breaking it, trying new things, repeating…. This is why you need a fast feedback loop when searching for the needle in your haystack (a.k.a. code base).

At last we found out that one of our iterators was retaining processed chunks memory.

All of these optimizations, including our benchmark, can be found inside this PR.

In parts two and three, we’ll address iterators, ingestion retention, label queries, and what’s next.

More about Loki

In other blog posts, we focus on key Loki features, including loki-canary early detection for missing logs, the Docker logging driver plugin and support for systemd, and adding structure to unstructured logs with the pipeline stage. Be sure to check back for more content about Loki.